Mobile Robot Control 2023 HAL-9000: Difference between revisions

Tag: 2017 source edit |

|||

| Line 138: | Line 138: | ||

[[File:Wikireport.gif|center|thumb|450px|Hero navigating the first 3 tables using A*]] | [[File:Wikireport.gif|center|thumb|450px|Hero navigating the first 3 tables using A*]] | ||

In this video Hero, arrives at a table, turns towards it and says 'I arrived at Table'. For the video with sound https://tuenl-my.sharepoint.com/:v:/g/personal/a_h_d_pauw_student_tue_nl/EcRTOid_dJhFuJpP7wPTtF4BfdEl4oyFL_uu3NJc-nJ8Ww?e=aWCaYW&nav=eyJyZWZlcnJhbEluZm8iOnsicmVmZXJyYWxBcHAiOiJTdHJlYW1XZWJBcHAiLCJyZWZlcnJhbFZpZXciOiJTaGFyZURpYWxvZyIsInJlZmVycmFsQXBwUGxhdGZvcm0iOiJXZWIiLCJyZWZlcnJhbE1vZGUiOiJ2aWV3In19 | In this video Hero, arrives at a table, turns towards it and says 'I arrived at Table'. For the video with [https://tuenl-my.sharepoint.com/:v:/g/personal/a_h_d_pauw_student_tue_nl/EcRTOid_dJhFuJpP7wPTtF4BfdEl4oyFL_uu3NJc-nJ8Ww?e=aWCaYW&nav=eyJyZWZlcnJhbEluZm8iOnsicmVmZXJyYWxBcHAiOiJTdHJlYW1XZWJBcHAiLCJyZWZlcnJhbFZpZXciOiJTaGFyZURpYWxvZyIsInJlZmVycmFsQXBwUGxhdGZvcm0iOiJXZWIiLCJyZWZlcnJhbE1vZGUiOiJ2aWV3In19 sound] https://tuenl-my.sharepoint.com/:v:/g/personal/a_h_d_pauw_student_tue_nl/EcRTOid_dJhFuJpP7wPTtF4BfdEl4oyFL_uu3NJc-nJ8Ww?e=aWCaYW&nav=eyJyZWZlcnJhbEluZm8iOnsicmVmZXJyYWxBcHAiOiJTdHJlYW1XZWJBcHAiLCJyZWZlcnJhbFZpZXciOiJTaGFyZURpYWxvZyIsInJlZmVycmFsQXBwUGxhdGZvcm0iOiJXZWIiLCJyZWZlcnJhbE1vZGUiOiJ2aWV3In19 | ||

We obtain the result seen in the image underneath. | We obtain the result seen in the image underneath. | ||

Revision as of 17:41, 5 July 2023

Group members:

| Name | student ID |

|---|---|

| Xingyi Li | 1820443 |

| Alexander De Pauw | 1877267 |

| Timo van Gerwen | 1321854 |

| Yuzhou Nie | 1863428 |

| Ronghui Li | 1707183 |

| Guglielmo Morselli | 1959301 |

Design Presentation:

https://cstwiki.wtb.tue.nl/wiki/File:Midterm_presentation.pdf

Tasks Divide:

Data-flow Diagram& State Diagram:

[[File:|center|thumb|600px]]

Towards Final Challenge

Introduction (Ronghui)

In recent days, robots have become indispensable in various domains, particularly in tasks that involve repetition. Among these applications, one instance is enabling robots to autonomously navigate and serve orders in restaurant, which is encapsulated in the project called Restaurant Challenge. This project evaluates the capabilities of an autonomous robot to navigate and deliver orders within a restaurant setting. The challenge mandates teams to develop sophisticated software and algorithms for robot 'Hero' to let it autonomously traverse the restaurant, avoiding obstacles and humans, while accurately delivering orders to the designated tables.

The wiki page will encompasses the strategies and algorithms employed by the team HAL-9000 to Restaurant Challenge.

First it begins with introducing strategies, which encompasses the development and integration of algorithms and software to facilitate the robot's navigation and order delivery tasks.

Following the strategy description, the report delves into an in-depth explanation of the algorithms that used in this project. The first algorithm discussed is Localization, which is achieved through the particle filter algorithm, and employs a probabilistic approach to estimate the robot's position relative to a predefined map.

Subsequently, the report elucidates the Global Path Planning using the A* algorithm, which utilizes a nodal map and prior knowledge of the environment, including the robot's current position and the location of the next table.

In addition to global path planning, the report explores Local Path Planning, which is essential for real-time obstacle avoidance. The Artificial Potential Field (APF) algorithm is employed for this purpose, which generates attractive and repulsive fields around the target and detected obstacles, respectively. By summing these forces, the robot could avoid objects on path.

After explicating the individual algorithms, the report then explain how these algorithms are integrated to work in unison.

Finally the report culminates with an evaluation of the robot's performance. This evaluation is conducted by comparing the simulation results with the outcomes of the final challenge and also encompasses a discussion of the results, deficiencies, conclusion and recommendations.

Strategy (Timo)

The goal is to deliver the orders to the tables in a fast yet safe manner. This will be done by computing the shortest path to the next table, as provided by the table sequence, while continuously scanning the environment to detect obstacles. If an obstacle is detected, the obstacle avoidance mode will take over and prevent the robot causing crashes. Before making the code rather complicated, only known static obstacles will be considered. This enables the creation of a robust system. As soon as the simplified version of the challenge is working, the complexity can be increased. The order of features to be added is to cope with unknown static obstacles, following up with a feature to handle doors and finally enabling the robot to deal with unknown dynamic obstacles like humans.

By creating a state and data flow diagram, a policy to create the separate blocks as well as for the integration of these blocks is determined. While progressing with the code, these diagrams should be taken into account to keep a clear and logic connection between the different parts of the code.

The state diagram can be seen in the figure on the right. This is the state flow of the system that is desired to be reached by designing the code and integrating the different blocks. This state diagram could be detailed further by including humans. For now it is decided that humans are considered as general unknown obstacles, therefore there is no dedicated state for different behavior if a human is detected.

The data flow diagram can be seen in the figure at the right. It shows which blocks communicate with each other by sending or receiving data.

To complete the final challenge, an efficient and robust code is created to make the robot deliver orders to multiple tables in a restaurant which environment is known beforehand. However, as the world around us is not static nor constant, even in the presence of unknown static or dynamic obstacles the robot should still complete its task. To solve this challenge, an approach that incorporates global path planning, local path planning for obstacle avoidance and localization is proposed.

The first task the robot performs during the challenge is to find out where it is. This is called localization, for which a particle filter algorithm is utilized. Particle filters, or sequential Monte Carlo methods, are a set of Monte Carlo algorithms used to find approximate solutions for filtering problems for nonlinear state-space systems. The algorithm will estimate the robot's position with respect to the restaurant map by resampling particles until convergence to the position of the physical robot is achieved. The more particles used in the algorithm, the more accurate the algorithm can determine the position of the robot. However, the increase in particles will have a substantial effect on the computation time and could result in lag. Therefore, a balance has to be found, with the amount of particles as a tuning knob, between the computation time and the accuracy of the algorithm.

With the robot having determined its location with respect to the map, it will try and find the most optimal global path to reach the first table. The global path planning requires prior knowledge of the robot's environment, being the supplied map, the current position of the robot and the location of the next table. This is done using the A* algorithm, which identifies the path from an initial position to a targeted position in a nodal map with the smallest cost. The cost in this challenge is time or distance, therefore the shortest path will be selected by the A* algorithm.

Unknown objects cannot be taken into account by the A* algorithm. For to robot to handle these objects as well, a local path planning is required. The local path planning relies on laser data obtained by the sensors of the robot scanning the environment. Within their range, these sensors can detect any unknown obstacles present around the robot. As the obstacles are detected, the robot has to navigate around them locally by utilizing the Artificial Potential Field algorithm. APF creates attractive fields around the set target and repulsive fields around detected obstacles. These fields create a attractive and a repulsive force respectively, providing the robot with a collision-free path by taking the sum of these forces.

Algorithm details

The algorithms used make sure the robot can perform its tasks. The three algorithms used are a particle filter for localization, A* for global path planning and APF for local path planning. The reasoning behind these choices as well as the general working of the algorithms will be elaborated on in this section.

Localization - (Yuzhou)

Particle filters are capable of estimating the position and orientation of a robot in environments with noise and uncertainty by combining motion models and sensor observations. This method can more effectively handle the nonlinear condition, as well as provide robust localization results in complex environments. The steps that were carried out to accomplish this method for localization are as following:

1) Initialization

The first step is to construct the weighted particles. As the following figure shows, particles with Gaussian noise and random initial poses are constructed along with their filters. There are 1000 particles being generated and the center of them is the initial position of the robot.

2) Filter prediction

After generated the particle filters, now the prediction of the filter (average pose) should be calculated. The average pose can be obtained by iterating through the particle set while performing accumulation calculations on the position. The green arrow in the figure below shows the visualization of the average pose of particles generated around the initial point of the robot.

3) Propagation of particles

The robot could move around instead of staying still. Thus, updating the positions and orientations of particles based on the robot's motion model is crucial. In this step, each particle undergoes translation and rotation according to the robot's motion information while accounting for the noise and uncertainties in the motion model. The simulation result is shown as the gif below. With the propagation of particles, now the pose prediction (average pose) can be generated even though the robot is moving, getting the primary localization information.

4) Likelihood of particles

Calculating the likelihood of a particle is an important step for particle filter. It is used to determine the weight of each particle, which in turn affects the sampling probability in the subsequent resampling step. By calculating the likelihood of a particle, the particle filter can better estimate the true state of the robot and assign more weight to particles that have a higher match with the observed data.

Generally there're two sub-steps to accomplish this step. First, generate the prediction of the expected measurements (ranges to obstacles) based on laser. Then, compute the likelihood of each measurement relative to this prediction using the parameters set in the config file (incorporating noise).

5)Resampling of particles

Now the particle filter method is almost done. But the resampling of particles is also crucial for the optimization of performance. Multinomial method was chosen as the resampling method. This multinomial resampling method samples particles based on their weights and in a probabilistical way, such that particles with higher weights have a greater probability of being selected into the new particle set, while particles with lower weights have a smaller probability of being selected. This allows the particle set to better reflect the posterior probability distribution, thereby improving the performance of the filter.

6) Performance and analysis

After all the steps above, the particle filter method should be achieved. Thus, now the RVIZ and mrc-teleop can be utilized to simulate the performance of this method for localization. The simulation result is as the below gif shows

Global path planning - A* (Alex)

A* algorithm

The A* algorithm has been chosen as global path planner for this project. A* is a popular and widely-used search algorithm that finds the shortest path between two nodes in a graph. It combines the concepts of both breadth-first search and greedy best-first search to efficiently navigate through the graph. The algorithm maintains two lists: the open list and the closed list. It starts by initializing the open list with the starting node and assigns a heuristic value to each node, estimating the cost from that node to the goal. The algorithm then repeatedly selects the node with the lowest total cost, which is the sum of the cost from the start node and the heuristic value. It expands this selected node by examining its neighbouring nodes, updating their costs and heuristic values accordingly. The process continues until the goal node is reached or there are no more nodes to explore. By carefully considering the estimated cost and choosing the most promising nodes first, A* efficiently finds the optimal path while avoiding unnecessary exploration.

Creating of nodes and connections

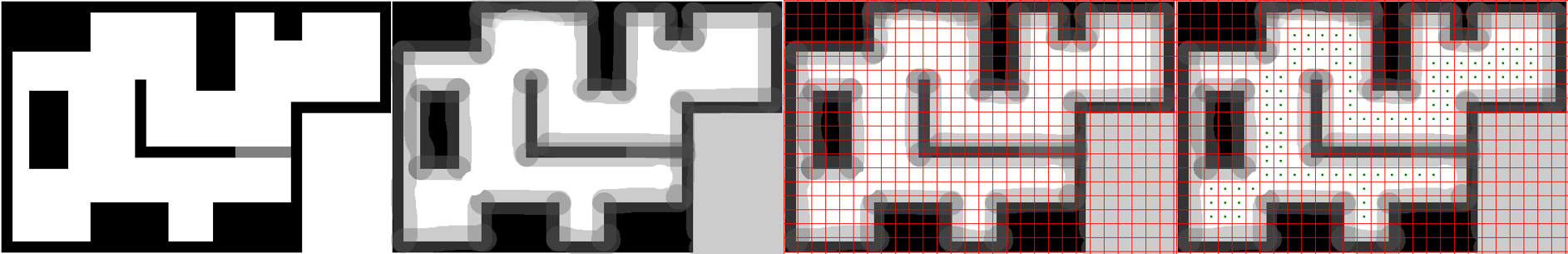

To create the nodes and connections the provided map was taken and divided into equal size squares. The size of these squares can be decreased or increased for an accordingly finer or coarser grid. The current squares seen in the image are 20x20 pixels. A finer grid will represent the map more accurately but also increases the chance of hitting walls while driving with the physical robot as well as increasing computation time and making the robot visit more nodes leading to a less smooth motion. The centre of each square, in which no obstacles are present, are assigned as nodes. From these nodes the connections are found by checking each corner of the squares, in which the nodes are located, and if two squares have at least one corner in common then a connections between the two nodes will be made. Each wall and obstacles in the map were made bigger to account for uncertainty of the environment and size of the robot.

Performance of A*

Simulation

In simulation A* works really well as can be seen in the following videos, in both videos it is assumed the door in the map is closed. The first simulation video is made from a map in which the walls and objects have not been made bigger and hence the robot makes very sharp corners.

And the following video shows the final result of a safer path that was made with the grid map and nodes shown above.

On physical system

On the real robot, as can be seen from the following video:

In this video Hero, arrives at a table, turns towards it and says 'I arrived at Table'. For the video with sound https://tuenl-my.sharepoint.com/:v:/g/personal/a_h_d_pauw_student_tue_nl/EcRTOid_dJhFuJpP7wPTtF4BfdEl4oyFL_uu3NJc-nJ8Ww?e=aWCaYW&nav=eyJyZWZlcnJhbEluZm8iOnsicmVmZXJyYWxBcHAiOiJTdHJlYW1XZWJBcHAiLCJyZWZlcnJhbFZpZXciOiJTaGFyZURpYWxvZyIsInJlZmVycmFsQXBwUGxhdGZvcm0iOiJXZWIiLCJyZWZlcnJhbE1vZGUiOiJ2aWV3In19

We obtain the result seen in the image underneath.

Hero can be seen going from the starting point (first sheet of paper on the right) to table 1 (second sheet) then table 0 (third sheet) and finally stopping at table 2 (last sheet). The reason hero is not in the restaurant maze is because at that point in our testing there was some initialization problem in which hero would first go from the centre of the map to the starting point (first sheet of paper on the right) of the maze ignoring all obstacles. This problem can be seen in the video above and in the following video in simulation:

This problem was later fixed and the robot now takes it's starting point as the start of the maze as can be seen in this simulation video:

Local path planning - Artificial Potential Field (Xingyi & Guglielmo)

The Artificial Potential Field method has been chosen as a local path planning algorithm. The main idea behind it is to model obstacles and the goal as, respectively, repulsive and attractive vector fields. From a potential point of view this implies assigning a low potential to the goal and a large potential to the obstacles. According to these potential fields, the robot is expected to move autonomously towards the lowest potential coordinates.

Basing on the resulting potential of the robot's position, the induced velocities can be computed and sent to the robot as references for the navigation. Practically speaking, the APF was designed as a function that given the current pose of the robot, the goal pose and the laser data, returns the reference velocities needed to move towards the minimum potential position.

The laser data is used to model obstacles. One easy approach could be to just take into account the closest obstacle represented as a point in the space. Its relative position with respect to the robot would be given by the range and the angle of the minimum laser scan. This approach would however not be robust, since a single laser measurement is not robust and thus the obstacle position would be uncertain. To tackle this, every laser measurement below a fixed detection threshold is chosen to represent an obstacle. In this way, every obstacle is modeled as a point cloud, of which every point generates a repulsive velocity reference for the robot. The overall repulsive velocity is then the sum of all these point-generated velocity, considering the orientation of those. Summing up these values allows the algorithm to find the right balance between forces pointing in different directions. It also fights the uncertainty of the laser data compensating for outliers.

Calling the APF function until the goal is reached allows the robot to get to the destination avoiding both static and dynamic obstacles. This is due to the fact that the obstacle set is re-calculated every time the function is called, dealing with errors or moving objects.

The goal is just a point in the map as well, thus the attractive velocity is unique. To address this, all the parameters have been tuned to find the right balance between the attractive and the repulsive action.

The pros of this approach:

- It can deal with all kinds of obstacles;

- It is robust with respect to uncertainties in the measurements;

- It is able to find a smooth path towards the goal.

The cons of this approach:

- It relies on good localization: the attractive force is, in fact, a function of the current pose and the goal pose;

- It is intrinsically affected by local minima issues, leading the robot to a low-potential position which might not be the goal;

- It relies on parameter tuning: all the velocity coefficients and al the thresholds must be found through a trial-and-error process.

Integration (Xingyi & Guglielmo)

To combine each of the block, we first merge the libraries from navigation and localization. Then, we need to develop the right policy between local path planning and global path planning.

The first designed policy is rather simple. The robot follows the global path as long as possible, then if it detects an obstacle, it switches to the obstacle detection mode. When obstacle detection mode is active, the robot gives control to the APF algorithm in order to find a safe path, drive past the obstacle and get back on the global track. The APF, however, needs a goal to compute velocity references. The idea is then to skip a few nodes, setting the goal for APF as the current_global_path_goal + n_skip. The assumption is that obstacles such that chairs or bags (which can be found in a restaurant) are not so large to take up many nodes.

However, after simulating the first policy from gif1, we can see that although the robot switches to the local path planning state at the previous node of the obstacle, a significant portion of the robot's edges pass right through the robot in the local path planning state, which means that the current algorithm is not feasible. Since we were pretty sure our APF function doesn't have any issues, the only issue would from the policy. It took us quite a while to figure out what the policy problem was, but in the end we couldn't quite fix it totally.

Therefore, we began to change the new policy without using APF: When the robot encounters an obstacle, the robot stops at the node Pn in front of the obstacle. The robot will then calculate whether it will pass the next node Pn+1 based on the data returned by lidar, with the path being a straight line from node Pn to node Pn+1. If the obstacle occupies the next node Pn+1, then the robot will calculate whether it will pass node Pn+2, which is a straight line from node Pn to node Pn+2, and so on. In the end, we can see the gif2 that the robot avoided the obstacle and the "local" path is a straight line. However, the biggest disadvantage of this method is that if the obstacle is too large to obscure the robot to fail to detect the node after it, then the robot will stall forever.

Performance (Ronghui)

The performance including two parts: simulation and performance on the final challenge day.

1. Simulation performance

Since localization part has not been finished properly, we only test the rest part with a simple object-avoidance function, and simulation result is as follows:

From the simulation we can see that the robot move smoothly, avoid an unknown object and successfully plan and follow its way to all 6 tables in sequence.

2. Performance on the final challenge day

We have two trials on the final challenge day and we use software version that did not include complete localization algorithm.

- The recording of first trial is presented as follows:

From the recording we could see that Hero first make a fast turn to the left side, plan its way, move straight and collides into the wall with an attempt to turn left.

- The recording of second trial was presented as follows:

From the recording we could see that Hero first turn left to plan its way, then go straight but unfortunately run into an unknown static object.

Deficiencies

// What did not work

// during the challenge it seemed as if the robot did not see the obstacles and rammed into a table

//why didn't localization work?

// Improvement: Localization, policy, door

Conclusion

// Conclusions on project

// The code can run in the simulation, and should have worked in Hero. Unfortunately, it failed.

Recommendations (Timo)

Since code is has not been finished, the next topics that must be improved are quite clear. The first steps to take are to create a robust localization for the robot to know where it is. Simultaneously, it should be made sure the physical robots performs in similar fashion to the robot in simulation regarding the integration between global and local path planning. After that, the localization has to be integrated as well, creating a system that can handle unknown static objects and keep track of its location with respect to the map. This system is expected to handle dynamical objects already to some degree.

As proposed in the strategy, the next step is to include a feature to handle doors. This can be done by making the robot understand the difference between a wall and a door using map semantics. The hard part when adding this feature is to adapt the global path planning to plan the path via the door as an intermediate target, while also being able to switch the path if the door does not open for a certain amount of time after requesting.

The following step of the strategy is expected to be even harder, making to robot deal with dynamic obstacles like humans. First, dynamic obstacles should be detected and recognized. The robot should understand a human is nearby and should therefore take extra safety measures to prevent causing a collision as humans are harder to 'repair' if a collision ought to happen.

The last step to take is intensive and thorough testing to check the performance and robustness of the robot for all kinds of situations that might happen when such a robot is set to work in a restaurant. The importance of these tests is crucial for the safety of the robot and the environment around it.