PRE2020 3 Group6: Difference between revisions



=Problem Statement and Objective=

At the moment, 466 million people suffer from hearing loss. It has been predicted that this number will increase to 900 million by 2050. Hearing loss has, among other things, a social and emotional impact on one's life. The inability to communicate easily with others can cause an array of negative emotions such as loneliness, feelings of isolation, and sometimes also frustration <ref name="Deafness and hearing loss">[https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss] Deafness and Hearing Loss - World Health Organization. (2021) WHO. </ref>. Although there are many different types of speech recognition technologies for live subtitling that can help people who are deaf or hard of hearing, hereafter referred to as DHH, these feelings can still be exacerbated during online meetings. DHH individuals must concentrate on the person talking, the interpretation, and any potential interruptions that can occur <ref name="CollabAll">[https://doi.org/10.1145/3132525.3134800] Peruma, A., & El-Glaly, Y. N. (2017). CollabAll: Inclusive discussion support system for deaf and hearing students. ASSETS 2017 - Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility, 315–316. </ref>. Furthermore, to be able to take part in the discussion, they must be able to react spontaneously in conversation. However, not everyone understands sign language, which makes communicating even more difficult.
Nowadays, especially due to the COVID-19 pandemic, it is becoming more normal to work from home, and therefore the number of online meetings is increasing quickly <ref name="Teams statistics">[https://www.microsoft.com/en-us/microsoft-365/blog/2020/10/28/microsoft-teams-reaches-115-million-dau-plus-a-new-daily-collaboration-minutes-metric-for-microsoft-365/] Microsoft Teams reaches 115 million DAU—plus, a new daily collaboration minutes metric for Microsoft 365 - Microsoft 365 Blog. (2021).</ref>.

This leads us to our objective: to develop software that translates sign language to text to help DHH individuals communicate in an online environment. This system will be a tool that DHH individuals can use to communicate during online meetings. The number of people that have to work or be educated from home has rapidly increased due to the COVID-19 pandemic <ref name="European Commission Telework">[https://ec.europa.eu/jrc/sites/jrcsh/files/jrc120945_policy_brief_-_covid_and_telework_final.pdf] European Commission. (2020). Telework in the EU before and after the COVID-19 : where we were, where we head to. Science for Policy Briefs, 2009, 8 . </ref>. This means that the number of DHH individuals that have to work in online environments also increases. Previous studies have shown that DHH individuals obtain a lower score on an Academic Engagement Form for communication compared to students with no disability <ref name="Academic engagement in students with a hearing loss in distance education">[https://doi.org/10.1093/deafed/enh009] Richardson, J. T. E., Long, G. L., & Foster, S. B. (2004). Academic engagement in students with a hearing loss in distance education. Journal of Deaf Studies and Deaf Education, 9(1), 68–85. </ref>. This finding can be explained by the fact that DHH people are usually unable to understand speech without aid. This aid can be a hearing aid, technology that converts speech to text, or even an interpreter; however, the latter is expensive and not available to most DHH individuals. To talk to or react to other people, DHH individuals can use pen and paper or, in an online environment, type. However, this is a lot slower than speech or sign language, which makes it almost impossible for DHH individuals to keep up with the impromptu nature of discussions or online meetings <ref name="Deaf, Hard of Hearing, and Hearing perspectives on using Automatic Speech Recognition in Conversation">Glasser, A., Kushalnagar, K., & Kushalnagar, R. (2019). Deaf, Hard of Hearing, and Hearing perspectives on using Automatic Speech Recognition in Conversation. ArXiv, 427–432. </ref>. Therefore, by creating software that can convert sign language to text, or even to speech, DHH individuals will be able to actively participate in meetings. To do this, it is important to understand what sign language is and what research has already been done into this subject. These issues will be discussed in this wiki page.

=Sign Language: what is it?=

Sign language is a natural language that is predominantly used by people who are deaf or hard of hearing, but also by hearing people. Of all the children who are born deaf, 9 out of 10 are born to hearing parents. This means that the parents often have to learn sign language alongside the child <ref name="American Sign Language"> [https://doi.org/10.4135/9781483346243.n11]Scarlett, W. G. (2015). American Sign Language. The SAGE Encyclopedia of Classroom Management.</ref>.

Sign language is comparable to spoken language in the sense that it differs per country. American Sign Language (ASL) and British Sign Language (BSL) were developed separately and are therefore distinct languages, meaning that people who use ASL will not necessarily be able to understand BSL <ref name="American Sign Language" />.

Sign language does not express single words; it expresses meanings. For example, the word "right" has two definitions: it could mean correct, or the opposite of left. In spoken English, "right" is used for both meanings. In sign language, there are different signs for the different definitions of the word. A single sign can also mean an entire sentence. By varying the hand orientation and direction, the meaning of the sign, and therefore the sentence, changes <ref name="What is sign language?"> Perlmutter, D. M. (2013). What is Sign Language ? Linguistic Society of America, 6501(202).</ref>.

That being said, all sign languages rely on certain parameters, or a selection of these parameters, to indicate meaning. These parameters are <ref name="Sign language parameters"> [https://doi.org/10.20354/b4414110002] Tatman, R. (2015). The Cross-linguistic Distribution of Sign Language Parameters. Proceedings of the Annual Meeting of the Berkeley Linguistics Society, 41(January). </ref>:

* Handshape: the general shape one's hands and fingers make;

* Location: where the sign is located in space; body and face are used as reference points to indicate location;


In this section, we will highlight how each of the aspects of USE relates to our system.

==User==

The target user group of our software is people who are not able to express themselves with speech. There are various things that could cause this. People who are deaf or hard of hearing often have trouble speaking. There are also people who cannot speak due to physical disabilities. When a person cannot express themselves through speech, they are usually able to express themselves through sign language. Because of this non-verbal manner of expression, the target group is not able to function in a meeting like a person who is able to speak. This holds for both online and offline meetings. A direct solution for this is to hire an interpreter to translate sign language into speech, working as a proxy for the DHH person. However, interpreters can cost anywhere between $18 and $125 an hour <ref name="Price interpreter"> [https://www.universal-translation-services.com/how-much-does-a-sign-language-interpreter-cost/] How Much Does A Sign Language Interpreter Cost | UTS. (n.d.) Retrieved March 25, 2021.</ref>. The average hourly wage in the USA is around $35 <ref name="Hourly wage"> [https://www.bls.gov/news.release/empsit.t19.htm] Table B-3. Average hourly and weekly earnings of all employees on private nonfarm payrolls by industry sector, seasonally adjusted. Retrieved March 25, 2021.</ref>, meaning that DHH individuals who need to pay for interpretation out of pocket may not be able to do so. Furthermore, individuals who need this extra help may be less attractive financially to employers because of the extra costs. The proposed software would eliminate the need for interpreters in these meetings, and therefore all the aforementioned problems linked to this. Online meetings would become more inclusive for individuals who use sign language, as the software would allow those individuals to participate actively.

Furthermore, people who work with or are related to DHH individuals are also members of our target group. Communication between DHH and non-DHH people can be difficult for both parties. Facilitating this communication requires either that both persons involved know some form of sign language or that both use a textual approach. Neither of these options seems realistic in a professional meeting environment. Using only text is extremely slow and inefficient, but it is accessible to everyone, while sign language is much more responsive, but not everyone is able to understand it. Even though our software does not directly affect this side of the target group, it does allow for more inclusivity of DHH people in the professional world, particularly in the current state of working life due to the COVID crisis.

Finally, our product will enable the user to express themselves using not only text but also their facial expressions. This will help the user to function in meetings more easily. In the past 10 years, the number of people working from home, also known as teleworking, has increased slowly, depending on the sector and occupation. However, since the start of the COVID-19 pandemic, it has been estimated that around 40% of working individuals in the EU switched to working from home full-time <ref name="Teleworking EU"> [https://ec.europa.eu/jrc/sites/jrcsh/files/jrc120945_policy_brief_-_covid_and_telework_final.pdf] European Commission. (2020). Telework in the EU before and after the COVID-19 : where we were , where we head to. Science for Policy Briefs, 2009, 8. </ref>. It has also been suggested that this shift in work culture is something that could stay, even after the COVID-19 pandemic.
Therefore, by allowing individuals who rely on sign language to communicate naturally, teleworking will become as easy for them as for anyone else. This could eventually lead to more possibilities in the job market as well.

==Society==

Since the beginning of the COVID-19 pandemic, a lot of people have started to work from home. A study shows that 60% of all UK citizens are working from home at the moment <ref name="WFH 2020"> [https://www.finder.com/uk/working-from-home-statistics]</ref>. The same study has also shown that this is not a temporary situation: 26% of the people currently working at home plan to continue working from home permanently or occasionally after the lockdown. However, working from home does pose challenges for certain groups of people, for example, the DHH community. Society becomes less inclusive if the individuals in this community who rely on sign language to communicate are unable to keep up with certain aspects of online meetings. Today, inclusivity is more important than ever, and society is working on becoming more inclusive for all groups. The government, employers, and educational institutions are affected in the sense that they can live up to any inclusivity policies they have if they implement this software. As mentioned before, communicating online can be difficult for people who use sign language. The inability of those individuals to respond (quickly) decreases inclusivity. This software would increase inclusivity again, which is important to stakeholders such as the government, employers, and educational institutions.

Working from home also has other benefits, such as the absence of a commute, which costs time and money. The decrease in daily commutes also benefits the environment through a decrease in smog, carbon monoxide and other toxins <ref name="environment"> [Marzotto, T., Burnor, V. M., & Bonham, G. S. (1999). The Evolution of Public Policy: Cars and the Environment. Lynne Rienner Pub]</ref>. Everyone should be able to profit from these advantages, which means that everyone should be able to work from home. The proposed technology will give DHH individuals the possibility to take advantage of these benefits. Also, due to the communication barriers that DHH individuals face, some coworkers might prefer not to work together with a DHH coworker. This technology could help combat these negative preconceived notions that coworkers may have.

==Enterprise==

As mentioned previously, an important aspect of the software is the integration into a professional work environment. Since most work-related meetings happen online nowadays, it is only natural that, through the use of technology, opportunities are afforded to everyone, including people who may have more difficulties with certain aspects of a job, in this case DHH individuals in online meetings. An especially common platform for online meetings is Microsoft Teams, which is used in over 500,000 organizations to facilitate meetings<ref name="MSTeams">[https://www.businessofapps.com/data/microsoft-teams-statistics/] David Curry, BussinessofApps.com, Microsoft Teams Revenue and Usage Statistics (2021) </ref>. The Microsoft Teams platform supports integrated apps. The vision we have for our software is to integrate it into Microsoft Teams to allow DHH individuals to take part in discussions more easily.

During an online meeting, it is important to speak clearly, but due to the conversational nature of discussions, speech is faster than simple clear speech. Clear speech has a speaking rate of 100 words per minute (wpm), while conversational speech is 200 wpm. One could therefore assume that, on average, online meetings have a speaking rate of around 150 wpm <ref name="Speech rate"> [https://doi.org/10.7874/jao.2019.00115] Yoo, J., Oh, H., Jeong, S., & Jin, I. K. (2019). Comparison of speech rate and long-term average speech spectrum between Korean clear speech and conversational speech. </ref>. The average typing rate is around 50 words per minute for an untrained typist <ref name="Typing rate"> [https://doi.org/10.1145/3173574.3174220] Dhakal, V., Feit, A. M., Kristensson, O., & Oulasvirta, A. (2018). Observations on Typing from 136 Million Keystrokes. </ref>. By having to rely solely on typing during online meetings, the communication rate decreases by 67%, which is very inefficient. In terms of time, conveying what is said in an hour of (sign-to-)speech communication at 150 wpm would take 3 hours at 50 wpm if the employee had to type. In other words, to communicate the same amount of information, based on the average wage, $105 would be spent on the employee instead of $35. This is a considerable waste of money for employers, meaning that they would profit greatly from this software as well.
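The cost comparison above can be checked with a few lines of Python. This is only a sketch of the arithmetic, using the rates quoted in the paragraph (150 wpm speech, 50 wpm typing, $35 average hourly wage); the function name <code>typing_overhead</code> is hypothetical and chosen for illustration.

```python
def typing_overhead(speech_wpm: float, typing_wpm: float, hourly_wage: float) -> dict:
    """Compare typing against speaking for the same amount of information."""
    # Relative drop in words communicated per minute when typing.
    rate_drop = 1 - typing_wpm / speech_wpm
    # Hours of typing needed to convey one hour of spoken content.
    hours_typed = speech_wpm / typing_wpm
    return {
        "rate_drop": rate_drop,
        "hours_typed_per_spoken_hour": hours_typed,
        "cost_per_spoken_hour": hours_typed * hourly_wage,
    }


# Figures taken from the text: 150 wpm, 50 wpm, $35 per hour.
result = typing_overhead(150, 50, 35)
print(result)
```

Note that a two-thirds drop in rate triples the time required, since time scales with the inverse of the rate.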

=Design concept=

The user will participate in an online meeting using a camera of sufficient quality. The video feed of the user will be extracted by the sign-to-text software. It will be able to detect when it should be extracting frames through the detection of a hand in the frame or through manual activation. The extracted frames will be used to compute the output using a neural network. The neural network will be trained using a dataset of sign language. It will be trained to detect single letters and numbers. The program takes the frames that are input and matches each of them with one of the letters or numbers.

The output of the program is the sequence of letters and numbers the user has signed. The output will be displayed like subtitles would be. The delay of these subtitles will have to be as low as possible, so that the text appears together with the facial expressions that accompany it and these expressions keep their effect.
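As an illustration of the step from per-frame classifications to a signed sequence, the sketch below collapses a list of frame labels into text. It is not the project's implementation: the function <code>frames_to_text</code>, the <code>min_run</code> threshold, and the use of <code>None</code> for frames without a detected hand are all assumptions made for this example.

```python
from itertools import groupby


def frames_to_text(frame_labels, min_run=3):
    """Collapse per-frame labels into the sequence of signed symbols.

    A symbol is emitted once for every run of at least `min_run`
    identical consecutive labels. Frames labelled None (assumed here
    to mean "no hand detected") are skipped, so the same letter can be
    signed twice when the hand leaves the frame in between.
    """
    symbols = []
    for label, run in groupby(frame_labels):
        if label is None:
            continue
        if len(list(run)) >= min_run:
            symbols.append(label)
    return "".join(symbols)


# Hypothetical classifier output for eleven consecutive frames.
labels = ["H"] * 4 + ["A"] + ["I"] * 3 + [None] * 2 + ["I"] * 3
text = frames_to_text(labels)
print(text)
```

The single "A" frame is treated as noise because its run is shorter than <code>min_run</code>. A limitation of this simple rule is that one misclassified frame in the middle of a run splits that run in two, so a real system would likely need additional smoothing.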

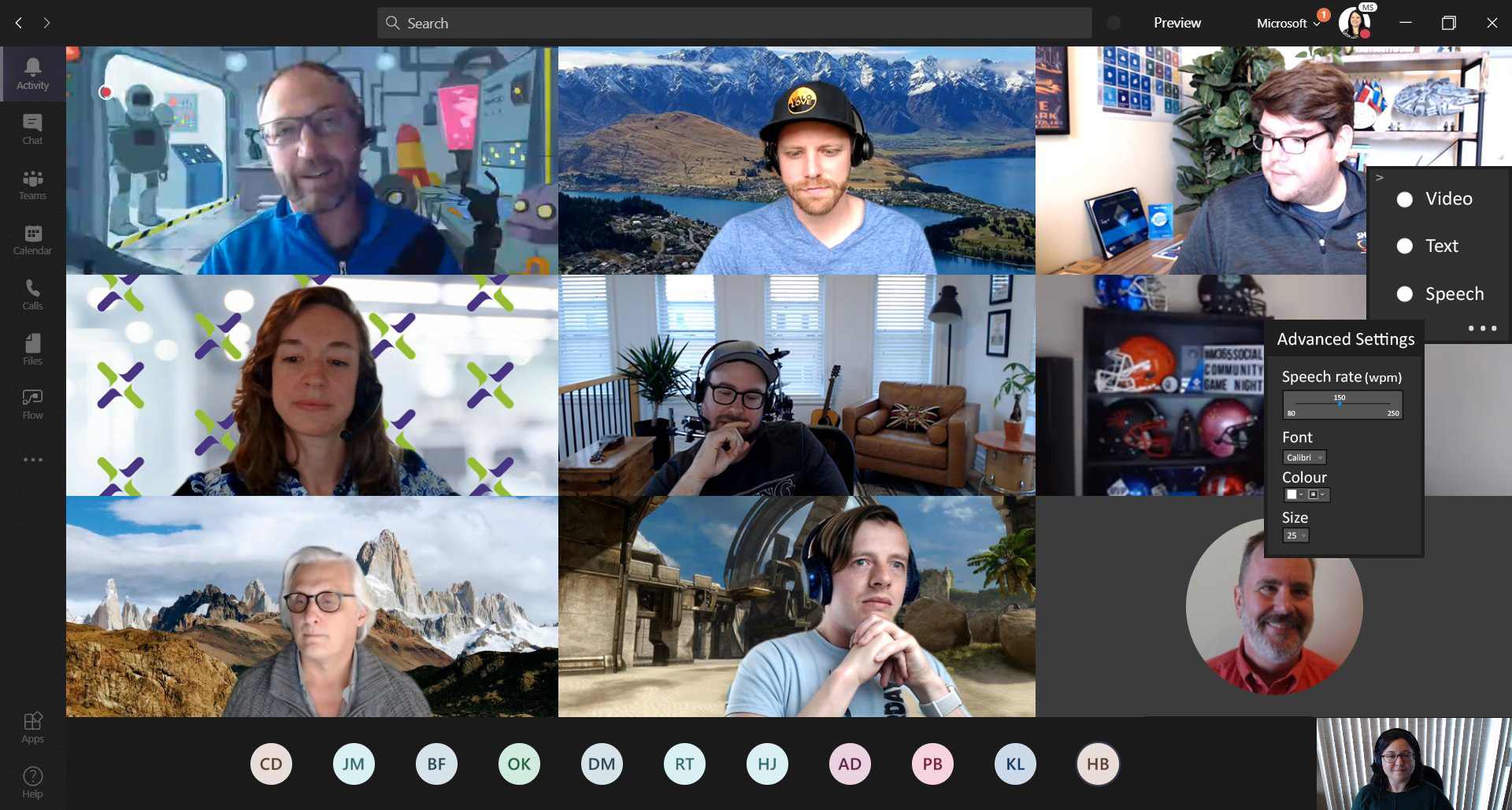

As mentioned before, the software will be integrated into Microsoft Teams. Users will be able to select whether they want speech, video, and/or text using a pop-up menu. By navigating to the advanced settings menu (by clicking on the ellipsis in the bottom right corner of the main pop-up menu), they will be able to adjust the speech rate of the text-to-speech software, the font, font color, and the size of the displayed text.

[[File:0LAUK0 Teams Integration.jpg|1000px|Image: 1000 pixels|center|thumb|An example of how the software would look in a Microsoft Teams meeting.]]

Unfortunately, it appears to be impossible to capture the video feed that is sent to Microsoft Teams meetings.

Luckily, a solution was found for this problem. Using the OpenCV library for Python, a program can be written to display footage captured by a camera or webcam in a window. Furthermore, before displaying a frame of a video, it is possible to add some text to this frame. Extending this from one frame to multiple frames comes down to keeping track of a counter that records for how many more frames the text should remain visible.
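The counter mentioned above can be sketched independently of the camera code. The class below is a hypothetical helper (the name <code>SubtitleOverlay</code> and the <code>hold_frames</code> parameter are assumptions, not part of the project's code) that decides which text, if any, should be drawn on each frame. In an OpenCV display loop, the returned string would be drawn on the frame, for example with <code>cv2.putText</code>, before the frame is shown with <code>cv2.imshow</code>.

```python
class SubtitleOverlay:
    """Keep a piece of text visible for a fixed number of video frames."""

    def __init__(self, hold_frames=30):
        self.hold_frames = hold_frames  # frames each text stays on screen
        self.text = ""
        self.frames_left = 0            # the counter described in the text

    def show(self, text):
        """Start displaying `text` and reset the counter."""
        self.text = text
        self.frames_left = self.hold_frames

    def next_frame_text(self):
        """Return the text to draw on the next frame ("" once expired)."""
        if self.frames_left > 0:
            self.frames_left -= 1
            return self.text
        return ""


# Show "HI" for two frames, then nothing on the third frame.
overlay = SubtitleOverlay(hold_frames=2)
overlay.show("HI")
shown = [overlay.next_frame_text() for _ in range(3)]
print(shown)
```

Keeping this logic separate from the capture and drawing calls makes it possible to test the timing without a webcam attached.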

Sadly, getting the frames in this window to the Microsoft Teams meeting requires the use of a third-party program. This program must support virtual cameras. One program that comes to mind is the widely used OBS, or Open Broadcaster Software. This software allows users to capture the window with the video and text and broadcast it to a virtual camera. Microsoft Teams can then use this virtual camera as its source, instead of the standard webcam. In a final piece of software, the goal is of course to integrate this entire functionality into our own application.

=State of the art=

Luckily, research into sign language has already been done many times before this project. The challenges that previous investigations have run into are well documented and defined, allowing us to identify them early in the project and work around them. Furthermore, due to the large amount of research already done into sign language recognition, it is easier for us to identify what methods to pick for this project.

In this state-of-the-art section, the most up-to-date findings will be briefly discussed. First, the challenges that this project can face are considered. Second, several different approaches that have been taken are reviewed. Finally, based on all this information, the method that has been chosen for this project will be explained.

==Challenges==

The first and most obvious challenge originates from sign language itself. Signs are often not identifiable based on one frame or image. Almost all signs require some sort of motion, which is simply not possible to capture in an image. Therefore, whatever classifier is decided on for this project, it is most likely going to have to be able to identify signs from video, which is significantly harder than recognizing signs from just one image. Classifying signs from video, although much more difficult than classifying from images, is not impossible. An example of a successful sign language recognizer is given by Pu, Junfu, Zhou, Wengang and Li, Houqiang in their 2019 paper “Iterative Alignment Network for Continuous Sign Language Recognition” <ref>Pu, Junfu & Zhou, Wengang & Li, Houqiang. (2019). Iterative Alignment Network for Continuous Sign Language Recognition. 4160-4169. https://doi.org/10.1109/CVPR.2019.00429</ref>. In this paper, a method is proposed to map a sequence of T frames to a sequence of L words or signs. Sadly, this method combines several highly advanced neural network techniques, which are outside of the scope of this course. Furthermore, this method is designed specifically for use with 3D videos, which is something users of this product might not have access to.


Moreover, signs can look very much like each other. Again, the sign for ‘gray’ comes to mind. The signs for both ‘gray’ and ‘whatever’ are very similar (see this link for how ‘whatever’ can be signed: https://www.signingsavvy.com/search/whatever). Both signs have the signer moving their hands back and forth with very little difference in hand gesture. A way around this problem can be found in the facial expression of the signer. As shown by U. von Agris, M. Knorr and K. Kraiss, the facial expressions that are made during signing are key to recognizing the correct sign <ref>U. von Agris, M. Knorr and K. Kraiss, "The significance of facial features for automatic sign language recognition," 2008 8th IEEE International Conference on Automatic Face & Gesture Recognition, Amsterdam, Netherlands, 2008, pp. 1-6, doi: 10.1109/AFGR.2008.4813472.</ref>.
This is also something that can be seen if attention is paid to the face of the signers in the videos for the ‘gray’ and ‘whatever’ signs. During the execution of the sign, it is almost like the signer is saying the word out loud. The only difference, of course, is that no sound is made. However, this is not the only facial feature that can help with classification. As shown in the paper, the facial expression of the signer can change the meaning of a sign. Therefore, to make even better sign language recognition software, the facial expressions should be considered as well.

The fact that signs can look very much alike is not really an issue that is specific to this project. In general, for all neural networks, it will be very hard to classify two images that are very similar. Even when adding another dimension, this problem is not easy to solve for hand gestures, as shown by L. K. Phadtare, R. S. Kushalnagar, and N. D. Cahill <ref>L. K. Phadtare, R. S. Kushalnagar and N. D. Cahill, "Detecting hand-palm orientation and hand shapes for sign language gesture recognition using 3D images," 2012 Western New York Image Processing Workshop, Rochester, NY, USA, 2012, pp. 29-32, doi: 10.1109/WNYIPW.2012.6466652.</ref>. In this paper, an algorithm is proposed to detect hand gestures using the Kinect, a camera that has depth perception. The algorithm is shown to be highly accurate in distinguishing hand gestures that are very different from one another.
However, the authors also show that the smaller the difference between gestures, the less accurate the classification will be.

However, the fact that the face can impact the meaning of signs so much means that the classifier should look at more than just the hands, adding another layer of difficulty to this already hard problem. Luckily, this is an issue that has already been looked at before by K. Imagawa, Shan Lu, and S. Igi <ref>K. Imagawa, Shan Lu and S. Igi, "Color-based hands tracking system for sign language recognition," Proceedings Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 1998, pp. 462-467, doi: 10.1109/AFGR.1998.670991.</ref>. In this paper, methods to distinguish specifically the face and hands in a 2D image are discussed. The idea of the proposed solution is to use a color mapping based on the skin color of the signer to identify hand and face ‘blobs’ in the image. Although the findings in this paper are very interesting, the implementation difficulty and time must be considered.

| Line 113: | Line 118: | ||

==Approaches== | ==Approaches== | ||

To make sign language recognizers several approaches have been taken throughout the years. Some of these approaches have been highlighted by Cheok, M.J., Omar, Z. and Jaward, M.H. in their article reviewing existing hand gesture and sign recognition techniques <ref>Cheok, M.J., Omar, Z. & Jaward, M.H. A review of hand gesture and sign language recognition techniques. Int. J. Mach. Learn. & Cyber. 10, 131–153 (2019). https://doi.org/10.1007/s13042-017-0705-5 </ref>. In this article the several approaches have been split into two major classes: vision and sensor based. | To make sign language recognizers, several approaches have been taken throughout the years. Some of these approaches have been highlighted by Cheok, M.J., Omar, Z. and Jaward, M.H. in their article reviewing existing hand gesture and sign recognition techniques <ref>Cheok, M.J., Omar, Z. & Jaward, M.H. A review of hand gesture and sign language recognition techniques. Int. J. Mach. Learn. & Cyber. 10, 131–153 (2019). https://doi.org/10.1007/s13042-017-0705-5 </ref>. In this article the several approaches have been split into two major classes: vision- and sensor-based. | ||

===Vision based=== | ===Vision-based=== | ||

Vision based sign language recognition, as the name implies, uses videos or images acquired using one or more cameras and possibly extended with extra more sophisticated techniques. | Vision-based sign language recognition, as the name implies, uses videos or images acquired using one or more cameras and possibly extended with extra, more sophisticated techniques. | ||

| Line 123: | Line 128: | ||

Both approaches can be expanded on using markers to more easily identify and even track hands. Examples of this are mentioned in the article by Cheok, M.J., Omar, Z. and Jaward, M.H. as well: signers can be asked to wear two | Both approaches can be expanded on using markers to more easily identify and even track hands. Examples of this are mentioned in the article by Cheok, M.J., Omar, Z. and Jaward, M.H. as well: signers can be asked to wear two differently colored gloves or small wristbands. These colored markers were used in the paper mentioned earlier by K. Imagawa, Shan Lu and S. Igi <ref>K. Imagawa, Shan Lu and S. Igi, "Color-based hands tracking system for sign language recognition," Proceedings Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 1998, pp. 462-467, doi: 10.1109/AFGR.1998.670991.</ref>. Using brightly colored markers makes it easier for the program to recognize a certain object. In this case, using wristbands or gloves allows the program to easily identify hands. | ||

===Sensor based=== | ===Sensor based=== | ||

| Line 131: | Line 136: | ||

==Our solution== | ==Our solution== | ||

The solution used in this project mostly came down to what can be expected from users of the product. The goal of this project is a sign-language-to-text interpreter so deaf people can participate in online meetings more easily. Since users cannot be expected to have expensive 3D cameras or to acquire all kinds of equipment before the classifier works, we chose the solution that is easiest to implement: the product will be convolutional neural network that classifies 2D images as the correct signs. Note that this being the ‘easiest’ solution does not imply that it will work well; in fact, because only a 2D image or video feed is available, much information is missing and the product might end up being poor at recognizing sign language. | The solution used in this project mostly came down to what can be expected from users of the product. The goal of this project is a sign-language-to-text interpreter so deaf people can participate in online meetings more easily. Since users cannot be expected to have expensive 3D cameras or to acquire all kinds of equipment before the classifier works, we chose the solution that is easiest to implement: the product will be a convolutional neural network that classifies 2D images as the correct signs. Note that this being the ‘easiest’ solution does not imply that it will work well; in fact, because only a 2D image or video feed is available, much information is missing and the product might end up being poor at recognizing sign language. | ||

=Technical specifications= | =Technical specifications= | ||

To accurately detect what the user wants to convey from video footage of them using sign language we have decided to look into making classifier models. In this scenario a classifier model should correctly classify certain frames of the video footage to contain the signed language actually within that video footage. | To accurately detect what the user wants to convey from video footage of them using sign language we have decided to look into making classifier models. In this scenario, a classifier model should correctly classify certain frames of the video footage to contain the signed language actually within that video footage. | ||

To make this classifier we have opted to use neural networks. To build a classifier you will need a dataset containing labelled images for the classes which the classifier is supposed to detect. This data is then divided | To make this classifier we have opted to use neural networks. To build a classifier you will need a dataset containing labelled images for the classes which the classifier is supposed to detect. This data is then divided into training, test and validation datasets, where no two sets overlap. The training set is the data on which the models of the neural network are trained, the validation set is used to compare performance between models, and the test set is used to test the accuracy of the eventually generated models of the neural network. | ||
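The three-way split described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the function name `split_indices` and the 70/15/15 proportions are assumptions chosen for the example.

```python
import numpy as np

def split_indices(n_samples, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle sample indices and cut them into three disjoint sets.

    The fractions are illustrative assumptions; the remainder after the
    training and validation cuts becomes the test set.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)  # every index appears exactly once
    n_train = int(n_samples * train_frac)
    n_val = int(n_samples * val_frac)
    train = order[:n_train]
    val = order[n_train:n_train + n_val]
    test = order[n_train + n_val:]
    return train, val, test

train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))
```

Because each index is drawn from a single permutation and the slices do not overlap, no image can end up in two sets.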

For sign language we have found two distinctly different types of sign language which require different approaches in how to make an effective classifier for them. Signs which consist of a single hand position and do not contain movement, such as the sign for the number 1, and signs throughout which the hands appear in different positions and thus naturally contain movement, such as the sign for butterfly. The main difference for the classifiers for these 2 types of signs is that all the information for the first type of signs can be detected from a single frame, while for the second type of signs you will require multiple frames to relay all the relevant information regarding the sign. As such henceforth we will refer to these types of signs as single-frame and multiple-frame signs. | For sign language, we have found two distinctly different types of sign language which require different approaches in how to make an effective classifier for them. Signs which consist of a single hand position and do not contain movement, such as the sign for the number 1, and signs throughout which the hands appear in different positions and thus naturally contain movement, such as the sign for butterfly. The main difference for the classifiers for these 2 types of signs is that all the information for the first type of signs can be detected from a single frame, while for the second type of signs you will require multiple frames to relay all the relevant information regarding the sign. As such henceforth we will refer to these types of signs as single-frame and multiple-frame signs. | ||

Building a classifier for strictly single-frame signs is (considerably) easier as the classifier only needs to look at a single frame. The input of the classifier will consist of a single image file with consistent resolutions. As such there is by default uniformity within the data and there is little need for pre-processing of the data. When inputted into the neural network, the image file will be | Building a classifier for strictly single-frame signs is (considerably) easier as the classifier only needs to look at a single frame. The input of the classifier will consist of a single image file with consistent resolutions. As such, there is by default uniformity within the data and there is little need for pre-processing of the data. When inputted into the neural network, the image file will be read by the computer as a matrix of pixels. For the images that we used for the single-frame signs for classifying alphabet signs, we used images of 200x200 pixels which were grey-scaled. Scaling the images grey means the input for the neural network and its models will consist of a single matrix of 200 by 200 values. If we were to have used coloured images, then an image would consist of 3 layers of 2-dimensional matrices, one for each colour in RGB (Red, Green, Blue). | ||
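The difference between a colour and a grey-scaled input can be made concrete with a short sketch. The luminance weights used here are a common convention and an assumption; the source does not state how the images were grey-scaled.

```python
import numpy as np

def to_grayscale(rgb):
    """Collapse an (H, W, 3) RGB array into a single (H, W) matrix."""
    # Common luminance weights for R, G and B (an assumption for this sketch).
    weights = np.array([0.299, 0.587, 0.114])
    return rgb @ weights

# A random stand-in for a 200x200 colour image.
rng = np.random.default_rng(0)
rgb_image = rng.integers(0, 256, size=(200, 200, 3)).astype(np.float32)
grey_image = to_grayscale(rgb_image)

print(rgb_image.shape)   # (200, 200, 3): three 2-dimensional layers
print(grey_image.shape)  # (200, 200): one matrix
```

The grey-scaled input holds a third of the values of the colour input, which reduces the size of the first layer of the network accordingly.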

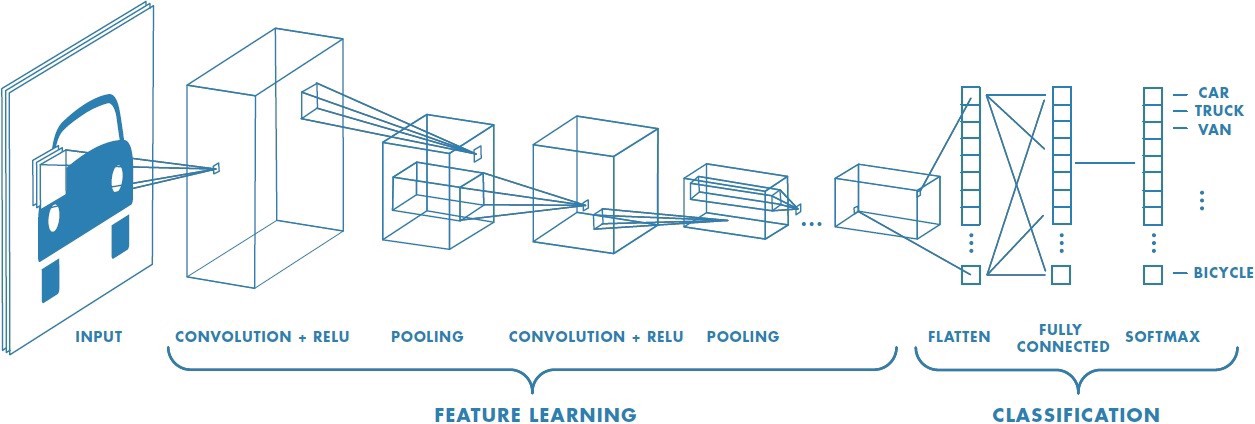

To create the classifier for single-frame signs, we used a neural network with convolution layers and max pooling layers. The convolution layers in the neural network convolute a group of values in the matrix by applying a kernel on the group of values and returning a single value for the next layer of the neural network. The kernel can be for example | To create the classifier for single-frame signs, we used a neural network with convolution layers and max-pooling layers. The convolution layers in the neural network convolute a group of values in the matrix by applying a kernel on the group of values and returning a single value for the next layer of the neural network. The kernel can be for example 3x3 pixels and only count the leftmost pixels and the centre pixel, the resulting value of this kernel would give the summed up values of the counted pixels. The kernel computes for all 3x3 squares of the matrix the output value and creates a new matrix with these new values, the new layer of the neural network (this does not decrease the number of values in the matrix, it would still be 200x200 matrix as the values in the groups are not exclusive). In this way, the convolutional layers are meant to extract high-level features from the images, such as where the edges of images or critical sections of images are. In addition to this, max-pooling layers are used to decrease the size of the matrices. A max-pooling layer runs a kernel over a matrix but with a larger stride (the distance between the placement of the kernel) so fewer values are outputted as fewer groups of pixels are inspected. Max pooling simply returns the largest value in a kernel, which is meant to summarize the information in a group of pixels into a single pixel to smoothen out the layers in the neural network. | ||
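The kernel example and the max-pooling step can be sketched in plain NumPy. This is an illustration under assumptions: zero padding is used so that the output keeps the input size, the pooling window is 2x2 with a stride of 2, and the function names are hypothetical. Like CNN libraries, the sketch slides the kernel without flipping it.

```python
import numpy as np

def convolve_same(image, kernel):
    """Slide the kernel over every pixel; zero padding keeps the output size."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros(image.shape, dtype=float)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            # Weighted sum of the 3x3 neighbourhood centred on (r, c).
            out[r, c] = np.sum(padded[r:r + kh, c:c + kw] * kernel)
    return out

def max_pool(image, size=2, stride=2):
    """Keep only the largest value of each window; a larger stride shrinks the output."""
    rows = (image.shape[0] - size) // stride + 1
    cols = (image.shape[1] - size) // stride + 1
    out = np.zeros((rows, cols), dtype=float)
    for r in range(rows):
        for c in range(cols):
            window = image[r * stride:r * stride + size, c * stride:c * stride + size]
            out[r, c] = window.max()
    return out

# The kernel from the text: count the leftmost pixels and the centre pixel.
kernel = np.array([[1, 0, 0],
                   [1, 1, 0],
                   [1, 0, 0]], dtype=float)

image = np.arange(36, dtype=float).reshape(6, 6)
features = convolve_same(image, kernel)  # same size as the input
pooled = max_pool(features)              # half the size in each dimension
print(features.shape, pooled.shape)
```

In a trained network the kernel values are learned rather than fixed by hand; the fixed kernel here only mirrors the example in the text.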

[[File:Kernel3x3.gif|800px| | [[File:Kernel3x3.gif|800px|Image: 800 pixels|center|thumb|A 3x3 kernel with a stride of 1 computing the convolutional layer of a 5x5 matrix. <ref name="CNN images">[https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53] A Comprehensive Guide to Convolutional Neural Networks - towardsdatascience.com (Accessed February 2021). </ref>]] | ||

After the convolution layers and max pooling layers have been applied to the original matrix representing the inputted image, a flattening layer is applied to turn the matrix into a single vector of values. On this single vector, which now represents all the relevant information from the original image, we apply fully connected layers (also known as dense layers), which predict the correct label for the inputted image. The fully connected layers apply weights to the values in the inputted vector and calculate the predicted probabilities for each class within our classifier. In the first few fully connected layers the ReLU (Rectified Linear Unit) activation function is used, which sets negative values to zero and thereby makes the layers non-linear; the size of each layer's output is determined by its number of units. In the last fully connected layer, which outputs the results for all the classes, the softmax activation function is used to normalize the output to values between 0 and 1 that sum to 1, denoting the probabilities for the inputted image to be each class. | After the convolution layers and max-pooling layers have been applied to the original matrix representing the inputted image, a flattening layer is applied to turn the matrix into a single vector of values. On this single vector, which now represents all the relevant information from the original image, we apply fully connected layers (also known as dense layers), which predict the correct label for the inputted image. The fully connected layers apply weights to the values in the inputted vector and calculate the predicted probabilities for each class within our classifier. In the first few fully connected layers, the ReLU (Rectified Linear Unit) activation function is used, which sets negative values to zero and thereby makes the layers non-linear; the size of each layer's output is determined by its number of units. In the last fully connected layer, which outputs the results for all the classes, the softmax activation function is used to normalize the output to values between 0 and 1 that sum to 1, denoting the probabilities for the inputted image to be each class. | ||

[[File:CNNOverview.jpeg|800px|thumb|center|Conventional CNN structure <ref name="CNN images"/>]] | |||
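The flattening, dense and softmax steps can be sketched in NumPy. Everything concrete here is an assumption for illustration: the 8x8 feature map, the 16 hidden units, the 5 classes and the random weights stand in for values that a real network would learn.

```python
import numpy as np

def relu(x):
    """ReLU: negative values become zero, positive values pass through."""
    return np.maximum(x, 0.0)

def softmax(x):
    """Turn raw class scores into values between 0 and 1 that sum to 1."""
    shifted = x - np.max(x)  # subtracting the maximum avoids overflow in exp
    exp = np.exp(shifted)
    return exp / exp.sum()

rng = np.random.default_rng(0)
feature_map = rng.normal(size=(8, 8))       # stand-in for the conv/pool output
vector = feature_map.flatten()              # flattening layer: 64 values

w_hidden = rng.normal(size=(64, 16)) * 0.1  # hypothetical dense layer, 16 units
w_out = rng.normal(size=(16, 5)) * 0.1      # hypothetical output layer, 5 classes

hidden = relu(vector @ w_hidden)
probabilities = softmax(hidden @ w_out)

print(probabilities.shape)       # (5,)
print(int(probabilities.argmax()))  # index of the predicted class
```

The class with the highest probability is taken as the prediction; with random weights the result is of course meaningless.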

=Realization= | =Realization= | ||

| Line 156: | Line 163: | ||

<ref name="Kaggle asl 02">[https://www.kaggle.com/kuzivakwashe/significant-asl-sign-language-alphabet-dataset] Significant ASL Alphabet dataset - Kaggle (Accessed March 2021). </ref>. Both of the datasets which we used for this contained around 3000 images per class (27-29 classes, alphabet + space/delete/empty). | <ref name="Kaggle asl 02">[https://www.kaggle.com/kuzivakwashe/significant-asl-sign-language-alphabet-dataset] Significant ASL Alphabet dataset - Kaggle (Accessed March 2021). </ref>. Both of the datasets which we used for this contained around 3000 images per class (27-29 classes, alphabet + space/delete/empty). | ||

For the multi-frame database we made use of the Isolated Gesture Recognition (ICPR '16) dataset <ref name="Chalearn ICPR">[http://chalearnlap.cvc.uab.es/dataset/21/description/] Isolated Gesture Recognition dataset (ICPR '16) - Chalearn Looking at People(Accessed March 2021). </ref>. This dataset comes from Chalearn LAP (Looking at People) organisation, and contains video-files of people performing a sign. The dataset contains 249 classes | For the multi-frame database we made use of the Isolated Gesture Recognition (ICPR '16) dataset <ref name="Chalearn ICPR">[http://chalearnlap.cvc.uab.es/dataset/21/description/] Isolated Gesture Recognition dataset (ICPR '16) - Chalearn Looking at People(Accessed March 2021). </ref>. This dataset comes from Chalearn LAP (Looking at People) organisation, and contains video-files of people performing a sign. The dataset contains 249 classes (sign gestures), which are each performed by 21 different individuals. In total the dataset contains 47933 video files. | ||

==Classifiers== | ==Classifiers== | ||

'''Single-frame Iteration 1''' | '''Single-frame Iteration 1''' | ||

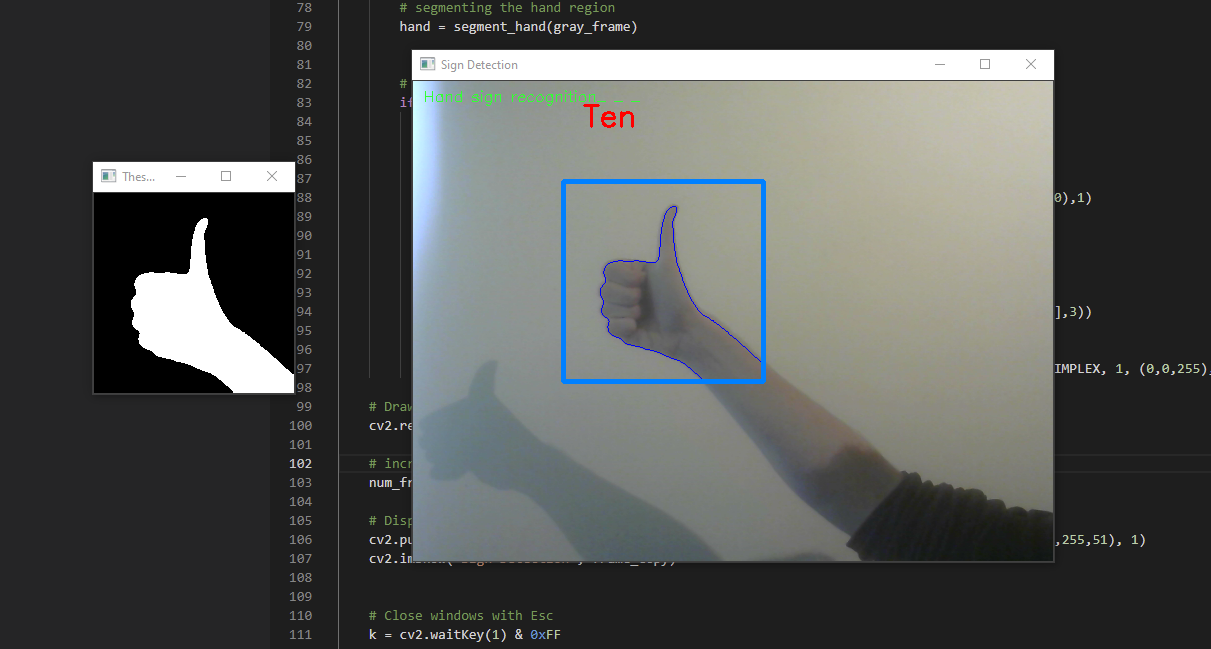

The first iteration of a classifier | The first iteration of a classifier that we made was trained on a data set containing only single-framed signs. This was easier to start out with, as single frame signs require far less pre-processing and are far easier to incorporate into a video stream from a webcam. For the first iteration, we started with the numbers 1 to 10 as these signs are relatively easy to distinguish from each other and only require you to single out the handshape of a single hand. For this first iteration, we made the used data ourselves and did not yet use a (large) tested dataset. To make this data we used a clear background and calculated the average pixel values of the captured image of the background, then when a hand moves into the picture we simply have to look at which pixel saw a significant change in value to find the contours of the hand. This allowed us to capture frames containing the hand contours easily, which contains the most relevant data in the picture. We used a webcam to record our hands in the positions of the 10 numbers for a few seconds while moving our hands slightly to create some variance, until we had captured about 700 frames (a few seconds of footage). We then trained a CNN on this data to create a classifier model. Having made a classifier model, we started looking into connecting this to a video stream from our webcam to test the effectiveness of our model when applied in real life. Since our model takes in only black and white pictures containing the contours of the hand, this also means that the frames captured from the video stream of a webcam first need to be turned into such frames. As such in the demo which we made for this classifier, you first need to calculate the background of the environment of the user. As such it is also crucial that this background (and the lighting within it) is constant, as you do not want to recalculate the background of the image before every time you sign. 
As can be seen in the images of the classification of the sign for the number 8 and for the number 10, the hand contours are detected by the model and black-and-white hand shapes are extracted. When these contour images are then run through the neural network, a predicted result can then be displayed in red letters on the image view. | ||

[[File:sign-08.png|800px|thumb|center|Number 8 sign classification]] | |||

[[File:sign-10.png|800px|thumb|center|Number 10 sign classification]] | |||
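The background-averaging step used to extract the hand contours in this iteration can be sketched as below. This is a simplified illustration on synthetic data rather than the project's code: the threshold of 25 grey levels, the frame size and the function names are assumptions.

```python
import numpy as np

def average_background(frames):
    """Average several frames of the empty scene into one background image."""
    return np.mean(np.stack(frames), axis=0)

def hand_mask(frame, background, threshold=25.0):
    """Mark pixels that differ significantly from the background as white."""
    diff = np.abs(frame.astype(float) - background)
    return (diff > threshold).astype(np.uint8) * 255

rng = np.random.default_rng(0)
# Synthetic grey background with a little sensor noise (30 captured frames).
background_frames = [100 + rng.normal(0, 2, size=(120, 160)) for _ in range(30)]
background = average_background(background_frames)

# A new frame in which a bright "hand" has moved into view.
frame = background_frames[0].copy()
frame[40:80, 60:100] = 200
mask = hand_mask(frame, background)

print(mask[60, 80], mask[0, 0])  # hand pixel vs. background pixel
```

The sketch also shows why this setup is fragile: any change in lighting or any movement behind the signer changes pixel values just as a hand does, and ends up in the mask.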

The downside of this implementation is that it requires the user to have a very specific setup for their computer and work environment: the background cannot be too cluttered or obstructive, nor can people walk or move in the background without confusing the model. Furthermore, it is important that the light comes from a good angle so as not to cast shadows on the background, which the model takes in as significant changes. We found these issues when testing the models which we created: when the lighting conditions changed a bit, or when the camera angle and the light did not match up perfectly, the models classified poorly. We also found that it was hard to set up the lights and the camera in a room in such a way that the model worked accurately, and as such the reliability of the models created in this iteration was unsatisfactory. Therefore we found that a classifier constructed in this way is too restrictive in its setup to yield models useful for a wider array of users, as well as too unreliable to use in a non-isolated desk setup. | |||

'''Single-frame Iteration 2''' | '''Single-frame Iteration 2''' | ||

Therefore in the second classifier which we constructed we did not pre-process our data by calculating the background of images and calculating the threshold values to create an image solely containing the hand contours. For our second classifier model we opted to just use frames containing hands in single framed signs (this time we looked at signs for the Roman alphabetical letters) and used larger (pretested) databases. The databases which we initially used | Therefore in the second classifier which we constructed, we did not pre-process our data by calculating the background of images and calculating the threshold values to create an image solely containing the hand contours. For our second classifier model, we opted to just use frames containing hands in single-frame signs (this time we looked at signs for the letters of the Roman alphabet) and used larger (pretested) databases. The databases<ref name="KaggleDB">[https://www.kaggle.com/grassknoted/asl-alphabet] Nagaraj, K. (2018) Image data set for alphabets in the American Sign Language </ref> which we initially used contain about 3000 image files per class. We once again trained a CNN on these. The first models which we made for this data did not give satisfying results, as they did not classify with high accuracy when tested on new data. We assume this was a result of overfitting, as the image files on which the model was trained were all of the same person's hands and with the same background. Therefore we searched for a more diversified dataset to run the neural network on. After having found a more varied dataset<ref name="Kaggle asl 02"/> (in this case a dataset with hands from multiple different people with multiple different backgrounds), we ran the CNN on this data to form new models. Unfortunately, the new models did not yield satisfying accuracy rates either and still misclassified test images most of the time. 
One of the factors which may have prevented the CNN from creating models with high accuracy is that not all the images were of the same resolution and thus had to be reshaped to acquire uniformity. This means some pictures were inputted flattened or widened and may have prevented the CNN from finding useful patterns to indicate which class a picture belonged to. | ||

'''Multi-frame Iteration 1''' | '''Multi-frame Iteration 1''' | ||

While we were working on our second single-frame classifier we also started to look at creating a classifier for multiple frame signs. For the multiple-frame classifier we have used the Isolated Gesture Recognition (ICPR '16) dataset <ref name="Chalearn ICPR" />. This dataset contains a large degree of variance, as the recorded signs have been performed by many different people. This helps when making a classifier as it reduces the chance of producing an overfitted classifier model. To work with this dataset we | While we were working on our second single-frame classifier we also started to look at creating a classifier for multiple-frame signs. For the multiple-frame classifier we have used the Isolated Gesture Recognition (ICPR '16) dataset <ref name="Chalearn ICPR" />. This dataset contains a large degree of variance, as the recorded signs have been performed by many different people. This helps when making a classifier as it reduces the chance of producing an overfitted classifier model. To work with this dataset we used and adapted code from F. Schorr<ref name="FrederikSchorr"> [https://github.com/FrederikSchorr/sign-language] Schorr, F. (2018) Sign Language Recognition for Deaf People. </ref>, and have pre-processed the data by turning the video files which are in the dataset into frames and optical flow frames. The video files were all turned into 40-frame selections, to adhere to the uniformity which is required for a neural network input (since every input should contain an equal number of values). To calculate the optical flow, the code uses the OpenCV <ref name="opencv"> [https://opencv-python-tutroals.readthedocs.io/en/latest/index.html#] OpenCV – Python library </ref> library for Python. 
With the OpenCV library, which is also used to load in the image data, the optical flow is calculated with the “Dual TV L1” algorithm <ref name="tvl1a"> [http://www.ipol.im/pub/art/2013/26/article_lr.pdf] Javier Sánchez Pérez, Enric Meinhardt-Llopis, and Gabriele Facciolo, TV-L1 Optical Flow Estimation, Image Processing On Line, 3 (2013), pp. 137–150. https://doi.org/10.5201/ipol.2013.26</ref> <ref name="tvl1b"> [https://link.springer.com/chapter/10.1007/978-3-540-74936-3_22] Zach C., Pock T., Bischof H. (2007) A Duality Based Approach for Realtime TV-L1 Optical Flow. In: Hamprecht F.A., Schnörr C., Jähne B. (eds) Pattern Recognition. DAGM 2007. Lecture Notes in Computer Science, vol 4713. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-74936-3_22 </ref>. | ||
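Bringing every video to a fixed length of 40 frames can be sketched as follows. This shows one plausible approach (evenly spaced frame indices) and is not necessarily how the adapted code selects its frames; the function names are hypothetical.

```python
import numpy as np

def sample_frame_indices(n_frames, target=40):
    """Pick `target` evenly spaced frame indices from a clip of n_frames.

    Longer clips are thinned out; shorter clips get some frames repeated.
    """
    return np.round(np.linspace(0, n_frames - 1, target)).astype(int)

def normalise_clip(frames, target=40):
    """Return a clip with exactly `target` frames."""
    return frames[sample_frame_indices(len(frames), target)]

long_clip = np.zeros((95, 200, 200))   # stand-in for a 95-frame video
short_clip = np.zeros((25, 200, 200))  # stand-in for a 25-frame video

print(normalise_clip(long_clip).shape)   # (40, 200, 200)
print(normalise_clip(short_clip).shape)  # (40, 200, 200)
```

After this step every video yields an array of the same shape, which is what the network input requires; the optical flow is then computed between consecutive selected frames.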

With this algorithm the optical flow images are computed, which can then be used for training the neural network. The multi-frame classifier is trained on optical flow images rather than raw image data, as optical flow data can highlight the relevant information in the image which we want the classifier to look at. The optical flow data, which contains the movement of the objects in the images, can highlight and extract the features which are most important to classify the signing in the videos. The optical flow data will contain the hand shapes and hand/arm movements while removing most of the other ‘noise’ in the images, namely the background and static objects in the frames. In this way it results in images which are significantly more uniform in comparison to raw image data. This makes it far easier for the CNN to form relations based on the relevant data and reduces the chances of the CNN getting confused by the noise in the background of the images. This is further supported by studies indicating the success rate of using optical flow data for sign language classification | |||

<ref name="optical01">[https://www.sciencedirect.com/science/article/pii/S1047320316301468] Kian Ming Lim, Alan W.C. Tan, Shing Chiang Tan, Block-based histogram of optical flow for isolated sign language recognition, Journal of Visual Communication and Image Representation, Volume 40, Part B, 2016, Pages 538-545, ISSN 1047-3203, https://doi.org/10.1016/j.jvcir.2016.07.020. </ref> | |||

<ref name="optical02">[https://ieeexplore.ieee.org/abstract/document/7544860] P. V. V. Kishore, M. V. D. Prasad, D. A. Kumar and A. S. C. S. Sastry, "Optical Flow Hand Tracking and Active Contour Hand Shape Features for Continuous Sign Language Recognition with Artificial Neural Networks," 2016 IEEE 6th International Conference on Advanced Computing (IACC), Bhimavaram, India, 2016, pp. 346-351, doi: 10.1109/IACC.2016.71.</ref> | |||

Using optical flow data rather than raw image data does, however, come with a larger computational cost when using a model created with it, as computing optical flow is rather expensive. Since the neural network is trained on sets of optical flow images, the produced classifier also requires optical flow images as input. This means that when the model is used live, the optical flow must also be computed live, which introduces a delay that depends on the computational power of the user's device. We have tested the model on a desktop with a dedicated graphics card (Nvidia GeForce GTX 1070 Ti); with these specifications, computing the optical flow of 40 frames of 200 by 200 pixels takes at most 2-3 seconds. Thus the use of a classifier that takes optical flow images as input can limit the accessibility of the model for users, especially those with devices with limited computational power. As such the model’s speed and thus usefulness may vary. | |||

To create this model a (partly) pre-trained Inflated 3d Inception architecture neural network<ref name="Inflated 3d Inception architecture"> [https://arxiv.org/abs/1705.07750] Carreira, J., Zisserman, A. (2018) Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. </ref> is used, which is also trained on optical flow data. A Keras implementation of this model was used<ref name="keras"> [https://github.com/dlpbc/keras-kinetics-i3d] Keras implementation of inflated 3d from Quo Vardis paper + weights. </ref>. | |||

As such we | We first made a multiple-frame classifier for all the classes (249 signs) in the chalearn dataset<ref name="Chalearn ICPR" />, though this proved to be computationally very expensive as running a single epoch could take upwards of 2 hours. The model that we produced with it was only run on 3 epochs and merely reached an accuracy of around 56%. The sources that we had looked at also indicated that making a classifier for all the classes of this dataset is complex, as there is such a large number of classes to test. Since the dataset also contains signs in multiple different languages, this may also add to the difficulty of making an accurate classifier for the dataset as a whole. | ||

As such, we ran the neural network with a subset of the (Chalearn) dataset. We only used 31 classes which consisted of 2 coherent sets of signs within the dataset. These 2 sets contain SWAT hand signals and Canadian aviation ground circulation signs. On this smaller set, we ran 20 epochs, as it was not as computationally expensive. This yielded a model with around 80% accuracy. | |||

==Model Usage== | ==Model Usage== | ||

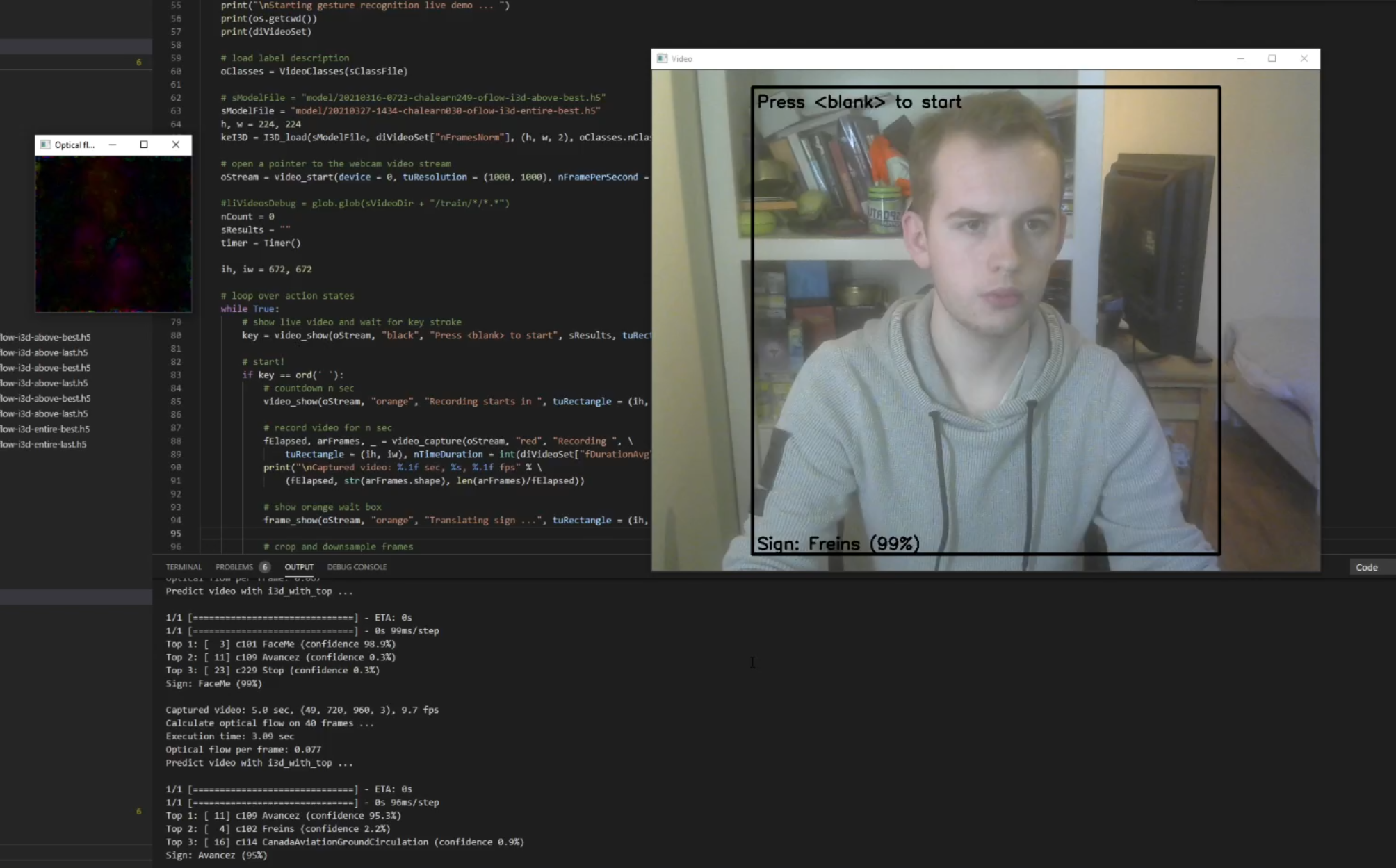

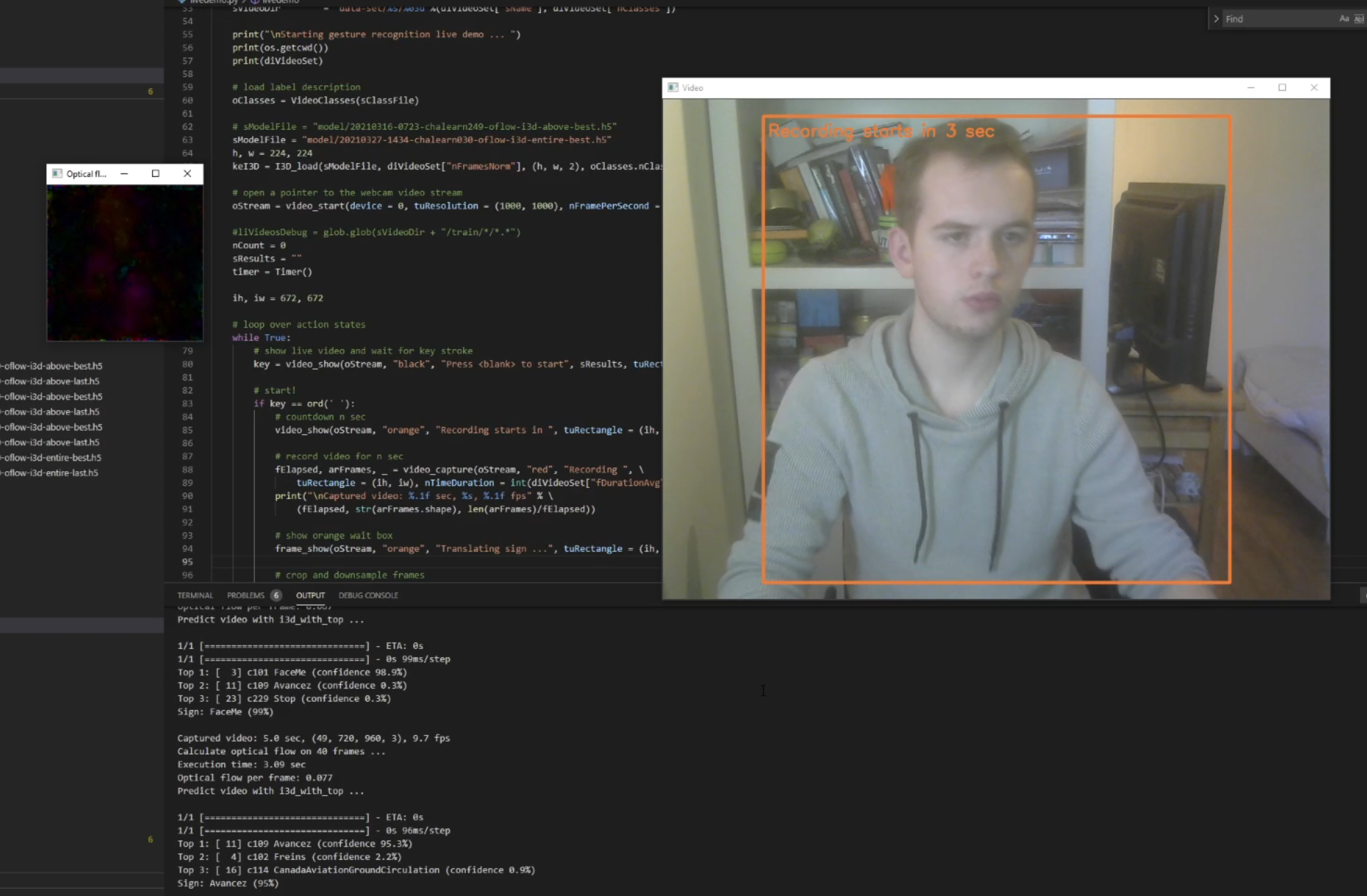

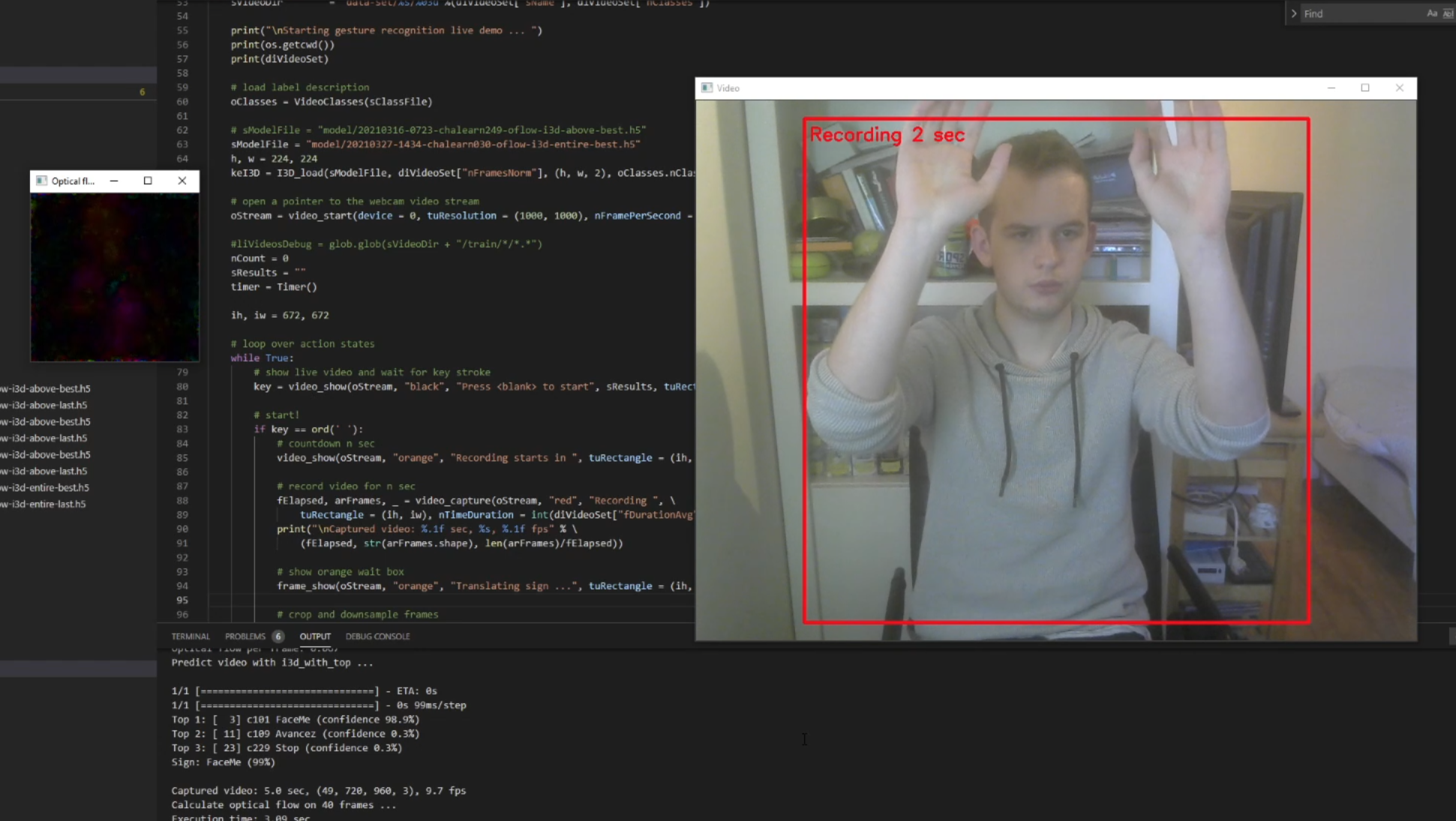

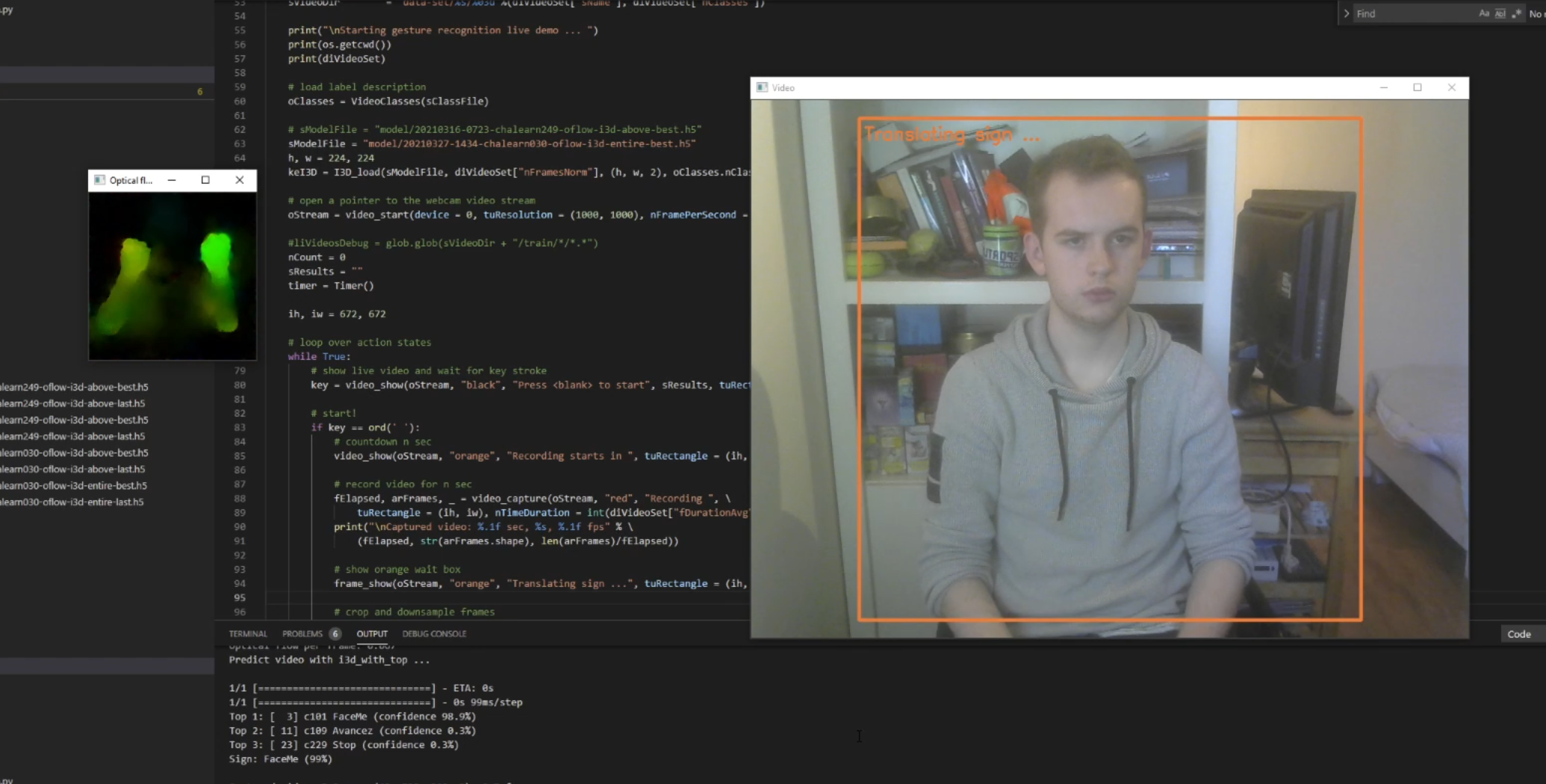

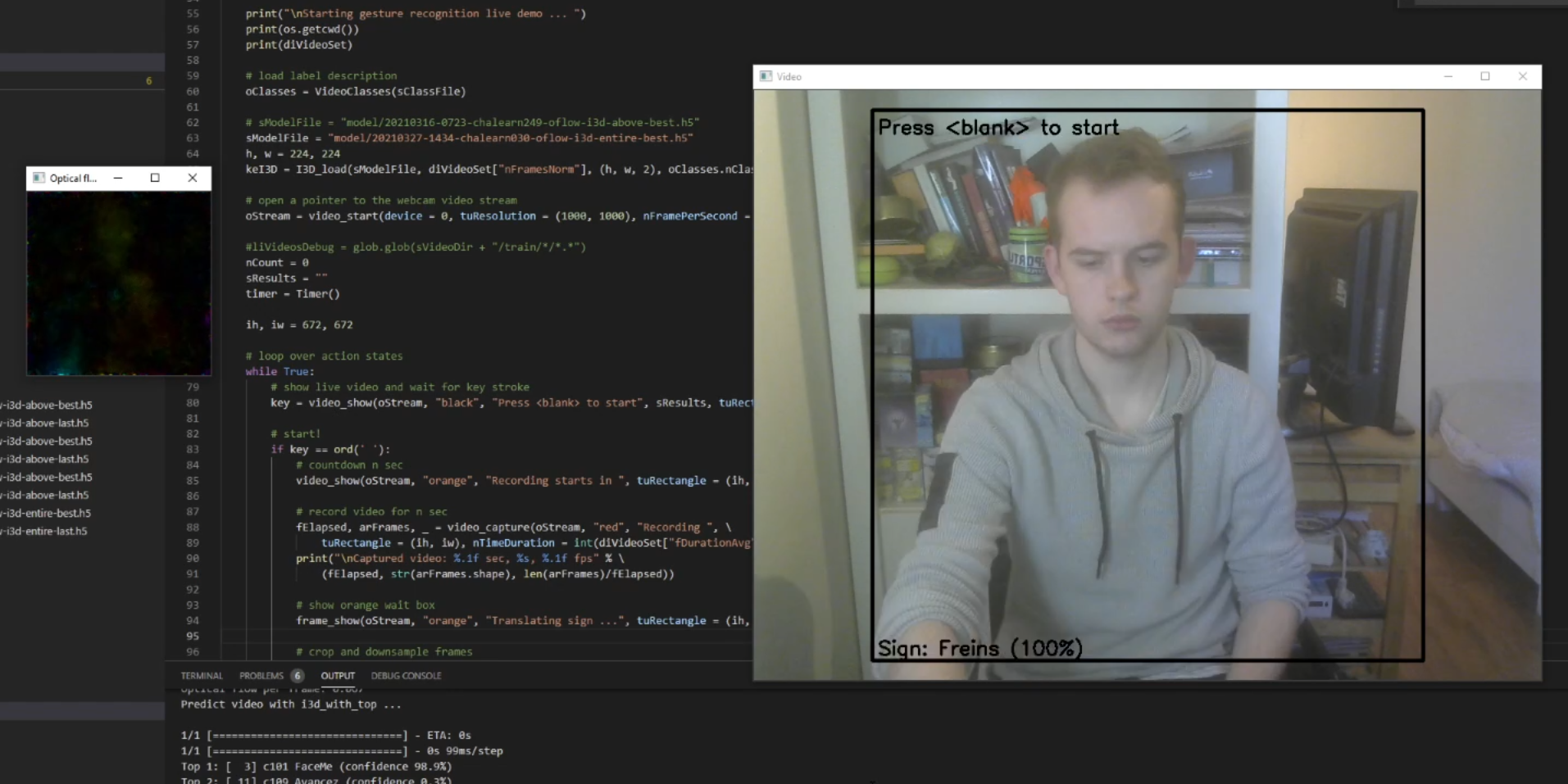

After the classifying models were made, we made | After the classifying models were made, we made a demo program in Python that connects them to a webcam video stream so the models can be used to classify live footage. In the pictures, you can see the demo program capture footage of the sign Freins (a Canadian aviation-related sign) and return an output to the user. In the first image you can see the default state of the program: nothing is being computed and, at the top of the screen, there is an indication that the user should press the spacebar to start. The default state is also indicated by black text and bars as visual cues. In picture 2 you can see that after the spacebar has been pressed, the program enters a waiting stage that lasts 3 seconds before the sign recording starts, which is indicated by the orange coloring of the text and bars. In picture 3 you can see the user being recorded while performing a sign; this recording always lasts 5 seconds. The recording state is indicated by the red text and bars. After the recording is finished the program enters a translating state, where the video footage is resampled to 40 frames and the optical flow is computed, as can be seen to the right of the camera view. Afterward (still in the translating state) the optical flow frames are run through the model to get a prediction of the sign. This state is once again indicated in orange. After the program is done translating the sign, it returns to the default state, where the predicted sign and its prediction rate are shown in the bottom left of the camera view. This can be seen in picture 5. | ||

[[File:Frein01.png|800px|thumb|center|Frein Classification 1]] | [[File:Frein01.png|800px|thumb|center|Frein Classification step 1]] | ||

[[File:Frein02.png|800px|thumb|center|Frein Classification 2]] | [[File:Frein02.png|800px|thumb|center|Frein Classification step 2]] | ||

[[File:Frein03.png|800px|thumb|center|Frein Classification 3]] | [[File:Frein03.png|800px|thumb|center|Frein Classification step 3]] | ||

[[File:Frein04.png|800px|thumb|center|Frein Classification 4]] | [[File:Frein04.png|800px|thumb|center|Frein Classification step 4]] | ||

[[File:Frein05.png|800px|thumb|center|Frein Classification 5]] | [[File:Frein05.png|800px|thumb|center|Frein Classification step 5]] | ||
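The sequence of states that the demo program walks through can be summarised as a small state machine. This is a sketch of the behaviour described above, not the demo's actual code; the names `State`, `STATE_COLOURS` and `next_state` are hypothetical.

```python
from enum import Enum

class State(Enum):
    DEFAULT = "default"
    WAITING = "waiting"
    RECORDING = "recording"
    TRANSLATING = "translating"

# Colour of the text and bars shown in each state, as described in the text.
STATE_COLOURS = {
    State.DEFAULT: "black",
    State.WAITING: "orange",
    State.RECORDING: "red",
    State.TRANSLATING: "orange",
}

WAIT_SECONDS = 3.0    # countdown before the recording starts
RECORD_SECONDS = 5.0  # fixed length of a recording

def next_state(state, seconds_in_state, space_pressed=False, translation_done=False):
    """Return the state the program should be in after the current update."""
    if state is State.DEFAULT and space_pressed:
        return State.WAITING
    if state is State.WAITING and seconds_in_state >= WAIT_SECONDS:
        return State.RECORDING
    if state is State.RECORDING and seconds_in_state >= RECORD_SECONDS:
        return State.TRANSLATING
    if state is State.TRANSLATING and translation_done:
        return State.DEFAULT
    return state

state = next_state(State.DEFAULT, 0.0, space_pressed=True)
print(state, STATE_COLOURS[state])
```

Only the waiting and recording states have a fixed duration; how long the translating state lasts depends on how quickly the device computes the optical flow and the prediction.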

=Testing= | =Testing= | ||

| Line 193: | Line 210: | ||

'''Scope''' | '''Scope''' | ||

The first scenario to be tested will be the 'out of context' scenario. In this situation we will test the software by making sure everything is set up correctly for a person to make some signs. There will be no other speech or discussions during this scenario. This scenario will be used to validate if the software will actually function as expected. There will be as few as possible variables considered. | The first scenario to be tested will be the 'out of context' scenario. In this situation, we will test the software by making sure everything is set up correctly for a person to make some signs. There will be no other speech or discussions during this scenario. This scenario will be used to validate if the software will actually function as expected. There will be as few as possible variables considered. | ||

The second scenario is | The second scenario is real-world testing. In this scenario the group members will start a discussion, then one of the members will express themselves through sign language. This scenario is to test whether the software correctly realises when it will need to translate, but also to test if there is not too much delay to keep the conversation alive. | ||

| Line 202: | Line 219: | ||

We are not going to cover the actual implementation in MS Teams. We are also not going to cover multiple-frame signs. We will also not include subjects who are actually unable to speak. | We are not going to cover the actual implementation in MS Teams. We are also not going to cover multiple-frame signs. We will also not include subjects who are actually unable to speak. | ||

'''Schedule''' | '''Schedule''' | ||

Preparation: The subject has to have the program installed. The subject's camera needs to be of sufficient quality, with sufficient lighting. The subject has to know the signs of the letters of | Preparation: The subject has to have the program installed. The subject's camera needs to be of sufficient quality, with sufficient lighting. The subject has to know the signs of the letters of their name and age. | ||

Test 1: The person responsible for the camera starts a recording of the screen. The subject will also start a recording. Both recordings need to include a clock showing the accurate time. The software is prepared to translate and, if necessary, the subject will indicate the start of each sign to the software. The subject will sign their name and age. The output of the program will be logged with timestamps. | |||

Test 2: The person responsible for the camera starts a recording of the screen. The subject will also start a recording. Both recordings need to include a clock showing the accurate time. An observer will ask the subject what their name and age are. The subject will respond in sign language. The output of the program will be logged with timestamps. | |||

==Testing== | |||

The software as we developed it does not have the functionality needed for this test to be a viable way of measuring its performance. The test focuses on usability with respect to the delay of the subtitles. Since the software is not able to chain multiple signs together, it cannot function in an actual conversation. Therefore, performing this test is not important at this moment. | |||

=Week 1= | =Week 1= | ||

{| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="4" | {| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="4" | ||

| Line 257: | Line 255: | ||

=Week 2= | =Week 2= | ||

{| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="4" | {| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="4" | ||

| Line 327: | Line 324: | ||

| Sven Bierenbroodspot || 1334859 || 6.5h || Video for different display possibilities (5h), Flowchart global design specs (1h 30m) | | Sven Bierenbroodspot || 1334859 || 6.5h || Video for different display possibilities (5h), Flowchart global design specs (1h 30m) | ||

|- | |- | ||

| Sterre van der Horst || 1227255 || || | | Sterre van der Horst || 1227255 || 6h || meeting with supervisor (1h), rewriting the questionnaire (2h), researching potential groups to place the questionnaire in (1h), contacting groups (30m), researching USE (1.5h) | ||

|- | |- | ||

| Pieter Michels || 1307789 || 10h || meeting with supervisor (1h), worked on the preprocessor for the classifier (5h), making sure preprocessor works (1h), working on state of the art section - which then got deleted for some unknown reason so I have to do it again :))))) (3h) | | Pieter Michels || 1307789 || 10h || meeting with supervisor (1h), worked on the preprocessor for the classifier (5h), making sure preprocessor works (1h), working on state of the art section - which then got deleted for some unknown reason so I have to do it again :))))) (3h) | ||

| Line 347: | Line 344: | ||

| Sven Bierenbroodspot || 1334859 || 8h || Meeting with supervisor (1h), Design concept writing (2h), User writing (2h), Test plan writing (3h) | | Sven Bierenbroodspot || 1334859 || 8h || Meeting with supervisor (1h), Design concept writing (2h), User writing (2h), Test plan writing (3h) | ||

|- | |- | ||

| Sterre van der Horst || 1227255 || || | | Sterre van der Horst || 1227255 || 3.5h || Meeting with supervisor (1h), editing questionnaire again (1h), researching DHH communities and finding other groups (1h), answering messages from said groups rejecting us (.5h) | ||

|- | |- | ||

| Pieter Michels || 1307789 || 10h || Meeting with supervisor (1h), rewriting/checking the state-of-the-art section (5h), investigating how to integrate classifier with Teams (4h) | | Pieter Michels || 1307789 || 10h || Meeting with supervisor (1h), rewriting/checking the state-of-the-art section (5h), investigating how to integrate classifier with Teams (4h) | ||

| Line 365: | Line 362: | ||

!style="text-align:left;"| Description | !style="text-align:left;"| Description | ||

|- | |- | ||

| Sven Bierenbroodspot || 1334859 || || | | Sven Bierenbroodspot || 1334859 || 4.5h || Meeting with supervisor (1h), Meeting feedback user side (30m), Improving user wiki page (3h) | ||

|- | |- | ||

| Sterre van der Horst || 1227255 || || | | Sterre van der Horst || 1227255 || 6.5h || Meeting with supervisor (1h), preparing for meeting with Raymond Cuijpers (1h), meeting (.5h), more literature research for statistics wiki page and integrating other feedback into the USE side of the wiki page (3h), finding layout for powerpoint and adding USE part to powerpoint (1h) | ||

|- | |- | ||

| Pieter Michels || 1307789 || | | Pieter Michels || 1307789 || 5h || Meeting with supervisor (1h), Updating design concept and checking state of the art section (4h) | ||

|- | |- | ||

| Pim Rietjens || 1321617 || 8.5h || Meeting with supervisor (1h), Meeting feedback user side (.5h), Analysing/preprocessing larger single-frame dataset (1h), Running single-frame model (2.5h), Making subset multi-frame dataset (1h), Running multi-frame model (2h), testing multi-frame model (.5h) | | Pim Rietjens || 1321617 || 8.5h || Meeting with supervisor (1h), Meeting feedback user side (.5h), Analysing/preprocessing larger single-frame dataset (1h), Running single-frame model (2.5h), Making subset multi-frame dataset (1h), Running multi-frame model (2h), testing multi-frame model (.5h) | ||

|- | |- | ||

| Ruben Wolters || 1342355 || | | Ruben Wolters || 1342355 || 1.5h || Meeting with supervisor (1h), Meeting feedback user side (.5h) | ||

|- | |- | ||

|} | |} | ||

| Line 385: | Line 382: | ||

!style="text-align:left;"| Description | !style="text-align:left;"| Description | ||

|- | |- | ||

| Sven Bierenbroodspot || 1334859 || || | | Sven Bierenbroodspot || 1334859 || 11h || Final meeting with supervisor (1h), meeting with group to discuss presentation plans (1h), making commercial (2h), preparing presentation (2h), Final presentations (3h), Adding and proofreading wiki (2h) | ||

|- | |- | ||

| Sterre van der Horst || 1227255 || || | | Sterre van der Horst || 1227255 || 7h || Final meeting with supervisor (1h), meeting with group to discuss presentation plans (1h), final presentations (3h), proofreading and editing wiki (2h) | ||

|- | |- | ||

| Pieter Michels || 1307789 || | | Pieter Michels || 1307789 || 8h || Final meeting with supervisor (1h), meeting with group to discuss presentation plans (1h), final presentations (3h), proofreading and finalizing wiki (3h) | ||

|- | |- | ||

| Pim Rietjens || 1321617 || | | Pim Rietjens || 1321617 || 11.5h || Final meeting with supervisor (1h), meeting with group to discuss presentation plans (1h), preparing demo for presentation (.5h), Final presentation (3h), Adding/proofreading wiki text (6h) | ||

|- | |- | ||

| Ruben Wolters || 1342355 || | | Ruben Wolters || 1342355 || 9h || Final meeting with supervisor (1h), meeting with group to discuss presentation plans (1h), preparing presentation (2h), Final presentations (3h), Adding and proofreading wiki (2h) | ||

|- | |- | ||

|} | |} | ||

Latest revision as of 08:35, 8 April 2021

Sign to text software

Group Members

| Name | Student ID | Department | Email address |

|---|---|---|---|

| Ruben Wolters | 1342355 | Computer Science | r.wolters@student.tue.nl |

| Pim Rietjens | 1321617 | Computer Science | p.g.e.rietjens@student.tue.nl |

| Pieter Michels | 1307789 | Computer Science | p.michels@student.tue.nl |

| Sterre van der Horst | 1227255 | Psychology and Technology | s.a.m.v.d.horst1@student.tue.nl |

| Sven Bierenbroodspot | 1334859 | Automotive Technology | s.a.k.bierenbroodspot@student.tue.nl |

Problem Statement and Objective

At the moment, 466 million people suffer from hearing loss. It has been predicted that this number will increase to 900 million by 2050. Hearing loss has, among other things, a social and emotional impact on one's life. The inability to communicate easily with others can cause an array of negative emotions such as loneliness, feelings of isolation, and sometimes also frustration [1]. Although there are many different types of speech recognition technologies for live subtitling that can help people who are deaf or hard of hearing, hereafter referred to as DHH, these feelings can still be exacerbated during online meetings. DHH individuals must concentrate on the person talking, the interpretation, and any potential interruptions that can occur [2]. Furthermore, to be able to take part in the discussion, they must be able to spontaneously react in conversation. However, not everyone understands sign language, which makes communicating even more difficult. Nowadays, especially due to the COVID-19 pandemic, it is becoming more normal to work from home and therefore the number of online meetings is increasing quickly [3].

This leads us to our objective: to develop software that translates sign language to text to help DHH individuals communicate in an online environment. This system will be a tool that DHH individuals can use to communicate during online meetings. The number of people who have to work or be educated from home has rapidly increased due to the COVID-19 pandemic [4]. This means that the number of DHH individuals who have to work in online environments has also increased. Previous studies have shown that DHH individuals obtain a lower score on an Academic Engagement Form for communication compared to students with no disability [5]. This finding can be explained by the fact that DHH people are usually unable to understand speech without aid. This aid can be a hearing aid, technology that converts speech to text, or even an interpreter; however, the latter is expensive and not available to most DHH individuals. To talk to or react to other people, DHH individuals can use pen and paper or, in an online environment, typing. However, this is a lot slower than speech or sign language, which makes it almost impossible for DHH individuals to keep up with the impromptu nature of discussions or online meetings [6]. Therefore, by creating software that can convert sign language to text, or even to speech, DHH individuals will be able to actively participate in meetings. To do this, it is important to understand what sign language is and what research has already been done on this subject. These issues will be discussed in this wiki page.

Sign Language: what is it?

Sign language is a natural language that is predominantly used by people who are deaf or hard of hearing, but also by hearing people. Of all the children who are born deaf, 9 out of 10 are born to hearing parents. This means that the parents often have to learn sign language alongside the child [7].

Sign language is comparable to spoken language in the sense that it differs per country. American Sign Language (ASL) and British Sign Language (BSL) were developed separately and are therefore distinct languages, meaning that people who use ASL will not necessarily be able to understand BSL [7].

Sign language does not express single words; it expresses meanings. For example, the word "right" has two definitions. It could mean correct, or the opposite of left. In spoken English, "right" is used for both meanings. In sign language, there are different signs for the different definitions of the word. A single sign can also convey an entire sentence. By varying the hand orientation and direction, the meaning of the sign, and therefore of the sentence, changes [8].

That being said, all sign languages rely on certain parameters, or a selection of these parameters, to indicate meaning. These parameters are [9]:

- Handshape: the general shape one's hands and fingers make;

- Location: where the sign is located in space; body and face are used as reference points to indicate location;

- Movement: how the hands move;

- Number of hands: this naturally refers to how many hands are used for the sign, and it also refers to the ‘relationship of the hands to each other’;

- Palm orientation: this is how the forearm and wrist rotate when signing;

- Non-manuals: this refers to the face and body. Facial expressions can be used for different meanings or lexical distinctions. They can also be used to indicate mood, topics, and aspects.

According to the study by Tatman, the first three parameters are universal in all sign languages. However, using facial expressions for lexical distinctions is not done in most sign languages. The use of parameters also depends on the cultural and cognitive context and on the feasibility of those parameters [9].

USE

In this section, we will highlight how each of the aspects of USE relates to our system.

User