PRE2020 3 Group12



*Add capitalisation to section names, name the tables, make sure subheadings and headings are consistent

*Conclusion of the research part

*Change headers: level 2 to level 3 and level 3 to level 5

*Rewrite enterprise structure and update implementation details

Everyone:

*Include sources! Use the citation style Jeroen sent in the chat or refer to the appendices


*Add Future Improvements part to Enterprise, based on the feedback from the interview

*Add a short summary of the structure of the wiki

*Add Future Improvements to the "Future Enterprise Research"

Marco, Bart & Robert:

*Work on the presentation according to how the work is divided in the Presentation section


= '''Introduction''' =

Throughout human history, humans have seized every opportunity they could find to automate tasks and make their lives easier. This began as early as the classical period. A famous example is the Roman aqueducts: large, bridge-like waterways that carried water from outlying areas to Rome, driven by gravity and the natural water cycle. Today, advances in computational science, AI, and robotics have opened many new opportunities for automation. Think of production robots that perform the same programmed task over and over again, or deep learning networks that classify images (often better than humans do). More recently, there are self-driving cars, which combine robotics and AI!

Slowly but surely, robots can take over repetitive, narrowly specified jobs and do them more efficiently than their human counterparts. Currently, however, such jobs are very concrete. For robots to become more versatile, they simply need to become more like us. Robots should be adaptive, able to learn from their mistakes, and able to handle anomalies efficiently. Essentially, a robot should have a level of decision-making and freedom equivalent to that of a human being.

For example, consider a robot specifically tasked with picking up a football from the ground. It should be able to work out how to get the ball back when the ball is accidentally thrown into a tree. This situation raises another issue as well: the robot needs a certain flexibility of movement. It should not only be able to move around and pick up a ball, but also be able to use tools that help it fulfill its "out of the ordinary" task.

All in all, one thing becomes very clear: these tasks cannot be hard-coded; they have to be learned.

It stands to reason that building a robot with all the capabilities specified above, a robot suitable for a social environment, requires a humanoid design. This raises many challenges, the most notable of which fall into the areas of Electrical/Mechanical Engineering and Computer/Data Science.

A human has many joints and muscles for various movements, expressions, and goals. The human face in particular is incredibly complex: it consists of 43 muscles, using about 10 of them to smile and about 30 to laugh. All these joints and muscles have to work together perfectly, since the state of every joint influences the entire structure of joints and muscles. A major aspect of this coordination is our sense of balance, that is, how the robot prevents itself from falling over. Boston Dynamics has recently attempted this with its 'Dancing Robots.' At every iteration, the robot has to check the state of its balance and decide which joints and muscles need to be given a task for the next iteration.

Additionally, there is the Computer Science challenge of mapping such an unpredictable environment to binary code that the robot can interpret; several paper summaries on this topic are included below. Every signal from its surroundings has to be carefully processed and categorized. The robot has to learn, which leads into AI and, more specifically, Reinforcement Learning. Reinforcement Learning currently only offers solutions to very basic learning problems in which the rules are very concretely specified. It struggles when the environment is too broad in terms of data. This is why it is still a very experimental field. Many robot studies include abstract definitions of states and policies for environment data that strongly imply the use of Reinforcement Learning in theory, but they lack a practical implementation.
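The sketch below uses tabular Q-learning, one standard Reinforcement Learning algorithm, to make the idea of learning from states, actions and rewards (instead of hard-coding a behavior) more concrete. The setting is a toy assumption of ours and is not taken from any of the cited studies: a robot in a corridor of five cells must learn to walk to a ball in the last cell. The names `N_STATES`, `step`, `q_learning` and `greedy_policy` are hypothetical, and so are the reward values and learning parameters; all of them were chosen only for illustration.

```python
import random

N_STATES = 5        # corridor cells 0..4; the ball lies in the last cell (toy assumption)
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # move one cell left or right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)  # walls at both ends
    if next_state == GOAL:
        return next_state, 1.0, True                 # reached the ball
    return next_state, -0.01, False                  # small cost per move favours short paths


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                         # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate towards reward + discounted value of the next state
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Best learned action in every non-goal cell."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

Here the state is a single integer and there are only two actions. A humanoid robot instead has continuous, high-dimensional states, which is one reason tabular methods like this do not transfer directly to it.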

As a consequence, social/humanoid robots are still a widely researched topic. The research has, for example, great potential in care facilities and other forms of care for the elderly and people with disabilities. It could even help in the fight against loneliness and depression. Many papers cover how the robot could be taught certain skills with respect to its environment (Reinforcement Learning) and how it would communicate with humans. However, an often overlooked question is whether such a robot would be accepted by humans at all.

Many studies have found that a robot in the shape of a cute animal has a positive impact on the people around it. Such a robot is easier to model than a humanoid robot, because humans expect less from it and the mimicked behavior is often very repetitive and standard. People like engaging with the robot, as some see it as a kind of pet, and it successfully attracts the attention of its audience. But, as we have established, such a robot would be very limited in the tasks it could fulfill. Modeling a humanoid robot is also not as easy as modeling the behavior of an animal: being humans ourselves, we expect far more detail from a humanoid robot. What would this robot have to look like to be appealing instead of scary?

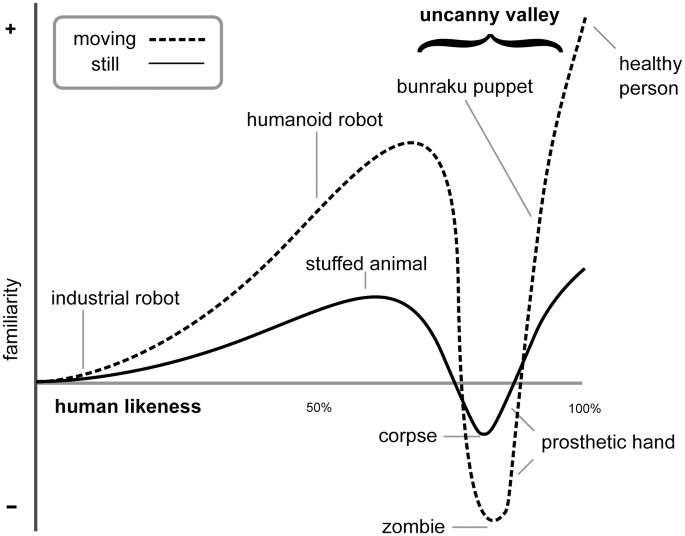

This phenomenon, in which a robot becomes scary as it approaches human-likeness, is referred to as the Uncanny Valley. Humans are able to relate to objects that act and look human in certain ways (generally humanoid), but something strange happens as they come close to reality. If a robot or on-screen character is made to look very close to a human, we often feel scared, disgusted, or even repulsed, and the robot becomes 'uncanny.' It is as if we resent it for trying to pass as human, when the various signs we pick up on as red flags tell us it is not. More on the Uncanny Valley can be found under the Theoretical Exploration.

Because modeling a perfect human is impossible with the current state of the art, designers try to avoid creating a very human-like robot, even if it has to be humanoid to properly fulfill its tasks. However, in a social situation, a robot has to be appealing enough for us to appreciate, value, and even accept it in our environment. On the other hand, if the robot is too 'cute', we tend not to open up to it at all. Instead, we spend our attention watching it, actively 'awwing' at it, and helping it in any way we can. That is not very useful when the robot's purpose is to assist you, although it can help combat loneliness, as shown by, for example, Robinson et al. (2013)<ref>Robinson et al. (2013). ''The Psychosocial Effects of a Companion Robot: A Randomized Controlled Trial''</ref>.

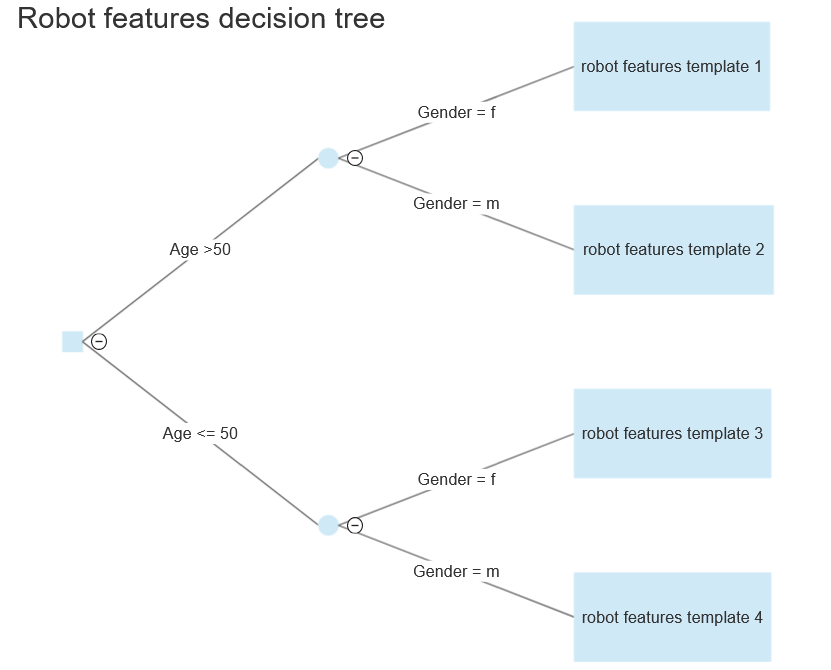

Since most of this technology is still very experimental, it is important that we start defining the variables that determine the Uncanny Valley in advance, using the examples already available. Such examples are widely available. This is not only because of attempts to develop human-like robots, but also because recreating a human face in CGI (Computer Generated Imagery) has become significantly important in the movie-making and gaming industries. We can define variables for various attributes of a robot's appearance and their effects on humans, or on specific groups such as the elderly, adults, and students. Doing so can help designers avoid the pitfalls that others have already fallen into. Designers can then decide on the appearance of a robot based on exactly what they need it to be able to do, which is the purpose of a center for appearance studies.

=== '''Structure of the Wiki''' ===

The contents of our project on this wiki page are structured as follows:

We perform a theoretical exploration of three state-of-the-art anthropomorphic robots, of the human perception of other humans, and of human-robot interaction. Moreover, we extensively study the Uncanny Valley effect mentioned earlier.

This theoretical exploration forms the basis for a questionnaire. How and why the questionnaire was designed is explained in the sections "Godspeed and RoSAS scale" and "Methods". The results of the questionnaire, how each robot was evaluated, and the interpretation of these results are presented in the following section.

A conclusion and discussion close the questionnaire section and thus our deliverable.

Since we did not want to gather information merely for the sake of gathering it, we decided to use this expertise to set up an enterprise.

Next, we explain the details of the enterprise, the societal problem we hope it will help solve, and its vision. Thereafter, we concretely discuss the users involved, the costs and profit of the enterprise, the funding campaign, and the platform for the enterprise.

Lastly, we spoke to experts in the robotics field to evaluate our enterprise, and we discuss its viability in the sections "Input from Experts" and "Future Expansions and Improvements".

=== '''Study Objective''' ===

Designing the appearance of a robot can be quite challenging. If it looks too different from a human, it might be difficult to create a sense of warmth, interaction, and human-likeness. If it is very human-like, it runs the risk of falling into the uncanny valley.


= '''Theoretical Exploration''' =

=== '''Relevance of Robot Appearance''' ===

In a social context, robots may be judged by humans based on their appearance (Walters, M.L. et al., 2008)<ref>Walters, M.L. et al. (2008). "Avoiding the uncanny valley: robot appearance, personality and consistency of behavior in an attention-seeking home scenario for a robot companion."</ref>. The perceived intelligence of a robot is correlated with its attractiveness. This is because humans form a ‘mental model’ of the robot during social interaction and adjust their expectations accordingly:

*“If the appearance and the behavior of the robot are more advanced than the true state of the robot, then people will tend to judge the robot as dishonest as the (social) signals being emitted by the robot, and unconsciously assessed by humans, will be misleading. On the other hand, if the appearance and behavior of the robot are unconsciously signaling that the robot is less attentive, socially or physically capable than it actually is, then humans may misunderstand or not take advantage of the robot to its full abilities.”

It is thus very important to predict and assign the right level of attractiveness for a robot's intellectual capabilities. Moreover, humans attribute different levels of trust and satisfaction to robots depending on how much they like them (Li, D. et al., 2010)<ref name="Li">Li, D. et al. (2010). "A Cross-cultural Study: Effect of Robot Appearance and Task."</ref>. Furthermore, an anthropomorphic robot is said to be better suited when highly social tasks are required (Lohse, M. et al., 2007)<ref>Lohse, M. et al. (2007). "What can I do for you? Appearance and application of robots. In: Artificial intelligence and simulation of behaviour."</ref>. This claim is not without controversy, as some research found no conclusive evidence for it (Li, D. et al., 2010)<ref name="Li"/>.

=== '''State of the Art''' ===

===== '''Atlas''' =====

The Atlas robot was made by Boston Dynamics to accomplish the tasks necessary for search and rescue missions. It has grown in popularity in the public eye, especially because of its human-like appearance. As showcased in the YouTube videos released by its manufacturer, Boston Dynamics (see Appendix B), the robot can perform a set of physical tasks. These include jumping, running on uneven terrain, and acrobatic moves such as a handstand and a somersault. Standing 150 cm tall and weighing 80 kg, the robot is able to maneuver across obstacles with human-like mobility (Boston Dynamics) <ref>Atlas product page, https://www.bostondynamics.com/atlas</ref>. In the past couple of years, the robot's maneuverability has increased substantially and supposedly resembles the movements of professional athletes. The robot has 28 hydraulic joints, which it uses to move fluidly and gracefully. These joints are driven by complex algorithms that optimize which joints to move, and by how much, to reach a target state.

“First, an optimization algorithm transforms high-level descriptions of each maneuver into dynamically-feasible reference motions. Then Atlas tracks the motions using a model predictive controller that smoothly blends from one maneuver to the next,” Boston Dynamics wrote.
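Boston Dynamics does not publish the details of Atlas's controller. The following is therefore only a minimal sketch of the general idea of model predictive control (MPC) that tracks a blended reference, under our own simplifying assumptions:

* A single 1D point mass (a double integrator) stands in for the robot.
* The controller brute-forces every sequence of a few discrete accelerations over a short horizon and applies only the first action before re-planning.
* A smoothstep weight cross-fades between two hypothetical reference maneuvers.

All constants and function names (`DT`, `ACCELS`, `HORIZON`, `simulate`, `blend`, `mpc_step`, `track`) are illustrative.

```python
import itertools

DT = 0.1                   # control period in seconds (assumed)
ACCELS = (-2.0, 0.0, 2.0)  # candidate accelerations, a crude stand-in for actuator limits
HORIZON = 4                # number of future steps the controller looks ahead


def simulate(pos, vel, accel):
    """One step of a 1D double integrator (semi-implicit Euler)."""
    vel = vel + accel * DT
    pos = pos + vel * DT
    return pos, vel


def blend(ref_a, ref_b, t_start, duration):
    """Reference that cross-fades from maneuver ref_a to maneuver ref_b."""
    def ref(t):
        x = min(max((t - t_start) / duration, 0.0), 1.0)
        w = x * x * (3 - 2 * x)  # smoothstep: 0 -> 1 with zero slope at both ends
        return (1 - w) * ref_a(t) + w * ref_b(t)
    return ref


def mpc_step(pos, vel, reference, t):
    """Return the first acceleration of the cheapest sequence over the horizon."""
    best_cost, best_first = float("inf"), 0.0
    for seq in itertools.product(ACCELS, repeat=HORIZON):
        p, v, cost = pos, vel, 0.0
        for k, a in enumerate(seq):
            p, v = simulate(p, v, a)
            # tracking error against the reference plus a small effort penalty
            cost += (p - reference(t + (k + 1) * DT)) ** 2 + 0.001 * a ** 2
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first


def track(reference, steps=50):
    """Receding horizon: re-plan every step, but apply only the first action."""
    pos, vel = 0.0, 0.0
    for i in range(steps):
        accel = mpc_step(pos, vel, reference, i * DT)
        pos, vel = simulate(pos, vel, accel)
    return pos, vel


if __name__ == "__main__":
    # Hypothetical maneuvers: "stay at 0" blended into "stay at 1" between t = 0.5 s and 1.5 s
    reference = blend(lambda t: 0.0, lambda t: 1.0, t_start=0.5, duration=1.0)
    print(track(reference))
```

A real humanoid controller would use a far richer dynamics model and a proper optimizer instead of brute-force enumeration. Enumeration grows exponentially with the horizon; here it already means 3^4 = 81 candidate sequences per step.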


The similarity to the human body is thus only achieved through its structure and movement. However, it is worth mentioning that defining human features such as a round head, a face, and hands are missing from this robot. That is one reason why we estimate that this robot sits just before the uncanny valley.

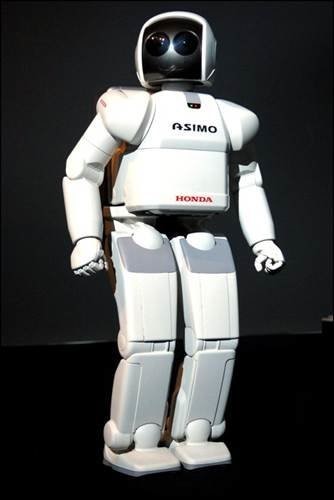

===== '''Honda ASIMO''' =====

The ASIMO (Advanced Step in Innovative Mobility) robot produced by Honda was developed to achieve the same physical abilities as humans, especially walking. Unlike Atlas, this humanoid robot comes equipped with hands and a head, and its structure is closer to that of a human (Honda ASIMO) <ref>Honda ASIMO product page, https://asimo.honda.com/</ref>. ASIMO can also recognize human postures and gestures, moving objects, sounds, faces, and its surrounding environment. Its surroundings are captured by two cameras located in the robot's "eyes". Together, these capabilities allow ASIMO to interact with humans. It is capable of following or facing a person talking to it, and of recognizing and responding to handshakes, waves, and pointing. ASIMO can distinguish between different voices and sounds. It is therefore able to identify a person by their voice and to face whoever is speaking in a conversation. ASIMO's advanced level of interaction with users is why we think this robot will rank higher than Atlas in terms of user preference.
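The text above does not describe how ASIMO determines where a voice comes from. One common technique for a pair of microphones works in two steps. First, estimate the time difference of arrival (TDOA) by cross-correlating the two signals. Then convert that delay into a bearing with the far-field relation θ = arcsin(c·Δt / d), where c is the speed of sound and d the microphone spacing. The sketch below illustrates this with assumed values for the spacing and sample rate. It is not Honda's implementation, and the names `estimate_delay` and `bearing_degrees` are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C
MIC_SPACING = 0.2       # metres between the two microphones (assumed)
SAMPLE_RATE = 16000     # samples per second (assumed)
# Largest physically possible delay, in samples, for this microphone spacing
MAX_LAG = math.ceil(MIC_SPACING / SPEED_OF_SOUND * SAMPLE_RATE)


def estimate_delay(left, right, max_lag=MAX_LAG):
    """Lag (in samples) at which `right` best matches `left`, via plain cross-correlation.

    A positive result means the sound reached the left microphone first.
    """
    best_lag, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = sum(x * right[i + lag] for i, x in enumerate(left) if 0 <= i + lag < len(right))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def bearing_degrees(lag_samples):
    """Far-field bearing: 0 degrees is straight ahead, positive is towards the left microphone."""
    ratio = SPEED_OF_SOUND * (lag_samples / SAMPLE_RATE) / MIC_SPACING
    ratio = max(-1.0, min(1.0, ratio))  # guard against noise pushing |ratio| above 1
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    pulse = [0.0] * 20 + [1.0, 0.5, -0.3] + [0.0] * 20
    delayed = [0.0] * 5 + pulse[:-5]  # the right microphone hears the pulse 5 samples later
    lag = estimate_delay(pulse, delayed)
    print(lag, round(bearing_degrees(lag), 1))
```

With real microphones, reverberation and background noise make the plain cross-correlation peak less reliable. Practical systems therefore often use weighted variants such as GCC-PHAT, or more than two microphones.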

[[File:ASIMO.jpg|300px]]

===== '''Geminoid DK''' =====

The Geminoid DK robot was conceived to push the state of the art in robotic imitation of humans. By creating a surrogate of himself, the creator was able to achieve remarkable human features that would otherwise be hard to create without a base model. With a facial structure almost indistinguishable from a human one, movement is the only remaining factor that contributes to the uncanniness of this robot. The robot mimics the external appearance and facial characteristics of its original: its creator, the Danish professor Henrik Scharfe (Henrik Scharfe, 2011) <ref>Geminoid DK product page, https://robots.ieee.org/robots/geminoiddk/</ref>. Apart from the movements of its face and head, the robot is not able to move. The Geminoid DK has no intelligence of its own and has to be remotely controlled by an operator. Pre-programmed sequences of movements can be executed for subtle motions such as blinking and breathing. Moreover, the operator's speech can be transmitted over the Geminoid's computer network to a speaker located inside the robot. At the moment, the Geminoid DK is used to examine how the presence, appearance, behavior, and personality traits of an anthropomorphic robot affect communication with human partners.

[[File:DK.jpg|600px]]

=== '''Human Perception of Others''' ===

Human beings interact with each other very frequently. These interactions can be partially explained by psychological, social-psychological, and behavioral theories that have been developed over the years. It is very important to understand exactly how humans interact, because many robot developers aim to create robots that are as close to an actual human as possible. Understanding how humans interact with computers and/or technology is not enough; there is an important human factor that needs investigation.

Human non-verbal perception of others is influenced by both physical appearance and body language. Verbal communication is also very intricate and can change our perception of others through the messages that are communicated. For example, people who lie very often are harder to relate to and may find themselves judged by others. However, non-verbal perception is more relevant to us here. People are judged both by their looks and by their actions, and rightfully so. The ability to deduce others’ intentions, moods, and actions from their movement alone is one of the greatest skills that humans possess. Kinematics plays an especially important role in this: ''"human actions visually radiate social cues to which we are exquisitely sensitive"'' (Blake, R., & Shiffrar, M., 2007)<ref>Blake, R., & Shiffrar, M. (2007). "Perception of human motion." ''Annual Review of Psychology'', 58(1), 47-73. doi:10.1146/annurev.psych.57.102904.190152</ref>, and ''“when we observe another individual acting we strongly ‘resonate’ with his or her action”'' (Fadiga, L. et al., 2005)<ref>Fadiga, L. et al. (2005). "Human motor cortex excitability during the perception of others’ action."</ref>.

Although movement contributes greatly to the detection of social cues, it is not strictly necessary. A static picture of a person is enough to detect their mood, as well as some features inferred from their attractiveness. It even produces a physical response in the viewer: angrier-looking people trigger dilation of the viewer’s pupils (Reuten, A. et al., 2018)<ref>Reuten, A. et al. (2018). "Pupillary Responses to Robotic and Human Emotions: The Uncanny Valley and Media Equation Confirmed"</ref>. Furthermore, the level of intelligence of the observed individual can be inferred from their attractiveness (Zebrowitz, L.A. et al., 2002)<ref>Zebrowitz, L.A. et al. (2002). "Looking Smart and Looking Good: Facial Cues to Intelligence and their Origins."</ref>.

=== '''Human Robot Interaction (HRI)''' ===

Robotics integrates ideas from information technology with physical embodiment. Robots share the same physical spaces as people and manipulate some of the very same objects. Human-robot interaction therefore often involves pointers to spaces or objects that are meaningful to both robots and people. Also, many robots have to interact directly with people while performing their tasks. This raises many questions about the 'right way' of interacting.


In its 2002 robotics survey (U.N. & I.F.R.R., 2002)<ref>U.N. and I.F.R.R. (2002). ''United Nations and The International Federation of Robotics: World Robotics 2002''. New York and Geneva: United Nations.</ref>, the United Nations (U.N.) grouped robots into three major categories, primarily defined by their application domains: industrial robotics, professional service robotics, and personal service robotics. The earliest robots belong to the industrial category, which emerged in the early 1960s. Much later, the professional service robot category started growing, and at a much faster pace than industrial robotics. Robots in both categories manipulate and navigate their physical environments. However, professional service robots assist people in the pursuit of their professional goals, largely outside industrial settings. For example, robots that clean up nuclear waste belong to that category.

The last category, personal service robotics, promises the most growth. These robots assist or entertain people in domestic settings or in recreational activities.

The shift from industrial to service robotics and the increasing number of robots that work in close proximity to people raise a number of challenges. One of them is that these robots share the same physical space with people (Thrun, 2004)<ref name="thrus_toward_a_framework_for_human_robot_interaction">Thrun, S. (2004). Toward a Framework for Human-Robot Interaction.</ref>. These people could be professionals trained to operate robots. They could also be children, elderly people, or people with disabilities, whose ability to adapt to robotic technology may be limited.

[[File:0LSUE0_2021_group_12_sony_aibo_robotic_dog.jpg|right|200px]]

One of the key factors in solving challenges like the one mentioned above is autonomy. Industrial robots are mostly designed to perform routine tasks over and over again and are therefore easy to program. Sometimes they require environmental modifications, for example special paint on the floor that helps them navigate. This is no problem in the industrial sector, but it is much harder to accomplish for service robots. Such modifications are not always possible in their environments, which requires these robots to have a higher level of autonomy. Additionally, these robots tend to be targeted at low-cost markets, which makes it much more difficult to endow them with autonomy. For example, the robot dog shown in the figure is equipped with a low-resolution CCD camera and an onboard computer. Its processing power lags behind that of most professional service robots by orders of magnitude (Thrun, 2004)<ref name="thrus_toward_a_framework_for_human_robot_interaction" />.
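The floor-paint example shows why environmental modifications make autonomy cheap. A robot that follows a painted line only needs a few floor sensors and a simple feedback rule. The sketch below is a hypothetical proportional line follower. It assumes five downward-facing sensors that each report how strongly they see the paint, on a scale from 0 to 1. The sensor layout, the gain, and the names `line_offset` and `steering_command` are our own illustrative choices and are not taken from the cited paper.

```python
SENSOR_POSITIONS = (-2, -1, 0, 1, 2)  # lateral sensor offsets: negative = left, positive = right (assumed)
KP = 0.5                              # proportional steering gain (assumed)


def line_offset(readings):
    """Estimate where the painted line lies relative to the robot's centre.

    Returns the reading-weighted average of the sensor positions,
    or None if no sensor sees the line.
    """
    total = sum(readings)
    if total == 0:
        return None
    return sum(p * r for p, r in zip(SENSOR_POSITIONS, readings)) / total


def steering_command(readings):
    """Proportional control: steer towards the line (positive = turn right)."""
    offset = line_offset(readings)
    if offset is None:
        return 0.0  # line lost; a real robot would need a search or stop behaviour here
    return KP * offset


if __name__ == "__main__":
    # Line seen by the two right-most sensors, so the robot steers right
    print(steering_command([0.0, 0.0, 0.0, 1.0, 1.0]))  # prints 0.75
```

The controller itself is trivial. The painted line does most of the perceptual work, and that is exactly what a service robot in an unmodified home cannot rely on.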

Robots need interfaces to interact with people. Industrial robots tend not to interact directly with people, so their interfaces are very limited, whereas most service robots require, and have, much richer interfaces. We can distinguish between two kinds of interaction: indirect and direct. In indirect interaction a person operates the robot, whereas in direct interaction the robot acts on its own and the person responds, or vice versa. As a rule of thumb, interaction with professional service robots is usually indirect, whereas interaction with personal service robots tends to be more direct.

| Line 246: | Line 256: | ||

That being said, human-robot interaction is a field in flux. It develops so fast that the types of interaction possible today differ substantially from those that were possible even a decade ago. Furthermore, interaction can only be studied with the technology that is available, and because robotics is still a very young field, there are still many unknown factors in human-robot interaction (Thrun, 2011)<ref name="thrus_toward_a_framework_for_human_robot_interaction" />.

=== '''Uncanny Valley''' ===

In 1970, the Japanese roboticist Masahiro Mori described a scale for the human emotional response to non-human entities (Mori et al., 2012)<ref>Mori et al (2012). "The Uncanny Valley: The Original Essay by Masahiro Mori"</ref>; a summary is included below. Mori notes that most phenomena in life are described by monotonically increasing functions. However, this is not the case when we climb towards the goal of making an entity seem more human. An entity that is almost, but not exactly, human-like evokes a certain feeling of disgust, as if our mind dislikes being misled.

Mori defined this scale using examples available in his time, around 1970. He suggested that the phenomenon might be related to our natural ability to recognize a corpse and tell it apart from a living human; other theories about the cause of the Uncanny Valley are explored later. Consequently, the minimum of the uncanny valley curve is occupied by a corpse. Mori also established that movement changes this scale drastically: we are particularly repulsed, or even terrified, by a walking corpse. When exactly the thing we find unappealing starts moving and acting like a human, although we know it is not one, it scares us more than the still image does. Image 1 shows the scale and the key examples that defined the first iteration of the Uncanny Valley.

[[File:UncannyValley.jpg|frame|''Image 1.'']]

===== '''Theories About the Uncanny Valley''' =====


There have been numerous attempts at explaining the cognitive mechanism that causes this phenomenon. Some of the most noteworthy theories are listed below.

# '''Pathogen Avoidance:''' Uncanny features of an entity might trigger cognitive responses of disgust that evolved to protect our bodies from disease. The more human an entity looks, the more closely we identify with it. Hence, every defect or small malfunction the entity shows, compared to the human behavior it is trying to imitate, triggers an instinctive warning that we should keep our distance. This warning elicits a behavioral response that matches our response to a corpse or a visibly diseased individual. This is supported by Roberts (2012)<ref>Roberts (2012). "Applied Evolutionary Psychology. Oxford University Press. p. 423."</ref> and Moosa et al. (2010).<ref>Moosa et al (2010). "Danger Avoidance: An Evolutionary Explanation of Uncanny Valley"</ref>

# '''Mate Selection:''' Humans constantly 'rate' other humans based on several instinctive, often bodily, features. When we perceive an entity as human-like, we automatically check it for all these features and can easily be disgusted when our expectations are not met (Rhodes & Zebrowitz, 2002).<ref>Rhodes & Zebrowitz (2002). "Facial Attractiveness: Evolutionary, Cognitive, and Social Perspectives, Ablex Publishing"</ref>

# '''Mortality Salience:''' When an entity almost fools us into accepting it as human, but we can still tell that something is off because of minor mismatched traits, we end up in a state of fear. Knowing that a human model can be recreated by humans themselves not only elicits the less realistic fear of annihilation (a common theme in science fiction), but also reminds us that we might be nothing but soulless machines. Because belief in a soul or a higher entity is deeply rooted in many people, this leads to conflict. Furthermore, it makes us fearful of our own mortality: an almost human entity reminds us of a human in a deteriorated state, and seeing its limits and defects evokes a fear of losing bodily control or even of dying, given that we perceive the entity as human-like enough to relate to it on an emotional level. This theory is explored in more detail by MacDorman & Ishiguro (2006).<ref>MacDorman & Ishiguro (2006). "The uncanny advantage of using androids in cognitive and social science research"</ref>

# '''Conflicting Perceptual Cues:''' This theory suggests that the feeling of eeriness is produced by the activation of conflicting cognitive representations: the mind has both reasons to believe the entity is human and reasons to believe it is not. Several studies support this possibility, e.g. Mathur & Reichling (2016)<ref>Mathur & Reichling (2016). "Navigating a social world with robot partners: A quantitative cartography of the Uncanny Valley"</ref>, who show that the phenomenon poses a cognitive challenge. One particularly clear finding is that the negative impact is maximal at the midpoint of a morph between fake and real (like the characters in the movie Cats), as shown by Yamada et al. (2013)<ref name="Yamada">Yamada et al (2013). "Categorization difficulty is associated with negative evaluation in the 'uncanny valley' phenomenon"</ref> and Ferrey et al. (2015).<ref name="Ferrey">Ferrey et al (2015). "Stimulus-category competition, inhibition, and affective devaluation: a novel account of the uncanny valley"</ref> However, other research shows that this effect can only account for part of the Uncanny Valley effect. Several mechanisms have been linked to this response, and it is still debated which of them, if not all, are responsible: perceptual mismatch<ref>Chattopadhyay & MacDorman (2016). "Familiar faces rendered strange: Why inconsistent realism drives characters into the uncanny valley"</ref>, categorization difficulty<ref name="Yamada" />, frequency-based sensitization<ref>Burley & Schoenherr (2015). "A reappraisal of the uncanny valley: categorical perception or frequency-based sensitization?"</ref> and inhibitory devaluation<ref name="Ferrey"/> are all candidates.

===== '''Design Principles as a Consequence of the Uncanny Valley''' =====

Many designers already follow some form of the design principles below to avoid the Uncanny Valley altogether. While this often means staying in front of the Uncanny Valley, thus making it clear that a character is not trying to be 'real', other principles can help as well.

# '''All body parts of an entity should match each other's level of detail:''' A robot may quickly look uncanny when real and fake elements are mixed to create a character.<ref>Ho et al (2008). "Human emotion and the uncanny valley: A GLM, MDS, and Isomap analysis of robot video ratings"</ref> For example, a robot that moves perfectly like a human but cannot match this with its facial expressions can quickly be seen as uncanny. The realism of its appearance should match the realism of its behavior.<ref name="Goetz">Goetz et al (2003). "Matching robot appearance and behavior to tasks to improve human-robot cooperation"</ref>

# '''Realism and expectation:''' When an entity looks very practical, people's expectations are modest. When an entity looks very human-like, by contrast, people often expect many additional, unnecessary features.<ref name="Goetz"/> It is therefore important that the design is useful, and even essential, to what the entity is supposed to be doing; otherwise its limitations will cause eeriness.

# '''Human facial proportions should always accompany photorealistic texture:''' Only when the texture of an entity is not too human-like is some leeway allowed in these proportions, and deviating from them can then even help to lift a design out of the Uncanny Valley.<ref>MacDorman et al (2009). "Too real for comfort? Uncanny responses to computer generated faces"</ref>

===== '''Critiques of the Uncanny Valley Theory''' =====

* '''Outdated:''' Mori defined his scale for the Uncanny Valley in 1970. While many examples make clear that Mori was indeed onto something, his definition is rather outdated. After all, a Bunraku puppet was the only entity that managed to 'pass' the Uncanny Valley and was therefore considered one of the most human-like figures Mori was aware of. Nowadays, designers can create artificial humans and human-like entities far more convincingly than could be imagined in 1970, which shows that the original scale is indeed outdated; it has therefore gone through several 'iterations' to predict the Uncanny Valley more accurately for state-of-the-art designs.

* '''Generation Specific:''' Younger generations are more used to robots, CGI and the like, and are therefore potentially less affected by this more or less hypothetical phenomenon, as argued by Newitz (2013),<ref>Newitz (2013). "Is the "uncanny valley" a myth?"</ref> who treats the Uncanny Valley as a largely hypothetical issue.

* '''The Uncanny Valley is a collection of distinct phenomena:''' Phenomena labeled as uncanny have very different causes and rely on various stimuli. These stimuli sometimes overlap and can vary with cultural background and comfort level, a person's state of mind and the goal of the entity in question. Examples are differences in perception due to cultural background, one of the unexpected findings of Bartneck et al. (2007),<ref>Bartneck et al (2007). "Is The Uncanny Valley An Uncanny Cliff?"</ref> or simply how humans perceive faces and react when a face is 'odd'.<ref>MacDorman et al (2009). "Too real for comfort? Uncanny responses to computer generated faces"</ref>

* '''Not one degree of human-likeness:''' While the Uncanny Valley is usually pictured as a neat diagram with a single valley near the human end, researchers such as David Hanson<ref>Hanson et al (2005). "Upending the Uncanny Valley"</ref> have pointed out that such a valley may appear anywhere along the otherwise monotonically increasing curve. This idea supports the view that the Uncanny Valley arises from conflicting categorization, or even from problems with categorical perception, which are associated with conditions such as Capgras delusion.<ref>Ellis & Lewis (2001). "Capgras delusion: A window on face recognition"</ref>

* '''Good design can negate the Uncanny Valley:''' Because humans are predisposed to find baby-like features cute, incorporating cartoonish features can help lift a design out of the Uncanny Valley. David Hanson<ref>Hanson et al (2005). "Upending the Uncanny Valley"</ref> supported this by having participants rate photographs of robots at various points on the scale; he could 'fix' the eerie robots by adding baby- and cartoon-like features. This conflicts with the theoretical basis, which states that the human-likeness of an entity should match across all of its (body) parts.

=== '''Godspeed and RoSAS Scale''' ===

===== '''Godspeed Scale''' =====

Because the aim is to determine how a robot is rated on a set of items, and how movement alters these ratings, it is critical to find a scale that measures this accurately. Fortunately, many such scales exist. One of them is the Godspeed scale, developed by Bartneck et al. (2009)<ref name ="Bartneck">Bartneck, C., Kulić, D., Croft, E., & Zoghbi, S. (2009). Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. In International Journal of Social Robotics. https://doi.org/10.1007/s12369-008-0001-3</ref>. This scale assesses how robots score on five dimensions: anthropomorphism, animacy, likeability, perceived intelligence and perceived safety. Each dimension has a set of associated items on which participants rate the robot in question. Each item is presented in a semantic differential format, for example artificial-lifelike, mechanical-organic, unkind-kind, irresponsible-responsible and agitated-calm. Participants evaluate to what extent each item applies to the robot on a 5-point scale between the two endpoints (Bartneck et al., 2009)<ref name ="Bartneck" />.
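To make the scoring concrete, the following Python sketch averages one participant's semantic differential ratings into one score per Godspeed dimension. It is an illustration under assumptions, not the official scoring procedure: the item-to-dimension mapping is a hypothetical, heavily reduced one built from the example items named in this section (the actual questionnaire has several items per dimension), and scoring a dimension as the mean of its items is assumed.

```python
from statistics import mean

# Hypothetical, heavily reduced item-to-dimension mapping for illustration only.
# The item names come from the examples in this section; the real Godspeed
# questionnaire has several items per dimension (see Bartneck et al., 2009).
GODSPEED_ITEMS = {
    "anthropomorphism": ["fake-natural", "artificial-lifelike"],
    "animacy": ["mechanical-organic"],
    "likeability": ["unkind-kind"],
    "perceived intelligence": ["irresponsible-responsible"],
    "perceived safety": ["agitated-calm"],
}


def godspeed_scores(ratings, items=GODSPEED_ITEMS, low=1, high=5):
    """Average one participant's item ratings into one score per dimension.

    Each rating is a point on a semantic differential: `low` means the left
    endpoint (e.g. 'artificial') fits best, `high` means the right endpoint
    (e.g. 'lifelike') fits best.
    """
    scores = {}
    for dimension, dimension_items in items.items():
        values = [ratings[item] for item in dimension_items]
        for value in values:
            if not low <= value <= high:
                raise ValueError(f"rating {value} is outside the {low}-{high} range")
        scores[dimension] = mean(values)
    return scores


# Hypothetical ratings of one robot by one participant.
ratings = {
    "fake-natural": 2, "artificial-lifelike": 3, "mechanical-organic": 2,
    "unkind-kind": 4, "irresponsible-responsible": 4, "agitated-calm": 5,
}
print(godspeed_scores(ratings))
```

Range checking each rating before averaging catches data-entry errors early, which matters because an out-of-range value would otherwise silently shift a dimension score.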

===== '''Godspeed Scale Critiques''' =====

Even though this is a widely used tool for assessing robot appearance, it has been criticized. Some scholars have argued that the scale has quite a few issues and needs further investigation. Here we discuss two main issues with the Godspeed scale, as raised by Carpinella et al. (2017)<ref name="Carpinella">Carpinella, C. M., Wyman, A. B., Perez, M. A., & Stroessner, S. J. (2017). The Robotic Social Attributes Scale (RoSAS): Development and Validation. ACM/IEEE International Conference on Human-Robot Interaction. https://doi.org/10.1145/2909824.3020208</ref> and Ho & MacDorman (2010)<ref name="ChinChang">Ho, C. C., & MacDorman, K. F. (2017). Measuring the Uncanny Valley Effect: Refinements to Indices for Perceived Humanness, Attractiveness, and Eeriness. International Journal of Social Robotics. https://doi.org/10.1007/s12369-016-0380-9</ref>.

First of all, the items do not always load onto the dimensions as expected. For example, according to the Godspeed scale the item fake-natural should load onto the anthropomorphism dimension, but research has shown that this is not always the case. This means that the fake-natural item might contribute little to the anthropomorphism score and could be statistically useless. Conversely, items sometimes load onto dimensions they are not supposed to load onto. The item inert-interactive loading onto the perceived intelligence dimension is an example: according to the Godspeed scale it does not belong there, yet research suggests that it might (Carpinella et al., 2017)<ref name="Carpinella" />. Situations like these suggest that the dimensions and their corresponding items might need further investigation and/or tweaking.

Secondly, the scale uses a semantic differential response format, which means that each item has two opposite endpoints instead of consisting of a single word. This makes sense when an item uses antonyms as endpoints, like unpleasant-pleasant, but in some cases the endpoints are not antonyms (Ho & MacDorman, 2010)<ref name="ChinChang" />. It can be argued, for example, that the item awful-nice has two endpoints that are not direct antonyms of each other. This suggests that the Godspeed scale may be less precise than one might think.

===== '''RoSAS: Robot Social Attribute Scale''' =====

Building on the work of Bartneck et al. (2009)<ref name ="Bartneck" />, Carpinella et al. (2017)<ref name="Carpinella" /> developed a new scale called the Robotic Social Attributes Scale, or RoSAS for short. This scale is based on extensive research and has its roots in the Godspeed scale. There are three main differences between the Godspeed scale and the RoSAS.

{| border="1" style="border-collapse:collapse;text-align: left;width: 60%;float:right; margin-left: 20px;margin-bottom:20px"

|+''Table 1. The three factors in the RoSAS: warmth, competence and discomfort.''

!style="padding: 5px"|Discomfort

!style="padding: 5px"|Competence

!style="padding: 5px"|Warmth

|-

|style="padding: 5px"|Aggressive

| Line 333: | Line 341: | ||

Firstly, the RoSAS assesses robot appearance on three dimensions instead of five: warmth, discomfort and competence. These dimensions are derived from the Godspeed scale but are intended to be more reliable and robust (Carpinella et al., 2017)<ref name="Carpinella" />. Each dimension is measured with six items, listed in table 1.
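The following Python sketch shows how the six items per dimension could be turned into dimension scores for one participant, one robot and one condition, and how the change between the still-image and video conditions could then be read off. The item lists are the RoSAS items as reported by Carpinella et al. (2017) and should be checked against table 1; scoring a dimension as the mean of its items, the function names and the example ratings are assumptions for illustration.

```python
from statistics import mean

# RoSAS items per dimension as reported by Carpinella et al. (2017);
# compare with table 1 before reusing this mapping.
ROSAS_ITEMS = {
    "warmth": ["happy", "feeling", "social", "organic", "compassionate", "emotional"],
    "competence": ["capable", "responsive", "interactive", "reliable", "competent", "knowledgeable"],
    "discomfort": ["scary", "strange", "awkward", "dangerous", "awful", "aggressive"],
}


def rosas_scores(ratings):
    """Mean rating per RoSAS dimension for one participant, robot and condition."""
    return {
        dimension: mean([ratings[item] for item in items])
        for dimension, items in ROSAS_ITEMS.items()
    }


def movement_effect(image_ratings, video_ratings):
    """Video score minus still-image score for each dimension.

    A positive value means the robot was rated higher on that dimension
    after it was seen moving.
    """
    image = rosas_scores(image_ratings)
    video = rosas_scores(video_ratings)
    return {dimension: video[dimension] - image[dimension] for dimension in ROSAS_ITEMS}


# Hypothetical ratings: identical in both conditions, except that every
# discomfort item is rated two points higher after seeing the video.
all_items = [item for items in ROSAS_ITEMS.values() for item in items]
image_ratings = {item: 3 for item in all_items}
video_ratings = dict(image_ratings, **{item: 5 for item in ROSAS_ITEMS["discomfort"]})
print(movement_effect(image_ratings, video_ratings))
```

Keeping the item lists in one mapping means the same scoring code serves all three robots and both conditions.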

| Line 345: | Line 353: | ||

All participants will be asked to fill out the questionnaire. In this questionnaire, subjects will see, for each of three anthropomorphic robots, an image of the robot and a video of the same robot moving. For the exact form and contents of the questionnaire, please refer to appendix A. The robots used in the survey fall into three categories: slightly humanlike, humanlike and extremely humanlike. These categories are illustrated by Atlas (Boston Dynamics), Honda ASIMO (Honda) and Geminoid DK (Aalborg University, Osaka University, Kokoro & ATR), respectively.

Subject responses will be measured using the Robotic Social Attributes Scale (RoSAS), developed by Carpinella et al. (2017)<ref name="Carpinella" />. Each robot will be rated on warmth, discomfort and competence twice: once after seeing a still image and once after seeing a video. To minimize the effect of confounding variables such as habituation to the robot on participants' judgements, the order of presentation is counterbalanced: half of the participants will first see the image and then the video (normal order), and the other half will first see the video and then the image (reversed order). The order in which the robots are presented is randomized. For a visual representation, please refer to table 2. Each of the three dimensions (warmth, discomfort and competence) consists of six items intended to measure that dimension. Furthermore, after rating a robot in both the image and the video condition, participants will be asked whether their opinion of the robot changed after the second exposure, and if so, how and why. We use a convenience sample of 40 students: during the COVID-19 pandemic it can be hard to find participants, and students are the easiest for us to reach. Students are also a sensible choice because many of them will be the engineers designing robots in the future. We set the sample size at 40 because we considered this achievable while still reasonably large.
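The counterbalanced design described above can be sketched in Python as follows. This is an illustrative assumption, not the procedure the survey tool actually used: the function name `assign_conditions`, the seed, and the choice to apply each participant's exposure order to all three robots are hypothetical.

```python
import random

ROBOTS = ["Atlas", "Honda ASIMO", "Geminoid DK"]


def assign_conditions(n_participants=40, seed=None):
    """Counterbalance exposure order across participants and randomize robot order.

    Half of the participants (rounded down) get the normal order (image, then
    video); the rest get the reversed order (video, then image).
    """
    rng = random.Random(seed)
    n_normal = n_participants // 2
    orders = ["normal"] * n_normal + ["reversed"] * (n_participants - n_normal)
    rng.shuffle(orders)  # which participant gets which order is random

    schedule = []
    for participant, order in enumerate(orders, start=1):
        robots = ROBOTS[:]
        rng.shuffle(robots)  # robot presentation order is randomized per participant
        exposures = ("image", "video") if order == "normal" else ("video", "image")
        schedule.append(
            {"participant": participant, "order": order, "robots": robots, "exposures": exposures}
        )
    return schedule


for row in assign_conditions(n_participants=4, seed=12):
    print(row)
```

Fixing the number of participants per order before shuffling guarantees an exact 50/50 split, which a coin flip per participant would not.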

The statistical software that will be used to analyze the data is Stata/IC 16.0.


The survey data were processed in two ways. First, the RoSAS responses were analyzed with statistical tests (t tests and the Wilcoxon rank-sum test) to test for differences in mean scores on each dimension between the moving (video) and non-moving (still image) conditions. Apart from the RoSAS items, participants were also asked, for each robot, whether their opinion of the robot changed after the second exposure, and if so, how and why. These responses are qualitative data and were therefore processed using a simple thematic analysis.

=== '''RoSAS Data''' ===

The data for each of the three robots were analyzed separately. For each robot, the 18 RoSAS items were divided over the three dimensions defined by the RoSAS scale (warmth, discomfort and competence), and Cronbach's alpha was used to check the internal consistency of each dimension. Then, for each dimension, the difference in means between the still-image and video conditions was computed. Which statistical test to perform was determined by examining the distribution of each dimension with Shapiro-Wilk W tests and skewness-kurtosis tests. If these tests indicated a normal distribution, a two-sided t test was performed. If not, we tried to transform the data, and if this still did not yield a usable distribution, a Wilcoxon rank-sum test was performed. In all of these tests, H<sub>0</sub>: μ<sub>moving</sub> = μ<sub>notmoving</sub>, H<sub>a</sub>: μ<sub>moving</sub> ≠ μ<sub>notmoving</sub>.
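Cronbach's alpha for a dimension compares the summed variance of its items with the variance of the respondents' total scores. The sketch below is a minimal pure-Python illustration, not the Stata routine used in the study; the data layout (one row per respondent, one column per item of a single dimension) and scoring a dimension as the mean of its items are assumptions.

```python
from statistics import mean, variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one dimension.

    responses[r][i] is respondent r's rating on item i; every row must
    contain the same items (six per RoSAS dimension).
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def dimension_score(row):
    """Assumed scoring: a respondent's dimension score is the mean item rating."""
    return mean(row)
```

Alpha approaches 1 when the items move together across respondents and drops as they disagree, which is why it serves as a check that the six items of a dimension measure the same construct.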

===== '''Atlas''' =====

For warmth, there was a significant difference in means between the movement conditions (μ<sub>moving</sub> = 3.61, σ<sub>moving</sub> = 1.34, μ<sub>notmoving</sub> = 2.85, σ<sub>notmoving</sub> = 1.19, p = 9.00 * 10<sup>-3</sup>). Both for discomfort (μ<sub>moving</sub> = 2.54, σ<sub>moving</sub> = 9.86 * 10<sup>-1</sup>, μ<sub>notmoving</sub> = 2.98, σ<sub>notmoving</sub> = 1.17, p = 7.16 * 10<sup>-2</sup>) and competence (μ<sub>moving</sub> = 5.02, σ<sub>moving</sub> = 9.62 * 10<sup>-1</sup>, μ<sub>notmoving</sub> = 4.70, σ<sub>notmoving</sub> = 1.09, p = 2.47 * 10<sup>-1</sup>), there was no statistically significant difference in means between the movement conditions. To compute these values, a two-sided t test was used for warmth and discomfort, and a rank sum test was used for competence.
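As a rough consistency check on the warmth result for Atlas, the two-sample t statistic can be recomputed from the reported (rounded) summary statistics. This assumes n = 40 ratings per condition, the planned sample size; the final n is not stated here. With equal group sizes, the pooled and Welch forms of the statistic coincide:

```latex
t = \frac{\mu_{moving} - \mu_{notmoving}}{\sqrt{(\sigma_{moving}^2 + \sigma_{notmoving}^2)/n}}
  = \frac{3.61 - 2.85}{\sqrt{(1.34^2 + 1.19^2)/40}}
  = \frac{0.76}{\sqrt{0.0803}}
  \approx \frac{0.76}{0.2834}
  \approx 2.68
```

With 2n - 2 = 78 degrees of freedom, the two-sided 1% critical value is about 2.64, so p falls just below 0.01, in line with the reported p = 9.00 * 10<sup>-3</sup>.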

===== '''ASIMO''' =====

Both warmth (μ<sub>moving</sub> = 4.67, σ<sub>moving</sub> = 1.46, μ<sub>notmoving</sub> = 3.53, σ<sub>notmoving</sub> = 1.45, p = 7.00 * 10<sup>-4</sup>) and competence (μ<sub>moving</sub> = 5.10, σ<sub>moving</sub> = 1.32, μ<sub>notmoving</sub> = 4.13, σ<sub>notmoving</sub> = 1.31, p = 1.50 * 10<sup>-3</sup>) differ significantly between the movement conditions. As for Atlas, there is no significant difference in means for discomfort (μ<sub>moving</sub> = 2.13, σ<sub>moving</sub> = 8.64 * 10<sup>-1</sup>, μ<sub>notmoving</sub> = 2.50, σ<sub>notmoving</sub> = 9.40 * 10<sup>-1</sup>, p = 6.99 * 10<sup>-2</sup>). Again, a two-sided t test was used to compute the values for warmth and discomfort, whereas a rank sum test was used for competence.
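The rank sum test used for competence (for both Atlas and ASIMO) compares the two conditions through the ranks of the pooled ratings rather than the raw scores, so it does not require normality. Below is a minimal pure-Python sketch of a two-sided Wilcoxon rank-sum test using the large-sample normal approximation with a tie correction, which matters for rating-scale data with many ties. It is not the Stata routine used in the study, so its p-values may differ slightly from the reported ones.

```python
import math


def average_ranks(values):
    """1-based ranks of values, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation, tie-corrected).

    Returns (z, p), where z is positive when x tends to be larger than y.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    w = sum(ranks[:n1])  # rank sum of the first sample
    expected = n1 * (n + 1) / 2
    # Tie correction: subtract the contribution of each group of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_w = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0:  # all observations identical: no evidence of a difference
        return 0.0, 1.0
    z = (w - expected) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p
```

For example, `rank_sum_test(moving_scores, still_scores)` would take two lists of competence dimension scores (hypothetical variable names) and return the z statistic and the two-sided p-value.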

===== '''Geminoid DK''' =====

None of the dimensions had a statistically significant difference in means between the movement conditions (warmth: μ<sub>moving</sub> = 3.91, σ<sub>moving</sub> = 1.54, μ<sub>notmoving</sub> = 4.20, σ<sub>notmoving</sub> = 1.43, p = 3.89 * 10<sup>-1</sup>; discomfort: μ<sub>moving</sub> = 3.81, σ<sub>moving</sub> = 1.48, μ<sub>notmoving</sub> = 3.54, σ<sub>notmoving</sub> = 1.43, p = 4.15 * 10<sup>-1</sup>; competence: μ<sub>moving</sub> = 4.11, σ<sub>moving</sub> = 1.32, μ<sub>notmoving</sub> = 4.33, σ<sub>notmoving</sub> = 1.30, p = 4.52 * 10<sup>-1</sup>). All values corresponding to Geminoid DK were computed using two-sided t tests.


|}

=== '''Qualitative Data''' ===

To analyze the qualitative data obtained from the open questions, we performed a thematic analysis. All answers were analyzed one by one, and in an iterative fashion, five main themes were identified. Almost all responses can be associated with one of the following five themes. Each theme is illustrated with example responses from participants. For the full document with all color-coded responses, please refer to appendix C.

# The video makes the robot look more friendly.


#* ''"I think the unnatural movement in the video influenced my view of the picture. It does look more natural when standing still compared to when it's moving."''

#* ''"In the picture the robot looks like a human. In the video, its movements show that it is a robot."''

#* ''"It looked even more like a real human."'' (This person saw the video first, then the picture; this is a response to the picture.)

=== '''Interpretation''' ===

Several conclusions can be drawn from the obtained results.


=== '''Future Research''' ===

First and foremost, future research should be conducted on a random sample, so that the participants form a broader and more representative group. In this study, the convenience sample, necessitated by a lack of volunteers, was a limitation; in the future, research done by the enterprise will use randomly chosen individuals. Because future studies will reach a broader audience, we want to include questions that distinguish participants by ethnicity, culture, religion, sex and age. Hopefully, this will reveal significant differences between these groups, so that robot design can be improved for each group individually.
For example, if we find that the Asian market does not like Geminoid DK as much and prefers a robot that better fits its culture, future studies could include more examples to measure accurately how strongly this factor influences likeability. Based on prior research, culture is already expected to influence people's opinions, but the magnitude of this influence needs to be measured more precisely. An international robot manufacturer would want to know for sure whether designing an entirely new robot appearance for the Asian market is worth the production cost relative to the expected return. The same point applies to elderly-looking robots, which might be perceived differently by people of different ages.

= '''Enterprise''' =

==='''Introduction'''===