PRE2020 3 Group9: Difference between revisions

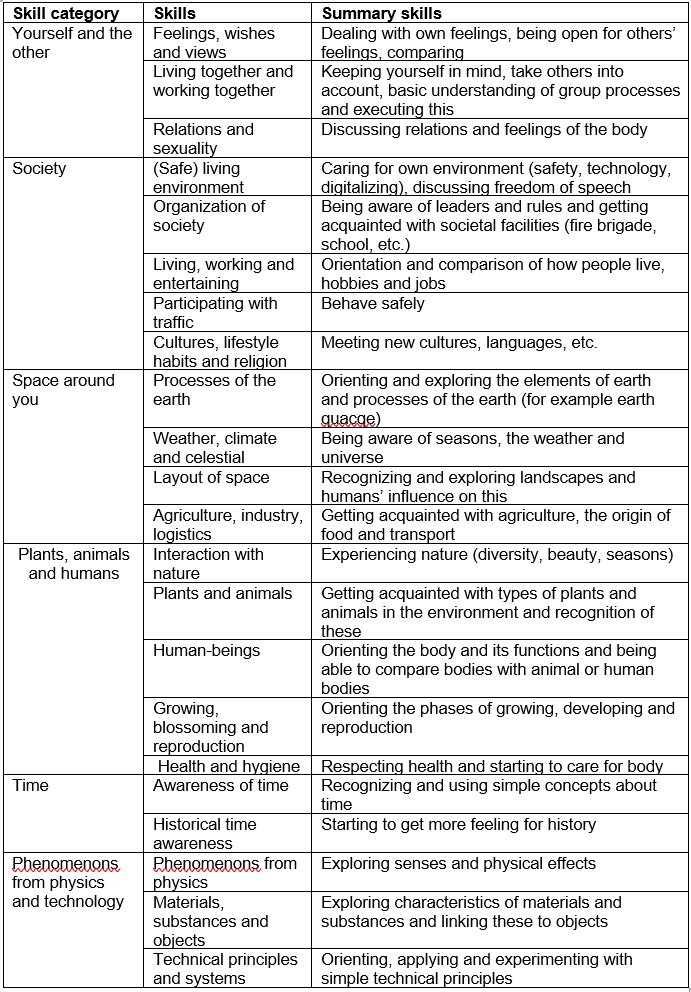

TUe\20182065 (talk | contribs) |

|||

| (35 intermediate revisions by 4 users not shown) | |||

| Line 447: | Line 447: | ||

=Deliverable 1: Local navigation model= | =Deliverable 1: Local navigation model= | ||

This chapter describes how a model of the path planning is made. It will also describe the steps that have been taken to try to achieve local navigation. Unfortunately the local navigation goal was not met, but a model of the path planning was created successfully. | This chapter describes how a model of the path planning is made. It will also describe the steps that have been taken to try to achieve local navigation. Unfortunately the local navigation goal was not met, but a model of the path planning was created successfully. | ||

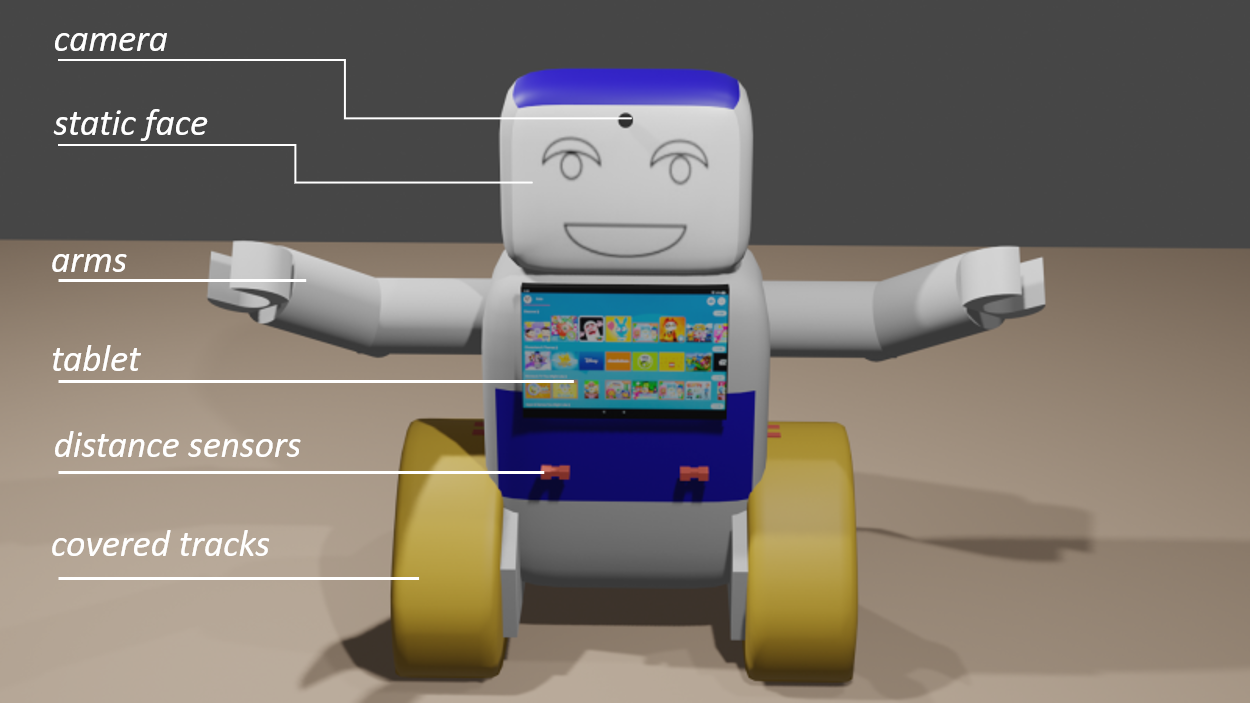

'''Why should Rubby move?''' | |||

Rubby has to move so that it always faces the child. When the parents are working and want to check whether their child is safe, they can see on their monitor what the robot is filming, so the robot's camera should always face the child. Movement is also needed because future games will include physical training, for example playing with a ball (when the robot pushes against a ball, it starts rolling and the child can play with it). Movement will also matter once facial expression recognition is included: when the robot faces the child, it can interact with the child when certain emotions are detected. Local navigation is needed to avoid obstacles while achieving these goals. | |||

'''The difference between navigation, localization and path planning''' | '''The difference between navigation, localization and path planning''' | ||

| Line 471: | Line 475: | ||

'''Roadmap path planner''' | '''Roadmap path planner''' | ||

When the map has been inflated by the dimension of the robot, mobileRobotPRM object is used as a roadmap path planner. The object uses the map to generate a roadmap, which is a network graph of possible paths in the map based on free and occupied spaces. | When the map has been inflated by the dimension of the robot, mobileRobotPRM object is used as a roadmap path planner. The object uses the map to generate a roadmap, which is a network graph of possible paths in the map based on free and occupied spaces. To find an obstacle-free path from start to end location, the number of nodes and connection distances are adapted so the complexity is fitted into the map. After the map is defined, the mobileRobotPRM path planner generates the specified number of nodes throughout the free spaces in the map. A connection between nodes is made when a line between two nodes contains no obstacles and is within the specified connection distance. You can see this in the figure PRM Algorithm. | ||
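The roadmap construction described above can be sketched in a few lines. This is a minimal Python illustration of the probabilistic roadmap idea, not the mobileRobotPRM implementation from MATLAB; the grid representation (0 = free, 1 = occupied, assumed to be already inflated), the function names and the sampling step are assumptions made for this example.

```python
import math
import random


def is_free(grid, x, y):
    """Return True if (x, y) lies inside the map and in an unoccupied cell."""
    col, row = math.floor(x), math.floor(y)
    return 0 <= row < len(grid) and 0 <= col < len(grid[0]) and grid[row][col] == 0


def line_is_free(grid, a, b, step=0.1):
    """Check sample points along the segment a-b, spaced at most roughly `step` apart."""
    n = max(1, int(math.dist(a, b) / step))
    return all(
        is_free(grid, a[0] + (b[0] - a[0]) * i / n, a[1] + (b[1] - a[1]) * i / n)
        for i in range(n + 1)
    )


def build_roadmap(grid, num_nodes, connection_distance, seed=0):
    """Sample nodes in free space and connect pairs that are close and unobstructed.

    Assumes the grid contains at least one free cell, otherwise sampling never ends.
    """
    rng = random.Random(seed)
    height, width = len(grid), len(grid[0])

    # Step 1: scatter the requested number of nodes over the free space.
    nodes = []
    while len(nodes) < num_nodes:
        p = (rng.uniform(0, width), rng.uniform(0, height))
        if is_free(grid, *p):
            nodes.append(p)

    # Step 2: connect two nodes when they are within the connection distance
    # and the straight line between them crosses no occupied cell.
    edges = {i: [] for i in range(num_nodes)}
    for i in range(num_nodes):
        for j in range(i + 1, num_nodes):
            close = math.dist(nodes[i], nodes[j]) <= connection_distance
            if close and line_is_free(grid, nodes[i], nodes[j]):
                edges[i].append(j)
                edges[j].append(i)
    return nodes, edges
```

A path between a start and an end location would then be found with a graph search over `edges`. Raising the number of nodes or the connection distance makes the roadmap denser, at the cost of more line checks.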

'''Controller''' | '''Controller''' | ||

| Line 481: | Line 485: | ||

== Progress on Local Navigation == | == Progress on Local Navigation == | ||

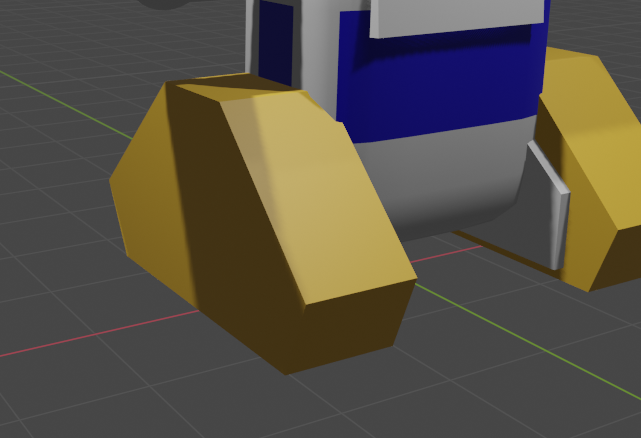

[[File:Simscape_only_movement.PNG|400px|thumb|right|Overview of simscape model]] | |||

A start was made on developing local navigation for the robot. To start off, a model of Rubby was made in the Unified Robot Description Format (urdf). This model was kinematically similar to the actual robot (it moved in exactly the same way), but the shape was simplified to make simulation easier. The goal was to load this model into a simulation environment and test the obstacle avoidance capabilities of the robot. A LiDAR sensor was to be used to identify obstacles, and a program to avoid them was to be written in Matlab. Two different simulation environments were tested. The first one was the simulink simulation environment and the second one was Gazebo in cooperation with Matlab Simulink. | A start was made on developing local navigation for the robot. To start off, a model of Rubby was made in the Unified Robot Description Format (urdf). This model was kinematically similar to the actual robot (it moved in exactly the same way), but the shape was simplified to make simulation easier. The goal was to load this model into a simulation environment and test the obstacle avoidance capabilities of the robot. A LiDAR sensor was to be used to identify obstacles, and a program to avoid them was to be written in Matlab. Two different simulation environments were tested. The first one was the simulink simulation environment and the second one was Gazebo in cooperation with Matlab Simulink. | ||

'''Simulink Simulation''' | '''Simulink Simulation''' | ||

| Line 492: | Line 497: | ||

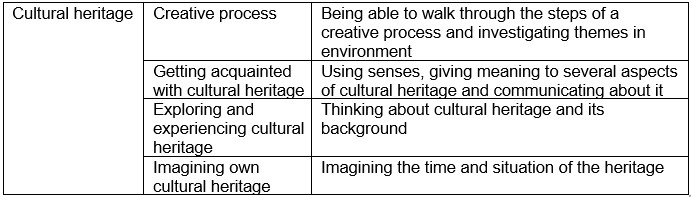

The first step was achieved by analytically determining the motion of the robot for given wheel positions. The exact derivation of the motion of the robot will not be elaborated on here, but the conclusion was that for a given velocity of both the wheels, the robot would move in the desired way. An overview of the simscape model that defined the motion of the robot can be seen in the figure. | The first step was achieved by analytically determining the motion of the robot for given wheel positions. The exact derivation of the motion of the robot will not be elaborated on here, but the conclusion was that for a given velocity of both the wheels, the robot would move in the desired way. An overview of the simscape model that defined the motion of the robot can be seen in the figure. | ||
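To illustrate how two wheel velocities determine the motion of the robot, the sketch below uses the standard differential-drive model. This is an assumed textbook model in Python, not the derivation used for the simscape model; the parameter names `wheel_radius` and `track_width` and the example values are hypothetical, and slip is ignored.

```python
import math


def step_pose(x, y, theta, omega_left, omega_right, wheel_radius, track_width, dt):
    """Advance the pose (x, y, theta) by one forward-Euler time step.

    omega_left and omega_right are wheel angular velocities in rad/s,
    wheel_radius and track_width are in metres, dt is in seconds.
    """
    v_left = wheel_radius * omega_left
    v_right = wheel_radius * omega_right

    # Forward speed is the mean of the two wheel speeds;
    # the yaw rate follows from their difference.
    v = (v_right + v_left) / 2.0
    yaw_rate = (v_right - v_left) / track_width

    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += yaw_rate * dt
    return x, y, theta


# Equal wheel velocities drive the robot straight ahead.
straight = step_pose(0.0, 0.0, 0.0, 2.0, 2.0, 0.05, 0.3, 1.0)

# Opposite wheel velocities turn the robot on the spot.
spin = step_pose(0.0, 0.0, 0.0, -2.0, 2.0, 0.05, 0.3, 1.0)
```

With equal wheel velocities the heading stays constant, and with opposite velocities the position stays fixed while the robot rotates, which is the behaviour needed to keep facing a target.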

The second step was achieved by using a test environment used in a webinar by Mathworks (MathWorks Robotics and Autonomous Systems Team, 2021). | The second step was achieved by using a test environment used in a webinar by Mathworks (MathWorks Robotics and Autonomous Systems Team, 2021). A video of the robot moving through this environment can be seen on: https://youtu.be/NxY3lH0EniM | ||

[[File:rubbyingazebo.jpg|320px|thumb|right|Rubby in the gazebo environment]] | |||

Adding sensors in the simulink environment has to be done entirely manually, meaning that for a LiDAR sensor with 180 rays, all 180 rays have to be defined and implemented manually. This is of course not a completely straightforward task and after some further consideration, it was decided to use the Gazebo simulation environment instead. This environment automatically generates all of the sensor data, which is a far more efficient way of doing things. Gazebo can also perform Co-simulations with Matlab simulink, which allowed us to use the pre-existing knowledge of this program to develop a control algorithm. | Adding sensors in the simulink environment has to be done entirely manually, meaning that for a LiDAR sensor with 180 rays, all 180 rays have to be defined and implemented manually. This is of course not a completely straightforward task and after some further consideration, it was decided to use the Gazebo simulation environment instead. This environment automatically generates all of the sensor data, which is a far more efficient way of doing things. Gazebo can also perform Co-simulations with Matlab simulink, which allowed us to use the pre-existing knowledge of this program to develop a control algorithm. | ||
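To give an idea of what defining the rays by hand involves, the sketch below simulates a planar LiDAR by marching each ray through an occupancy grid until it meets an occupied cell or leaves the map. It is a simplified Python illustration under assumed conventions (grid cells of size 1, 0 = free, 1 = occupied, hypothetical function names), not the Simulink or Gazebo sensor model.

```python
import math


def cast_ray(grid, x, y, angle, max_range, step=0.05):
    """Return the distance travelled along one ray before it hits something.

    The map border counts as an obstacle. The result is accurate to about `step`.
    """
    r = 0.0
    while r < max_range:
        px = x + r * math.cos(angle)
        py = y + r * math.sin(angle)
        col, row = math.floor(px), math.floor(py)
        outside = not (0 <= row < len(grid) and 0 <= col < len(grid[0]))
        if outside or grid[row][col] == 1:
            return r
        r += step
    return max_range


def scan(grid, x, y, heading, num_rays=180, fov=math.pi, max_range=10.0):
    """Cast num_rays rays spread evenly over the field of view (num_rays >= 2)."""
    start = heading - fov / 2.0
    return [
        cast_ray(grid, x, y, start + fov * i / (num_rays - 1), max_range)
        for i in range(num_rays)
    ]
```

Each entry of the list returned by `scan` is one range reading, so a 180-ray sensor yields 180 distances per scan.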

'''Gazebo Simulation''' | '''Gazebo Simulation''' | ||

[[File:Lidar_data.jpg|320px|thumb|right| | [[File:Lidar_data.jpg|320px|thumb|right| LiDAR data from the Gazebo simulation]] | ||

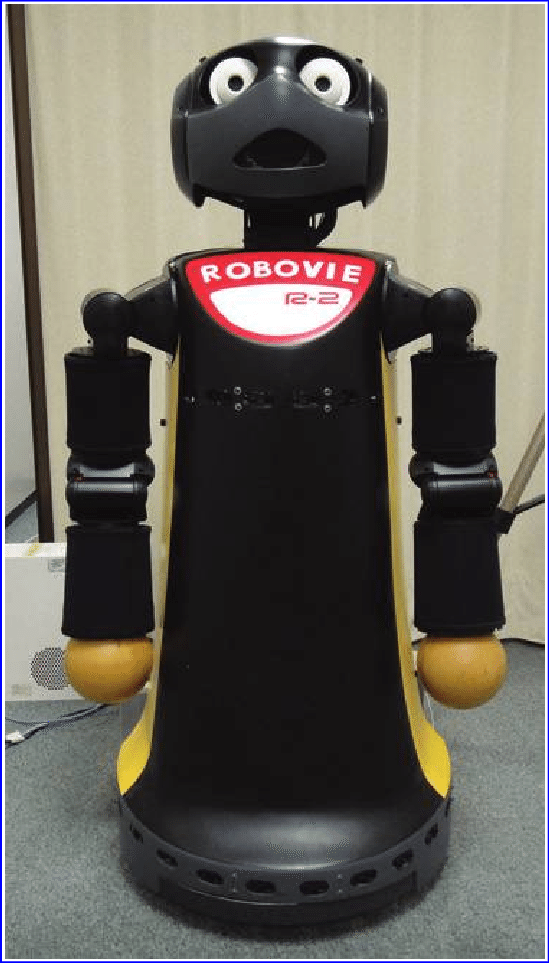

Since Gazebo has limited support on windows, it had to be used on a Linux system (Ubuntu in this case). Implementing the robot in Gazebo had some other challenges as well. To launch a world in Gazebo with the robot in it, ROS (Robot Operating System) packages had to be used. A package was made for both the Robot and the world that was designed within gazebo. To test the connection to matlab, | Since Gazebo has limited support on windows, it had to be used on a Linux system (Ubuntu in this case). Implementing the robot in Gazebo had some other challenges as well. To launch a world in Gazebo with the robot in it, ROS (Robot Operating System) packages had to be used. A package was made for both the Robot and the world that was designed within gazebo. The robot and a LiDAR sensor could then be seen in the world, as can be seen in the figure on the right. To test the connection to matlab, the sensor data from this LiDAR was plotted in Matlab, an example of which can be seen in the figure. When this LiDAR data corresponded to the data that was expected, see the figure on the right, an attempt was made at adding such a LiDAR sensor to the robot. Since the world file and the robot file were in two different file formats, it was not just a case of copy-pasting the code used to make the sensor from the world file and adding it to the robot. The support for Sensors in the urdf file format is small at best, and significant knowledge of the ROS and the Gazebo program is required to properly implement this. This knowledge is not present within the group however, and there was not enough time to properly acquire this within the time frame given for this project. | ||

'''Continuation''' | '''Continuation''' | ||

| Line 552: | Line 558: | ||

In [[#Appendix F: Designs in Blender|Appendix F]] | In [[#Appendix F: Designs in Blender|Appendix F]] more figures of the preliminary designs in Blender can be found. | ||

==Final design== | ==Final design== | ||

| Line 721: | Line 727: | ||

It is important that all stakeholders are involved in the development plan from beginning to end. The primary, secondary and tertiary user needs are therefore key elements in the future plan. | It is important that all stakeholders are involved in the development plan from beginning to end. The primary, secondary and tertiary user needs are therefore key elements in the future plan. | ||

The following stakeholders are involved in the development of Rubby: | The following stakeholders are involved in the development of Rubby: | ||

*Children: These are the primary users. Therefore, their needs form the most important factor in the future plan. They need to actually benefit from Rubby, the robot entertains them in an interactional way and is not only a toy, but also a 'buddy'. The goal is that the child will develop | *Children: These are the primary users. Therefore, their needs form the most important factor in the future plan. They need to actually benefit from Rubby, the robot entertains them in an interactional way and is not only a toy, but also a 'buddy'. The goal is that the child will develop its educational and general skills. | ||

*Parents: These are the secondary users. They benefit from Rubby in such a way that they can work from home more easily without being disturbed and also by having children that are happier and are more supported in their learning development. Therefore, their involvement in the future plan is of great importance. | *Parents: These are the secondary users. They benefit from Rubby in such a way that they can work from home more easily without being disturbed and also by having children that are happier and are more supported in their learning development. Therefore, their involvement in the future plan is of great importance. | ||

*Teachers: They can be seen as the tertiary users. When children are ill they are still able to learn things at home. Development of basic skills can be supported by the robot so more time can be spent on more profound subjects. Hence, teachers' experiences and needs should also be taken into account. | *Teachers: They can be seen as the tertiary users. When children are ill they are still able to learn things at home. Development of basic skills can be supported by the robot so more time can be spent on more profound subjects. Hence, teachers' experiences and needs should also be taken into account. | ||

| Line 727: | Line 733: | ||

*Schools: These are important stakeholders, since there is a shortage of teachers and Rubby can therefore be of added value in education. | *Schools: These are important stakeholders, since there is a shortage of teachers and Rubby can therefore be of added value in education. | ||

*Ministry of education: The same holds for this stakeholder. Furthermore, the implementation of Rubby can help to give each child equal chances to learn. Hence it might be interesting for the ministry to invest in the robot. | *Ministry of education: The same holds for this stakeholder. Furthermore, the implementation of Rubby can help to give each child equal chances to learn. Hence it might be interesting for the ministry to invest in the robot. | ||

*Teaching method companies: These stakeholders benefit from the robot, as their educational | *Teaching method companies: These stakeholders benefit from the robot, as their educational programs can also be used in homes and not only in school settings. The experience of teaching method companies is therefore essential to the future plan. | ||

| Line 735: | Line 741: | ||

The robot needs to be made commercially available and also attractive. In order to achieve this, the implementation of Rubby starts at small-scale. It will be used in a small group; in this stage the users will be questioned about their experience and about required improvements. In this way, an evaluation of the preliminary state of the robot is made. These experiences and improvements on the robot based on the recommendations will be used to convince the stakeholders (parents and schools, but also teaching method companies and the education ministry) to invest in the robot. | The robot needs to be made commercially available and also attractive. In order to achieve this, the implementation of Rubby starts at small-scale. It will be used in a small group; in this stage the users will be questioned about their experience and about required improvements. In this way, an evaluation of the preliminary state of the robot is made. These experiences and improvements on the robot based on the recommendations will be used to convince the stakeholders (parents and schools, but also teaching method companies and the education ministry) to invest in the robot. | ||

Concerning the financial aspect, since the robot must not become too expensive for parents (as becomes clear from the results of the survey) nor for schools who want to invest in it, a loan system is introduced by which schools can invest in Rubby. In the long term, this | Concerning the financial aspect, since the robot must not become too expensive for parents (as becomes clear from the results of the survey) nor for schools who want to invest in it, a loan system is introduced by which schools can invest in Rubby. This can be paid for from the corona education subsidy that is introduced by the Dutch government to catch up. In the long term, this will result in an increased general willingness to invest in the robot. | ||

For parents that work at home for a company (due to the corona measures), the investment of them in the robot will be supported by that company. These companies will partially pay for the robot. After all, this will also benefit them, because parents will then be able to work better from home and thus be more productive. | For parents that work at home for a company (due to the corona restricted measures), the investment of them in the robot will be supported by that company. These companies will partially pay for the robot. After all, this will also benefit them, because parents will then be able to work better from home and thus be more productive. | ||

Ultimately, when the robot is used on a larger scale, production costs will also drop, and hence it will become more and more interesting for more users. | Ultimately, when the robot is used on a larger scale, production costs will also drop, and hence it will become more and more interesting for more users. | ||

| Line 1,221: | Line 1,227: | ||

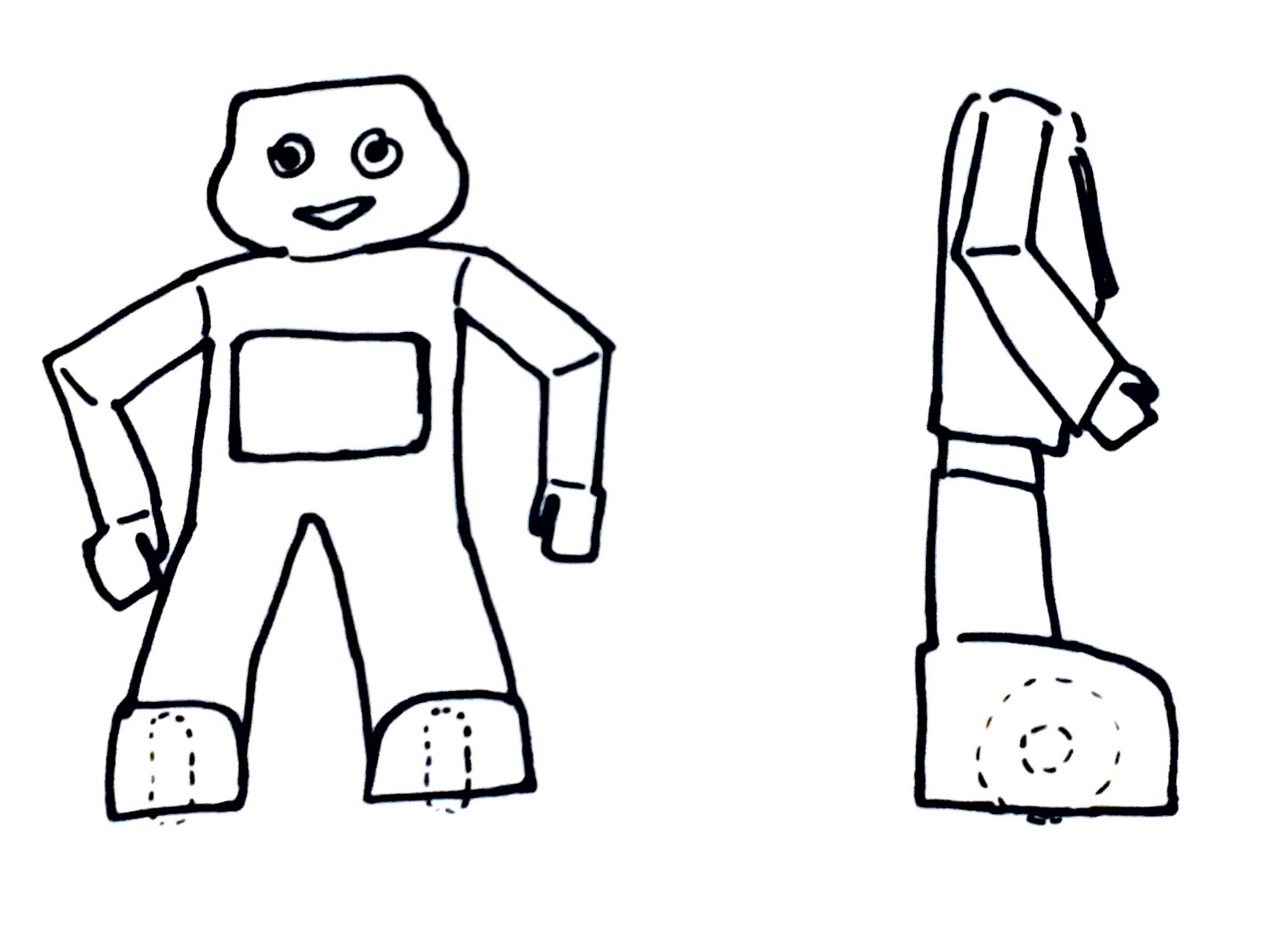

==Appendix E: Sketches external design== | ==Appendix E: Sketches external design== | ||

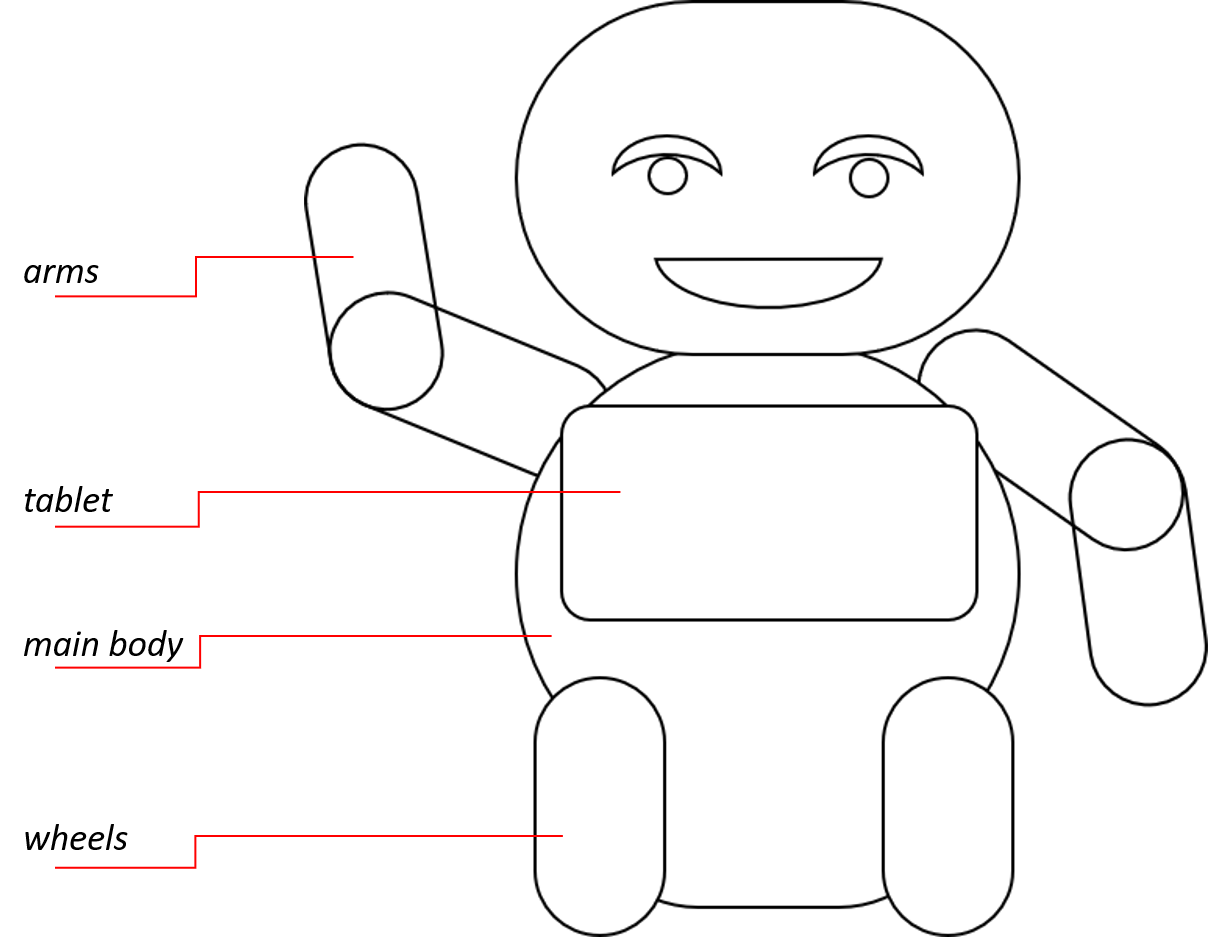

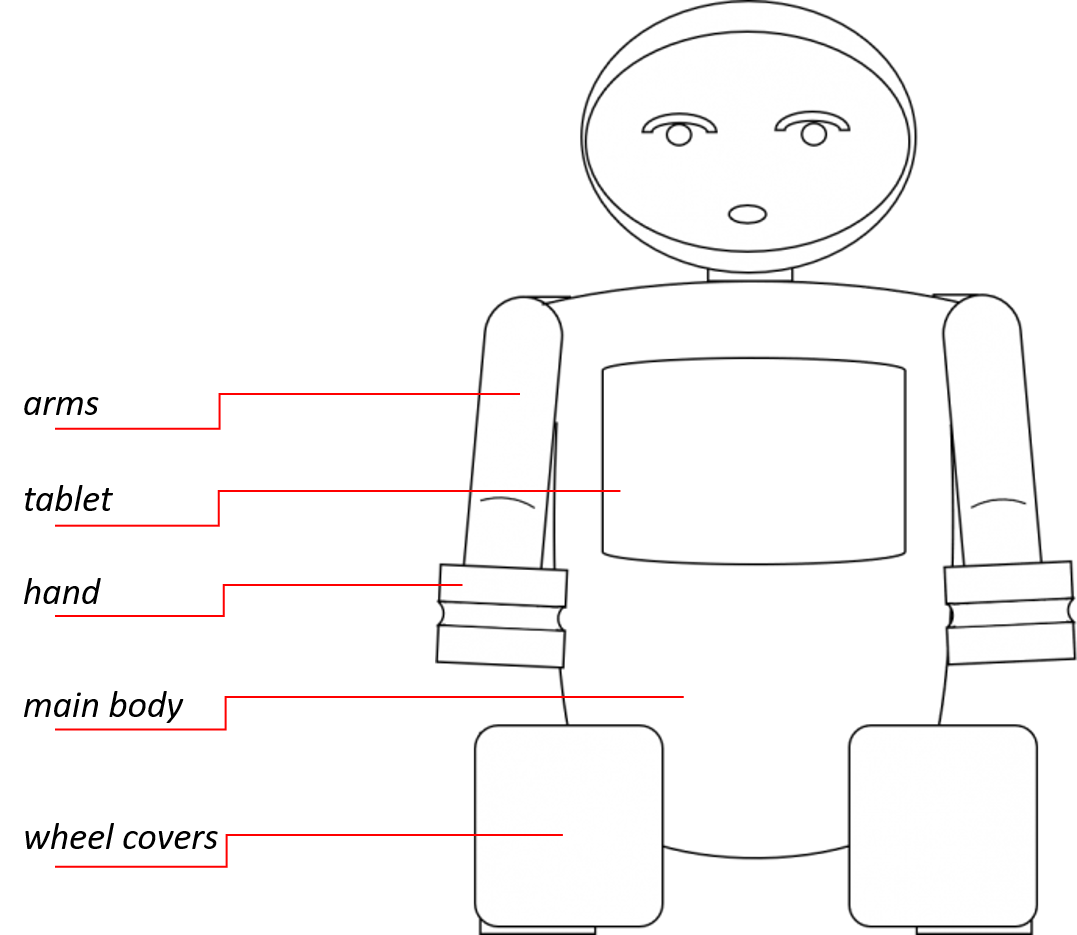

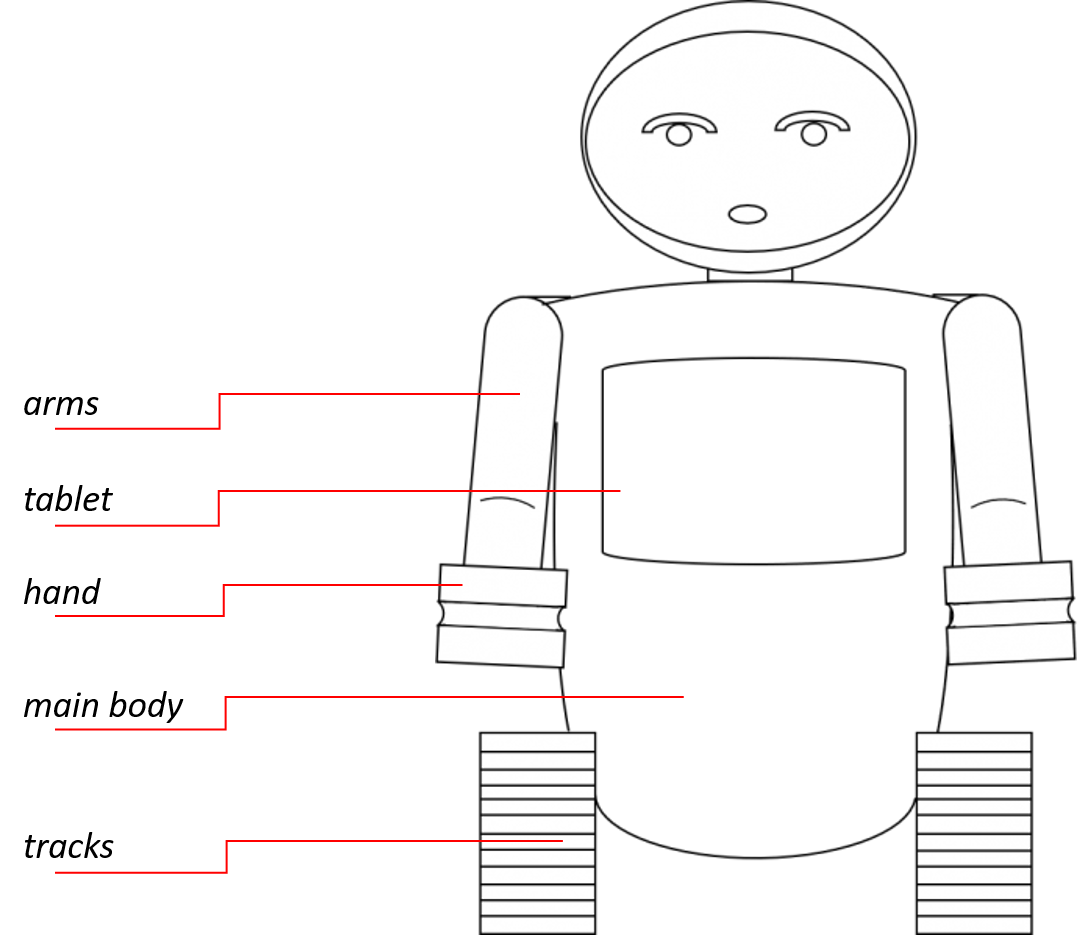

With respect to the earlier determined requirements, several sketches and outlines are made to visualize possible design solutions. In this appendix, all these sketches of the robot are shown: | |||

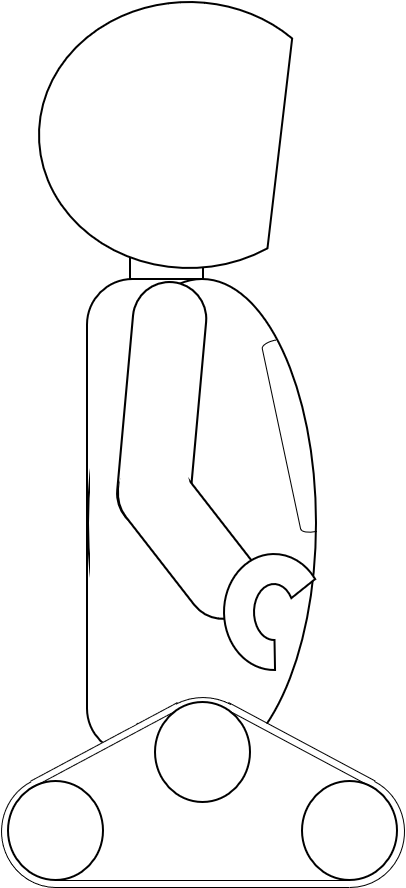

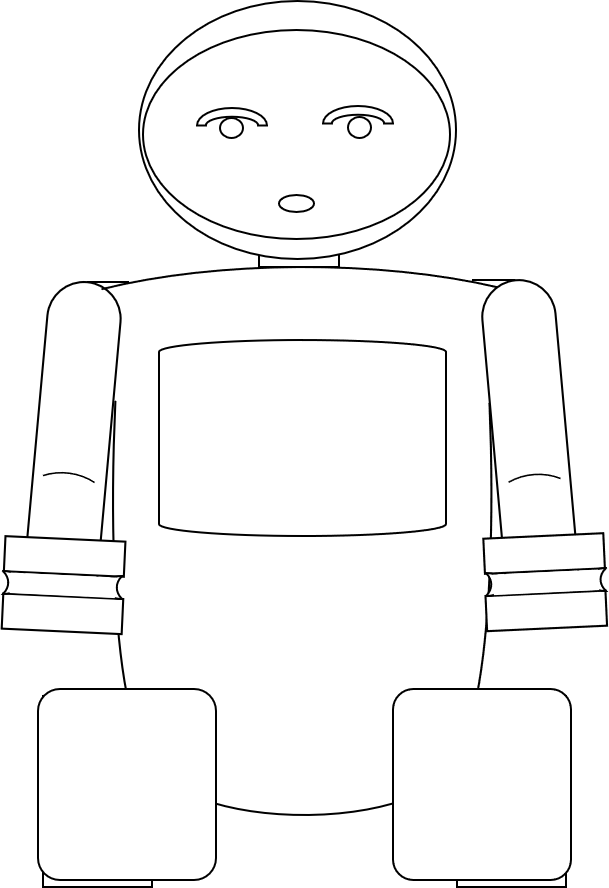

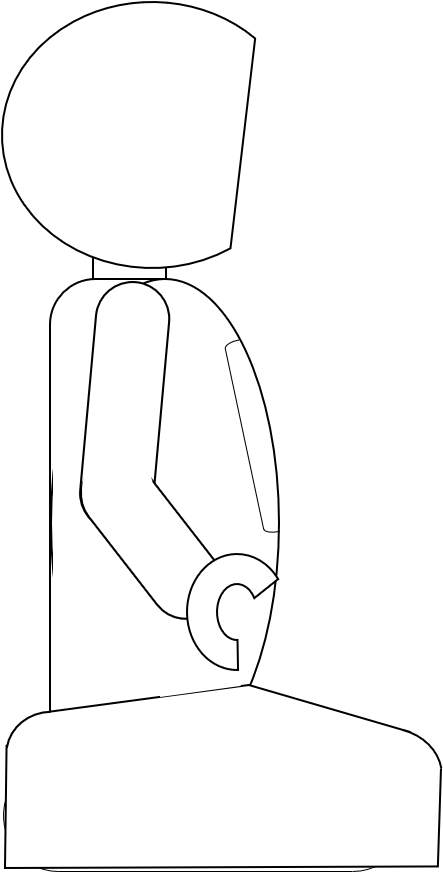

[[File:Rubby1.png|300px|thumb|left|Sketch 1, front view]] [[File:Rubby2.png|110px|thumb|centre|Sketch 1, side view]] | |||

[[File:Rubby3.png|300px|thumb|left||Sketch 2, front view]] [[File:Rubby4.png|200px|thumb|centre||Sketch 2, side view]] | |||

[[File:Rubby5.png|300px|thumb|left|Sketch 3, front view]] [[File:Rubby6.png|220px|thumb|centre|Sketch 3, side view]] | |||

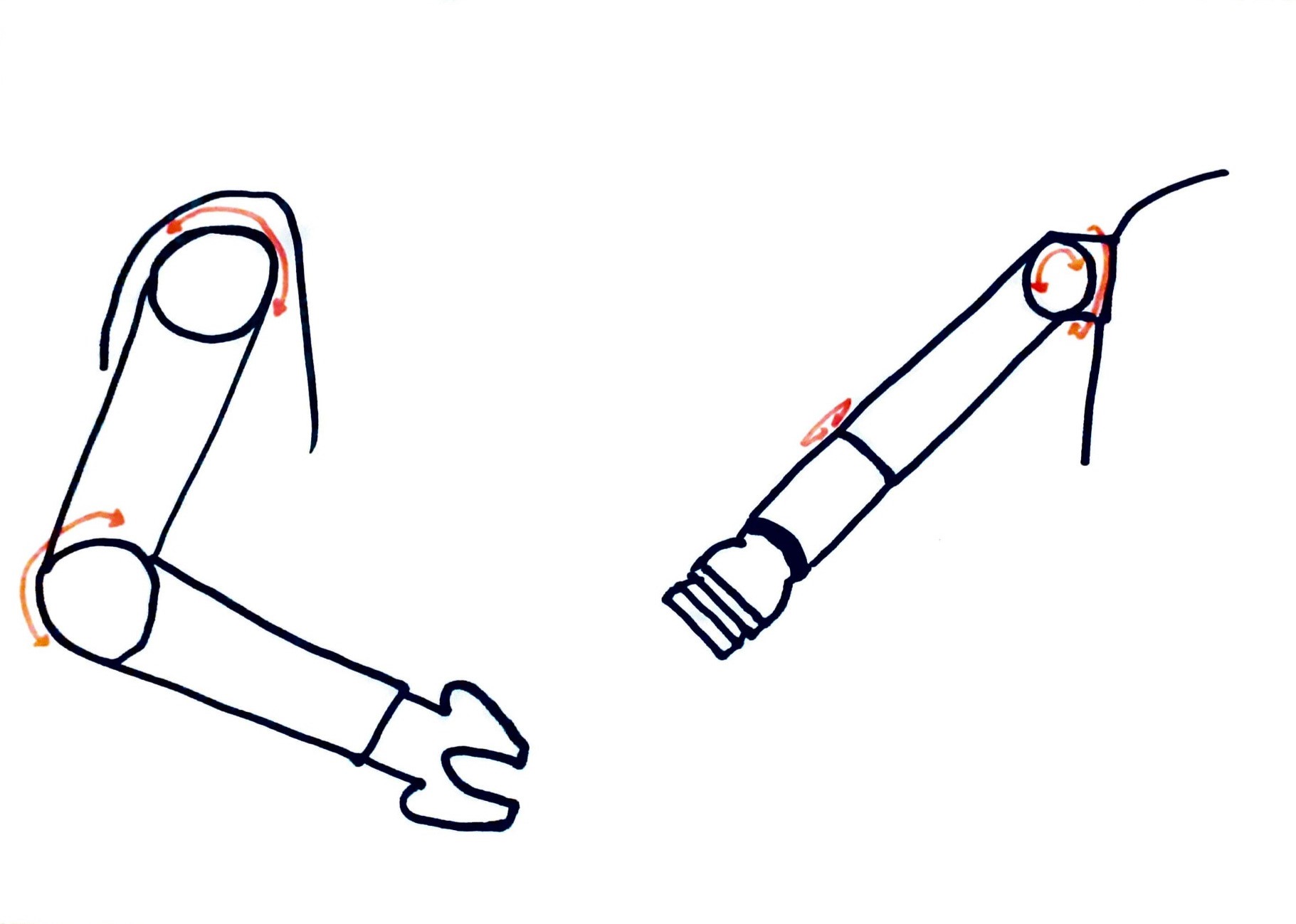

[[File:Rubby7.jpeg|500px|thumb|left|Sketch of arm, side and front view]] | |||

[[File:Rubby8.jpeg|500px|thumb|center|Sketch 4, front and side view]] | |||

[[File:Rubby10.jpeg|500px|thumb|left|Sketch 5, front and side view]] | |||

[[File:Rubby9.jpeg|300px|thumb|center|Sketch 6, front view]] | |||

<br /> | |||

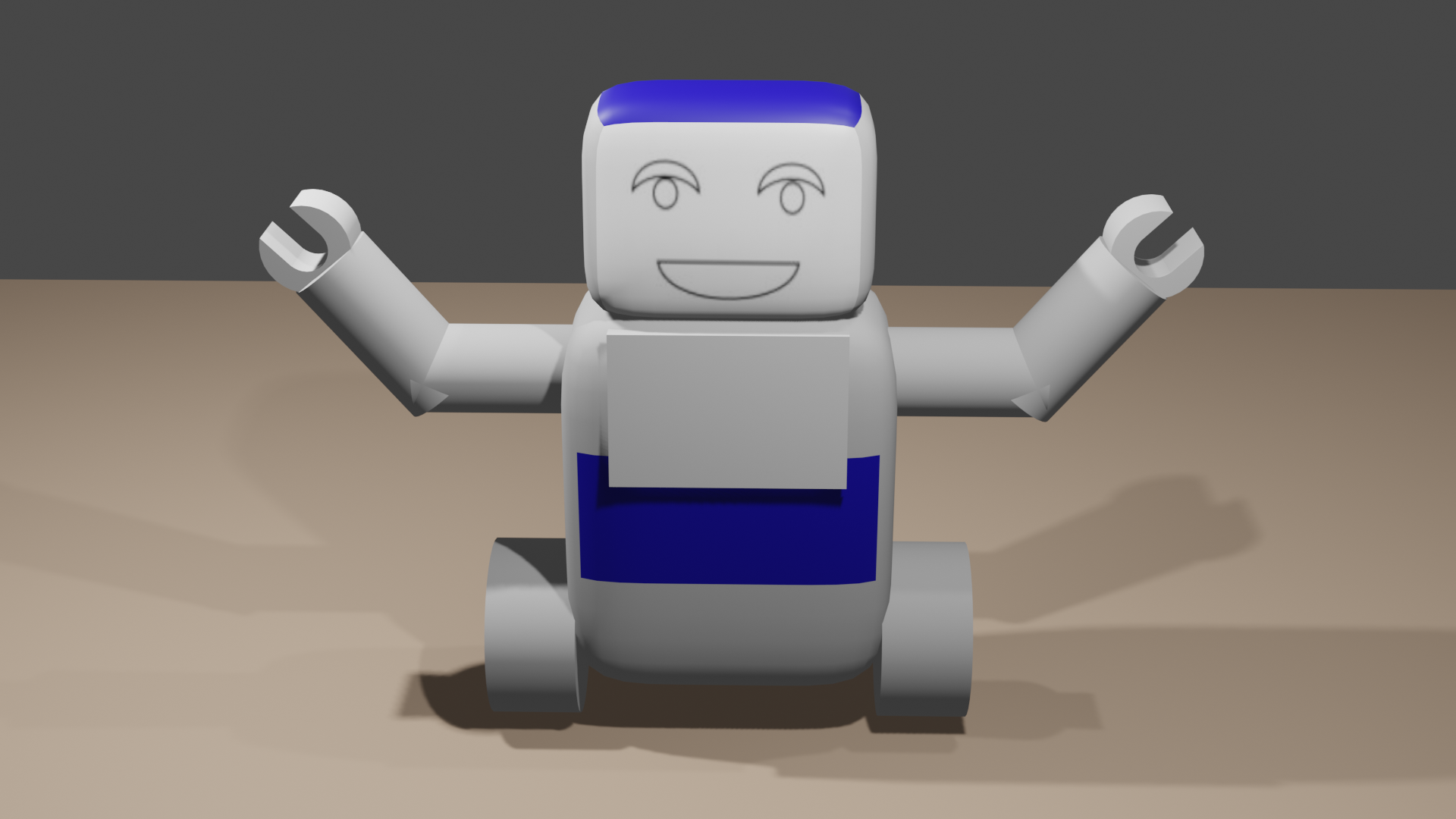

==Appendix F: Designs in Blender== | |||

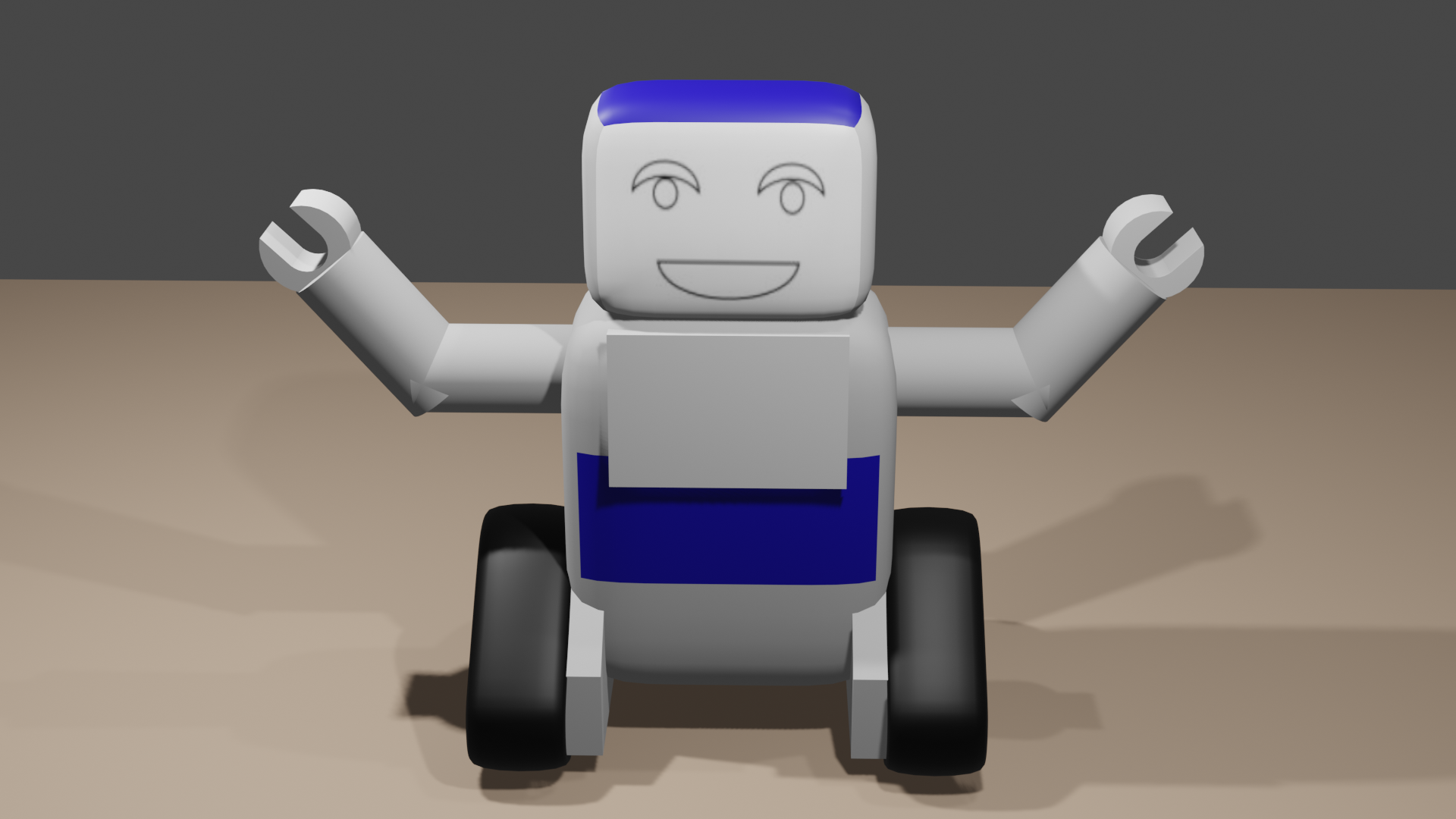

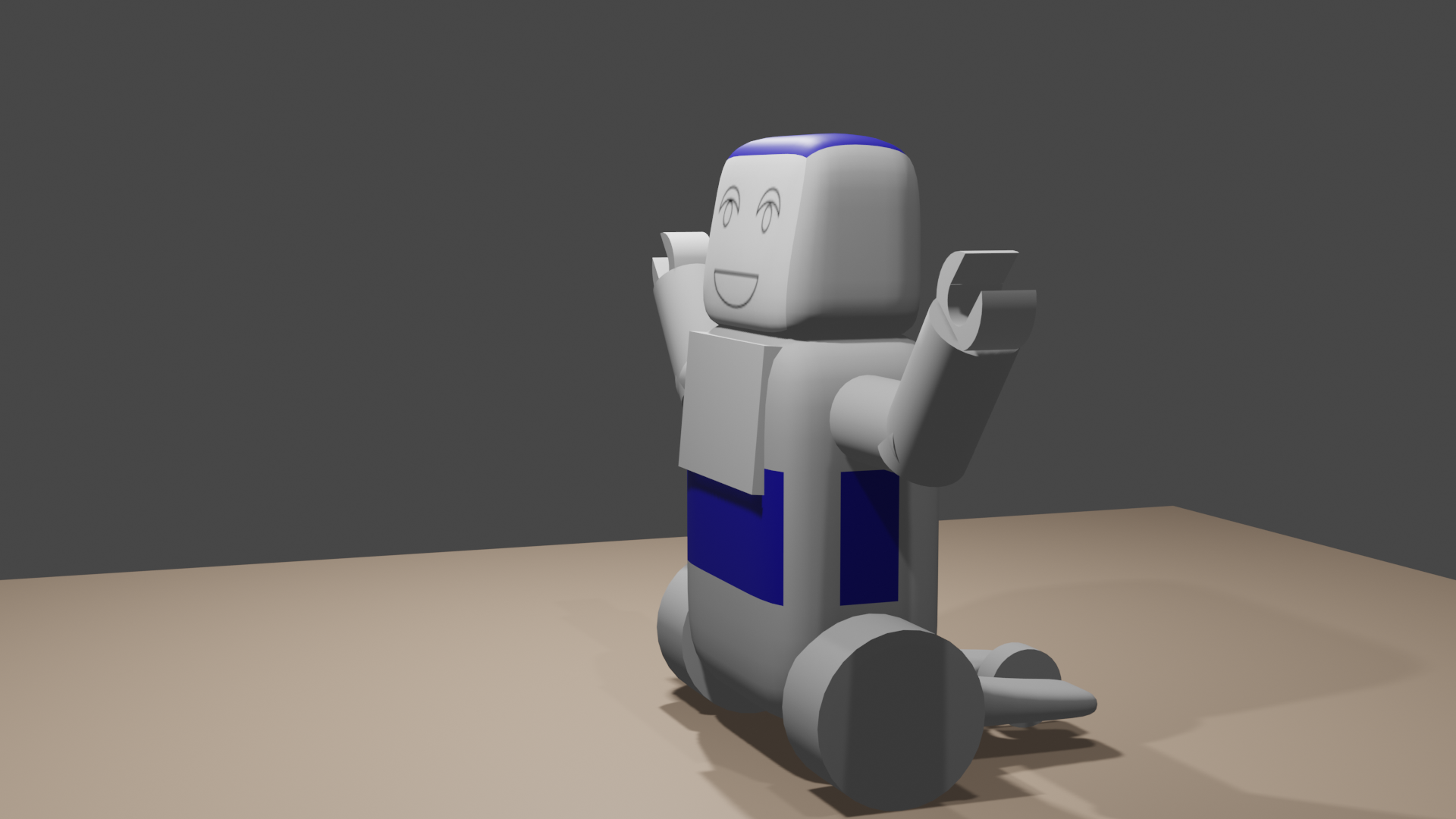

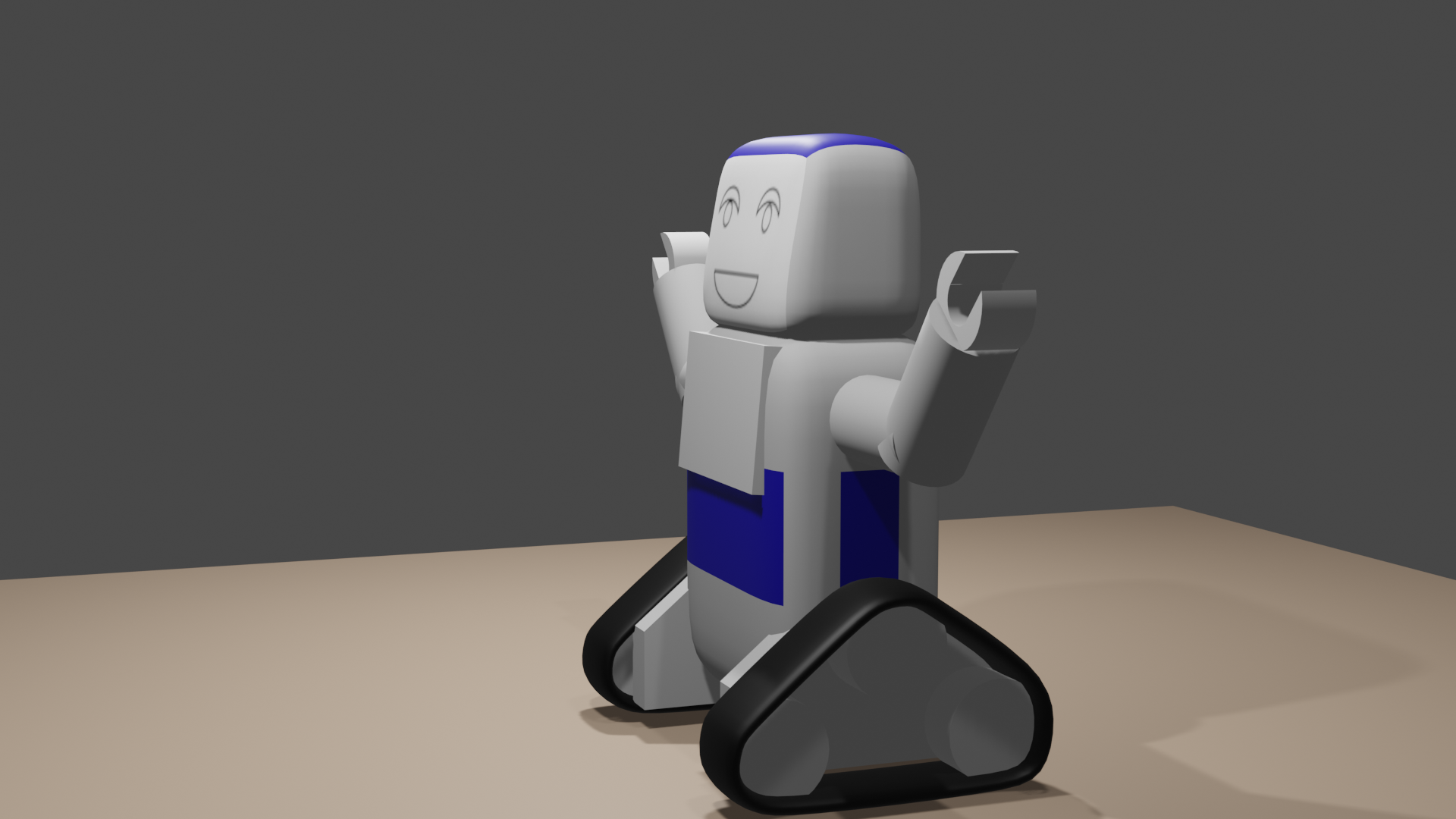

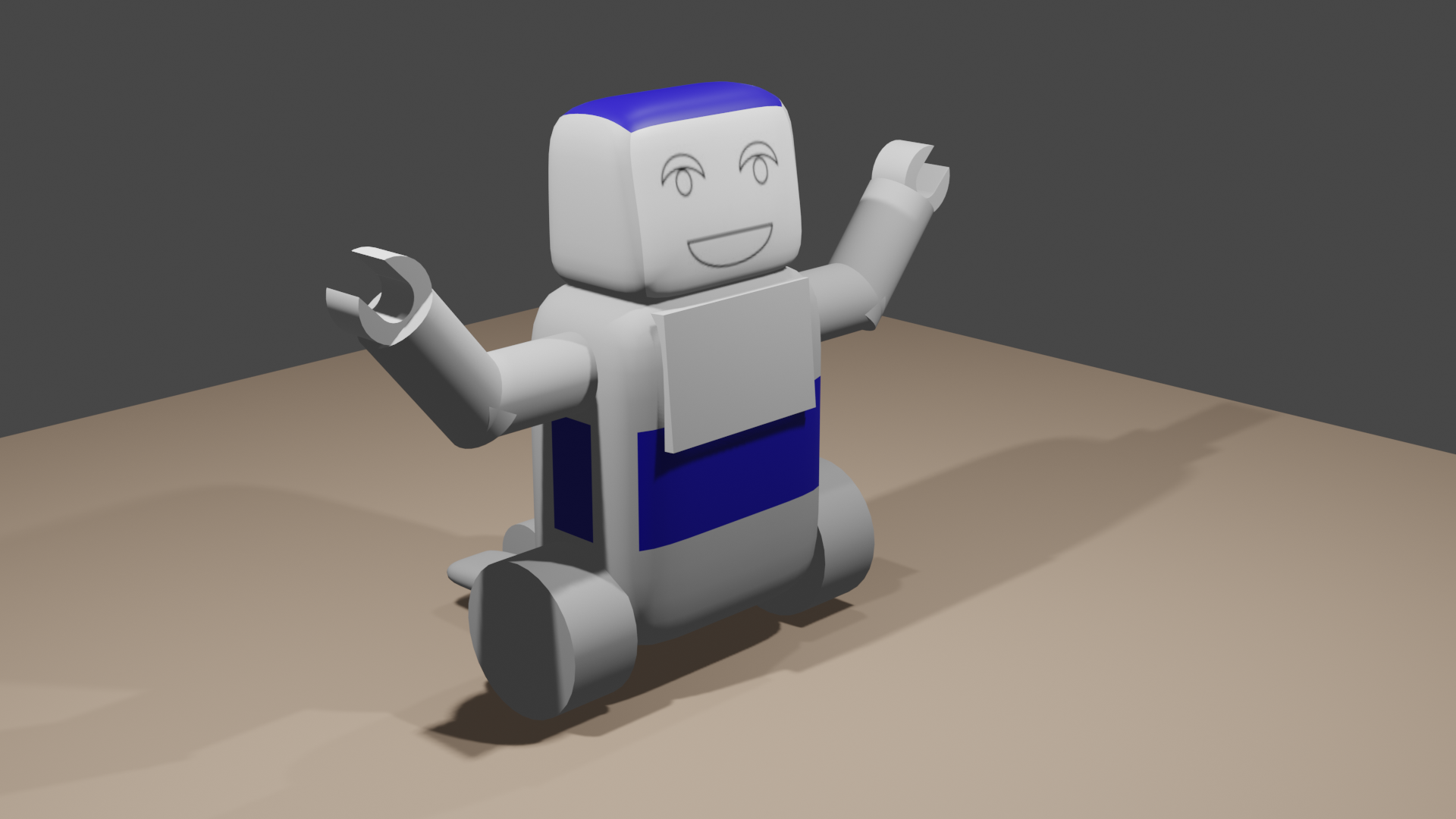

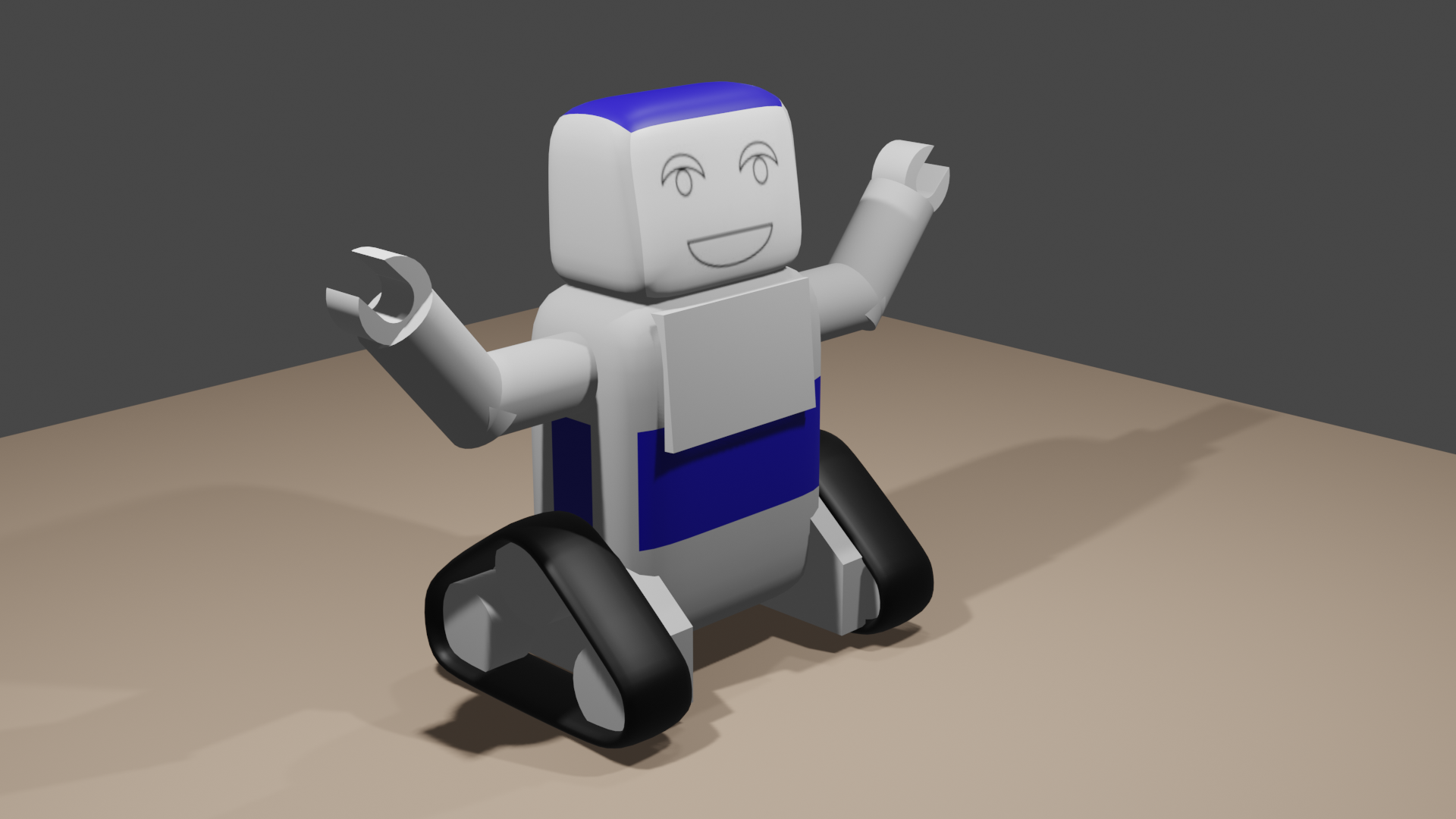

The figures in this appendix show several views of the preliminary designs, created in Blender. | |||

[[File:MicrosoftTeams-image.png|380px|left|thumb|Preliminary design 1, front view]] [[File:MicrosoftTeams-image_(5).png|390px|right|thumb|Preliminary design 2, front view]] | |||

[[File:MicrosoftTeams-image_(1).png|380px|left|thumb|Preliminary design 1, side view]] [[File:MicrosoftTeams-image_(3).png|390px|right|thumb|Preliminary design 2, side view]] | |||

[[File:MicrosoftTeams-image_(2).png|380px|left|thumb|Preliminary design 1, iso view]] [[File:MicrosoftTeams-image_(4).png|390px|right|thumb|Preliminary design 2, iso view]] | |||

<br /> | |||

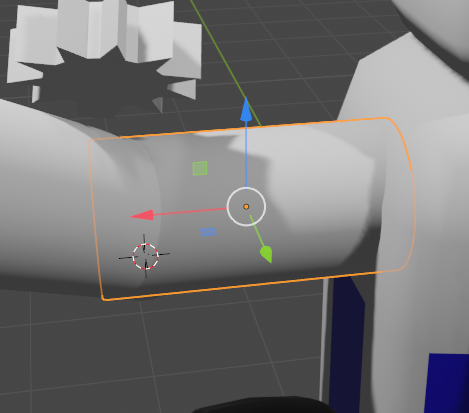

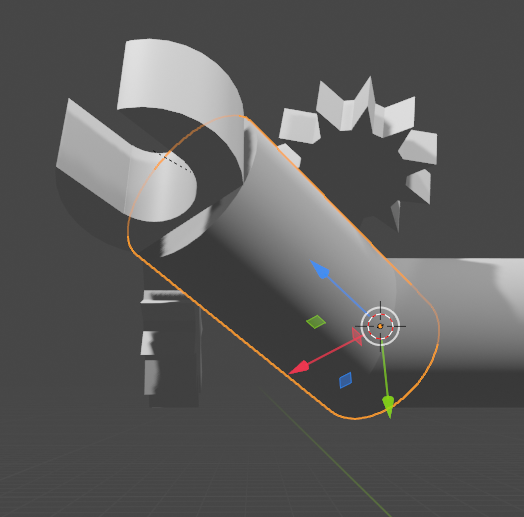

==Appendix G: Visualization of some design aspects== | ==Appendix G: Visualization of some design aspects== | ||

In this section, a few design aspects of the final design are shown. | |||

[[File:arm1.png|400px|thumb|left|Design aspect: Rotation of inner arm along red axis]] | |||

[[File:arm2.png|355px|thumb|centre|Design aspect: Rotation of outer arm along red axis]] | |||

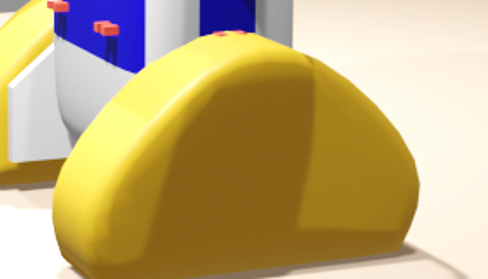

[[File:cover1.png|335px|thumb|left|Design aspect: Covers around tracks for safety]] | |||

[[File:cover2.png|400px|thumb|centre|Design aspect: Improved and sleek covers around tracks]] | |||

==Appendix H: Progress on simulating Rubby in simscape == | ==Appendix H: Progress on simulating Rubby in simscape == | ||

[[File:URDF_snip.PNG|200px|right]] [[File:rubby_urdf_output.PNG|200px|right]] | |||

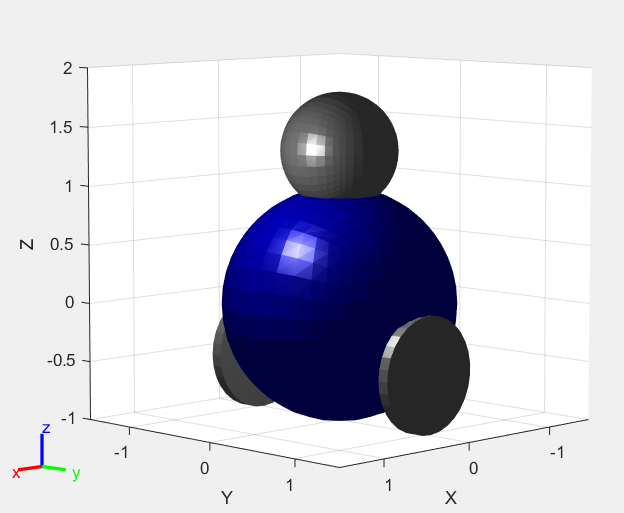

After looking some more into the robotics toolbox in matlab, I found out that it is quite hard to model a mobile robot with this toolbox. This is due to the fact that this toolbox generates a RigidBodyTree model from a robot. This model basically looks like a tree, so it starts with a fixed (!) base and separate limbs can move with respect to that base. This works great for robot arms that stay in one place, but I was not able to get it to work with our robot. | After looking some more into the robotics toolbox in matlab, I found out that it is quite hard to model a mobile robot with this toolbox. This is due to the fact that this toolbox generates a RigidBodyTree model from a robot. This model basically looks like a tree, so it starts with a fixed (!) base and separate limbs can move with respect to that base. This works great for robot arms that stay in one place, but I was not able to get it to work with our robot. | ||

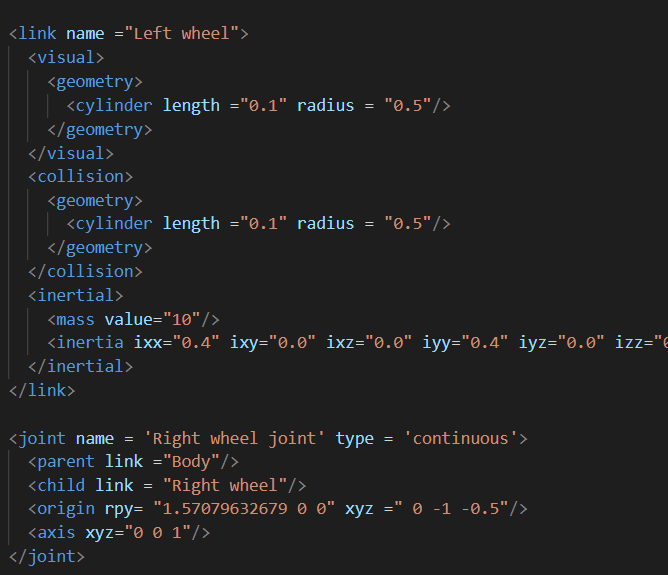

I therefore went back to Simscape in matlab. Simscape can be used to model moving robots. So I started off with that. I checked the kind of files that can be imported by simscape and ended up with making a .urdf file of a rough version of rubby. It looks nowhere like our robot, but it has similar kinematics and that is what matters for now. The .urdf file that was generated looks as follows: | I therefore went back to Simscape in matlab. Simscape can be used to model moving robots. So I started off with that. I checked the kind of files that can be imported by simscape and ended up with making a .urdf file of a rough version of rubby. It looks nowhere like our robot, but it has similar kinematics and that is what matters for now. The .urdf file that was generated looks as follows: | ||

This little snippet of code shows the two most important things in a .urdf file: Links and joints. Links specify different solid bodies in the model and joints connect the two. The actual file is about 120 lines long, so it is no use showing that here, but when importing this file into matlab, the result can be seen in the figure above. | This little snippet of code shows the two most important things in a .urdf file: Links and joints. Links specify different solid bodies in the model and joints connect the two. The actual file is about 120 lines long, so it is no use showing that here, but when importing this file into matlab, the result can be seen in the figure above. | ||

| Line 1,270: | Line 1,344: | ||

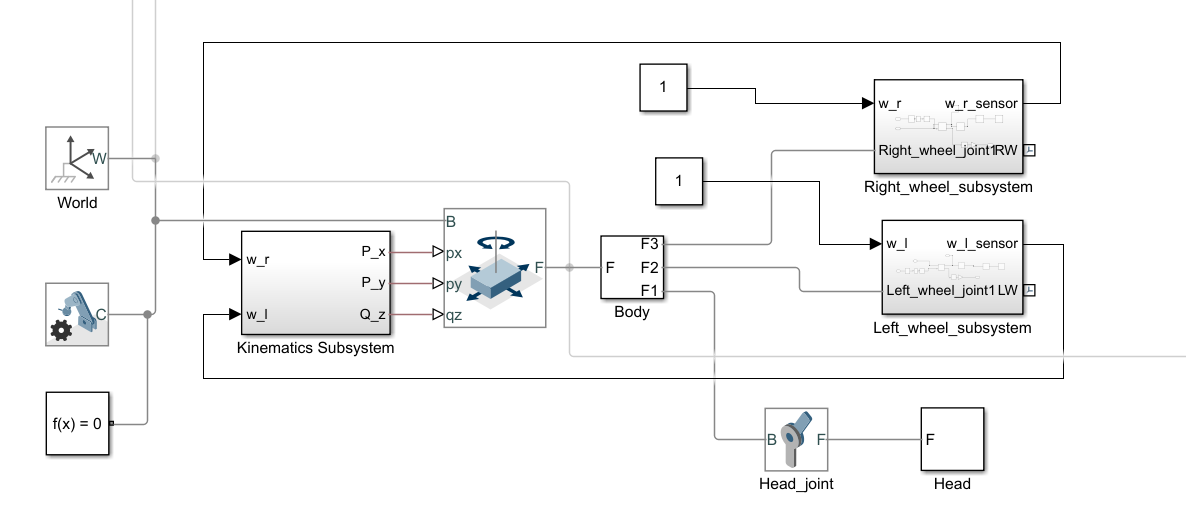

After this, it was time to import the robot in simscape and to make it move. The first part was making the generated urdf file move the way we wanted to. The simscape model for this looks as follows: | After this, it was time to import the robot in simscape and to make it move. The first part was making the generated urdf file move the way we wanted to. The simscape model for this looks as follows: | ||

[[File:Simscape_only_movement.PNG]] | [[File:Simscape_only_movement.PNG|400px]] | ||

Here, the two constants are the inputs for the angular velocity of the right and left wheel. This model resulted in the robot driving around happily. A robot moving through an empty world does not have any use for us however, so the next step is to implement it in an environment and try to incorporate path planning/obstacle avoidance. | Here, the two constants are the inputs for the angular velocity of the right and left wheel. This model resulted in the robot driving around happily. A robot moving through an empty world does not have any use for us however, so the next step is to implement it in an environment and try to incorporate path planning/obstacle avoidance. | ||

[[File:Rubby_in_room.PNG|200px|right]] | |||

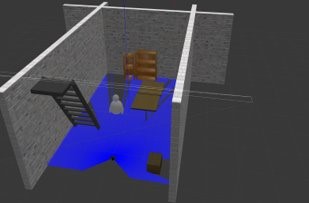

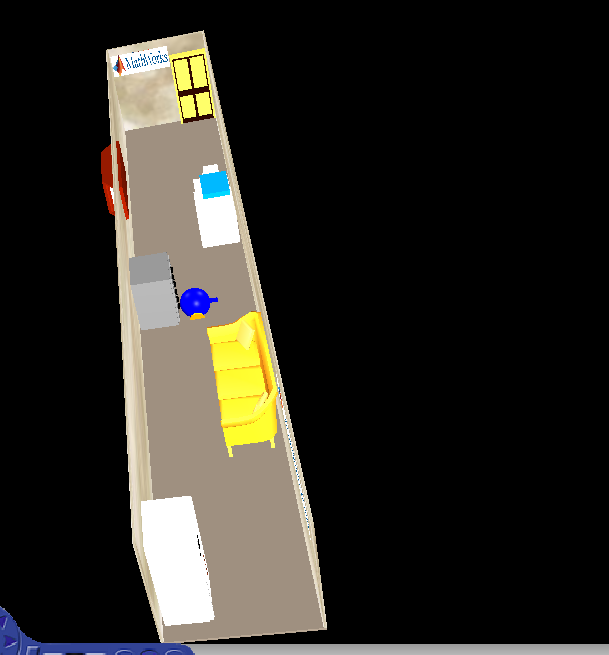

To implement the robot in a simulation environment, we have to make another file format, in this case a '''VRML''' file. This file can easily be exported from blender, so we can use the real robot model made by Paul and Jos. This can then be used in combination with a living room setting that was taken from the mathworks website. Now our robot looks as follows in the living room environment: | |||

This is still with an ugly Rubby model that I made myself, but this can be replaced with the actual model pretty easily. Now we have to make the robot actually move in this living room environment. This was done by using a VR-sink block in Simulink and providing it with inputs. The result of implementing this looks similar to the picture in the living room, only now Rubby is able to move. | This is still with an ugly Rubby model that I made myself, but this can be replaced with the actual model pretty easily. Now we have to make the robot actually move in this living room environment. This was done by using a VR-sink block in Simulink and providing it with inputs. The result of implementing this looks similar to the picture in the living room, only now Rubby is able to move. | ||

The next step is to incorporate an obstacle avoidance algorithm. This is something I tried but could not get to work yet. For this, virtual sensors have to be implemented, either in the simscape model or in the .urdf file and this has to be coupled to the environment. | The next step is to incorporate an obstacle avoidance algorithm. This is something I tried but could not get to work yet. For this, virtual sensors have to be implemented, either in the simscape model or in the .urdf file and this has to be coupled to the environment. | ||

==Appendix I: Picture user needs== | ==Appendix I: Picture user needs== | ||

| Line 1,460: | Line 1,533: | ||

|- | |- | ||

| Emma Allemekinders | | Emma Allemekinders | ||

| hours | | 12 hours | ||

| | | Wiki on path planning, make the powerpoint for the presentation with script. | ||

|- | |- | ||

| Jos Stolk | | Jos Stolk | ||

| | | 12 hours | ||

| | | Specifying and motivating of determined RPCs, creating a future plan, final work on wiki. | ||

|- | |- | ||

| Emma Höngens | | Emma Höngens | ||

| | | 11 hours | ||

| Improved the complete wiki and created a future plan | | Improved the complete wiki and created a future plan | ||

|- | |- | ||

| Hidde Huitema | | Hidde Huitema | ||

| | | 11 hours | ||

| | | Write section on local navigation. Write guidelines for parents. | ||

|} | |} | ||

==Appendix Z: Planning== | ==Appendix Z: Planning== | ||

'''Week 1''' | |||

{|border=1 style="border-collapse: collapse;" | |||

|- | |||

! '''Week''' !! Main tasks | |||

|- | |||

| Week 1 | |||

| Form groups, choose subject, problem statement, start research | |||

|- | |||

| Week 2 | |||

| Research, come up with solutions for problem statement, choose direction to go to | |||

|- | |||

| Week 3 | |||

| Elaborate research on user group, form personas, create a survey for parents, start research into possible technical options | |||

|- | |||

| Week 4 | |||

| Send out survey, start gathering information on path planning and navigation, research technical options | |||

|- | |||

| Week 5 | |||

| Data analysis of survey, start with path planning and navigation, start with preliminary mechanical design, complete user needs, start with final RPC list, describe robot, write scenarios | |||

|- | |||

| Week 6 | |||

| Complete RPC list, path planning, navigation, finalize mechanical design and bill of materials, make a software plan | |||

|- | |||

| Week 7 | |||

| Complete path planning, complete navigation, make a future plan, describe guidelines for parents, finalize wiki | |||

|- | |||

| Week 8 | |||

| Presentation, handing in wiki | |||

|} | |||

---- | ---- | ||

Latest revision as of 13:36, 30 March 2021

Group members

| Name | Student number | Department | Responsibility |

|---|---|---|---|

| Emma Allemekinders | 1317873 | Mechanical engineering | Model of path planning and software plan |

| Emma Höngens | 1375946 | Industrial Engineering & Innovation Sciences | Future plan, software plan, and wiki |

| Paul Hermens | 1319043 | Mechanical engineering | List of materials and mechanical design |

| Hidde Huitema | 1373005 | Mechanical engineering | Model of path planning and guidelines for parents |

| Jos Stolk | 1443666 | Mechanical engineering | Mechanical design and future plan |

Introduction

Problem statement

Due to the COVID-19 pandemic, a lot of people have to work from home instead of from the office. This means that their working environment has changed and in many cases is not optimal. However, the working environment is not the largest problem of working from home: for many parents, having their children at home is the main problem. Because of several corona restrictions, children are not always able to go to school, to their grandparents or to after-school care. This results in two problems, namely boredom and distraction.

First of all, boredom. When children are at home with their working parent(s) instead of school, after-school care or at their grandparents' place, they get bored easily. They should be silent and not distract their parents, but what should they do then? Besides, when they cannot go to school, children miss educational content as well. This problem should be solved.

Secondly, distraction. When children are at home while their parents are working, the parents cannot concentrate well. Children need attention and when they get bored, the first people they go to are their parents. This means that parents with children at home are not able to concentrate, attend meetings and meet their deadlines, so it is hard for them to combine private life with work. This should be solved. Therefore, a child-friendly robot is needed that entertains children and also teaches them new things.

Execution

This robot solution is created for the course Project Robots Everywhere (0LAUK0). In this project, group 9 will mainly focus on the first outline of the mechanical design and the navigation of the robot, and on what should be done in the future to develop a working robot.

During the project, the group will first look at the state of the art via literature. Then, the user group is investigated via literature and a survey among parents. Together with the state of the art, this allows the robot to be specified and the RPCs to be stated. The last step is to create and deliver the end products.

Deliverables

At the end of the project Robots Everywhere, the following products will be delivered by group 9:

| Deliverables |

|---|

| Local navigation model: The model of path planning of the robot and the local navigation to move toward its target. |

| Mechanical design: Design of a child friendly robot based on literature and a survey. |

| Bill of materials: List of the materials and their costs, to arrive at a final selling price and to give insight into what is needed to build the robot. |

| Software plan: The end product of the software plan will be described. This plan is needed for future development of the robot. |

| Future plan: Description of the involved stakeholders and the development steps needed to take the robot to the commercial market. |

| Guidelines for parents: Since the robot is used by younger children, parents need a description of how to use it in a safe way. |

State of the Art

In this chapter, literature about existing robots and their features is discussed. As the robot in this project is focused on keeping children busy when they, for example, cannot go to school, educational and entertainment functions will be discussed.

Entertainment and Education

Entertainment

Entertainment of children through a robot instead of "normal" toys can be done by making use of an interactive interface. This interface can be a tablet or screen, voice commands, movements, or music (like singing).

Research about the use of tablets as entertainment material for children shows that children can be entertained rather easily by a tablet (Oliemat, Ihmeideh & Alkhawaldeh, 2018). Voice capabilities could be extra entertaining and make a more natural interaction between robot and child possible (Meyns, van der Spand, Capiau, de Cock, van Steirterghem, van der Looven & van Waelvelde, 2019). Also, besides stimulating musical hearing, music stimulates natural exercise.

Attention

Apart from the entertainment function itself, the robot should draw the attention of the child that uses it. As children have a short attention span, this should be taken into account during the development process: the robot should check whether the child is still paying attention. This awareness can be used to switch up the current activity. If, for example, children who are playing games lose interest, the robot can detect this from the child's expressions and body language, and the activity can be switched to another one. The robot can then ask if the child would like to do something else.

Children are able to use a tablet independently from a very young age (Flewitt, Messer & Kucirkove, 2014). The child will therefore be able to control the tablet by themselves and will therefore be able to choose their activity independently and according to their own attention span. When the robot senses that the child is fully distracted from the tablet however, it will prompt the child to do another activity with the robot. It is stated in (Torta, van Heumen, Cuijpers & Juola, 2012) that this is most easily achieved by sound cues. Waving gestures proved to be the second most effective means of attracting attention. This can be achieved by moving the arms attached to the robot.

Education

There are two sides of the educational aspects that should be discussed, namely the educational purpose and the working of educational robots.

The potential benefits of using robots for educational purposes have been shown in research by Ruiz-del-Solar (2010), Kanda, Hirano, Eaton and Ishiguro (2004), and Tanaka, Cicourel and Movellan (2007). It is therefore desirable that a robot nanny can also carry out educational tasks. The following tasks will be carried out by Rubby:

- Talking to the child. It is shown in research of Weisleder and Fernald (2013) that speech directly targeted to children plays a large role in their future development. This is a task that can easily be carried out by the robot. It can read books and tell stories to the child to stimulate the development of their vocabulary.

- Games for development of memory and numerical skills. Passolunghi and Costa (2014) have shown that playing specific games can greatly improve a child’s working memory and numerical skills. These games can, for example, be implemented on a tablet and played by the child.

- Check writing. Amorim, Jeon, Abel, Felisberto, Barbosa and Dias (2020) argue that playing games on a platform similar to Squla significantly increased the reading and writing skills of preschoolers. This is a task that can also be carried out by the robot. A tablet can be included on which the Squla games can be played, and the robot can give positive reinforcement to stimulate playing these games.

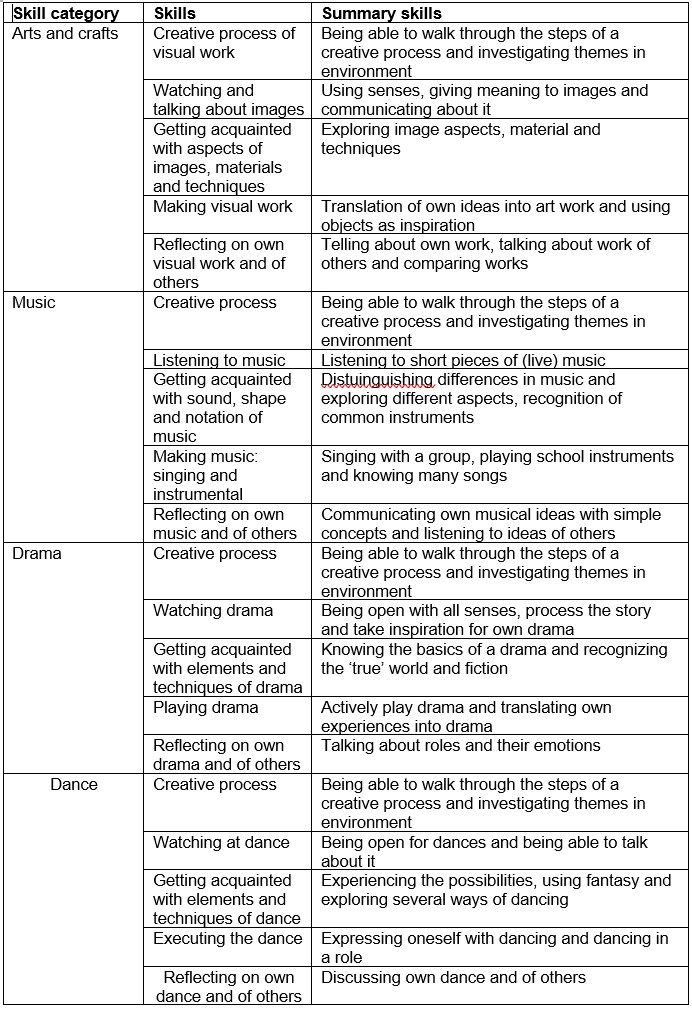

The educational aspects of robots have been investigated extensively, and this knowledge can help us in the development of our robot. In several studies, robots with such educational functions were placed in a children’s environment to investigate the impact of the robot on the children. Examples of these existing robots are Robovie, QRIO and IROBI (Tanaka, Cicourel & Movellan, 2007; Han, Jo, Park & Kim, 2005). Two Robovie robots were placed at a primary school in order to investigate whether they could form relationships with children and whether these children might learn from them. Results from this study, along with those of the studies performed with QRIO and IROBI, showed that robots are actually able to teach kids the English language (Kanda et al., 2004; Ishiguro, Ono, Imai, Maeda, Kanda & Nakatsu, 2001), can achieve strong social bonds with toddlers for a long period of time (Tanaka et al., 2007) and can increase learning interest and academic achievement (Kory & Breazeal, 2014).

A few important conditions are needed to achieve this. First of all, the more predictable the robot is, the worse the quality of interaction. This means a robot should ‘grow’ with the child in order to increase the effectiveness of learning and communication. Secondly, it is preferred that a robot is adapted very well to the personal characteristics of the user, since each child has a different way and pace of learning. Furthermore, appearance, behavior and content of the robot are the three main factors that have an impact on the robot’s effectiveness. Finally, an important note is that robots should not replace parents, tutors or teachers; they only supplement them in what they already do (Kory & Breazeal, 2014).

In conclusion, robots with an educational function have a significant added value. Children’s language skills can be improved and especially their motivation to learn increases. Verbal interaction with the child to learn words appears to be a relevant and feasible function, and playing memory games is a feature that already exists in robots (Kory & Breazeal, 2014). The extra educational function of checking writing increases the diversity of learning areas: writing is a more active process, whereas language learning is a more unconscious one.

Practical functions

Another important feature of a robot that is used by children, is safety. There are several options that could be used in the design of a robot to keep a child safe.

- Motion control for the robot, to keep an eye on the child when it is moving around. Research by Yoshimi, Nishiyama, Sonoura, Nakamoto, Tokura, Sato, Ozaki, Matshuhira and Mizoguchi (2006) shows that person-following behavior can be used to keep the child in view and therefore ensure proper functioning of the robot at all times.

- It should be possible to move slowly so that the robot is not dangerous. This, in combination with a soft material, will drastically decrease the chance of dangerous operation. Research by Taufatofua, Heath, Ramirez-Brinez, Sommer, Durantin, Kong, Wiles and Pounds (2018) shows that faster movement on its own is not as bad; it is a higher mass combined with fast movement that creates a worse impact on contact with a child. If the drive mechanism is moved towards the core of the robot, the weight of the arms can be reduced, which requires less torque and will therefore be safer.

- Observe dangerous objects in close range of the child and notify caregivers. This is done to increase the safety of young children. As children are very curious, they can interact with sharp, hot or other kinds of dangerous objects without knowing it. If the parent is busy with other activities, they would not notice it without a warning from the robot.

- Monitoring the child is also important. Parents should always be able to know if their child is safe. A logical solution for this would be a camera, but this could bring privacy issues. Biosignals to detect if a child is falling, or voice technology, could also be an option.

User

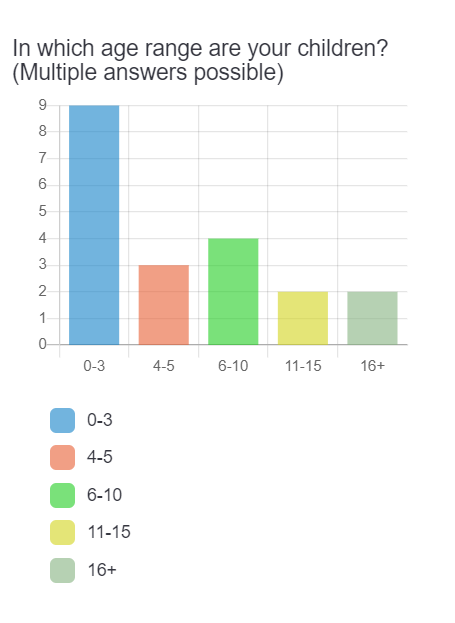

In this chapter, first the target group will be defined and the specifications of these users will be given. As a result, a table of user needs is given. The primary user group consists of Dutch children of 4 and 5 years old who go to kindergarten. The needs of the secondary user group, the parents of these children, are also included in this table.

User specifications

The primary users are Dutch children of 4 and 5 years old who go to kindergarten. The educational and entertainment content depends on the nationality and age group. This group is the initial group for the development of this robot, but in further development after this project other countries and age categories could also be included. The secondary users are the parents of the primary users.

Personas

To get a better feeling for the user group and to be able to identify with it, personas are described based on data from Dutch research institutes. David and Ava are described below. In Appendix A you can find the persona descriptions of Melissa and Noah, a single mother with a 5-year-old son.

The majority of Dutch households with children are led by two parents (Volksgezondheidenzorg.info, 2019). To get a feeling for the users of the robot that is developed in this project, the following personas give an example of a two-parent household.

David

David is a man of 44 years old. He is married to Karin, a general practitioner of 39 years old. Together, they have two children: James, a boy of 8 years old, and Ava, a girl of 4 years old. They live in a family home in a village surrounded by meadows.

David works from home, at his home office, four days a week. He is a logistics consultant and works with companies from all over the world. He has a lot of conference calls each week and there is also a lot of paperwork to be done. Ava, his little daughter, is at home on Friday and on Wednesday afternoon. She can be disruptive, because she wants attention from her father and she is not able to keep herself busy with hobbies like James is. Kindergarten is not possible for Ava as this only takes place a few villages away. David hopes that there will be a technology that supports him in caring for Ava, so he can continue working.

Ava

Ava is a girl of 4 years old. She lives in a family home with her brother James and her parents David and Karin. She goes to school 4 days a week. On Friday she is at home with her father, because she and the other toddlers of her school have that day off. Ava likes to play with dolls and cars, to paint, and to dance with her family members.

When she is at home on Wednesday afternoon and Friday, she feels bored. She does not know what to do on these days. James goes to his friends or the hockey club, or is at school, so she cannot play with him. On these days she wants to play with daddy, but he has to work all day. When she goes to her father, he is talking to other people on the screen and he has no time for her. Sometimes he gets angry when she chats with him during work hours.

On these days, she has to keep herself busy the whole day. David, her dad, puts her in the living room or in his office with her toys. She always plays by herself with puzzles, reads her books and plays with toy trains. After a while, she asks her father if she can watch television and he always says yes so she does not disturb him. However, she thinks that watching television for a long time is boring. So she hopes that there is someone or something that can play with her when she is at home alone with her father.

Development children

In this section the development of children from 3 to 6 is described per year following guidelines for parents written by Engelhart, Win, Vinke and de Win (2010). The development of children is categorized into physical, intellectual, attachment, social, language, and play development.

Development 3-4 year

When children enter kindergarten they are 4 years old. In the year before, they go through some important developments. To give an impression of the state of development of children at the moment they can start using the robot, this age group is described as well.

First of all, physical development. Children of 3-4 years are able to jump 40-60 cm far, climb stairs on their own, open and close a door on their own, cycle with training wheels, and climb on a climbing frame. They cannot yet suddenly turn or stop a movement, but they become more skilful and independent. Secondly, intellectual development. Children can make harder puzzles, lay pictures in the right order, concentrate longer, take an interest in shapes and series, start asking more questions, and play role-playing games with other children. Their imagination grows, and they start to like drawing, crafting, music, and counting. Thirdly, attachment development. Children have enough language knowledge to understand their parents, are not fearful of their parents leaving, and can regulate tension. Fourthly, social development. Children gain insight into what they can and cannot do well and are able to build confidence with positive stimulation. Fifthly, language development. Children like learning new words and trying them out, ask many 'why' questions, and overapply grammatical rules. Last but not least, play development. Children can cut with scissors, pour a drink without spilling, think about what they want to draw, draw recognizable shapes instead of scribbling, and imitate drawing a circle.

Development 4-5 year

In 'groep 1' (grade 1) children are 4 and sometimes 5 years old.

First of all, physical development. Children of 4-5 years have good general body movement skills, change position often (running, climbing, jumping, balancing), can work with a computer mouse, and can catch a ball. Secondly, intellectual development. Children go to grade 1, can talk, know colors, and start writing their own name. Thirdly, attachment development. Children are already quite independent at that age. Fourthly, social development. Children get to know rules at school that differ from those they know at home, the socialization process starts, and they can estimate what they are able to do. Fifthly, language development. Children make longer sentences and their pronunciation becomes good. Last but not least, play development. Children can play outside on their own, can play memory, start drawing people and shapes, and do role playing.

Development 5-6 year

In 'groep 2' (grade 2) children are 5 and sometimes 6 years old.

First of all, physical development. Children of 5-6 years can throw and catch a ball, become competitive, can jump rope, start practicing a sport, and can imitate movements well. Secondly, intellectual development. Children go to grade 2, are interested in their history and their future, have insight into traffic, have higher self-esteem, and are able to spell words of 3 to 4 letters. Thirdly, social development. Children want to be independent. Fourthly, language development. Children are quite good with words and can have a proper conversation with someone else without too many mistakes. Last but not least, play development. Children can work on a drawing for multiple days.

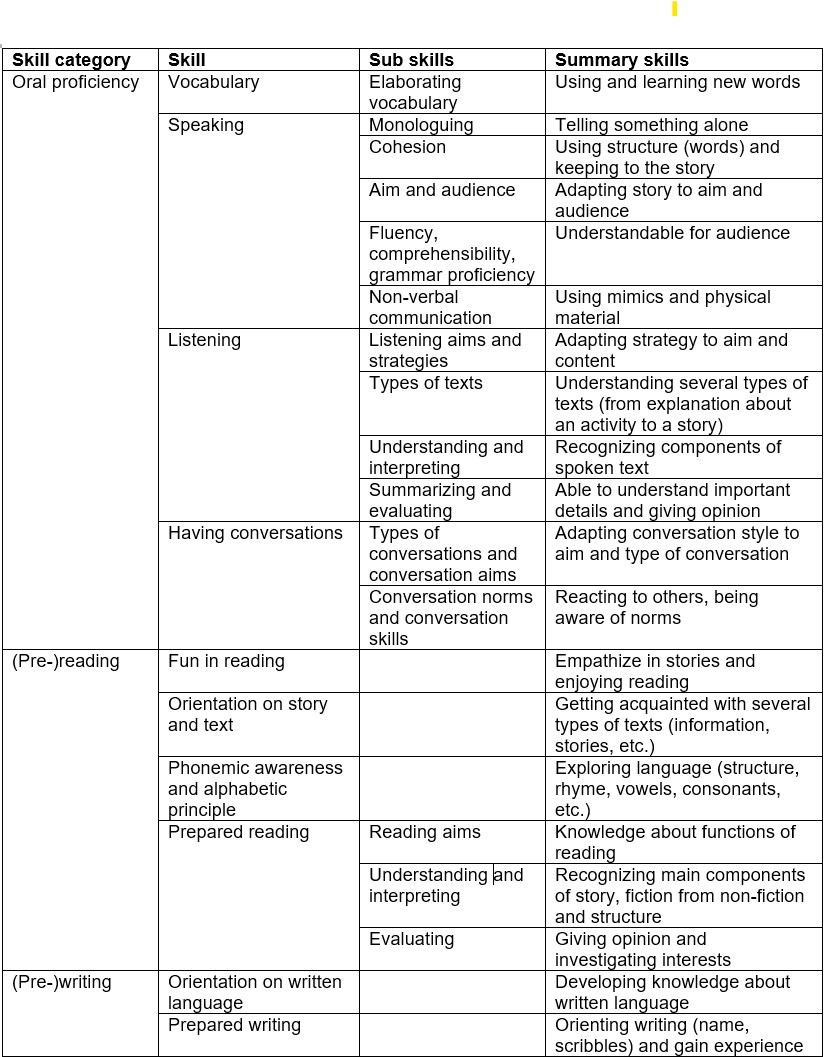

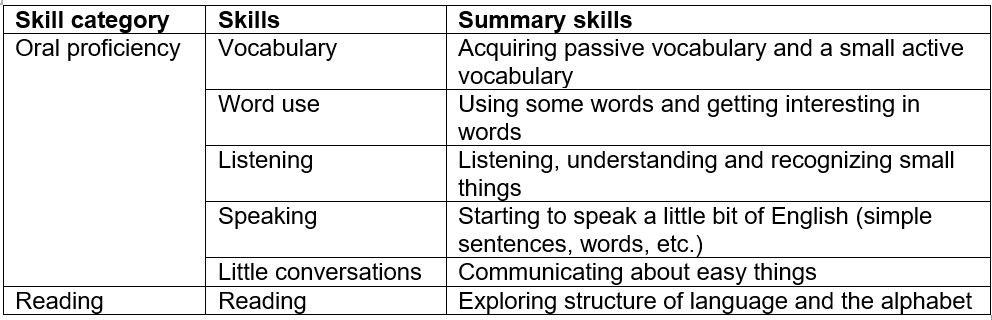

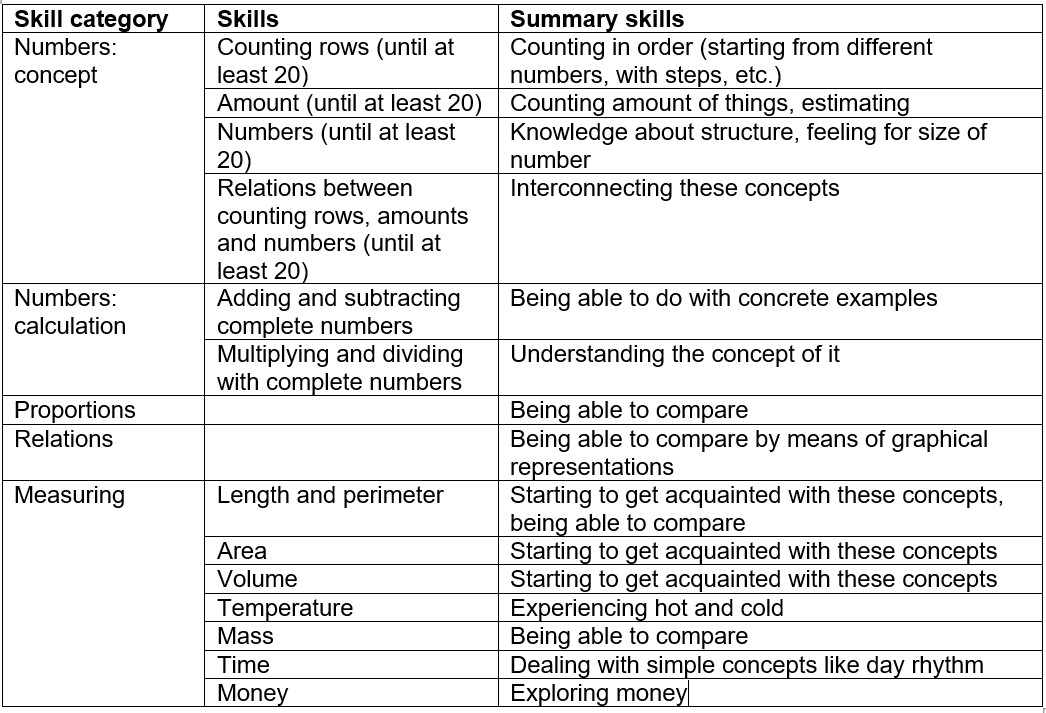

Content school

In Appendix D, an elaborate overview of what is learned in years 1 and 2 of primary school in the Netherlands can be found. Many aspects that are (usually) learned in kindergarten are based on social and emotional interaction. Existing technologies are not yet able to completely support humans in these interactions, and this is especially the case for younger children. The table below shows which components of the primary school content the robot can build on.

| Discipline | Skill | Learning material |
|---|---|---|
| Dutch | | |
| English | | |
| Mathematics | | |
| Orientation to yourself and the world | | |
| Artistic orientation | | |
| Physical activity | | |

Interaction with children

In parent-to-child interaction there is a natural balance between the control of the parent and the child’s own demand for independence (Lundberg, 2007). This means that even parents will not always be able to guide their children towards a particular activity, for example playing with blocks. This balance depends on the age of the child.

Young children have far less control over their activity and independence than older children. If the robot is used, the same effect will occur: the robot will not have constant control over the activity of the child. If a child seems to want a different interaction, the robot can use this information. The child might want to interact with the room or environment, or with the robot through another application. As young children have an attention span of 10-15 minutes, it is important to change things up after such a time span (Torta, 2012).

This is important because it allows parents to work or do something else effectively, without having to spend much of their focus on watching their child.

Besides this benefit for the parents' focus, Rubby can also support educational areas using games. In a study by Herodotou (2018), children who regularly played Angry Birds showed a positive impact on their maths comprehension.

Parents' opinion

Parents are not the direct users of the robot, but they are indirectly involved. First of all, the robot is developed with the aim of giving them more rest. Secondly, parents know their children best and are responsible for them. Therefore, a survey was sent to parents with questions about a robot for children with educational and entertainment features. In Appendix C the informed consent, the questions including answer options and an elaborate result section can be found. Below we will discuss which questions were asked, what the main conclusions were per question category and the points that will be used in the development of the robot.

First of all, the robot 'Rubby' was introduced to the participants of the survey. This introduction read as follows:

Rubby is a robot that is made to support parents in focusing on their work at home when their young, school-going children (4 and 5 years old) are around. When a parent has a meeting or another important activity that requires a peaceful work environment, Rubby can keep a child busy during these moments.

Rubby moves on wheels and has arms and a body on which a tablet is mounted. It is able to detect the motion of the child. The tablet, motion detection technologies and interaction technologies make it possible for Rubby to entertain the child with games, teach new things (broadening vocabulary, learning to write, etc.) and do physical activities like dancing. Every activity has a span of 10 minutes (to meet the limited attention span of 4- and 5-year-old children) and when it is finished, Rubby asks the child if it wants to continue or do another activity.

In this way, a child has various activities to do in the time a parent does not have time and parents will be able to work without too many disturbances.

The following introductory questions were asked.

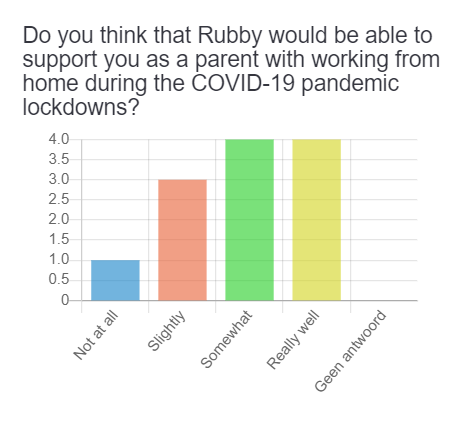

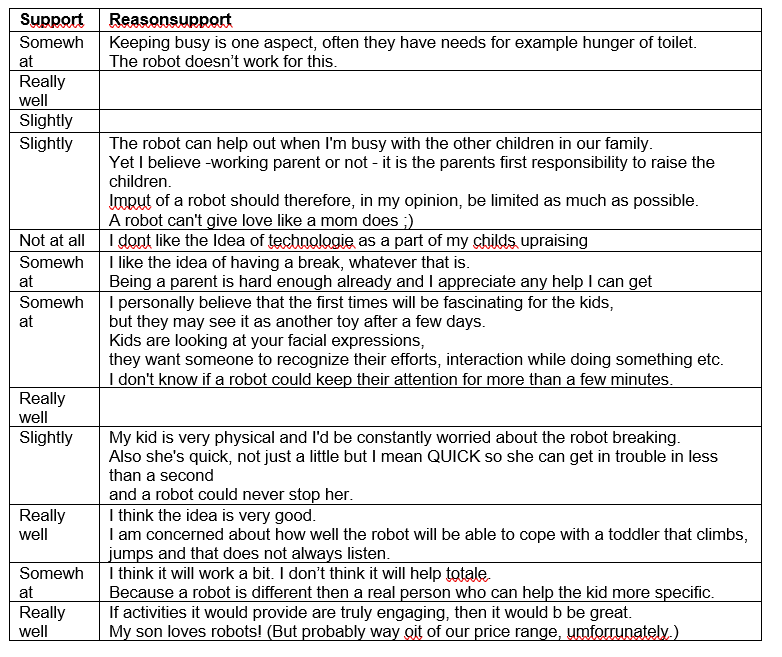

- Do you think that Rubby would be able to support you as a parent with working from home during the COVID-19 pandemic lockdowns? Why/why not?

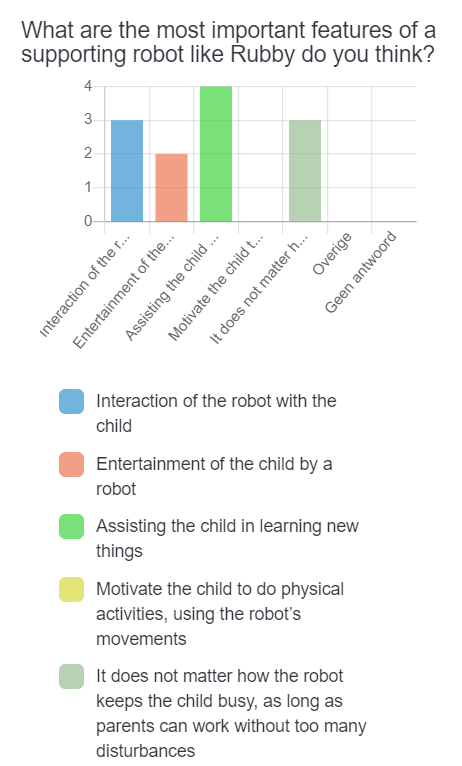

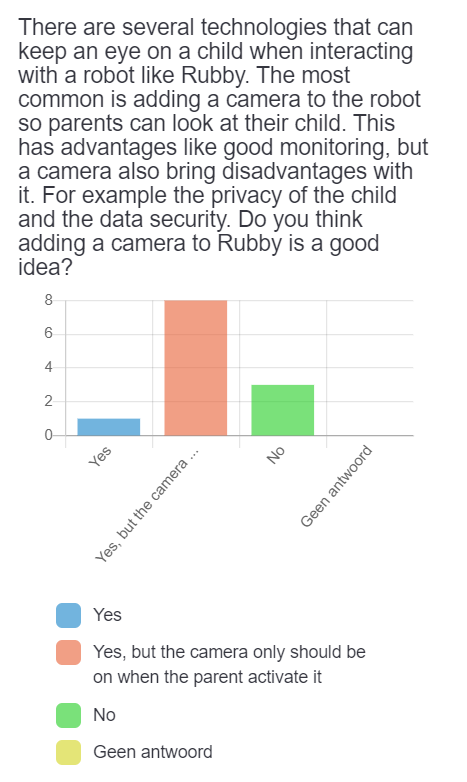

- What are the most important features of a supporting robot like Rubby do you think? Why?

- Do you think that your child(ren) would (have) like(d) to play with Rubby? Why?

- How long is your child able to concentrate on something?

- Do you think that your child will be able to keep him/herself busy with the variety of activities or Rubby when you are working?

A significant majority of the participants think that this robot could be of support while working from home during COVID-19 lockdowns, and a majority is also positive about it. However, parents are concerned about the interaction between the child and a non-human object, about whether the child will listen to the robot, and about the fragility of the robot. The majority of the parents think their child would like the robot and would be able to keep themselves busy with it.

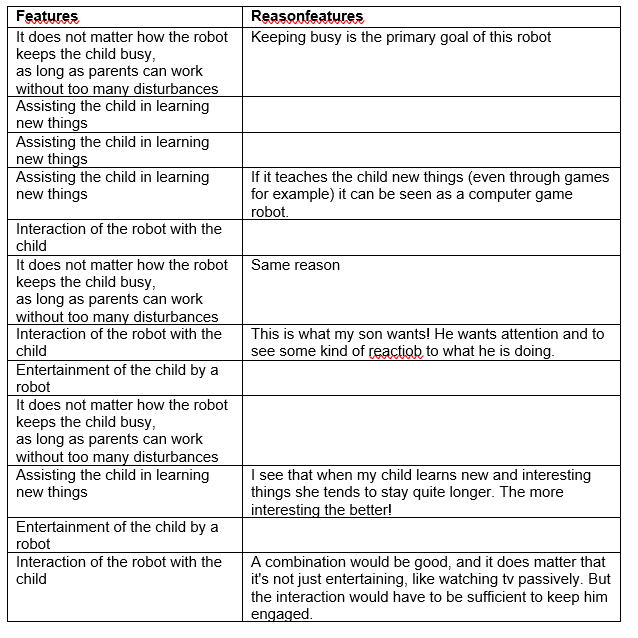

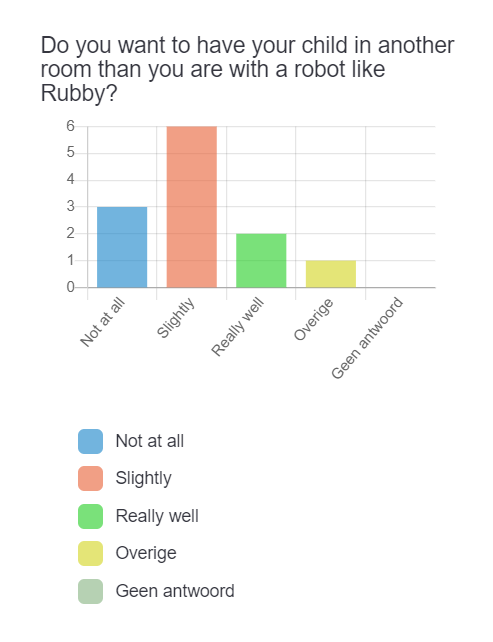

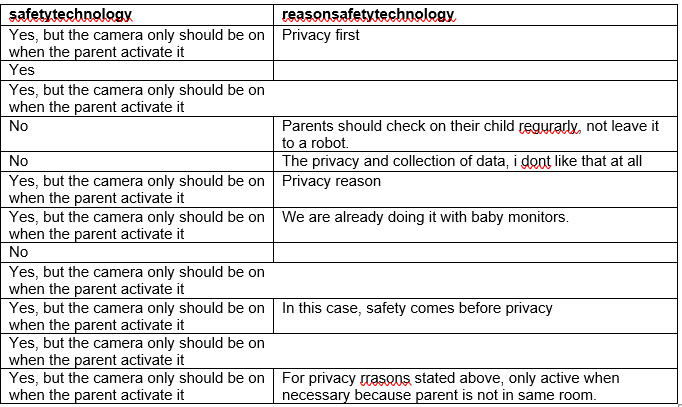

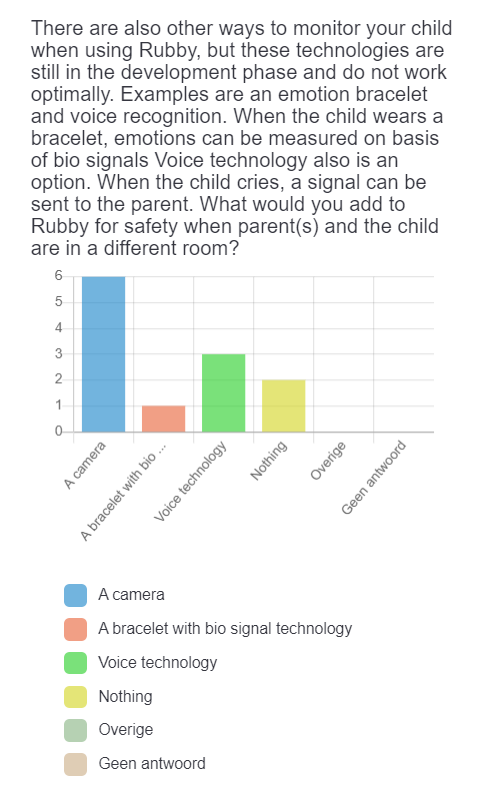

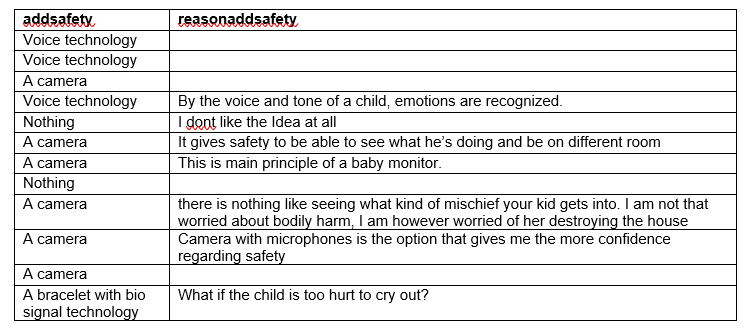

Secondly, parents were asked what they think about safety measures like a camera on the robot. For privacy reasons, we also investigated parents' opinions about measures other than cameras. The following questions were asked in the section about safety improvement(s) of the robot:

- Do you want to have your child in another room than you are with a robot like Rubby?

- Do you think adding a camera to Rubby is a good idea? Why?

- What would you add to Rubby for safety when parent(s) and the child are in a different room? Why?

As a result, it appeared that the majority of the parents think it is a good idea to add a camera to the robot to provide safety, but also voice technology got support as a safety measure.

In the last content-related section the participants were asked to give input towards the development of the robot by the following questions:

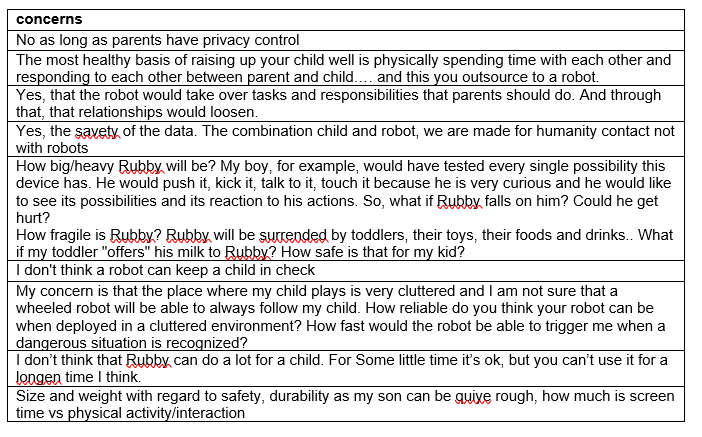

- Do you have any concerns towards Rubby? Please write them down below.

- Do you have any tips/recommendations for Rubby? Please write them down below.

The main worries of parents about a robot like this are safety of the child and the robot, safety of the data and the interaction between child and robot.

Lastly, questions about the participants' children and other demographics were asked to get a good overview of what the sample looks like.

Conclusion

The following points were learnt from this survey and will be used in the development of the robot:

- Make sure the robot is hard to break and make sure it does not fall.

- The robot should not be used for long periods at a time, or breaks should be included.

- A variety of activities should be included, so that many parents will make use of it.

- The robot should be renewed regularly, so it offers new activities and the toddler will maintain its interest.

- We should include a camera on the robot, but only film the child when the camera is activated by the parent. Also, including voice technology as an extra check can be handy to monitor the child’s behavior.

- The data of the robot should be safe.

- Good guidelines for the room are needed to keep the objects in the room safe.

- The robot should not look like a human, but more like a toy.

- There should be guidelines for the parents so it is clear which responsibilities the robot has and which the parents have.

User Needs

The user needs are based on user specifications described above and based on results of the survey that is done among parents. The overview that was used for the user needs in the presentation can be seen in Appendix I.

| User need description | Primary users | Secondary users |
|---|---|---|
| The user shall not get bored. | X | |
| The user shall be supported in his/her development. | X | |
| The user shall have a good time when other human beings are not able to play with him/her. | X | |
| The user shall be able to choose between different activities. | X | |
| The user shall not be harmed by the robot when it is used in the right way. | X | |
| The user needs a system that prevents having to catch up on work. | | X |
| The user shall be able to work and have online meetings in peace, without disturbances from children. | | X |
| The user shall have control over his/her children (turning on the robot, safety). | | X |
| The user wants his/her children to have a good time. | | X |
| The user wants his/her children to learn new things. | | X |
| The user shall be able to keep an eye on his/her children. | | X |

Rubby

The robot that will partially be developed in this project, is called 'Rubby', a combination of 'buddy' and 'robot'. In this chapter, Rubby will be defined.

The results of the survey and the background literature have led to an idea for a robot. First the requirements, preferences, and constraints are discussed. Then, the robot itself, Rubby, is described. Last but not least, a scenario is given to illustrate the usage of Rubby in daily life.

Specifications robot

In this section of the chapter the RPCs (requirements, preferences, and constraints) for the robot Rubby are given and the technical requirements are listed. Each RPC is motivated and is accompanied by a description of how it will be applied to the robot. The overview that was used for the RPCs in the presentation can be seen in Appendix J.

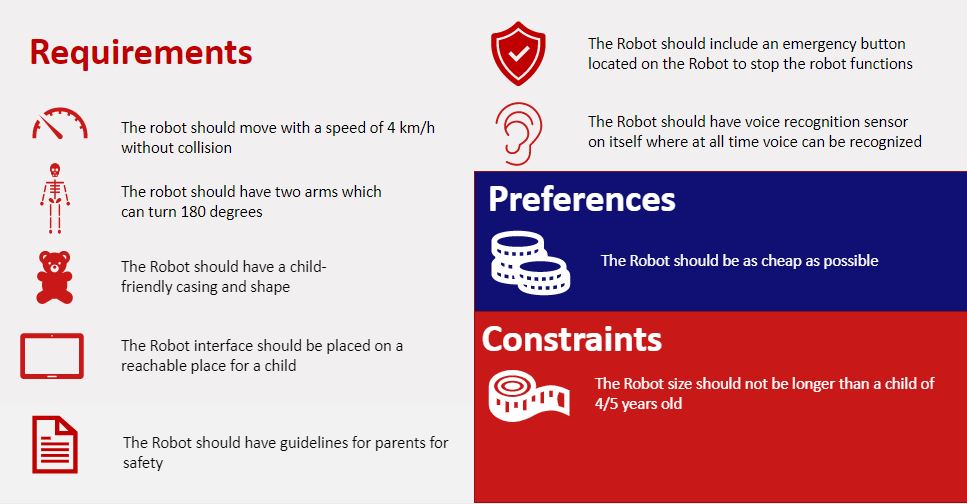

RPC's for this project

| | Rationale | Application to Rubby |
|---|---|---|
| Requirements | | |
| The robot shall be able to move itself with a speed of 4 km/h while preventing collisions with objects in the room | This will ensure the safety of the child | Rotational motors will be used to drive the tracks at moderate speed |
| The robot shall have two arms which are able to rotate about 180 degrees and move spherically | With this, the physical interaction is enhanced | Servo motors will be used in order to meet this requirement |
| The robot shall be wireless (on battery, not with a wire) | Being wireless increases the practical ease | The battery will be located within the robot and can be charged |
| The robot shall have a child-friendly casing and shape | A child-friendly casing ensures both the child's attraction to the robot and safety | The robot's appearance will be child-friendly (based on literature and survey) |
| The robot interface shall be placed on the robot itself, where the child can reach it well | This is required since the child has to physically make choices on the robot | The interface will be put on the front of the robot's main body |
| Guidelines for parents: since the robot is a toy, it should have a description for safe use by a child | This will ensure safety when using the robot | Guidelines will be made concerning the robot, the surroundings and the child |
| The robot shall include an emergency button located on the robot to stop the robot functions | In case the robot's functioning fails or becomes harmful, this should be interrupted | An emergency button will be placed at the back of the robot |
| The robot shall have a voice-recognition sensor on itself so that voice can be recognized at all times | This requirement is an addition in order to increase the interaction with the user | A voice-recognition sensor will be placed on the robot |
| Preferences | | |
| The robot shall be as cheap as possible | This preference will increase the affordability and therefore the willingness to use the robot | This preference is taken into account in the design (bill of materials) |
| Constraints | | |
| The robot shall not be taller than a child of 4 or 5 years old | This constraint ensures that the child is attracted by the robot and does not feel dominated | This is taken into consideration in the measurements of the final design |

Introduction to Rubby

In this section of the chapter Rubby will be described. Rubby is the translation of the RPC's stated above to a 'real' robot.

Description

Rubby is a robot solution that helps parents focus on their work at home. The robot keeps the child busy by functioning as a playmate while taking the desires and attention span of the child into account. This means that parents do not have to focus constantly on their children and give them intensive attention during working hours. Especially during the lockdowns of the COVID-19 pandemic this robot is a great help in daily life.

Rubby is a robot of around half a meter high and is able to move around by means of wheels. It is able to detect motion of the child and, if necessary, it can move slowly as well. Rubby has arms and a body on which a tablet is mounted. This tablet is used as an interface on which the child can play (educational and entertaining) games. Rubby is able to interact with the child in several ways.

First of all, it can respond to the child's speech.

Secondly, it can speak to the child, which is for example used in telling stories, improving the child’s vocabulary.

Finally, the interaction is enhanced by the movements of Rubby, as it can move its arms and roll back and forth. The tablet on the robot’s body can be used for entertainment as well as for education. The alternation between these kinds of interaction enhances the social connection between Rubby and the child. The robot takes the child's loss of attention into account by asking whether or not it should switch to a different game.

Scenario

To get a better feeling of how Rubby would be used in daily life, scenarios are made for the personas that were described in the User section. Below, a scenario of David and Ava is described. In Appendix B you can find a scenario with Melissa and Noah.

Scenario David and Ava

It is Friday morning, around 8.30 AM. Ava wakes up because her father David is opening the curtains in her room. ‘Good morning sweetheart’ he says to her. David let her sleep later than on school days, so he could already do some work. Today he has some important meetings and he has a deadline in the afternoon, so it is going to be a busy day. But first he has breakfast with Ava and goes to the supermarket to do some shopping for the day and the coming weekend.

At 10.00 AM, Ava and David are back from the shop. Now, the day can really begin. David opens up his laptop and starts working at the table in the living room. Ava sits on the carpet in the living room and starts playing with her dolls. After half an hour, she starts to get bored. David gives her some fruit and tells her that he has a meeting for the next hour. ‘Yes, then I can play with Rubby’ she says, and she wonders what the robot has in mind for her this time. If David had announced a meeting 3 months ago, Ava would have been disappointed, as she had to be silent all the time and she got bored. Nowadays, when her father should not be disturbed, Ava can hang out with Rubby, a robot that acts as a playmate for toddlers so their parents can work or meet without being disturbed.

After Ava has taken her last little piece of fruit and a sip of her drink, David gets Rubby out of the charger in the corner of the room. He puts the robot on the carpet, turns it on and sets it up for an hour to an hour and a half. “See you in an hour darling” he says to Ava, sits down at the table and puts on his noise-cancelling headphones.

“Hi Ava, I am Rubby. I am not a human like you and your father, but I am a robot” Rubby says to Ava. “Hi Rubby, what are we gonna do today?” Ava asks the robot. “We start with a counting game” and the tablet on his belly lights up. After 10 minutes he asks Ava “Do you want to do something else?”. “Yes” she answers. She has learned that she can only use short commands for the robot and that she has to pronounce them clearly. When you talk with humans you can have real conversations, so she knows from this that Rubby is a robot.

“Now, we will do a puzzle.” The game on the screen on his belly changes and Ava starts putting pieces in the right spots. After 10 minutes Rubby asks again if Ava wants to do something else. “No” she answers and continues with the puzzle. After 5 minutes he asks again and Ava indicates she wants to do something else. “Let’s do some exercise Ava!” Rubby says and he starts to explain what they will do. With his arms the robot indicates what Ava has to do with her arms and with his wheels he shows the direction of the steps Ava has to take. After 10 minutes Rubby says “Let’s do a color game” and the screen on the belly lights up again. This goes on for the next 40 minutes and then David’s meeting is finished. David finds it nice that his daughter does not get bored while he has a meeting and that he is not distracted. It feels good to know that Rubby gives Ava a variety of activities to do, alternating between entertainment, learning and physical tasks.

After long meetings he always takes a walk, so today he takes a walk with Ava. After their half-hour walk, they have lunch together. After lunch Ava should take some rest and she reads some picture books for half an hour. Then she can watch television for half an hour. After this she can play with her dolls or something else. This afternoon, David has to finish something important and he also has a meeting. Fortunately, Rubby supports him by keeping Ava busy for an hour and a half.

Thanks to Rubby, David has an effective working day, and Ava has had a nice day with a lot of fun activities. Before they had Rubby as a support, David had to work in his free time to catch up with his work. This weekend, when the whole family is home, they can play a board game or watch television together with David.

This chapter describes how a model of the path planning is made. It will also describe the steps that have been taken to try to achieve local navigation. Unfortunately the local navigation goal was not met, but a model of the path planning was created successfully.

Why should Rubby move?

Rubby has to move so that it will always face the child. When the parents are working and want to see if their child is safe, they can see on their monitor what the robot is filming. So the robot should always face the child with its camera. Movement is also needed because games that include physical training will be made in the future, for example playing with a ball (when the robot moves against a ball, it will start rolling and the child will play with it). Movement will also be important in the future when facial expression recognition is included: when the robot faces the child, it can interact with the child when certain emotions are detected. Local navigation is needed to avoid obstacles while achieving these goals.

The difference between navigation, localization and path planning

Before we explain how the models work, the difference between navigation, localization and path planning should be explained. Localization only describes where the robot is in an environment; it does not describe what the environment looks like. Path planning uses algorithms to interpret what the environment looks like, often from an existing map, and defines an obstacle-free path. When that path is made, a controller is designed to follow it. Local navigation is a combination of localization and path planning. The robot moves through an environment and uses its sensors to determine whether there is an obstacle in its way. Then it uses algorithms to drive past that obstacle towards its target. So the main difference is that local navigation does not make use of an already existing map; it figures out the map by moving through the environment.

Path planning model

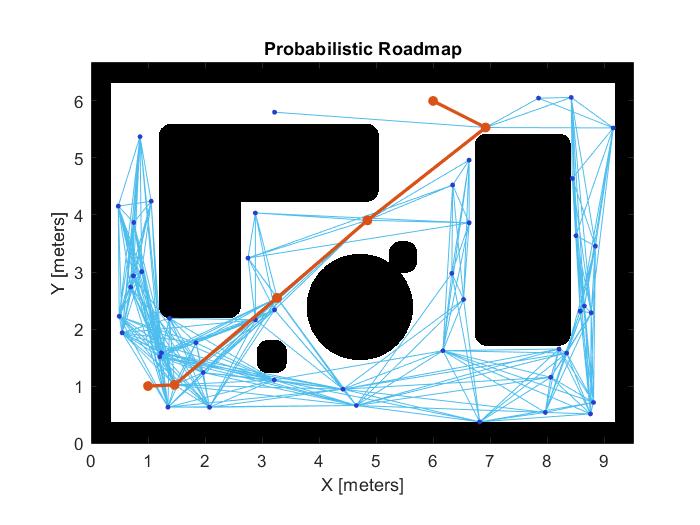

This model demonstrates how to compute an obstacle-free path between two locations on a given map using the Probabilistic Roadmap (PRM) path planner. The PRM path planner constructs a roadmap in the free space of a given map by randomly sampling nodes in the free space and connecting them with each other. Once the roadmap has been constructed, you can query for a path from a given start location to a given end location on the map. This model has been made in MATLAB.

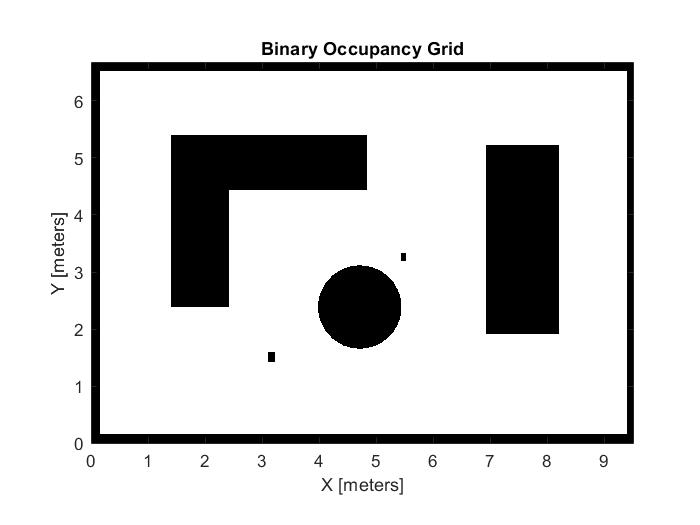

Binary Occupancy grid

In this model, the map is represented as an occupancy grid map using imported data. A binary occupancy grid of a room has been made. In the figure on the right you can see a sofa, a round table and a rectangular table. Two random objects have also been placed in the room; you could see them as toys that a child left somewhere in the room. When sampling nodes in the free space of a map, PRM uses this binary occupancy grid representation to deduce free space.

Inflated map

Furthermore, PRM does not take the robot dimension into account while computing an obstacle-free path on a map. Hence, you should inflate the map by the dimension of the robot, so that the computed path accounts for the robot's size and the actual robot avoids collisions. The difference between the inflated map and the non-inflated map can be seen to the right. In the first picture the obstacles have thin, straight outlines, while in the second map the black obstacle regions are visibly thicker.
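The inflation step can be illustrated with a small sketch. The Python snippet below is not the MATLAB code used for the model; it only shows the idea of growing every occupied cell by the robot radius. The function name `inflate`, the grid size and the radius are assumptions chosen for illustration.

```python
import math


def inflate(grid, radius_cells):
    """Return a copy of a binary occupancy grid (1 = occupied, 0 = free)
    in which every obstacle is grown by radius_cells in all directions."""
    rows, cols = len(grid), len(grid[0])
    inflated = [row[:] for row in grid]  # copy so the input stays unchanged
    reach = int(math.ceil(radius_cells))
    for i in range(rows):
        for j in range(cols):
            if grid[i][j] != 1:
                continue
            # Mark every cell within a disc of radius_cells around the obstacle.
            for di in range(-reach, reach + 1):
                for dj in range(-reach, reach + 1):
                    if di * di + dj * dj > radius_cells * radius_cells:
                        continue
                    ni, nj = i + di, j + dj
                    if 0 <= ni < rows and 0 <= nj < cols:
                        inflated[ni][nj] = 1
    return inflated


# Hypothetical 7x7 room with a single occupied cell in the middle.
grid = [[0] * 7 for _ in range(7)]
grid[3][3] = 1
inflated = inflate(grid, 1)
for row in inflated:
    print("".join("#" if cell else "." for cell in row))
```

A planner that treats the robot as a point on the inflated grid then keeps at least one robot radius of clearance from the original obstacles.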

Roadmap path planner

When the map has been inflated by the dimension of the robot, a mobileRobotPRM object is used as a roadmap path planner. The object uses the map to generate a roadmap, which is a network graph of possible paths in the map based on free and occupied spaces. To find an obstacle-free path from the start to the end location, the number of nodes and the connection distance are tuned to fit the complexity of the map. After the map is defined, the mobileRobotPRM path planner generates the specified number of nodes throughout the free spaces in the map. A connection between two nodes is made when the line between them contains no obstacles and is within the specified connection distance. You can see this in the figure PRM Algorithm.
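The roadmap construction described above can be sketched in a few lines of Python. This is a minimal illustration and not the internals of mobileRobotPRM: the helper names (`is_free`, `segment_free`, `prm_path`), the unit cell size, the sampled collision check and the use of Dijkstra's algorithm for the path query are all assumptions made here.

```python
import heapq
import math
import random


def is_free(grid, x, y):
    """True if point (x, y) is inside the map and in a free cell (cell size 1)."""
    row, col = int(math.floor(y)), int(math.floor(x))
    return 0 <= row < len(grid) and 0 <= col < len(grid[0]) and grid[row][col] == 0


def segment_free(grid, a, b, step=0.5):
    """Check a straight segment by sampling points at most `step` apart."""
    n = max(1, int(math.ceil(math.dist(a, b) / step)))
    return all(
        is_free(grid, a[0] + (b[0] - a[0]) * k / n, a[1] + (b[1] - a[1]) * k / n)
        for k in range(n + 1)
    )


def prm_path(grid, start, goal, num_nodes=50, connection_distance=4.0, seed=0):
    """Sample nodes in free space, connect nearby visible nodes, query a path."""
    rng = random.Random(seed)
    height, width = len(grid), len(grid[0])
    nodes = [start, goal]  # index 0 = start, index 1 = goal
    attempts = 0
    while len(nodes) < num_nodes + 2 and attempts < 100 * num_nodes:
        attempts += 1
        p = (rng.uniform(0, width), rng.uniform(0, height))
        if is_free(grid, *p):
            nodes.append(p)
    # Connect two nodes when they are close enough and the line is obstacle-free.
    edges = {i: [] for i in range(len(nodes))}
    for i in range(len(nodes)):
        for j in range(i + 1, len(nodes)):
            d = math.dist(nodes[i], nodes[j])
            if d <= connection_distance and segment_free(grid, nodes[i], nodes[j]):
                edges[i].append((j, d))
                edges[j].append((i, d))
    # Dijkstra search over the roadmap from start (0) to goal (1).
    best, parent, queue = {0: 0.0}, {}, [(0.0, 0)]
    while queue:
        cost, u = heapq.heappop(queue)
        if u == 1:
            break
        if cost > best.get(u, math.inf):
            continue
        for v, d in edges[u]:
            if cost + d < best.get(v, math.inf):
                best[v] = cost + d
                parent[v] = u
                heapq.heappush(queue, (cost + d, v))
    if 1 not in best:
        return None  # roadmap does not connect start and goal
    path = [1]
    while path[-1] != 0:
        path.append(parent[path[-1]])
    return [nodes[i] for i in reversed(path)]


# Hypothetical 10x10 room with one block-shaped obstacle.
room = [[0] * 10 for _ in range(10)]
for r in range(3, 7):
    for c in range(4, 6):
        room[r][c] = 1
print(prm_path(room, (1.0, 1.0), (8.5, 8.5)))
```

When `prm_path` returns `None`, the usual remedy is the tuning the text mentions: more nodes or a larger connection distance.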

Controller

When the obstacle-free path from a start to an end location has been made, a controller is needed that makes sure the robot follows the defined path. First the robot model is initialized. The simulated robot uses the kinematic equations for the motion of a two-wheeled differential drive robot. The inputs to this simulated robot are linear and angular velocities. Based on the path defined above and the robot motion model, a path following controller drives the robot along the path. The path following controller provides input control signals for the robot, which the robot uses to drive itself along the desired path. The settings of the controller are the desired waypoints, linear velocity and angular velocity. The result can be seen in the video:
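The interplay between the differential drive model and the path following controller can be sketched as follows. This Python snippet is an assumption-laden illustration, not the MATLAB controller used in the model: it uses a simple proportional heading controller that steers towards the next waypoint, and the names, gain, velocities, time step and tolerance are all hypothetical.

```python
import math


def wrap_angle(a):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def control(pose, target, v_desired, omega_max, gain=2.0):
    """Return (linear velocity, angular velocity) steering towards target."""
    x, y, theta = pose
    heading_error = wrap_angle(math.atan2(target[1] - y, target[0] - x) - theta)
    omega = max(-omega_max, min(omega_max, gain * heading_error))
    # Slow down when not facing the target; turn in place if it is behind.
    v = v_desired * max(0.0, math.cos(heading_error))
    return v, omega


def step(pose, v, omega, dt):
    """Unicycle form of the differential drive kinematics (Euler integration)."""
    x, y, theta = pose
    return (
        x + v * math.cos(theta) * dt,
        y + v * math.sin(theta) * dt,
        wrap_angle(theta + omega * dt),
    )


def follow(waypoints, pose=(0.0, 0.0, 0.0), v_desired=0.3, omega_max=2.0,
           dt=0.05, tol=0.1, max_steps=4000):
    """Drive through the waypoints in order; return (trajectory, reached_all)."""
    trajectory, index = [pose], 0
    for _ in range(max_steps):
        # Advance to the next waypoint once the current one is within tolerance.
        while index < len(waypoints) and math.hypot(
            waypoints[index][0] - pose[0], waypoints[index][1] - pose[1]
        ) < tol:
            index += 1
        if index == len(waypoints):
            break
        v, omega = control(pose, waypoints[index], v_desired, omega_max)
        pose = step(pose, v, omega, dt)
        trajectory.append(pose)
    return trajectory, index == len(waypoints)


trajectory, reached = follow([(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)])
print(reached, len(trajectory))
```

The waypoints would normally be the output of the path planner; the linear and angular velocity limits correspond to the controller settings listed above.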

Video of path planning: https://www.youtube.com/watch?v=_Ffpt758Jns&ab_channel=RobotsEverywhere

A start was made on developing local navigation for the robot. To start off, a model of Rubby was made in the Unified Robot Description Format (URDF). This model was kinematically similar to the actual robot (it moved in exactly the same way), but the shape was simplified to make simulation easier. The goal was to load this model into a simulation environment and test the obstacle avoidance capabilities of the robot. A LiDAR sensor was to be used to identify obstacles, and a program to avoid them was to be written in MATLAB. Two different simulation environments were tested. The first one was the Simulink simulation environment and the second one was Gazebo in co-simulation with MATLAB Simulink.

Simulink Simulation

Simulink was chosen first because it is a MATLAB extension that all group members had some experience with. To simulate a mobile robot in Simulink, the following steps have to be taken:

- The kinematics of the robot have to be defined

- A map of the test environment has to be made

- Virtual sensors have to be added to the robot

- The robot has to be placed inside the simulation environment and the control algorithm has to be tested.

The first step was achieved by analytically determining the motion of the robot for given wheel positions. The exact derivation of the motion of the robot will not be elaborated on here, but the conclusion was that for a given velocity of both wheels, the robot would move in the desired way. An overview of the Simscape model that defined the motion of the robot can be seen in the figure.

The second step was achieved by using a test environment from a webinar by MathWorks (MathWorks Robotics and Autonomous Systems Team, 2021). A video of the robot moving through this environment can be seen at: https://youtu.be/NxY3lH0EniM

Adding sensors in the Simulink environment has to be done entirely manually, meaning that for a LiDAR sensor with 180 rays, all 180 rays have to be defined and implemented by hand. This is not a straightforward task, and after some further consideration it was decided to use the Gazebo simulation environment instead. Gazebo generates all of the sensor data automatically, which is far more efficient. Gazebo can also perform co-simulations with MATLAB Simulink, which allowed us to use our existing knowledge of that program to develop a control algorithm.
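To give an impression of what defining the rays by hand amounts to, the sketch below computes a 180-ray planar scan on a binary occupancy grid by marching along each ray until an occupied cell is hit. It is a simplified Python illustration and not the Simulink or Gazebo sensor model; the function names, the 180 degree field of view, the step size and the room layout are assumptions.

```python
import math


def cast_ray(grid, x, y, angle, max_range=10.0, step=0.05):
    """March along one ray and return the range to the first occupied cell.
    Leaving the map counts as a hit; max_range is returned if nothing is hit."""
    n_steps = int(max_range / step)
    for k in range(1, n_steps + 1):
        r = k * step
        px, py = x + r * math.cos(angle), y + r * math.sin(angle)
        row, col = int(math.floor(py)), int(math.floor(px))
        outside = not (0 <= row < len(grid) and 0 <= col < len(grid[0]))
        if outside or grid[row][col] == 1:
            return r
    return max_range


def lidar_scan(grid, pose, num_rays=180, fov=math.pi, max_range=10.0):
    """Return num_rays range readings spread evenly over the field of view,
    centred on the robot heading."""
    x, y, theta = pose
    first_angle = theta - fov / 2
    increment = fov / (num_rays - 1)
    return [
        cast_ray(grid, x, y, first_angle + i * increment, max_range)
        for i in range(num_rays)
    ]


# Hypothetical 10x10 room whose border cells are walls, robot in the centre.
room = [[1 if r in (0, 9) or c in (0, 9) else 0 for c in range(10)] for r in range(10)]
ranges = lidar_scan(room, (5.0, 5.0, 0.0))
print(len(ranges), min(ranges), max(ranges))
```

A simulator such as Gazebo performs this kind of computation internally for every sensor update, which is why it removes most of the manual work.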

Gazebo Simulation

Since Gazebo has limited support on Windows, it had to be used on a Linux system (Ubuntu in this case). Implementing the robot in Gazebo had some other challenges as well. To launch a world in Gazebo with the robot in it, ROS (Robot Operating System) packages had to be used. A package was made for both the robot and the world that was designed within Gazebo. The robot and a LiDAR sensor could then be seen in the world, as shown in the figure on the right. To test the connection to MATLAB, the sensor data from this LiDAR was plotted in MATLAB, an example of which can be seen in the figure. Once this LiDAR data corresponded to the expected data (see the figure on the right), an attempt was made to add such a LiDAR sensor to the robot. Since the world file and the robot file use two different file formats, the code that defines the sensor could not simply be copied from the world file into the robot file. Support for sensors in the URDF file format is limited at best, and significant knowledge of ROS and Gazebo is required to implement this properly. This knowledge was not present within the group, however, and there was not enough time to acquire it within the time frame of this project.

Continuation

Although this part of the project did not produce a tangible result, some important steps in developing and verifying a local navigation system were taken. A test environment was defined that can easily be launched in Gazebo; it contains a living room as well as a model of the robot. A Simulink file was created that can connect to the Gazebo environment and retrieve LiDAR data from it. This file can also be used to actuate the wheels of the robot and make it move. The steps that still have to be taken are as follows:

- A sensor has to be added to the robot model in the urdf file format.

- A control algorithm has to be developed that can enable the robot to avoid obstacles in its way

- The control algorithm has to be tested in the simulation environment.

The sensor can be added to the model by extending the existing file that defines Rubby: plugins have to be added that allow Gazebo to see sensors defined in the URDF file format. Incorporating these plugins requires fairly in-depth knowledge of the URDF file format and of ROS. Since that knowledge was lacking, there was not enough time to properly finish this task.

A control algorithm can be written in a similar way to Peng et al. (2015). This can be implemented in a MATLAB script that generates an input torque for the wheels, which can then be sent to Gazebo to perform the co-simulation.

Deliverable 2: Mechanical design

In this chapter the end product of the mechanical design will be described and shared. During the project the steps that are taken to achieve this are posted in this part of the wiki.

Design concepting

In order to design a robot, and especially its exterior appearance, several aspects are to be taken into account. Studies have shown the functions and shapes of a robot are crucial for the effectiveness of interaction between the robot and its user. This is the starting point of the design of Rubby. Sketches are made of different components of the robot as well as of the robot as a whole. Here the RPCs are taken into account in order to comply with the determined requirements.

Additionally, the following findings were important with regards to the external design of Rubby:

- Sound effects: The addition of sound effects is the most effective and feasible way to keep the user’s attraction to the robot (Torta, van Heumen, Cuijpers & Juola, 2012).

- Eye-contact: Eye-contact does not necessarily improve the attention of the participants. The robot’s gazing behaviour may be suited in cases where the visual attention is already on the robot (Torta et al., 2012).

- Essence of shape: The shape and interactional functions of a robot are important for the development of personal robots (Goetz, Kiesler & Powers, 2003).

- The importance of human-likeness: Robots need a moderate level of human-likeness; however, robots still need to be easily distinguishable from human beings (Tung, 2016).

- Anthropomorphic appearance: A moderate level of anthropomorphic appearance is required combined with appropriate social cues (Tung, 2016).

- Children tend to attribute intelligence, biological function and mental states to a robot (Tung, 2016).

- Humanoid features: A few visual human-like features can achieve the elicitation of children’s preferences (Tung, 2016).

- General appearance: A humanoid robot appearance is predominantly preferred over a robot with pure mechanical appearance (Walters, Syrdal, Dautenhahn, te Boekhorst & Koay, 2017).

- Impression of a robot: The initial impression and evaluation of a robot is essential (Walters et al., 2017).

Based on this, the following design choices regarding the external design of Rubby were concluded:

- Basic shape of the robot should be similar to that of a human being.

- The addition of sound effects: music sounds and speech.

- The addition of arms. These arms can turn in order to increase the level of human-likeness and to increase the attention attraction.

- The addition of a head. To ensure the robot does not look too much like a human being, the incorporation of a moving mouth when speaking is left out.

- Concerning eye contact, ‘fake’ eyes can be added; however, physically rolling and blinking eyes are left out, since these might be counterproductive.

- The addition of wheels. This emphasizes that Rubby is a robot rather than a human. The ability to move is important to improve the emotional response of the child to the robot.

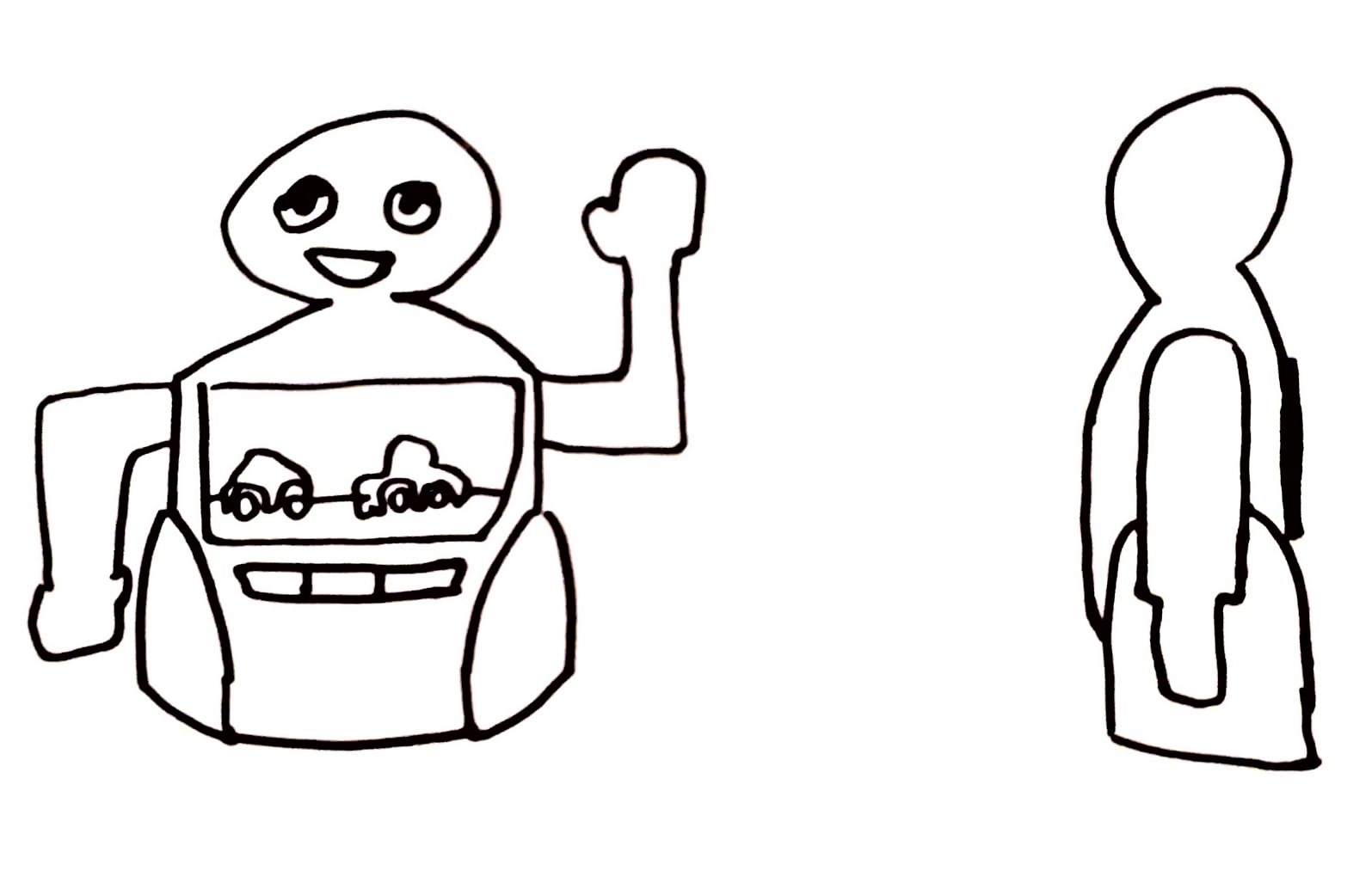

Taking these aspects into account, together with the RPCs made, several sketches and outlines are made to visualize possible solutions. The most relevant sketches are shown below:

In Appendix E, an overview of all sketches can be found together with more detailed sketches.

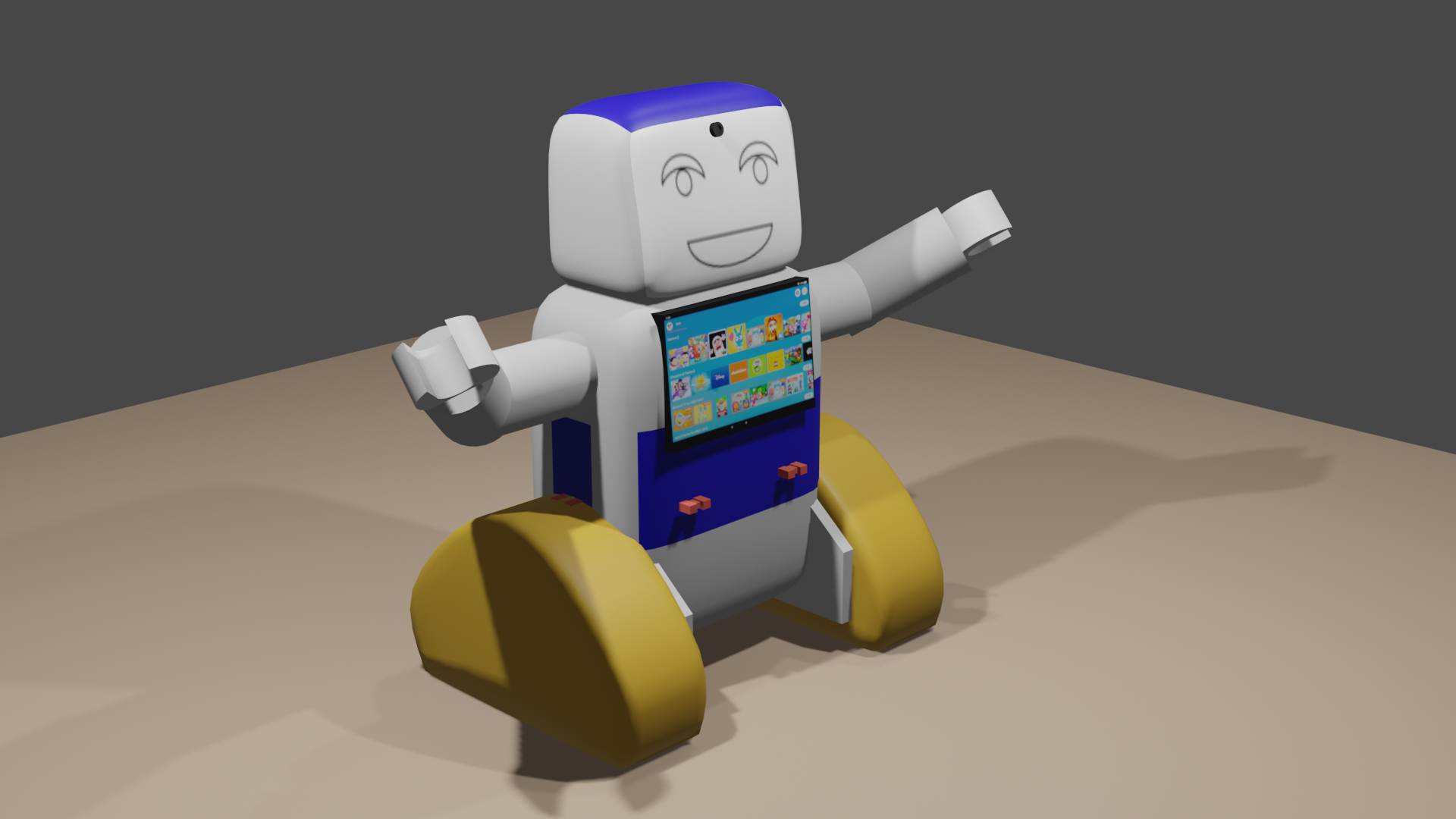

Preliminary design

Subsequently, the 2D sketches as presented in appendix H are further elaborated and optimized. The sketches are combined and put into 3D with a computer program. This results in the preliminary designs.

Below, these 3D designs can be seen. In Appendix F, some more figures of these 3D models can be found.

Two main preliminary designs are the result. The first has normal wheels: two attached to the main body and one at the rear of the robot to ensure stability. The second design uses tracks. With these, the robot also maintains stability and will be able to drive more easily on non-smooth surfaces. Both models form the basis for the final design, which is described in the following section.

In Appendix F more figures of the preliminary designs in Blender can be found.

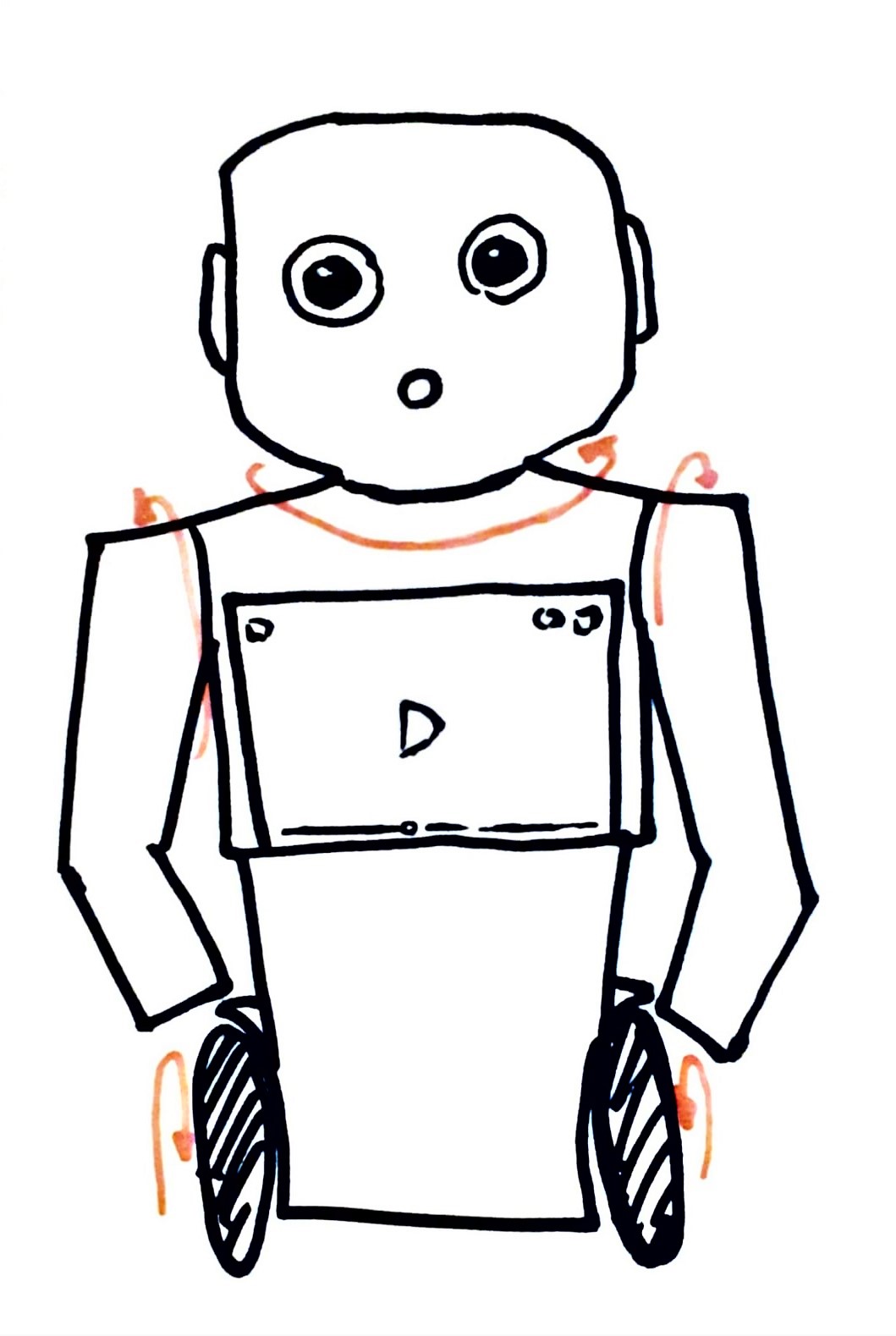

Final design

In this phase, conclusions are made on the design and design choices are discussed. The several specifications of the final design are considered. A bill of materials (BoM) is presented based on the design choices. This BoM is part of deliverable 3. There, also a price indication for the robot is presented.

The robot's main body will contain a battery and a processor. The system will contain three motors. The first motor rotates the inner arm around its own axis (red arrow in figure 1 in Appendix G) and can be mounted on the inside of the main body. The second motor rotates the outer arm around the red axis in the second figure in Appendix G. The easiest option is to mount this outer motor at the end of the inner arm; however, this increases the mass of the arm somewhat. Alternatively, the second motor could be positioned inside the main body and transfer the rotation via a belt or a similar mechanism. The third motor drives the tracks and thereby moves the robot.

The weight of our robot can be compared with that of a Nao robot, which has about the same size and material. The total weight of a Nao robot is 5 kg. This would give an estimate of around 400 grams for an entire arm.

If the arms are then 20 cm long, the required torque can be calculated. The outer motor has to hold the weight of the arm, which acts at the COG (center of gravity) of the arm, 10 cm from the motor: T = r × F_z = 10 cm × 0.200 kg = 2 kg cm. A servo with a torque of 4.1 kg cm can be found online (Robotshop, n.d.). This servo weighs 46 grams and is relatively cheap. This motor can also be used for the inner arm.

Concerning the motor needed for the tracks, the total mass of the robot is estimated at 5 kg at most and the radius of the upper wheel at 5 cm. The torque needed for this motor equals T = radius × mass of robot = 5 cm × 5 kg = 25 kg cm. A motor that delivers this torque can be found (Amazon, n.d.), so it can be used to drive the tracks.
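For comparison with motor datasheets that specify torque in SI units, the two estimates above can be converted using 1 kg cm (kilogram-force centimetre) ≈ 0.0981 N m. The worked conversion below uses the same numbers as the text; the symbols T_arm and T_tracks are introduced here only for illustration.

```latex
\begin{align}
T_{\text{arm}} &= r \cdot m = 10\,\text{cm} \times 0.200\,\text{kg} = 2\,\text{kg\,cm}
  \approx 2 \times 0.0981\,\text{N\,m} \approx 0.20\,\text{N\,m} \\
T_{\text{tracks}} &= r \cdot m = 5\,\text{cm} \times 5\,\text{kg} = 25\,\text{kg\,cm}
  \approx 25 \times 0.0981\,\text{N\,m} \approx 2.45\,\text{N\,m}
\end{align}
```

In the same units the 4.1 kg cm servo delivers roughly 0.40 N m. Note that if the full 400 g arm estimate were used at the 10 cm lever arm instead of 0.200 kg, the same formula would give 4 kg cm, which is still just below the rated torque of that servo but leaves very little margin.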