PRE2020 3 Group11: Difference between revisions

TUe\20182751 (talk | contribs) |

TUe\20182751 (talk | contribs) |

||

| Line 235: | Line 235: | ||

They are coming but not in the way you may have been led to think. Selfdriving cars have many issues: taking save turns, changing road surfaces, snow and ice and avoid traffic cops, crossing guards & emergency vehicles. And automatic stopping for pedestrians will make us people rather walk or take the subway. | They are coming but not in the way you may have been led to think. Selfdriving cars have many issues: taking save turns, changing road surfaces, snow and ice and avoid traffic cops, crossing guards & emergency vehicles. And automatic stopping for pedestrians will make us people rather walk or take the subway. | ||

We have a very unrealistic expectation of self driving cars. They will not happen the way you have been told. | We have a very unrealistic expectation of self driving cars. They will not happen the way you have been told. | ||

[[ | [[File:Sam.png]] | ||

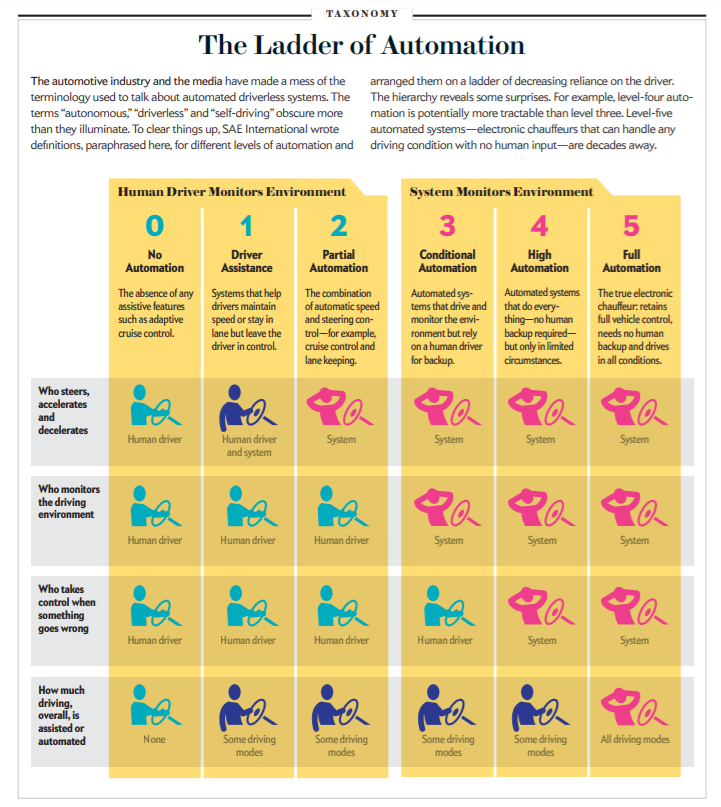

We are currently only arriving at level 3 cars. CEO of Nissan said fully automated cars (level 5) will be on the road by 2020. This isn’t true, level 4 cars may arrive in the next decade. Defining automated driving: much more complex than we think. Despite the popular perception, human drivers are remarkably capable of avoiding crashes. Mind how often your laptop freezes / is slow. This will inevitably lead to crashes, so there is a major software problem. | We are currently only arriving at level 3 cars. CEO of Nissan said fully automated cars (level 5) will be on the road by 2020. This isn’t true, level 4 cars may arrive in the next decade. Defining automated driving: much more complex than we think. Despite the popular perception, human drivers are remarkably capable of avoiding crashes. Mind how often your laptop freezes / is slow. This will inevitably lead to crashes, so there is a major software problem. | ||

Revision as of 11:39, 4 March 2021

The acceptance of self-driving cars

Problem statement

What are the relevant factors that contribute to the acceptance of self-driving cars for the private end-user?

Self-driving cars are believed to be safer than manually driven cars. However, they cannot be 100% safe. Because crashes and collisions are unavoidable, self-driving cars should be programmed to respond to situations where accidents are highly likely or unavoidable (Nyholm & Smids, 2016). There are three moral problems involving self-driving cars. First, there is the question of who decides how self-driving cars should be programmed to deal with accidents. Second, there is the question of who bears the moral and legal responsibility for harm caused by self-driving cars. Finally, there is the problem of decision-making under risk and uncertainty.

The trolley problem illustrates how people view moral decisions made by machine intelligence such as self-driving cars. For example, should a self-driving car hit a pregnant woman or swerve into a wall and kill its four passengers? There is also the moral responsibility for harm caused by self-driving cars. Suppose, for example, that there is an accident between an autonomous car and a conventional car. This will not only be followed by legal proceedings; it will also lead to a debate about who is morally responsible for what happened (Nyholm & Smids, 2016).

A lot of uncertainty is involved with self-driving cars. Nyholm and Smids illustrate this with a collision scenario involving a truck and an elderly pedestrian. First, the self-driving car cannot acquire certain knowledge about the truck’s trajectory, its speed at the time of collision, and its actual weight. Second, focusing on the self-driving car itself, in order to calculate the optimal trajectory, the car needs to have perfect knowledge of the state of the road, since any slipperiness of the road limits its maximal deceleration. Finally, if we turn to the elderly pedestrian, we can again easily identify a number of sources of uncertainty. Using facial recognition software, the self-driving car can perhaps estimate his age with some degree of precision and confidence, but it can merely guess his actual state of health (Nyholm & Smids, 2016).
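The effect of road slipperiness on deceleration can be made concrete with a small calculation. The sketch below is not taken from Nyholm and Smids; it uses the standard idealised braking formula d = v² / (2μg), and the friction values (roughly 0.7 for dry and 0.4 for wet asphalt) are illustrative assumptions. It shows how an uncertain friction estimate turns into a range of possible stopping distances rather than a single number.

```python
G = 9.81  # gravitational acceleration in m/s^2


def braking_distance(speed_kmh: float, friction: float) -> float:
    """Idealised stopping distance in metres once the brakes are fully applied.

    Uses d = v^2 / (2 * mu * g). Reaction time, road slope and brake fade
    are ignored, so real distances will be longer.
    """
    if friction <= 0:
        raise ValueError("friction coefficient must be positive")
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms ** 2 / (2 * friction * G)


def braking_distance_range(speed_kmh: float, friction_low: float, friction_high: float):
    """Return (best case, worst case) distance for an uncertain friction estimate."""
    best = braking_distance(speed_kmh, friction_high)   # grippy road
    worst = braking_distance(speed_kmh, friction_low)   # slippery road
    return best, worst


if __name__ == "__main__":
    # Assumed friction interval: 0.4 (wet) to 0.7 (dry). Illustrative only.
    best, worst = braking_distance_range(50, 0.4, 0.7)
    print(f"At 50 km/h the car stops after between {best:.1f} m and {worst:.1f} m")
```

A car that cannot tell which end of the interval applies has to plan its trajectory for the worst case, which is one way the uncertainty described above enters the decision.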

The decision-making about self-driving cars is more realistically represented as being made by multiple stakeholders: ordinary citizens, lawyers, ethicists, engineers, risk assessment experts, car manufacturers, government, etc. These stakeholders need to negotiate a mutually agreed-upon solution (Nyholm & Smids, 2016). This report focuses on the relevant factors that contribute to the acceptance of self-driving cars, with the main focus on the private end-user. It takes into account ethical theories (utilitarianism, Kantianism, virtue ethics, deontology, ethical pluralism, ethical absolutism and ethical relativism), moral and legal responsibility, safety, security, privacy and the perspective of the private end-user.

Survey

https://doi.org/10.1016/j.tranpol.2018.03.004

doi: 10.1109/TEM.2018.2877307.

https://doi.org/10.1007/978-3-319-58530-7_1

Ethical theories

A key feature of self-driving cars is that the decision-making process is taken away from the person in the driver’s seat and instead bestowed upon the car itself. From this several ethical dilemmas emerge, one of which is essentially a version of the trolley problem. When a collision is unavoidable, it is important to define the desired behaviour of the self-driving car. It might be the case that in such a scenario the car has to choose whether to prioritize the life and health of its passengers or of the people outside the vehicle. In real life such cases are relatively rare [reference 1], but the ethical theory underlying that decision may well have an impact on the acceptance of the technology. Self-driving vehicles that decide who might live and who might die are essentially in a scenario where some moral reasoning is required in order to produce the best outcome for all parties involved. Given that cars do not seem capable of moral reasoning, programmers must choose for them the right ethical setting on which to base such decisions. However, ethical decisions are often not clear-cut. Imagine driving at high speed in a self-driving car when the car in front suddenly comes to a halt. The self-driving car can either brake hard as well, possibly harming the passengers, or it can swerve into a motorcyclist, possibly harming them. One could argue that since the motorcyclist is not at fault, the self-driving car should prioritize their safety. After all, the passenger made the decision to enter the car, which puts at least some responsibility on them. On the other hand, people who might buy the self-driving car will expect not to be put in avoidable danger. No matter the choice of the car, and the underlying ethical theory that it is (possibly) based on, the behaviour and decision-making of the car is more likely to be socially accepted if it can be morally justified.
Therefore, this section first highlights some possible ethical theories and then discusses some relevant aspects that surround the implementation of any ethical theory.

- Different ethical theories explanation

- Explicitly choose ethical setting vs neural nets (which would effectively choose one, being a black box)

- Ethical knob and letting the user set the ethical setting

- Game theory

- Relevance of ethical theories

- Ethical theories that are bad for users might not be popular

- Knowing the theory might change people’s behaviours (pedestrians who know the car will prioritize them might not pay as much attention; a perfect car might do everything in its power to avoid collision with them)

Responsibility

One very important factor in the development and sale of automated vehicles is the question of who is responsible when things go wrong. In this section we will look in detail at all factors involved and come up with certain solutions. As brought up by Marchant and Lindor, there are three questions that need to be analysed. Firstly, who will be liable in the case of an accident? Secondly, how much weight should be given to the fact that autonomous vehicles are supposed to be safer than conventional vehicles in determining which of the people involved should be held responsible? Lastly, will a higher percentage of crashes be caused by a manufacturing ‘defect’, compared to crashes with conventional vehicles, where the cause is usually attributed to driver error (Marchant & Lindor, 2012)?

The manufacturer

It would be obvious to say the manufacturer of the car is responsible. They designed the car, so if it makes a mistake, they are to blame. The manufacturer could have known, or did know, beforehand about any flaw in the system that might cause the car to crash. If they then sold the car anyway, there is no question that they are responsible. However, holding the manufacturer responsible in every case would immensely discourage anyone from producing these autonomous cars. Especially with technology as complex as autonomous driving systems, it would be nearly impossible to make it flawless (Marchant & Lindor, 2012). In order to encourage companies to manufacture autonomous vehicles while still holding them responsible, a balance needs to be found between the two. This is necessary because removing all liability would also have undesirable effects (Hevelke & Nida-Rümelin, 2015).

Safety

One of the main factors deciding whether self-driving cars will be accepted is their safety. After all, who would leave their life in the hands of another entity, knowing it is not completely safe? Yet almost everyone boards buses and planes without doubt or fear. Would we be able to do the same with self-driving cars? Cars have become more and more autonomous over the last decades. However, self-driving cars will operate in unstructured environments, which adds many unexpected situations (Wagner & Koopman, 2015).

Software

Traffic behaviour

The car’s safety will be determined by the way it is programmed to act in traffic. Will it stop for every pedestrian? If it does, pedestrians will know and cross roads wherever they want. Will it adopt the driving style of humans? How does the driving behaviour of automated vehicles influence trust and acceptance?

In one study, two different designs were presented to a group of participants. One was programmed to simulate a human driver, whilst the other communicated with its surroundings so that it could drive without stopping or slowing down. The study showed no significant difference in trust between the two automated vehicles. However, it did show that trust grew the longer the study continued (Oliveira, Proctor, Burns, & Birrell, 2019). It can therefore be said that driving style does not necessarily influence acceptance; rather, the overall safety of the driving behaviour determines it.

Errors

Despite what we may think, humans are quite capable of avoiding car crashes. Computers, by contrast, inevitably fail at some point; think about how often your laptop freezes. A response that is delayed by even a fraction of a second can have disastrous consequences. Software for self-driving vehicles must therefore be built in a fundamentally different way. This is one of the major challenges currently holding back the development of fully automated cars. Automated aircraft, in contrast, are already in use. However, software on automated aircraft is much less complex, since aircraft have to deal with fewer obstacles and almost no other vehicles.

Hackers

Vs humans

Self-driving cars hold the potential of eliminating all accidents, or at least those caused by inattentive drivers (Wagner & Koopman, 2015).

The city

Trust

Questions of whether or not to trust a new technology are often answered by testing (Wagner & Koopman, 2015).

Security

Privacy

Perspective of private end-user

Additional features important for users

While many people look positively towards the introduction of self-driving cars (SDCs), fewer people are willing to buy one. Also, many people do not want to invest more money in an SDC than they currently do in a conventional car. Therefore, a car sharing scheme is preferred by many. Many people also say that they would still have concerns about riding in an SDC and that they want to be able to intervene manually, or take over full control, whenever they want or need to.

Most important benefits or concerns (in order of relevance).

- An SDC could solve transport problems for older or disabled people.

- People are able to do other things while riding in an SDC.

- People are concerned about legal issues caused by SDCs.

- People are concerned about hackers’ attacks on SDCs.

Strategic implications (in order of relevance).

- A feature that lets the user take over full control should be implemented. Female and older users showed the highest agreement. Pros: people can still enjoy the pleasure of driving manually and do not lose the feeling of freedom. Cons: the total efficiency of driving will decrease. Human drivers will most likely drive less efficiently, even if they do not speed. If every car drives autonomously, the cars can communicate and adapt to each other earlier and better, whereas SDCs cannot predict what a human driver will do. It is also likely that more accidents will take place under manual control, because SDCs will most likely be safer than human drivers.

- Free test rides should be offered to people.

- Salesmen should offer comprehensive information in the showroom.

(König & Neumayr, 2017b)

As mentioned above, more people are willing to accept SDCs when they do not have to buy one themselves, which suggests that car sharing could become the new normal. The idea is that a user orders a car, for example with a mobile app, and the car drives to the user by itself. This is only possible if SDCs reach the highest level of autonomy: a car that requires a person to intervene when things go wrong cannot drive without a passenger. This conflicts with the finding, also mentioned above, that people accept fully autonomous cars less readily than cars that allow manual intervention.

Ridesharing poses further problems. Passengers who do not know each other rarely travel from the same origin to the same destination, and people may not feel comfortable travelling with strangers. People are therefore more willing to accept the idea when they can order a ride for themselves that does not stop to pick up others; the car becomes available again once the ride is finished. This requires more cars on the road than shared rides do, so it solves only part of the traffic problem: the same number of people still need to travel at the same time as now, and buses and trains will be used less because cars become more accessible.

As the world population increases, ridesharing may become necessary. One solution would be to make a private ride more expensive. Then only part of the population (wealthy businessmen, for example) would use this option, and the majority would share rides with others. The mere existence of this option, and the possibility of taking a private ride when it is really needed, could make the scheme easier for people to accept. One benefit of not owning cars is that parking spots within cities would no longer be needed: the cars could be deployed from a base outside the city and parked there when not in use.

References used in report

Nyholm, S., & Smids, J. (2016). The Ethics of Accident-Algorithms for Self-Driving Cars: an Applied Trolley Problem? Ethical Theory and Moral Practice, 1275–1289.

Hevelke, A., & Nida-Rümelin, J. (2015). Responsibility for Crashes of Autonomous Vehicles: An Ethical Analysis. Science and Engineering Ethics, 21(3), 619–630. https://doi.org/10.1007/s11948-014-9565-5

Marchant, G. E., & Lindor, R. A. (2012). The Coming Collision Between Autonomous Vehicles and the Liability System. Santa Clara Law Review, 52(4), 12–17. Retrieved from http://digitalcommons.law.scu.edu/lawreview

Wagner M., Koopman P. (2015) A Philosophy for Developing Trust in Self-driving Cars. In: Meyer G., Beiker S. (eds) Road Vehicle Automation 2. Lecture Notes in Mobility. Springer, Cham. https://doi.org/10.1007/978-3-319-19078-5_14

Oliveira, L., Proctor, K., Burns, C. G., & Birrell, S. (2019). Driving Style: How Should an Automated Vehicle Behave? Information, 10(6), 219. MDPI AG. Retrieved from http://dx.doi.org/10.3390/info1006021

Shladover, S. (2016). THE TRUTH ABOUT “SELF-DRIVING” CARS. Scientific American, 314(6), 52-57. doi:10.2307/26046990

26 References

Greenblatt, N. A. (2016). Self-driving cars and the law. IEEE Spectrum, 46-51. doi:10.1109/MSPEC.2016.7419800

Holstein, T., Dodig-Crnkovic, G., & Pelliccione, P. (2018). Ethical and Social Aspects of Self-Driving Cars. Retrieved from https://arxiv.org/abs/1802.04103

Nielsen, T. A., & Haustein, S. (2018). On sceptics and enthusiasts: What are the expectations towards self-driving cars? Transport Policy, 49-55. Retrieved from https://doi.org/10.1016/j.tranpol.2018.03.004

Stilgoe, J. (2018). Machine learning, social learning and the governance of self-driving cars. Social Studies of Science, 25-56. Retrieved from https://doi.org/10.1177/0306312717741687

Wagner, M., & Koopman, P. (2015). A Philosophy for Developing Trust in Self-driving Cars. Road Vehicle Automation 2, 163-171. Retrieved from https://link.springer.com/chapter/10.1007/978-3-319-19078-5_14

Nyholm, S., & Smids, J. (2016). The Ethics of Accident-Algorithms for Self-Driving Cars: an Applied Trolley Problem? Ethical Theory and Moral Practice, 1275–1289.

Nyholm, S. R. (2018). The ethics of crashes with self-driving cars: a roadmap I.

Chandiramani, J. R. (2017). Decision Making under Uncertainty for Automated Vehicles in Urban Situations. Master of Science Thesis.

Ibo van de Poel, Lambèr Royakkers. (2011). Ethics, Technology, and Engineering an introduction. Wiley-Blackwell.

Sam Levin, Nicky Woolf. (2016). Tesla driver killed while using autopilot was watching Harry Potter, witness says. The Guardian. https://www.theguardian.com/technology/2016/jul/01/tesla-driver-killed-autopilot-self-driving-car-harry-potter

Alexander Hevelke, Julian Nida-Rümelin. (2014). Responsibility for Crashes of Autonomous Vehicles: An Ethical Analysis. Science and Engineering Ethics.

Joshua Greene. (2013). Moral Tribes.

Noah J. Goodall. (2016). Ethical Decision Making During Automated Vehicle Crashes

Bonnefon, J.-F., Shariff, A., & Rahwan, I. (2016). The Social Dilemma of Autonomous Vehicles. Science, 1573-1576.

Katarzyna de Lazari-Radek, Peter Singer. Utilitarianism: A Very Short Introduction (2017), p.xix, ISBN 978-0-19-872879-5.

Hevelke, A. & Nida-Rümelin, J. Sci Eng Ethics (2015) 21: 619. https://doi.org/10.1007/s11948-014-9565-5

Shladover, S. (2016). THE TRUTH ABOUT “SELF-DRIVING” CARS. Scientific American, 314(6), 52-57. doi:10.2307/26046990

Duranton, G. (2016). Transitioning to Driverless Cars. Cityscape, 18(3), 193-196. Retrieved February 7, 2021, from http://www.jstor.org/stable/26328282

Cox, W. (2016). Driverless Cars and the City: Sharing Cars, Not Rides. Cityscape, 18(3), 197-204. Retrieved February 7, 2021, from http://www.jstor.org/stable/26328283

Stone, J. (2017). Who’s at the wheel: Driverless cars and transport policy. ReNew: Technology for a Sustainable Future, (139), 38-41. Retrieved February 7, 2021, from https://www.jstor.org/stable/90002086

Frey, T. (2012). DEMYSTIFYING THE FUTURE: Driverless Highways: Creating Cars That Talk to the Roads. Journal of Environmental Health, 75(5), 38-40. Retrieved February 7, 2021, from http://www.jstor.org/stable/26329536

Focussed on acceptance of the technology:

König, M., & Neumayr, L. (2017b). Users’ resistance towards radical innovations: The case of the self-driving car. Transportation Research Part F: Traffic Psychology and Behaviour, 44, 42–52. https://doi.org/10.1016/j.trf.2016.10.013

Nees, M. A. (2016). Acceptance of Self-driving Cars. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 60(1), 1449–1453. https://doi.org/10.1177/1541931213601332

S. Karnouskos, "Self-Driving Car Acceptance and the Role of Ethics," in IEEE Transactions on Engineering Management, vol. 67, no. 2, pp. 252-265, May 2020, doi: 10.1109/TEM.2018.2877307.

Lee C., Ward C., Raue M., D’Ambrosio L., Coughlin J.F. (2017) Age Differences in Acceptance of Self-driving Cars: A Survey of Perceptions and Attitudes. In: Zhou J., Salvendy G. (eds) Human Aspects of IT for the Aged Population. Aging, Design and User Experience. ITAP 2017. Lecture Notes in Computer Science, vol 10297. Springer, Cham. https://doi.org/10.1007/978-3-319-58530-7_1

Raue, M., D’Ambrosio, L. A., Ward, C., Lee, C., Jacquillat, C., & Coughlin, J. F. (2019). The Influence of Feelings While Driving Regular Cars on the Perception and Acceptance of Self-Driving Cars. Risk Analysis, 39(2), 358–374. https://doi.org/10.1111/risa.13267

Summaries References

The ethics of crashes with self‐driving cars: A roadmap, I

Self‐driving cars hold out the promise of being much safer than regular cars. Yet they cannot be 100% safe. Accordingly, they need to be programmed for how to deal with crash scenarios. Should cars be programmed to always prioritize their owners, to minimize harm, or to respond to crashes on the basis of some other type of principle? The article first discusses whether everyone should have the same “ethics settings.” Next, the oft‐made analogy with the trolley problem is examined. Then follows an assessment of recent empirical work on lay‐people's attitudes about crash algorithms relevant to the ethical issue of crash optimization. Finally, the article discusses what traditional ethical theories such as utilitarianism, Kantianism, virtue ethics, and contractualism imply about how cars should handle crash scenarios.

It might seem like a good idea to always hand over control to a human driver in any accident scenario. However, typical human reaction‐times are too slow for this to always be a good idea (Hevelke & Nida‐Rümelin, 2015). Jason Millar argues that a person's car should function as a “proxy” for their ethical outlook. People should therefore be able to choose their own ethics settings (Millar, 2014; see also Sandberg & Bradshaw‐Martin, 2013). Similarly, Giuseppe Contissa and colleagues argue that self‐driving cars should be equipped with an “ethical knob,” so that whoever is currently using the car can set it to their preferred settings (Contissa, Lagioia, & Sartor, 2017). Jan Gogoll and Julian Müller, in contrast, argue that we all have self‐interested reasons to want everyone's cars to be programmed according to the same settings (Gogoll & Müller, 2017). One advantage of giving people a certain degree of choice here is that this might make it easier to hold them responsible for any bad outcomes that crashes involving their vehicles might give rise to (Sandberg & Bradshaw‐Martin, 2013; cf. Lin, 2014).
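To make the idea of an “ethical knob” more tangible, the sketch below shows one way such a setting could enter a decision rule. It is an illustration only, not the model proposed by Contissa and colleagues: the single `knob` value between 0 and 1, the weighted-sum formula, the function names and the harm estimates are all assumptions made for this example.

```python
def weighted_harm(knob: float, passenger_harm: float, third_party_harm: float) -> float:
    """Combine two harm estimates using a hypothetical knob setting.

    knob = 1.0 counts only harm to passengers (fully self-protective),
    knob = 0.0 counts only harm to third parties (fully altruistic),
    knob = 0.5 weighs both equally (impartial).
    """
    if not 0.0 <= knob <= 1.0:
        raise ValueError("knob must lie between 0 and 1")
    return knob * passenger_harm + (1.0 - knob) * third_party_harm


def pick_manoeuvre(knob: float, manoeuvres: dict) -> str:
    """Return the name of the manoeuvre with the lowest weighted harm.

    `manoeuvres` maps a name to a (passenger_harm, third_party_harm) pair.
    """
    return min(manoeuvres, key=lambda name: weighted_harm(knob, *manoeuvres[name]))


# Made-up harm estimates on a 0..1 scale, purely for illustration.
options = {
    "brake": (0.6, 0.1),   # risky for passengers, safe for others
    "swerve": (0.1, 0.7),  # safe for passengers, risky for others
}

for setting in (0.0, 0.5, 1.0):
    print(setting, pick_manoeuvre(setting, options))
```

With these made-up numbers the same situation leads to different manoeuvres depending on the setting, which is exactly why Gogoll and Müller's argument for uniform settings matters.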

One of the questions this raises is whether the vast literature on the trolley problem might be a useful source of ideas about how to deal with the ethics of crashing self‐driving cars. Together with Jilles Smids, Nyholm has put forward three reasons for being skeptical about relying very heavily on the trolley problem literature here (Nyholm & Smids, 2016). Firstly, in the trolley literature, we are typically asked to imagine that the only morally relevant factors are a very small set of factors. Any bigger and more complex sets of considerations are imagined away. Secondly, in most trolley discussions, we are asked to set all questions of moral and legal responsibility aside, and only focus on the choice between the one and the five. In actual traffic ethics, we cannot ignore questions about responsibility. Thirdly, in trolley discussions, a fully deterministic scenario is imagined. It is assumed that we know with certainty what the outcomes of our available choices would be. In contrast, when we are prospectively programming self‐driving cars for how to deal with accident scenarios, we do not know what scenarios they will face. We must make risk‐assessments (Nyholm & Smids, 2016). Empirical ethics: when surveyed, many people say that self‐driving cars should minimize overall harm. However, when surveyed about what kinds of cars they themselves would want to use, people tend to favor cars that would save them in an accident scenario. People thus appear to have inconsistent or paradoxical attitudes: many want others to have harm‐minimizing cars, while themselves wanting cars that would favor them.

A “top‐down” approach is also possible. That is, we can consider what utilitarians (or consequentialists more broadly), Kantians (or deontologists more broadly), virtue ethicists, or contractualists would recommend regarding this topic. Utilitarian ethics is about maximizing overall happiness, while minimizing overall suffering. Kantian ethics is about adopting a set of basic principles (“maxims”) fit to serve as universal laws, in accordance with which all are treated as ends‐in‐themselves and never as mere means. Virtue ethics is about cultivating and then fully realizing a set of basic virtues and excellences. Contractualist ethics is about formulating guidelines people would be willing to adopt as a shared set of rules, based on nonmoral or self‐interested reasons, in a hypothetical scenario where they would be making an unforced agreement about how to live together. A utilitarian would be mindful of the fact that people might be scared of taking rides in “utilitarian” cars, instead preferring cars programmed to prioritize their passengers. The lesson from Kantian ethics might be that we should choose rules we would be willing to have as universal laws applying equally to all, so as to make everything fair and not give some people an unjustified advantage in crash‐scenarios. It is hard to come up with any virtue ethical ideas about how self‐driving cars should crash (cf. Gurney, 2016). But virtue ethics might help when we think about the ethics of automated driving more generally. Perhaps a lesson from a virtue ethical perspective is that we should try to design and program cars in ways that help to make people act carefully and responsibly when they use self‐driving cars.

The Ethics of Accident-Algorithms for Self-Driving Cars: an Applied Trolley Problem?

We identify three important ways in which the ethics of accident-algorithms for self-driving cars and the philosophy of the trolley problem differ from each other. These concern: (i) the basic decision-making situation faced by those who decide how self-driving cars should be programmed to deal with accidents; (ii) moral and legal responsibility; and (iii) decision-making in the face of risks and uncertainty.

According to Frances Kamm, the basic philosophical problem is this: why are certain people, using certain methods, morally permitted to kill a smaller number of people to save a greater number, whereas others, using other methods, are not morally permitted to kill the same smaller number to save the same greater number of people? (Kamm 2015) For self-driving cars, the morally relevant decisions are prospective decisions, or contingency-planning, on the part of human beings. In contrast, in the trolley cases, a person is imagined to be in the situation as it is happening and to make a split-second decision. This is unlike the prospective decision-making, or contingency-planning, we need to engage in when we think about how autonomous cars should be programmed to respond to different types of scenarios we think may arise. The decision-making about self-driving cars is more realistically represented as being made by multiple stakeholders, for example ordinary citizens, lawyers, ethicists, engineers, risk-assessment experts, car-manufacturers, etc. These stakeholders need to negotiate a mutually agreed-upon solution. In one case, the morally relevant decision-making is made by multiple stakeholders, who are making a prospective decision about how a certain kind of technology should be programmed to respond to situations it might encounter. And there are no limits on what considerations, or what numbers of considerations, might be brought to bear on this decision. In the other case, the morally relevant decision-making is done by a single agent who is responding to the immediate situation he or she is facing, and only a very limited number of considerations are taken into account.

Responsibility: Suppose, for example, there is a collision between an autonomous car and a conventional car, and though nobody dies, people in both cars are seriously injured. This will surely not only be followed by legal proceedings. It will also naturally, and sensibly, lead to a debate about who is morally responsible for what occurred. Forward-looking responsibility is the responsibility that people can have to try to shape what happens in the near or distant future in certain ways. Backward-looking responsibility is the responsibility that people can have for what has happened in the past, either because of what they have done or what they have allowed to happen (Van de Poel 2011). Applied to risk management and the choice of accident-algorithms for self-driving cars, both kinds of responsibility are highly relevant.

Uncertainties: first, the self-driving car cannot acquire certain knowledge about the truck’s trajectory, its speed at the time of collision, and its actual weight. Second, focusing on the self-driving car itself, in order to calculate the optimal trajectory, the self-driving car needs (among other things) to have perfect knowledge of the state of the road, since any slipperiness of the road limits its maximal deceleration. Finally, if we turn to the elderly pedestrian, again we can easily identify a number of sources of uncertainty. Using facial recognition software, the self-driving car can perhaps estimate his age with some degree of precision and confidence, but it can merely guess his actual state of health.

Responsibility for Crashes of Autonomous Vehicles: An Ethical Analysis

Autonomous cars raise not only legal but also moral questions. Patrick Lin is concerned that any security gain will constitute a trade-off with human lives. The second question is whether it would be morally acceptable to put liability on the user based on a duty to pay attention to the road and traffic and to intervene when necessary to avoid accidents. This should depend on whether or not the driver would ever have a chance to intervene. In this article, two options are discussed: a driver with a duty to intervene, or a driver with no duty (and thus no control). For the first option, if the driver never had a real chance of intervening, he should not be held responsible. However, this holds only for the new cars, and with a duty to intervene they would still not be accessible to blind people, among others. For the second option, where the driver has no control, it makes more sense to hold them accountable. However, this accountability would best take the form of some kind of tax or insurance. Manufacturers should not be freed of their liability completely (take the Ford Pinto case as an example).

Ethical decision making during automated vehicle crashes

Three arguments were made in this paper: automated vehicles will almost certainly crash, even in ideal conditions; an automated vehicle’s decisions preceding certain crashes will have a moral component; and there is no obvious way to effectively encode human morality in software. A three-phase strategy for developing and regulating moral behavior in automated vehicles was proposed, to be implemented as technology progresses. The first phase is a rationalistic moral system for automated vehicles that will take action to minimize the impact of a crash based on generally agreed upon principles, e.g. injuries are preferable to fatalities. The second phase introduces machine learning techniques to study human decisions across a range of real-world and simulated crash scenarios to develop similar values. The rules from the first approach remain in place as behavioral boundaries. The final phase requires an automated vehicle to express its decisions using natural language, so that its highly complex and potentially incomprehensible-to-humans logic may be understood and corrected.
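The interaction between the first two phases, where learned preferences operate inside rule-based boundaries, can be sketched as follows. This is a hedged illustration rather than the paper's implementation: the severity scale, the `Option` record and the `learned_score` field (standing in for whatever a phase-two learning system would output) are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Coarse outcome ranking for the phase-one rule (assumed scale)."""
    NONE = 0
    PROPERTY_DAMAGE = 1
    INJURY = 2
    FATALITY = 3


@dataclass(frozen=True)
class Option:
    name: str
    worst_outcome: Severity
    learned_score: float  # hypothetical phase-two preference, higher is better


def choose(options):
    """Pick an option using phase-one rules as a boundary for phase-two scores.

    Phase one: discard every option whose worst outcome is more severe than
    the mildest one available (e.g. injuries are preferable to fatalities).
    Phase two: among the remaining options, take the highest learned score.
    """
    if not options:
        raise ValueError("at least one option is required")
    mildest = min(o.worst_outcome for o in options)
    allowed = [o for o in options if o.worst_outcome == mildest]
    return max(allowed, key=lambda o: o.learned_score)


candidates = [
    Option("swerve left", Severity.FATALITY, learned_score=0.9),
    Option("brake hard", Severity.INJURY, learned_score=0.4),
    Option("swerve right", Severity.INJURY, learned_score=0.6),
]
print(choose(candidates).name)
```

Here the learned score can only break ties between options the rule already permits, so a high score on "swerve left" never overrides the preference for injuries over fatalities.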

The social dilemma of autonomous vehicles

When it becomes possible to program decision-making based on moral principles into machines, will self-interest or the public good predominate? In a series of surveys, Bonnefon et al. found that even though participants approve of autonomous vehicles that might sacrifice passengers to save others, respondents would prefer not to ride in such vehicles (see the Perspective by Greene). Respondents would also not approve regulations mandating self-sacrifice, and such regulations would make them less willing to buy an autonomous vehicle.

The truth about ‘self-driving’ cars

They are coming, but not in the way you may have been led to think. Self-driving cars have many issues: taking safe turns, changing road surfaces, snow and ice, and avoiding traffic cops, crossing guards and emergency vehicles. And automatic stopping for pedestrians will make people rather walk or take the subway.

We have a very unrealistic expectation of self driving cars. They will not happen the way you have been told.

We are currently only arriving at level 3 cars. The CEO of Nissan said fully automated cars (level 5) will be on the road by 2020. This is not true; level 4 cars may arrive in the next decade. Automated driving is much more complex than we think. Despite the popular perception, human drivers are remarkably capable of avoiding crashes. Consider how often your laptop freezes or is slow. In a car, such failures will inevitably lead to crashes, so there is a major software problem.

Software on aircraft is much less complex, since aircraft have to deal with fewer obstacles and other vehicles. Testing automated cars will also pose many problems: statistically, a lot of people will have to be involved in crashes over a long period of time. There is also a financial boundary, since the cars must stay affordable for the public. Some people think AI will give us self-driving cars. The problem with that is that AI is non-deterministic: two cars with the same assembly may, after a year, have automation systems that behave differently. It is out of our control.

According to the writer, fully automated cars will not be here until 2075. In level 3 cars there is a problem with the driver zoning out. This problem is so hard that some car manufacturers will not even try level 3. So outside of traffic jam assistants, level 3 will probably never happen. Level 4 will happen eventually, but only on certain parts of roads and in certain weather conditions. These scenarios might not sound as futuristic as having your own personal electronic chauffeur, but they have the benefit of being possible and soon.

Transitioning to driverless cars

Despite some nuances, the future looks mostly bright. The questions are how to get there, and what the transition to a full system of driverless cars will look like. A lot of the discussion so far has focused on insurance and ethical issues. Who is responsible in case of accidents? If the computer has to choose a victim in a collision, who will it be, its own passenger or a passenger in another car? These questions are interesting, but it is hard to imagine they will be major stumbling blocks. New technologies have brought new risks for many years, and ways have been found to spread those risks and define new forms of protection and liability. The ethical question probably makes for interesting debates in an introduction to ethics class at a university, but it is unlikely to have much practical relevance. Driverless cars will be much safer than cars are now.

A good case can be made that the key transitional problems will be instead about the political economy of the regulation of driverless cars and the cohabitation between driverless cars and cars driven by human beings. For car producers or would-be car producers, two strategies are possible. The first is incremental and consists of making cars gradually less reliant on drivers. That has been the strategy of most incumbent car producers. The incremental strategy presents one major problem, however. Partially driverless cars may be safer, but the true timesaving benefits of driverless cars will occur only when cars become completely driverless. With this scenario, the transition is likely to be extremely long, and how the last step about getting rid of the wheel will take place is unclear.

The alternative strategy is rupture and the direct development of cars without a steering wheel; that is the Google, Inc. strategy. It is an appealing but difficult proposition on several counts. It will require maximum software sophistication right from the start. If anything, processes will get easier with more driverless cars. Some technical issues seem extremely tricky to resolve. Incumbent car manufacturers that are betting on incremental change, not cars without wheels right from the start, will probably do everything they can to prevent fully driverless cars from being able to operate.

Realizing that its radical innovation will be a hard sell, Google appears to want to make it even more radical. If Google cars cannot operate in existing cities, perhaps new cities need to be created for them. That probably sounds like a mad idea to many, but history teaches us that it may not be as crazy as it sounds. What was possibly the first suburb of America, the Main Line of Philadelphia, Pennsylvania, was developed by rail entrepreneurs who realized that developing suburbs was much more profitable than operating railways.

Driverless Cars and the City: Sharing Cars, Not Rides

The world of driverless cars heralds revolutionary changes, but for cities (metropolitan areas) the process will be evolutionary. No “Big Bang” will happen, but it will slowly evolve. Driverless cars will not significantly impact urban form, but will expand opportunity and quality of life for the disabled and other people who are unable to drive.

Who’s at the wheel: Driverless cars and transport policy

Many of the claims for the benefits of driverless technologies rely on the complete transformation of the existing vehicle fleet. But the transition will not be smooth or uniform: winners and losers in the competition between the different interest groups will depend on many factors.

Freeways are likely to be the first spaces in which the new vehicles will be able to operate. In any case, problems of congestion and competition for space at any popular destination will not be resolved. The ambition is to allow cars, bikes and pedestrians to share road space much more safely than they do today, with the effect that more people will choose not to drive. But, if a driverless car or bus will never hit a jaywalker, what will stop pedestrians and cyclists from simply using the street as they please?

Some analysts are even predicting that the new vehicles will be slower than conventional driving, partly because the current balance of fear will be upset. While this might be attractive to cyclists, will it affect the marketability of Google’s new products? With huge reserves of cash and consequent lobbying power, Google and its ilk will be in a strong position to demand concessions from governments and road authorities. You can just imagine the pitch: we can save you billions on public transport operations, but we need fences to keep bikes and pedestrians out of the way of our vehicles in busy urban centres. Lost in the enthusiasm for the new is the simple reality of the limited availability of urban space. New technologies of driverless trains may reduce costs and allow us to improve the quality of the service, but only if that is the focus of investment and innovation.

I would urge readers of ReNew to turn their minds to the real alternative technologies we need in urban transport. Rather than follow the individualist model which directs our attention to the technology of the vehicle, let’s turn our attention to the ‘technology of the network’. How can we build on the insights of the Europeans and Canadians and use the potential of IT and electronics to build better collective transport systems that connect all of us to the life of the city without consuming all the space we need to live and grow?

Driverless Highways: Creating Cars That Talk to the Roads

The art of road building has been improving since the Roman Empire. The highways of today remain little more than dumb surfaces, with no data flowing between vehicles and the road. China already restricts the number of vehicles that can be licensed in Shanghai and Beijing. Going driverless brings some exciting new options. Driverless cars will be a very disruptive technology. To compensate for the loss of a driver, vehicles will need to become more aware of their surroundings. With cameras you create a symbiotic relationship that is far different from the human-to-road relationship, which is largely emotion based. An intelligent car coupled with an intelligent road is a powerful force.

- Lane compression

- Distance compression

- Time compression

On-demand transportation. All car parts and components need to be designed to be more durable and longer lasting. Shifting from driver to rider: fancier dashboards, movies, music and massage interfaces. China doesn’t need more cars, it needs more transportation.

Conclusion: We all love to drive, but humans are the inconsistent variable in this demanding area of responsibility. Driving requires constant vigilance, constant alertness, and constant involvement. Once we take the driver out of the equation, however, we solve far more problems than the wasted time and energy needed to pilot the vehicle. But vehicle design is only part of the equation. Without reimagining the way we design and maintain highways, driverless cars will only achieve a fraction of their true potential.