PRE2018 3 Group11: Difference between revisions

| (211 intermediate revisions by 4 users not shown) | |||

| Line 10: | Line 10: | ||

</div> | </div> | ||

= Organization | = Introduction = | ||

In the following sections we document our decisions and processes for the course “Project Robots Everywhere (0LAUK0)”. The documentation includes organizational information, insight into the process of choosing a topic, the design process of our solution and its viability and limitations. | |||

<big>'''Organization'''</big> | |||

The group composition, deliverables, milestones, planning and task division can be found on the [[PRE2018_3_Group11:Organization | organization]] page. | The group composition, deliverables, milestones, planning and task division can be found on the [[PRE2018_3_Group11:Organization | organization]] page. | ||

<big>'''Brainstorm'''</big> | |||

To explore possible subjects for this project, a [[PRE2018_3_Group11:Brainstorm | brainstorm session]] was held. Out of the various ideas, the Follow-Me Drone was chosen to be our subject of focus. | To explore possible subjects for this project, a [[PRE2018_3_Group11:Brainstorm | brainstorm session]] was held. Out of the various ideas, the Follow-Me Drone was chosen to be our subject of focus. | ||

= Problem Statement = | = Problem Statement = | ||

People who are affected by the mental disorder DTD (developmental topographical disorientation) suffer from poor navigational skills, which greatly limits their independence and causes seemingly trivial daily tasks—like finding the way to work—to become difficult hurdles in their life. | |||

Because many people affected by this disorder cannot rely on map-based navigation, we currently see a lack of solutions that aim to improve the quality of life of DTD patients and of other people whose poor navigational skills put them at a disadvantage in daily life. | |||

While the number of people affected by DTD is still unknown, current estimates based on small-sample studies say that 1-2% of the world population might be affected. This underlines the urgency and gravity of this issue [https://doi.org/10.1007/s00221-010-2256-9] [http://nautil.us/issue/32/space/the-woman-who-got-lost-at-home][https://www.theatlantic.com/health/archive/2015/05/when-the-brain-cant-make-its-own-maps/392273/]. | |||

== Background on DTD == | |||

The ability to navigate around one’s environment is a difficult mental task whose complexity is typically dismissed. By ''difficult'', we are not referring to tasks such as pointing north while blindfolded, but rather to much simpler ones, such as following a route drawn on a map or recalling the path to one’s favourite café. Such tasks require complex brain activity and fall under the broad skill of ‘navigation’, which is present in humans at diverse skill levels. People whose navigational skills fall on the lower end of the scale are slightly troubled in their everyday life: it might take them substantially longer than others to find their way to work through memory alone, or they might get lost in new environments every now and then. | |||

Some navigational skills can be traced back to certain distinct cognitive processes, which means that a lesion in specific brain regions can cause a human’s wayfinding abilities to decrease dramatically. This was found in certain case studies with patients that were subject to brain damage, and labeled “Topographical Disorientation” (TD), which can affect a broad range of navigational tasks [https://doi.org/10.1093/brain/122.9.1613]. Within the last decade, cases started to show up in which people showed similar symptoms to patients with TD, without having suffered any brain damage. This disorder in normally developed humans was termed “Developmental Topographical Disorientation” (DTD) [https://doi.org/10.1016/j.neuropsychologia.2008.08.021], which, as was recently discovered, might affect 1-2% of the world's population (this is an estimate based on informal studies and surveys) [https://doi.org/10.1007/s00221-010-2256-9][http://nautil.us/issue/32/space/the-woman-who-got-lost-at-home][https://www.theatlantic.com/health/archive/2015/05/when-the-brain-cant-make-its-own-maps/392273/]. | |||

= Design concept = | |||

To make the everyday life of (D)TD patients easier, our proposed solution is to use a drone; we call it the “follow-me drone”. The idea behind it is simple: By flying in front of its user, accurately guiding them to a desired location, the drone takes over the majority of a human’s cognitive wayfinding tasks. This makes it easy and safe for people to find their destination with minimized distraction and cognitive workload of navigational tasks. | |||

The first design of the “follow-me drone” will be mainly aimed at severe cases of (D)TD, who are in need of this drone regularly, with the goal of giving those people some of their independence back. This means that the design incorporates features that make the drone more valuable to its user with repeated use, and that it is not only thought of as a product for rare or even one-time use as part of a service. | |||

== | == Target user analysis == | ||

While we are well aware that the target user base is often not congruent with the real user base, we would still like to deliver a good understanding of the use cases our drone is designed for. Delivering this understanding is additionally important for future reference and the general iterative process of user centered design. | |||

As clarified by our problem statement, we specifically designed this drone for people that, for various reasons, are not able to navigate through their daily environment without external help. This mostly concerns people that suffer from a form of topographical disorientation. Since this form of mental disorder is still relatively new and does not have an easily identifiable cluster of symptoms, [https://doi.org/10.1093/brain/122.9.1613 Aguirre & D’Esposito (1999)] made an effort to clarify and classify cases of TD and DTD (where TD is caused by brain damage and DTD is the developmental form). To give a better understanding of (D)TD symptoms, we list the significant categories of topographical disorientation and a short description of their known symptoms and syndromes below: | |||

*'''Egocentric disorientation''' | |||

:Patients of this category are not able to perform a wide range of mental tasks connected to navigation. They might be able to point at an object they can see, however, they lose all sense of direction as soon as their eyes are closed. Their route-descriptions are inaccurate and they are not able to draw maps from their mind. Most patients are also unable to point out which of two objects is closer to them. This results in often getting lost and generally poor navigation skills, which contributes to many of the affected people staying home and not going out without a companion. [https://doi.org/10.1093/brain/122.9.1613 (Aguirre & D’Esposito, 1999)] | |||

*'''Heading disorientation''' | |||

:Patients with heading disorientation are perfectly able to recognize landmarks but cannot derive any directional information from them. This might also show up only occasionally, as one patient describes: “I was returning home when suddenly, even though I could recognize the places in which I was walking and the various shops and cafés on the street, I could not continue on my way because I no longer knew which way to go, whether to go forward or backward, or to turn to the right or to the left...”. [https://doi.org/10.1093/brain/122.9.1613 (Aguirre & D’Esposito, 1999)] | |||

*'''Landmark agnosia''' | |||

:People affected by landmark agnosia are not able to use environmental features such as landmarks for their way-finding or orientation. As described by someone landmark agnostic: “I can draw you a plan of the roads from Cardiff to the Rhondda Valley... It's when I'm out that the trouble starts. My reason tells me I must be in a certain place and yet I don't recognize it.” [https://doi.org/10.1093/brain/122.9.1613 (Aguirre & D’Esposito, 1999)] | |||

In all three cases above, we can identify a need for additional guidance when navigating through everyday environments. A solution other than a drone might also fulfill that need; more details about the viability of the drone and alternative solutions can be found in the “Alternatives” section within [[#Limitations and Viability|Limitations and Viability]]. However, our drone can act as a mentally failsafe aid for people affected by topographical disorientation, since patients in all of the mentioned categories were able to recognize objects and point at them. This can give people who have lost faith in their ability to walk freely and independently to a desired nearby location the hope and security to do so again. | |||

= Approach = | = Approach = | ||

In order to achieve a feasible design solution, we answer the following questions: | |||


* '''User requirements''' | * '''User requirements''' | ||

** Which daily-life tasks are affected by Topographical Disorientation and should be addressed by our design? | ** Which daily-life tasks are affected by Topographical Disorientation and should be addressed by our design? | ||

** What limitations | ** What limitations should the design consider in order to meet the requirements of the specified user base? | ||

* '''Viability''' | |||

** What alternative solutions exist currently? | |||

** What are the pros and cons of the drone solution? | |||

** In which situations can a drone provide additional value? | |||

* '''Human technology interaction''' | * '''Human technology interaction''' | ||

** What design factors influence users’ comfort with these drones? | ** What design factors influence users’ comfort and safety with these drones? | ||

** | ** How can an intuitive and natural guiding experience be facilitated? | ||

*** How | *** How can the salience of the drone be maximized in traffic? | ||

*** What | *** What distances and trajectories of the drone will enhance the safety and satisfaction of users while being guided? | ||

** | ** What kind of interface is available in which situations for the user to interact with the drone? | ||

* '''User tracking''' | * '''User tracking''' | ||

** How exact should the location of a user be tracked to be able to fulfill all other requirements of the design? | ** How exact should the location of a user be tracked to be able to fulfill all other requirements of the design? | ||

| Line 74: | Line 80: | ||

** How well can the drone perform at night (limited visibility)? | ** How well can the drone perform at night (limited visibility)? | ||

* '''Physical design''' | * '''Physical design''' | ||

** What size and weight limitations | ** What size and weight limitations must the design adhere to? | ||

** What sensors are needed? | ** What sensors are needed? | ||

** Which actuators are needed? | ** Which actuators are needed? | ||

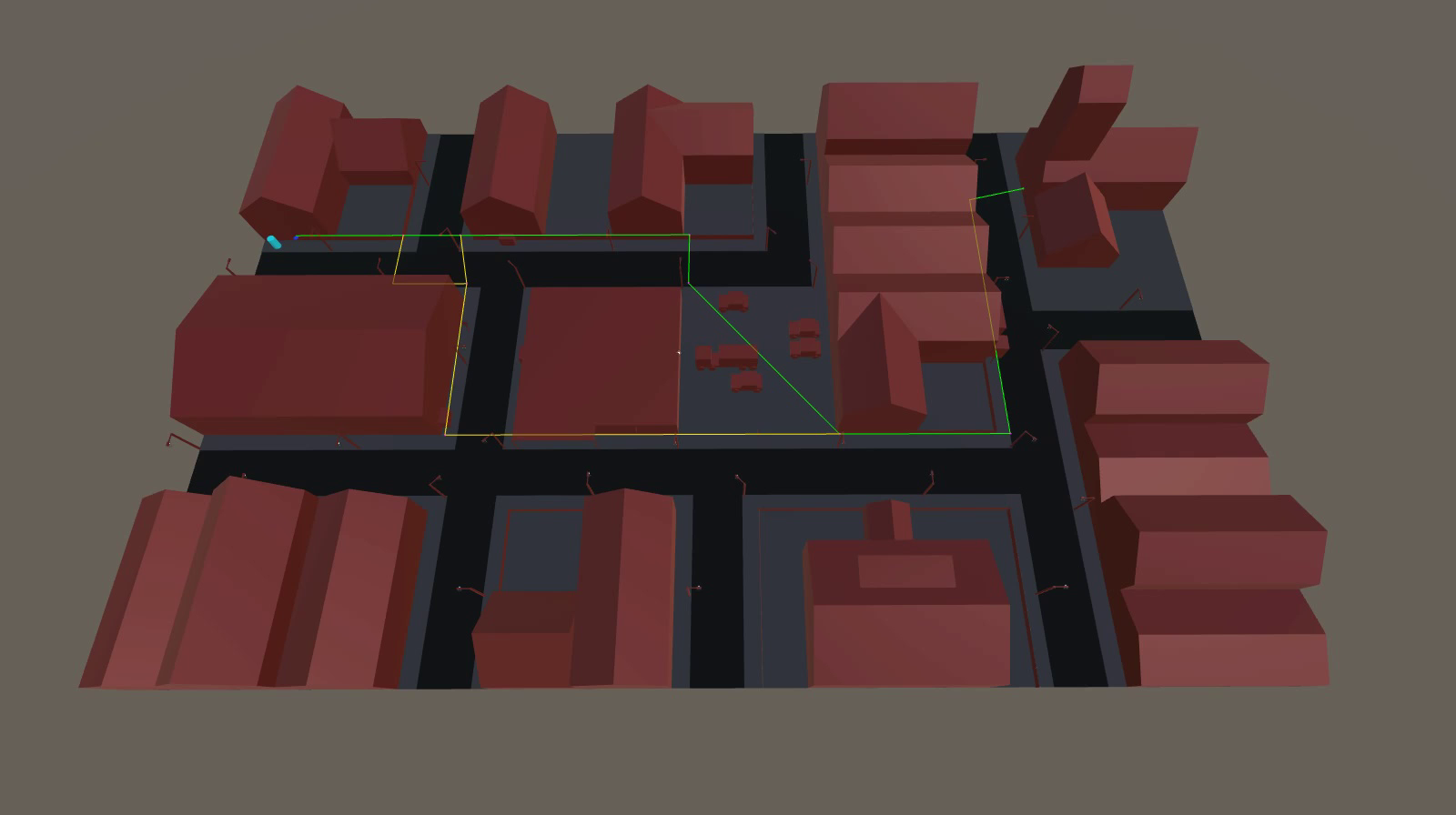

* '''Simulation''' | * '''Simulation''' | ||

** How could the design be implemented on a real drone? | |||

** How well does the implemented design perform? | |||

** How can the internal workings of the drone be visualized? | |||


= Solution = | = Solution = | ||

== Requirements == | == Requirements == | ||

Aside from getting lost in extremely familiar surroundings, | Aside from getting lost in extremely familiar surroundings, it appears that individuals affected by DTD do not consistently differ from the healthy population in their general cognitive capacity. Since we cannot reach the target group, it is difficult to define a set of special requirements. That said, we have compiled a list of general requirements that should be met. | ||

# The Follow-Me | # The Follow-Me drone uses a navigation system that computes the optimal route from any starting point to the destination. | ||

# The drone must guide the user to | # The drone must be able to fly. | ||

# The drone must guide the user to their destination by taking the decided route hovering in direct line of sight of the user. | |||

# During guidance, when the drone goes out of line of sight from the user, it must recover line of sight. | |||

# The drone must know how to take turns and maneuver around obstacles and avoid hitting them. | |||

# The drone must guide the user without the need to display a map. | # The drone must guide the user without the need to display a map. | ||

# The drone must provide an interface to the user that allows the user to specify their destination without the need to interact with a map. | # The drone must provide an interface to the user that allows the user to specify their destination without the need to interact with a map. | ||

# | # While the drone is guiding the user, it must keep a minimum distance of 1 meter between it and the user. | ||

# The drone should announce when the destination is reached. | |||

# The drone should fly in an urban environment. | |||

# The drone | # The drone should have a flight time of half an hour. | ||


# The drone should not weigh more than 4 kilograms. | # The drone should not weigh more than 4 kilograms. | ||
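The quantitative requirements in this list (a minimum distance of 1 meter to the user, a flight time of half an hour and a maximum weight of 4 kilograms) can be collected in one place and checked against a candidate design. The following is only a minimal sketch: the names <code>Requirements</code>, <code>CandidateDesign</code> and <code>violations</code> are hypothetical and introduced here for illustration, and the example design values are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Numeric limits taken from the requirements list."""
    min_user_distance_m: float = 1.0   # keep at least 1 m from the user
    min_flight_time_min: float = 30.0  # flight time of half an hour
    max_weight_kg: float = 4.0         # not more than 4 kilograms


@dataclass(frozen=True)
class CandidateDesign:
    """Hypothetical figures describing one possible drone design."""
    closest_user_distance_m: float
    flight_time_min: float
    weight_kg: float


def violations(design, req=Requirements()):
    """Return the names of all numeric requirements the design fails."""
    problems = []
    if design.closest_user_distance_m < req.min_user_distance_m:
        problems.append("minimum user distance")
    if design.flight_time_min < req.min_flight_time_min:
        problems.append("flight time")
    if design.weight_kg > req.max_weight_kg:
        problems.append("weight")
    return problems


if __name__ == "__main__":
    # Made-up example values, not measurements of our drone.
    print(violations(CandidateDesign(1.5, 35.0, 3.2)))
    print(violations(CandidateDesign(0.8, 20.0, 4.5)))
```

The qualitative requirements (for example guiding without a map) cannot be checked this way and still need to be evaluated with users.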

== Human factors == | == Human factors == | ||

Drones are finding more and more applications around our globe and are becoming increasingly available and used on the mass market. In various applications of | Drones are finding more and more applications around our globe and are becoming increasingly available and used on the mass market. In various drone applications, interacting with humans—regardless of their level of involvement with the drone’s task—is inevitable. Even though drones are still widely perceived as dangerous or annoying, there is a common belief that they will get more socially accepted over time [http://interactions.acm.org/archive/view/may-june-2018/human-drone-interaction#top]. However, since the technology and therefore the research on human-drone interaction is still relatively new, our design should incorporate human factors without assuming general social acceptance of drones. | ||

The next sections | The next sections focus on different human factors that influence our drone’s design. This includes the drone’s physical appearance and its users’ comfort with it, safety with respect to its physical design, as well as functionality-affecting factors which might contribute to a human's ability to follow the drone. | ||

=== Physical appearance === | === Physical appearance === | ||

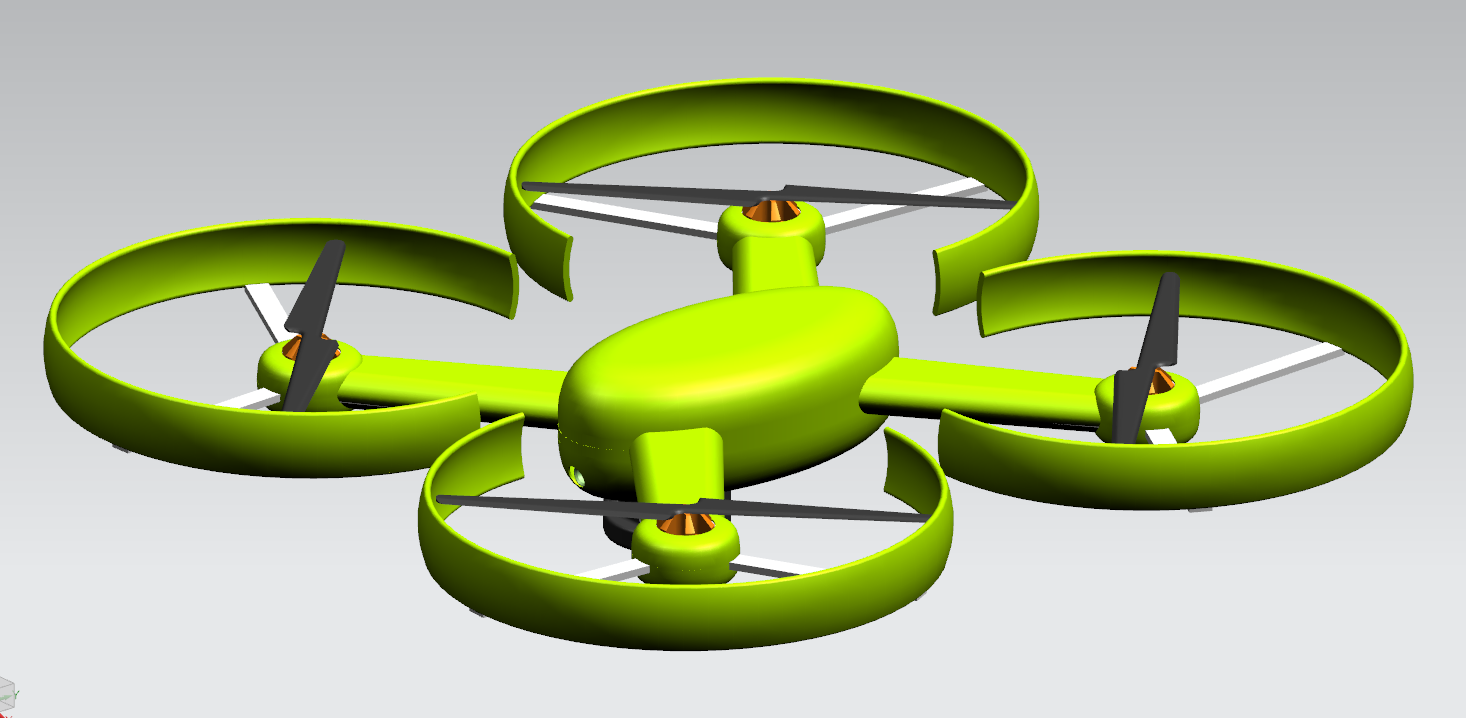

The physical design of the drone depends on different factors. It is firstly defined and limited by technical requirements, as for example the battery size and weight, the size and number of propellers and motors and the system’s capacity for carrying additional weight (all discussed in | The physical design of the drone depends on different factors. It is firstly defined and limited by technical requirements, as for example the battery size and weight, the size and number of propellers and motors and the system’s capacity for carrying additional weight (all discussed in [[#Technical considerations|Technical considerations]]). As soon as the basic functionality is given, the safety of the drone’s users has to be considered in the design. This is done in the section [[#Safety|Safety]]. Taking all these requirements into account, further design-influencing human factors can now be discussed. However, the design process is not hierarchical, but rather iterative, which means that all mentioned factors influence each other. | ||

* '''Drone size''': For a human to feel comfortable using a drone as a guiding assistant, the size will play an important role. Especially in landing or storing processes a drone of a big size will come in unwieldy, whereas a small drone might be more difficult to detect during the process of following it. In the study conducted in 2017 by Romell and Karjalainen [http://publications.lib.chalmers.se/records/fulltext/250062/250062.pdf], it was investigated what size of a drone people prefer for having a drone companion. The definition of a drone companion in this study lies close enough to our | * '''Drone size''' | ||

:For a human to feel comfortable using a drone as a guiding assistant, the size will play an important role. Especially when landing or storing it, a large drone will be unwieldy, whereas a small drone might be more difficult to detect while following it. A 2017 study by Romell and Karjalainen [http://publications.lib.chalmers.se/records/fulltext/250062/250062.pdf] investigated what drone size people prefer for a drone companion. The definition of a drone companion in this study lies close enough to our Follow-Me drone to consider the results of this study for our case. Given the choice between a small, medium or large drone, the majority of participants opted for the medium size drone, which was approximated at a diameter of 40cm. Giving priority to the technical limitations and the drone’s optimal functionality, we took this result into account. | |||

* '''Drone shape''' | * '''Drone shape''' | ||

The same 2017 study by Romell and Karjalainen [http://publications.lib.chalmers.se/records/fulltext/250062/250062.pdf] also evaluated the optimal shape for such a drone companion. It concluded that round design features in particular are preferred, which we also considered in our design process. | :The same 2017 study by Romell and Karjalainen [http://publications.lib.chalmers.se/records/fulltext/250062/250062.pdf] also evaluated the optimal shape for such a drone companion. It concluded that round design features in particular are preferred, which we also considered in our design process. | ||

Additionally, while the drone is not flying, a user should intuitively be able to pick up and hold the drone, without being afraid to hurt | :Additionally, while the drone is not flying, a user should intuitively be able to pick up and hold the drone, without being afraid to hurt themselves or damage the drone. Therefore, a sturdy frame or a visible handle should be included in the design. | ||

* '''Drone color''' | * '''Drone color''' | ||

Since the color | :Since the drone’s color greatly affects its visibility, and is therefore crucial for the correct functioning of the system, the choice of color is discussed in [[#Salience in traffic|Salience in traffic]]. | ||

=== Safety === | === Safety === | ||

The safety section considers all design choices that have to be made to ensure that no humans will be endangered by the drone. These choices are divided into factors that influence the physical aspects of the design, and factors which concern dynamic features of the functionality. | The safety section considers all design choices that have to be made to ensure that no humans will be endangered by the drone. These choices are divided into factors that influence the physical aspects of the design, and factors which concern dynamic features of the functionality of the drone. | ||

* '''Safety of the physical design''' | * '''Safety of the physical design''' | ||

The biggest hazard of most drones are accidents caused by objects getting into a running propeller, which can damage the object (or injure a human), damage the drone, or both. Propellers need as much free air flow as possible directly above and below to function properly, which introduces some restrictions in creating a mechanical design that can hinder objects from entering the circle of the moving propeller. But most common drone designs involve an enclosure around the propeller (the turning axis), which is a design step that we also follow for safety reasons | :The biggest hazard of most drones are accidents caused by objects getting into a running propeller, which can damage the object (or injure a human), damage the drone, or both. Propellers need as much free air flow as possible directly above and below to function properly, which introduces some restrictions in creating a mechanical design that can hinder objects from entering the circle of the moving propeller. But most common drone designs involve an enclosure around the propeller (the turning axis), which is a design step that we also follow for these safety reasons. | ||

* '''Safety aspects during operation''' | * '''Safety aspects during operation''' | ||

Besides hazards that the drone is directly responsible for, | :Besides hazards that the drone is directly responsible for, such as injuring a human with its propellers, there are also indirect hazards to be considered that can be avoided or minimized by a good design. | ||

The first hazard of this kind that we | :The first hazard of this kind that we identify is a scenario where the drone leads its user into a dangerous or fatal situation in traffic. As an example, if the drone is unaware of a red traffic light and keeps leading the way across a busy road, and the user overtrusts the drone and follows it without obeying traffic rules, this could result in an accident. | ||

Since this design will not incorporate real-time traffic monitoring, we have to ensure that our users are aware of their unchanged responsibility as traffic participants when using our drone. However, for future designs, data from online map services could be used to identify when crossing a road is necessary and subsequently create a scheme that the drone follows before leading a user onto a trafficked road. | :Since this design will not incorporate real-time traffic monitoring, we have to ensure that our users are aware of their unchanged responsibility as traffic participants when using our drone. However, for future designs, data from online map services could be used to identify when crossing a road is necessary and subsequently create a scheme that the drone follows before leading a user onto a trafficked road. | ||

Another hazard we identified concerns all traffic participants besides the user. There is a danger in the drone distracting other humans on the road and thereby causing an accident. This assumption is mainly due to drones not being widely applied in traffic situations (yet), which makes a flying object such as our drone | :Another hazard we identified concerns all traffic participants besides the user. There is a danger in the drone distracting other humans on the road and thereby causing an accident. This assumption is mainly due to drones not being widely applied in traffic situations (yet), which makes a flying object such as our drone a noticeable and uncommon sight. However, the responsibility lies with every conscious participant in traffic, which is why we will not let this hazard greatly influence our design. The drone will be designed to be as visible and conspicuous as possible for its user, while keeping the attention it attracts from other road users to a minimum. | ||

=== Salience in traffic === | === Salience in traffic === | ||

As our standard use case described, the drone is aimed mostly at application in traffic. It should be able to safely navigate its user to their destination, no matter how many other vehicles, pedestrians or further distractions are around. A user should never feel lost while being guided by our drone. | As our standard use case described, the drone is aimed mostly at application in traffic. It should be able to safely navigate its user to their destination, no matter how many other vehicles, pedestrians or further distractions are around. A user should never feel lost while being guided by our drone. | ||

To reach this requirement, the most evident solution is constant visibility of the drone. Since human attention is easily distracted, | To meet this requirement, the most evident solution is constant visibility of the drone. Since human attention is easily distracted, conspicuity, which describes the property of getting detected or noticed, is an important factor for users to find the drone quickly and conveniently when it is out of sight for a brief moment. However, the conspicuity of objects is perceived similarly by (almost) every human, which introduces the problem of unintentionally distracting other traffic participants with an overly conspicuous drone. | ||

We will focus on two factors of salience and conspicuity: | We will focus on two factors of salience and conspicuity: | ||

* '''Color''' | *'''Color''' | ||

The color characteristics of the drone can be a leading factor in increasing the overall visibility and conspicuity of the drone. The more salient the color scheme of the drone proves to be, the easier it will be for its user to detect it. Since the coloring of the drone alone would not emit any light itself, we assume that this design property does not highly influence the extent of distraction for other traffic participants. | :The color characteristics of the drone can be a leading factor in increasing the overall visibility and conspicuity of the drone. The more salient the color scheme of the drone proves to be, the easier it will be for its user to detect it. Since the coloring of the drone alone would not emit any light itself, we assume that this design property does not highly influence the extent of distraction for other traffic participants. | ||

:Choosing a color does seem like a difficult step in a traffic environment, where certain colors have implicit, but clear meanings that many of us have gotten accustomed to. We would not want the drone to be confused with any traffic lights or street signs, since that would most likely lead to serious hazards. But we would still need to choose a color that is perceived as being as salient as possible. Stephen S. Solomon conducted a study about the colors of emergency vehicles [http://docshare01.docshare.tips/files/20581/205811343.pdf]. The results of the study were based upon a finding about the human visual system: It is most sensitive to a specific band of colors, which involves ‘lime-yellow’. Accordingly, the study showed that lime-yellow emergency vehicles were involved in fewer traffic accidents, which does indeed let us draw conclusions about the conspicuity of the color. | |||

:This leads us to our consideration for lime-yellow as base color for the drone. To increase contrast, similar to emergency vehicles, red is chosen as a contrast color. This could additionally be implemented by placing stripes or similar patterns on some well visible parts of the drone, but was disregarded as a minor design choice that should be implemented at a later stage. | |||

*'''Luminosity''' | |||

:To further increase the visibility of the drone, light should be reflected and emitted from it. Our design of the drone will only guarantee functionality by daylight, partly because a lack of light limits the object and user detection possibilities. But an emitting light source on the drone itself is still valuable in daylight situations. | |||

:We assume that a light installation which is visible from every possible angle of the drone should be comparable in emitted light to a standard LED bicycle lamp, which emits about 100 lumens. As for the light color, we choose a color that does not already carry a strict meaning in traffic, which makes blue the most rational choice. | |||


=== Positioning in the visual field === | === Positioning in the visual field === | ||

A correct positioning of the drone will be extremely valuable for a user, but due to individual preferences, there will be difficulties in deriving a default positioning. The drone should not deviate too much from its usual height and position within its user’s attentional field; however, it might have to avoid obstacles, wait, and make turns without confusing the user. | A correct positioning of the drone will be extremely valuable for a user, but due to individual preferences, there will be difficulties in deriving a ''default positioning''. The drone should not deviate too much from its usual height and position within its user’s attentional field; however, it might have to avoid obstacles, wait, and make turns without confusing the user. | ||

According to a study by Wittmann et al. (2006) [https://doi.org/10.1016/j.apergo.2005.06.002], it is easier for the human visual-attentional system to track an arbitrarily moving object horizontally than it is vertically. For that reason, our drone should try to avoid objects with only slight movements in vertical direction, but is more free to change its position horizontally on similar grounds. Since the drone will therefore mostly stay at the same height while guiding a user, it makes it easier for the user to detect the drone, especially with repeated use. | According to a study by Wittmann et al. (2006) [https://doi.org/10.1016/j.apergo.2005.06.002], it is easier for the human visual-attentional system to track an arbitrarily moving object horizontally than it is vertically. For that reason, our drone should try to avoid objects with only slight movements in vertical direction, but is more free to change its position horizontally on similar grounds. Since the drone will therefore mostly stay at the same height while guiding a user, it makes it easier for the user to detect the drone, especially with repeated use. | ||

In a study that investigated drone motion to guide pedestrians [https://dl.acm.org/citation.cfm?id=3152837], a small sample was stating their preference on the distance and height of a drone guiding them. The results showed a mean horizontal distance of 4 meters between the user and the drone as well as a preferred mean height of 2.6 meters of the drone. As these values can be | In a study that investigated drone motion to guide pedestrians [https://dl.acm.org/citation.cfm?id=3152837], a small sample of participants stated their preferences for the distance and height of a drone guiding them. The results showed a mean horizontal distance of 4 meters between the user and the drone as well as a preferred mean height of 2.6 meters of the drone. As these values can be individualised for our drone, we see them merely as default values. We derived an approximate preferred viewing angle of 15 degrees, so we are able to adjust the default position of the drone to each user’s body height, which is entered upon first configuration of the drone. | ||
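The relation between the default distance, the drone height and the viewing angle can be sketched with basic trigonometry: the drone hovers at the user's eye height plus the horizontal distance times the tangent of the viewing angle. The sketch below uses the 4 meter distance and the 15 degree angle mentioned above. The eye offset of 0.10 m below body height and the function names are assumptions made for this illustration only and are not taken from the cited study.

```python
import math

DEFAULT_DISTANCE_M = 4.0   # mean preferred horizontal distance (from the text)
VIEWING_ANGLE_DEG = 15.0   # approximate preferred viewing angle (from the text)
EYE_OFFSET_M = 0.10        # ASSUMPTION: eyes sit about 0.10 m below body height


def viewing_angle_deg(drone_height_m, eye_height_m, distance_m):
    """Angle above the horizontal at which the user sees the drone."""
    return math.degrees(math.atan2(drone_height_m - eye_height_m, distance_m))


def default_drone_height(body_height_m,
                         distance_m=DEFAULT_DISTANCE_M,
                         angle_deg=VIEWING_ANGLE_DEG):
    """Hover height that keeps the drone at the preferred viewing angle."""
    eye_height_m = body_height_m - EYE_OFFSET_M
    return eye_height_m + distance_m * math.tan(math.radians(angle_deg))


if __name__ == "__main__":
    for body_height in (1.55, 1.70, 1.90):
        height = default_drone_height(body_height)
        print(f"body height {body_height:.2f} m -> drone height {height:.2f} m")
```

Under these assumptions, a user of about 1.70 m gets a default drone height close to the 2.6 meters reported as the mean preference, which is consistent with treating 15 degrees as an approximation.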

== Legal Situation == | |||

As the Follow-Me drone is designed to move through urban environments to guide a person to their destination, it is important to look at the relevant legal situation. In the Netherlands, laws regarding drones are not formulated in a clear way. There are two sets of laws: [https://www.rijksoverheid.nl/onderwerpen/drone/regels-hobby-drone recreational-use laws (NL)] and [https://www.rijksoverheid.nl/onderwerpen/drone/vraag-en-antwoord/regels-drone-zakelijk-gebruik business-use laws (NL)]. It is not explicitly clear which set of laws applies, if any at all. Our drone is not entirely intended for recreational use because of its clear necessity to the targeted user group. On the other hand, it does not fall under business use either, as it is not used by a company to make money. Selling the drone to users does not count as such use: in that case, it is in the users’ ownership and is no longer considered to be used by a company. If the drone is rented, however, it might still fall under business use. In conclusion, neither set of laws clearly applies to our project. Since drones are not typically employed in society (yet), this is not unexpected and we can expect the law to adapt to cases such as ours in the future. | |||

Another source states that drones in general are not allowed within 50m of people and buildings[https://www.rijksoverheid.nl/onderwerpen/drone/vraag-en-antwoord/vergunning-drone]. This is a problem when designing a drone intended to guide people through urban areas. However, just like countries are slowly changing their laws regarding the use of self-driving cars, it is safe to assume that the law regarding drones in urban areas will change in the future. | |||

== Technical considerations ==

=== Controllers and Sensors ===

In this section we explain the controlling and sensing hardware necessary for the Follow-Me drone. The specific components mentioned here are only examples that meet the requirements. Other components might be used as long as the total weight and energy usage of the drone remain approximately the same and the parts are compatible. Firstly, the drone is controlled by a Raspberry Pi, which weighs 22g and requires 12.5W of power. In order for it to perform obstacle detection (see [[#Obstacle_detection | obstacle detection]]), one needs either a rotational lidar or a stereo camera. We have opted for a lidar.

The Sweep V1 360° Laser Scanner [https://www.robotshop.com/uk/sweep-v1-360-laser-scanner.html] is a good example of such a lidar: it is not too expensive and should be good enough for our purposes. It can scan up to 40 meters and does not require a lot of power. It needs a simple 5V supply and draws a current of about 0.5A, which means it requires 2.5W of power. It weighs 120g.

The drone needs a camera to track the user. This camera does not have many requirements, so it can be as simple and light as possible, as long as it can produce a consistent sequence of frames in adequate resolution. That is why a camera module designed for use with a Raspberry Pi is used. The Raspberry Pi Camera Module F [https://www.waveshare.com/rpi-camera-f.htm] can record video at resolutions up to 1080p, which is more than needed for the purpose of user tracking. Its weight is not specified, but other modules weigh around 3g, so we assume that this module will not weigh more than 10g. It draws its power directly from the Raspberry Pi.

The required amount of power, including a margin of 5W for miscellaneous components (e.g. LEDs), would then come to a total of 20W.

=== Battery life ===

One thing to take into consideration when designing a drone is the operating duration. Most commercially available drones cannot fly longer than 30 minutes [https://3dinsider.com/long-flight-time-drones/],[https://www.outstandingdrone.com/long-flight-time-battery-life-drone/]. Whereas this is no problem for walking to the supermarket around the block, commuting to work and back home will surely prove more difficult. Furthermore, wind and rain have a negative influence on the effective flight time of the drone. As the drone should still be usable in these situations, it is important to improve battery life.

In order to keep the battery life as long as possible, a few measures can be taken. First of all, a larger battery can be used. This, however, makes the drone heavier and would thus require more power. Another important aspect of batteries is that completely discharging them decreases their lifespan. Therefore, the battery used should not be drained by more than 80%. As the practical energy density of a lithium-polymer battery is 150Wh/kg [https://www.nature.com/articles/s41467-017-02411-5#Tab1], the usable energy density is thus approximately 120Wh/kg.

Another way to improve flight time is to keep spare battery packs. It could be an option to have the user bring a second battery pack with them for travelling back. For this purpose, the drone should return to the user when the battery is low, indicate that it is low (for example by flashing its lights) and land. The user should then be able to quickly and easily swap the battery packs. This requires the batteries to be easy to connect to and disconnect from the drone. A possible downside to this option is that the drone would temporarily shut down and might need some time to start back up after the new battery is inserted. This could be circumvented by using a small extra battery in the drone that is only used for the controller hardware.

Unfortunately, we found no conclusive scientific research on the energy consumption of drones. Instead, existing commercially available drones are investigated, in particular the Blade Chroma Quadcopter Drone [https://www.horizonhobby.com/product/drone/drone-aircraft/ready-to-fly-15086--1/chroma-w-st-10-and-c-go3-blh8675]. It weighs 1.3kg and has an ideal flight time of 30 minutes. It uses 4 brushless motors and has an 11.1V 6300mAh LiPo battery. The amount of energy can be calculated with [[File:battery_equation.png|150px]], which gives about 70Wh. The battery weighs about 480g [https://www.amazon.co.uk/Blade-6300mAh-11-1V-Chroma-Battery/dp/B00ZBKP51S], so this is in line with the previously found energy density. The drone has a GPS system, a 4K 60FPS camera with gimbal and a video link system. On the Follow-Me Drone a lighter, simpler camera that uses less energy will be implemented. No video link system will be used either, as the drone only has to communicate simple commands to the user interface. Replacing the camera and removing the video link system saves some battery usage. On the other hand, a more powerful computer board is needed to run the user tracking, obstacle avoidance and path finding. For now it is assumed that the energy usage remains the same.
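The battery figures above can be checked with a few lines of arithmetic. This is only a sketch using the voltage, capacity and mass quoted for the Blade Chroma battery; the function names are ours.

```python
def battery_energy_wh(voltage_v, capacity_mah):
    """Stored energy in watt-hours: E = V * Q, with Q converted from mAh to Ah."""
    return voltage_v * capacity_mah / 1000.0


def energy_density_wh_per_kg(energy_wh, mass_g):
    """Energy per kilogram of battery pack."""
    return energy_wh / (mass_g / 1000.0)


# Blade Chroma battery: 11.1 V, 6300 mAh, about 480 g
chroma_energy_wh = battery_energy_wh(11.1, 6300)
chroma_density = energy_density_wh_per_kg(chroma_energy_wh, 480)
```

The energy comes out just under 70Wh and the density at roughly 146Wh/kg, close to the 150Wh/kg practical density quoted for lithium-polymer batteries.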

In windy weather the drone will need more power to stay on its intended path, and with precipitation the propellers will also experience slightly increased resistance. It is unknown how large the influence of these weather phenomena is, but for now we assume a practical flight time of approximately 20 minutes, partly because manufacturers list ideal, laboratory flight times.

If a flight time of 60 minutes is required, a battery with at least 3 times the capacity is needed. However, as these battery packs weigh 480g, this would increase the weight of the drone by a factor of almost 2. This by itself requires more power, such that even more battery and motor power is required. Therefore it can be reasonably assumed that this is not a desirable option. Instead, the user should bring one or more extra battery packs to swap during longer trips.

It is, however, possible to design a drone that has sufficient flight time on a single battery. “Impossible Aerospace” claims to have designed a drone that has a flight time of 78 minutes with a payload [https://impossible.aero]. To make something similar, a large battery pack and stronger motors would be needed. Unfortunately, Impossible Aerospace has not released any specifications of the motors used, and its drone weighs 7 kilograms, which violates our requirements, so this design cannot be used.

=== Motors and propellers, battery pack ===

As a starting point for choosing propellers and motors, the weight of the drone is assumed. Two cases are investigated. In the first case the drone has a flight time of 20-30 minutes and its battery needs to be swapped during the trip. In this case, the drone is assumed to have a weight of approximately 1.5kg, which is slightly heavier than most commercially available drones with flight times of around 30 minutes. The second case is a drone designed to fly uninterrupted for one hour. The weight of this drone is assumed to be 4kg, the maximum for recreational drones in the Netherlands.

With a weight of 1.5kg, the thrust of the drone would have to be at least 15N. However, with this thrust the drone would only be able to hover and not actually fly. Several fora and articles on the internet state that for regular flying (no racing or acrobatics) a thrust of at least twice the weight is required (e.g. [https://oscarliang.com/quadcopter-motor-propeller/] and [https://www.quora.com/How-much-thrust-is-required-to-lift-a-2kg-payload-on-a-quad-copter]), so the thrust of the drone needs to be 30N or higher. If four motors and propellers are used, as in a quadcopter, this comes down to 7.5N per motor-propeller pair.
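The thrust requirement can be written as a small helper. This is a sketch: the function name is ours, and it uses g = 9.81 m/s², whereas the text rounds g to 10 m/s², which is why the text arrives at 7.5N instead of about 7.4N.

```python
G = 9.81  # gravitational acceleration in m/s^2


def required_thrust_per_motor(mass_kg, thrust_to_weight=2.0, n_motors=4):
    """Thrust (N) each motor-propeller pair must deliver.

    thrust_to_weight=2.0 reflects the rule of thumb that regular flying
    needs a total thrust of about twice the weight of the drone.
    """
    return mass_kg * G * thrust_to_weight / n_motors


thrust_light = required_thrust_per_motor(1.5)  # 1.5 kg drone, battery swapped en route
thrust_heavy = required_thrust_per_motor(4.0)  # 4 kg drone for one hour of flight
```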

APC Propellers publishes a database with the performance of all of their propellers [https://www.apcprop.com/technical-information/performance-data/], which is used here to get an idea of what kind of propeller to use. There are far too many propellers to compare individually, so as a starting point a propeller diameter of 10" is selected (the propeller diameter of the previously discussed Blade Chroma Quadcopter), and we search for the propeller with the lowest required power at a static thrust (the thrust when the drone does not move) of 7.5N or 1.68lbf. This way the 10x5E propeller is found. It delivers the required thrust at around 7000RPM and requires a torque of approximately 1.01in-lbf or 114mNm. This requires approximately 82W of mechanical power. For simple hovering only half the power is needed. The propeller weighs 20g.
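The mechanical power follows from torque and rotational speed as P = τ · ω. The sketch below (helper names are ours) converts the quoted torque and speed; it gives roughly 84W, in the same range as the approximately 82W read from the propeller data.

```python
import math

INLBF_TO_NM = 0.1129848  # one inch-pound-force expressed in newton-metres


def inlbf_to_nm(torque_inlbf):
    """Convert a torque from in-lbf to N*m."""
    return torque_inlbf * INLBF_TO_NM


def mechanical_power_w(torque_nm, rpm):
    """Shaft power P = torque * angular velocity, with RPM converted to rad/s."""
    omega_rad_s = rpm * 2.0 * math.pi / 60.0
    return torque_nm * omega_rad_s


torque_nm = inlbf_to_nm(1.01)                    # about 0.114 N*m
power_w = mechanical_power_w(torque_nm, 7000.0)  # shaft power at 7000 RPM
```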

With the required torque and rotational velocity known, a suitable motor can be chosen. For this, the torque constant in mNm/A and the speed constant in RPM/V are required, along with the operating range (as in the specifications for Maxon motors [https://www.maxonmotor.com/maxon/view/product/motor/dcmotor/re/re40/148867]). Unfortunately, drone motor suppliers do not give the torque constant in their specifications, making it very difficult to determine the amount of thrust a motor-propeller pair can deliver. Therefore, it is for now assumed that the size (and thus the weight) of the motor is linearly related to the amount of thrust generated. The motor of the Blade Chroma Quadcopter weighs 68g and supports a quarter of the weight of the 1.3kg drone. If the linear relation between weight and thrust holds, our drone would require motors of 78g. Efficiency specifications are also hard to find, so it is unknown how much power the motors actually require. For now an efficiency of 80% is assumed. Furthermore it is assumed that for flight at walking speed the drone uses 20% extra energy compared to simple hovering. Based on these assumptions every motor-propeller pair would use approximately 125W.

In total, the drone motors require 500W and the hardware requires an additional 20W. The drone without the battery pack weighs 800g, meaning that 700g is left over for the battery pack. With an energy density of 120Wh/kg, approximately 84Wh of energy is available. With that, a flight time of merely about 9.7 minutes (84Wh at 520W) could be achieved. This is highly insufficient, which suggests that some of the calculations or assumptions used here are wrong. Therefore, new assumptions are made about energy storage and usage, based on the specifications of the Blade Chroma. Of the drones with a flight time of 30 minutes, its weight of 1.3kg comes closest to our desired weight of 1.5kg, and its parts can be found on the internet.

The Blade Chroma weighs 1.3kg, has four motor-propeller pairs, an ideal flight time of 30 minutes and a 70Wh battery pack. The drone thus uses approximately 140W of power. Assuming that its controlling and sensing electronics (everything except the motors) use the same amount of power as those of the Follow-Me Drone, the motors together require 120W of power. For our drone, weighing 1.5kg, motors with a total power of 140W would be required, giving a total power usage of 160W. For a flight time of half an hour, approximately 670g of battery pack is then required.
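The two estimates in this section can be reproduced as follows. This is a sketch with our own function names; it assumes, as the text does, that motor power scales linearly with drone weight and that 120Wh/kg of the battery is usable.

```python
def flight_time_min(energy_wh, power_w):
    """Flight time in minutes for a given usable energy and constant power draw."""
    return energy_wh / power_w * 60.0


def battery_mass_g(power_w, minutes, usable_density_wh_per_kg=120.0):
    """Battery mass in grams needed to supply power_w for the given time."""
    energy_wh = power_w * minutes / 60.0
    return energy_wh / usable_density_wh_per_kg * 1000.0


# First estimate: 84 Wh of usable energy at 500 W (motors) + 20 W (hardware)
first_estimate_min = flight_time_min(84.0, 520.0)

# Second estimate, scaled from the Blade Chroma (70 Wh in 30 minutes, 1.3 kg)
chroma_power_w = 70.0 / 0.5                             # 140 W in total
motor_power_w = (chroma_power_w - 20.0) * 1.5 / 1.3     # scaled to a 1.5 kg drone
required_battery_g = battery_mass_g(160.0, 30.0)        # text rounds the total to 160 W
```

The scaled motor power is about 138W, which the text rounds to 140W, and the battery mass comes out at roughly 667g, matching the 670g used in the overview.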

=== Overview ===

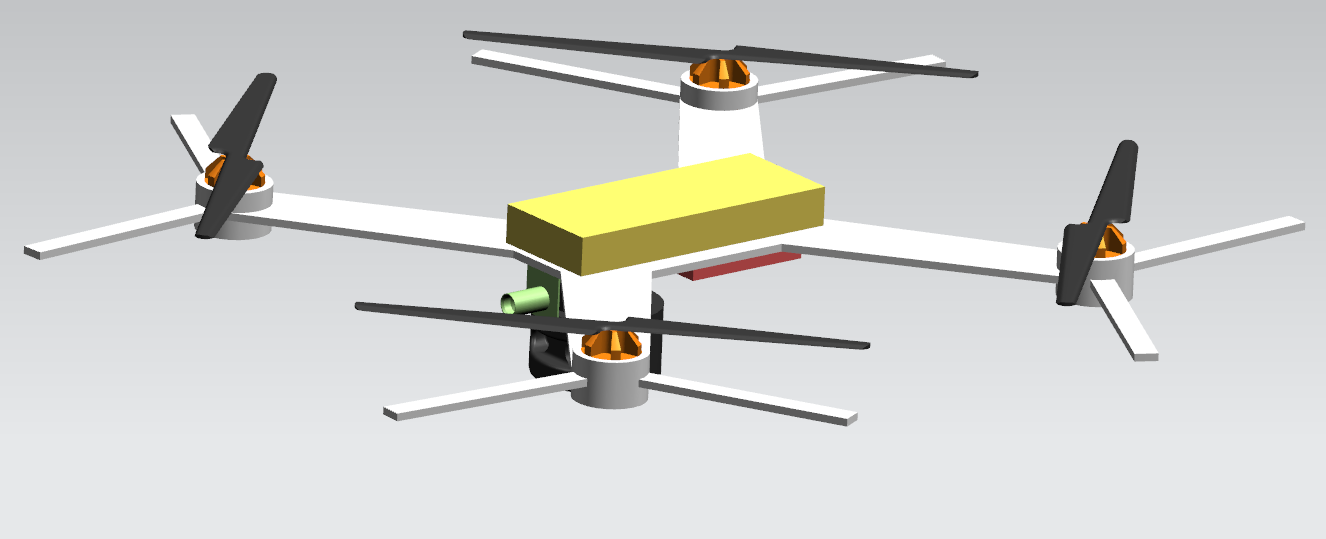

[[File:TechConceptClosed.PNG| 600px | center]]

[[File:TechConceptOpen.PNG| 600px | center]]

The figures above show a concept image of the Follow-Me Drone, in which a few of the design choices are visible. The first image shows the outside of the drone. The outer shell is coloured lime-green so it is least distracting in traffic (as discussed in [[#Salience in traffic|Salience in traffic]]) and has round shapes (as discussed in [[#Physical appearance|Physical appearance]]). The propellers are surrounded by guards that make sure the propellers are not damaged if the drone bumps into something. In the second image the outer shell is removed so that the inner components are visible. The drone features a sturdy but slightly flexible frame to reduce the chances of breaking, as the user would be totally lost without the drone. The battery pack is placed on top of the inner frame and can be replaced via a hatch in the outer shell. The camera, controller (Raspberry Pi) and lidar are attached to the bottom of the inner frame. The camera faces backwards, while the lidar can rotate and has a 360-degree view.

The total weight of the drone will be 1.5 kg. The drone uses 10" (25.4cm) propellers. Since the propellers should not overlap the frame too much, the total width of the drone will be 60cm.

The weight will consist of the following parts:
*4 motors + propellers: 392g
*Raspberry Pi controller: 22g
*Lidar: 120g
*Camera: 10g
*Battery pack: 670g
*Frame: 200g (estimation)
*Remaining for miscellaneous: 86g

The required power for the components is:
*4 motors: 140W
*Raspberry Pi: 12.5W
*Lidar: 2.5W
*Miscellaneous: 5W
*Total: 160W
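As a quick consistency check, the two budgets above can be summed in code. The dictionary names are ours; the values are the ones listed.

```python
# Weight budget in grams, as listed in the overview
weights_g = {
    "motors_and_propellers": 392,
    "raspberry_pi": 22,
    "lidar": 120,
    "camera": 10,
    "battery_pack": 670,
    "frame": 200,          # estimation
    "miscellaneous": 86,
}

# Power budget in watts (the camera is powered through the Raspberry Pi)
power_w = {
    "motors": 140.0,
    "raspberry_pi": 12.5,
    "lidar": 2.5,
    "miscellaneous": 5.0,
}

total_weight_g = sum(weights_g.values())
total_power_w = sum(power_w.values())
```

The parts add up to the 1.5kg target weight and the 160W total.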

== User Interface Design ==

When the user starts the app for the first time, the drone is paired with the mobile app. After pairing, the user needs to complete their profile by entering their name and height so that the drone can adjust its altitude accordingly.

The navigation bar contains two tabs: ''Drone'' and ''Locations''. The Drone tab is where the user can search for an address or a location to go to. Any previously saved locations appear under the search bar for easy access.

The Locations tab contains the favorite destinations saved by the user. The user can add frequently used destinations to a preset location list, and edit or delete them. These destinations appear on the home screen when the user opens the app, for quick access.

When a destination is selected, a picture of it appears in the background of the screen to help the user recognise it in real life. The stop button is clearly visible in red in the lower right corner, so that the user can easily spot it in case of emergency. Pressing the stop button stops the drone, after which the user is given two choices: they can either resume the trip or end the route. Red represents the termination of a process, whereas blue represents the continuation or start of a process.

Information about the trip, such as the ETA, the time remaining until arrival and the remaining route distance, is given at the bottom of the screen.

== Distance Estimation ==

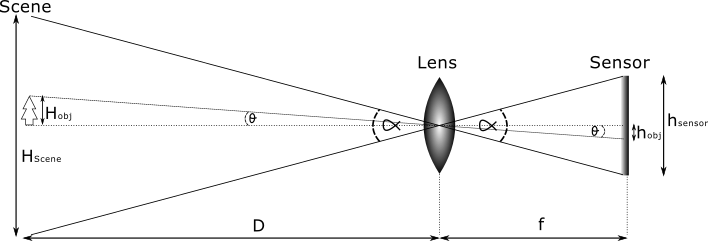

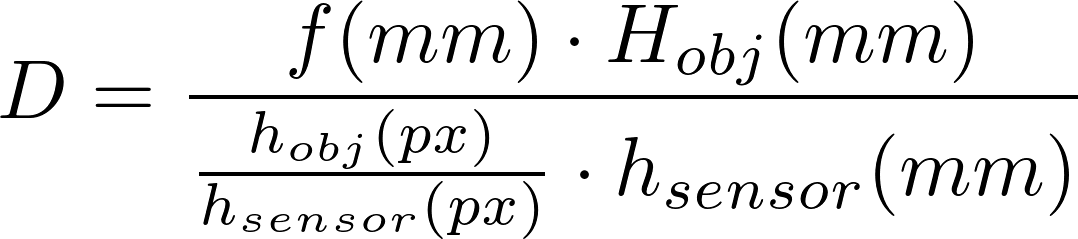

An integral part of the operation of a drone is distance computation and estimation.

[[File:distance_estimation_diagram.png| center]]

Since a camera can be considered to be a part of a drone, we present a formula for distance computation, given some parameters. The only assumption made is that the type of lens is '''rectilinear'''. The explained formula does not hold for other types of lenses.

Since ''D * φ = H<sub>obj</sub>'' and ''f * φ = h<sub>obj</sub>'' hold, we can eliminate ''φ'' and get [[File:d_equation_3.png|80px]]. After rewriting ''h<sub>obj</sub>'' in terms of ''mm'', we finally end up with:

[[File:d_equation_1a.png| 400px| center]]

<!-- <math> D(mm) = \frac { f(mm) \cdot H_{obj}(mm)} { \frac{ h_{obj}(px) } { h_{sensor}(px) } \cdot h_{sensor}(mm) } </math> -->

The parameters are as follows:
# ''D(mm)'' : The real-world distance between the drone and the user in millimeters. This is what is computed.
# ''f(mm)'' : The actual focal length of the camera in millimeters. If the spec sheet lists a ''35mm equivalent'' focal length, do not use it. If it simply lists two values, a quick test will show which one should be used.
# ''H<sub>obj</sub>(mm)'' : The real-world height of the user in millimeters.
# ''h<sub>obj</sub>(px)'' : The height of the user in pixels.
# ''h<sub>sensor</sub>(px)'' : The height of the sensor in pixels, in other words, the vertical resolution of the video file (e.g. for 1080p videos this would be 1080, for 480p this would be 480 and so on).
# ''h<sub>sensor</sub>(mm)'' : The sensor size in millimeters, which is the pixel size in millimeters multiplied by ''h<sub>sensor</sub>(px)''.

All these parameters should be present on the spec sheet of a well-documented camera, such as that of the OnePlus 6 (the phone that was used for shooting our own footage).
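The formula translates directly into code. The following is a minimal sketch: the function and parameter names are ours, and the example values are made up for illustration rather than taken from a real spec sheet.

```python
def distance_mm(focal_mm, obj_height_mm, obj_height_px,
                sensor_height_px, sensor_height_mm):
    """Distance to an object of known real-world height (rectilinear lens only).

    D = f * H_obj / ((h_obj_px / h_sensor_px) * h_sensor_mm)
    """
    # Height of the object's image on the sensor, converted from pixels to mm
    obj_height_on_sensor_mm = obj_height_px / sensor_height_px * sensor_height_mm
    return focal_mm * obj_height_mm / obj_height_on_sensor_mm


# Hypothetical example: 4 mm focal length, 4 mm sensor height, 1080p video,
# and a 1.80 m tall user who fills half of the frame height (540 px).
example_distance_mm = distance_mm(4.0, 1800.0, 540, 1080, 4.0)
```

With these illustrative numbers the user is estimated to be 3.6m away; halving the height in pixels would double the estimated distance.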

When looking at the '''amount of error''' this method produces in practice, we find that it is unusable at scales (actual distances) below 500mm. From 1000mm upwards it can be used. The results of our tests can be found in the following table.

{| class="wikitable"
|+ Distance Computation Evaluation
! Scale, i.e. actual distance (mm)
! Computed Distance (mm)
! Absolute Error (m, rounded)
|-
| 100 || 7989.680302875845 || 7.89
|-
| 200 || 2955.9793551540088 || 2.76
|-
| 500 || 936.64000567 || 0.44
|-
| 1000 || 687.851437808 || 0.31
|-
| 2000 || 1692.22216373 || 0.31
|-
| 3000 || 3183.46590645 || 0.18
|-
| 4000 || 4143.14879882 || 0.14
|}

If the drone is to be equipped with 2 cameras, computing the distance to an object is possible using stereo vision as proposed by Manaf A. Mahammed, Amera I. Melhum and Faris A. Kochery, with an error rate of around 2% [http://warse.org/pdfs/2013/icctesp02.pdf]. This does, however, increase the number of cameras that need to be used, which is a further constraint on power.

As the distance estimation described is to be used solely for user tracking purposes, and not for detecting and avoiding obstacles, we believe that an error rate of 3.5% [[File:d_equation_4.png|50px]] on a scale of 4m is acceptable.
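The relative error can be recomputed from the measurements in the table. This is a small sketch with our own function name; using the unrounded computed distance at 4m gives roughly 3.6%, in line with the 3.5% obtained from the rounded absolute error of 0.14m.

```python
def relative_error_percent(actual_mm, computed_mm):
    """Relative error of a computed distance, as a percentage of the actual one."""
    return abs(computed_mm - actual_mm) / actual_mm * 100.0


# (actual distance, computed distance) in mm, taken from the table above
measurements = [
    (1000, 687.851437808),
    (2000, 1692.22216373),
    (3000, 3183.46590645),
    (4000, 4143.14879882),
]

errors_percent = [relative_error_percent(a, c) for a, c in measurements]
```

The relative error shrinks as the distance grows, which supports using the method at the default following distance of 4m.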

== Obstacle detection ==

In general there are two readily accessible technologies for obstacle detection, namely stereo vision and rangefinders. We will discuss how each of these hardware solutions recovers depth information and then proceed to how the depth information can actually be used.

=== Finding depth ===

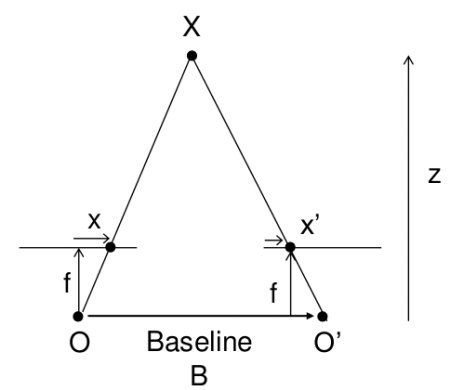

'''Stereo vision''' works by having two cameras acting as human eyes, hence the name. It relies on the principle of ''triangulation'', which is illustrated here.

[[File:triangulation.png| center]] <!-- https://docs.opencv.org/3.1.0/stereo_depth.jpg -->

In the image above, taken from the OpenCV documentation, one can see ''O'' and ''O<nowiki>'</nowiki>'', the two cameras. ''B'' denotes the ''baseline'', which is a vector from ''O'' to ''O<nowiki>'</nowiki>'', or simply put the distance between the two cameras. The focal length of both cameras (a stereo vision camera is assumed to use the same type of lens twice) is denoted by ''f''. ''X'' denotes the point where the rays of the two cameras coincide (which corresponds to a pixel in each image). ''Z'' denotes the distance between the mounting point of both cameras and ''X'', or simply put the depth information that we are interested in. Finally, ''x'' and ''x<nowiki>'</nowiki>'' are the distances between the points in the image plane corresponding to the 3D scene point and their camera centers. With ''x'' and ''x<nowiki>'</nowiki>'', we can compute the ''disparity'', which is simply ''x - x<nowiki>'</nowiki>'' or just [[File:d_equation_5.png| 30px]]. Rewriting this for ''Z'', the depth, is trivial [https://docs.opencv.org/3.1.0/dd/d53/tutorial_py_depthmap.html] [https://www.cse.unr.edu/~bebis/CS791E/Notes/StereoCamera.pdf]. While the underlying mathematics is advanced, it is very accessible thanks to the OpenCV framework [https://albertarmea.com/post/opencv-stereo-camera/].
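Rewriting the disparity relation ''x - x<nowiki>'</nowiki> = B · f / Z'' for the depth gives ''Z = B · f / (x - x<nowiki>'</nowiki>)''. A minimal sketch follows; the function name and the example numbers are ours, and a real stereo pipeline would first need calibration and pixel matching.

```python
def depth_from_disparity(baseline_m, focal_px, x_left_px, x_right_px):
    """Depth Z (m) of a scene point seen at x (left image) and x' (right image).

    Uses Z = B * f / (x - x'), with the focal length expressed in pixels.
    """
    disparity_px = x_left_px - x_right_px
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return baseline_m * focal_px / disparity_px


# Hypothetical example: cameras 10 cm apart, focal length of 700 px,
# and the same point found 35 px apart in the two images.
example_depth_m = depth_from_disparity(0.1, 700.0, 420.0, 385.0)
```

Because depth is inversely proportional to disparity, distant points produce small disparities and are therefore measured less precisely.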

'''Rangefinders''' work in a comparatively simple way: they emit laser light, which is just a type of wave. Hence, the depth (or distance) can be computed using [[File:d_equation_6.png|70px]], where ''c'' is the speed of light and ''t<sub>Δ</sub>'' the time it takes for the light to return to the point from which it was sent [http://www.lidar-uk.com/how-lidar-works/].
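In code, the time-of-flight computation is a one-liner. The sketch below assumes that ''t<sub>Δ</sub>'' is the full round-trip time, so that the distance is half of the path travelled by the light; the function name is ours.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_time_s):
    """Distance to the reflecting surface: the light travels there and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# Round-trip time for a target at 40 m, the maximum range quoted for the lidar
round_trip_40m_s = 2.0 * 40.0 / SPEED_OF_LIGHT_M_S
```

The round-trip time for 40m is only about 0.27 microseconds, which is why the timing electronics in such sensors need to be very precise.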

=== Using depth ===

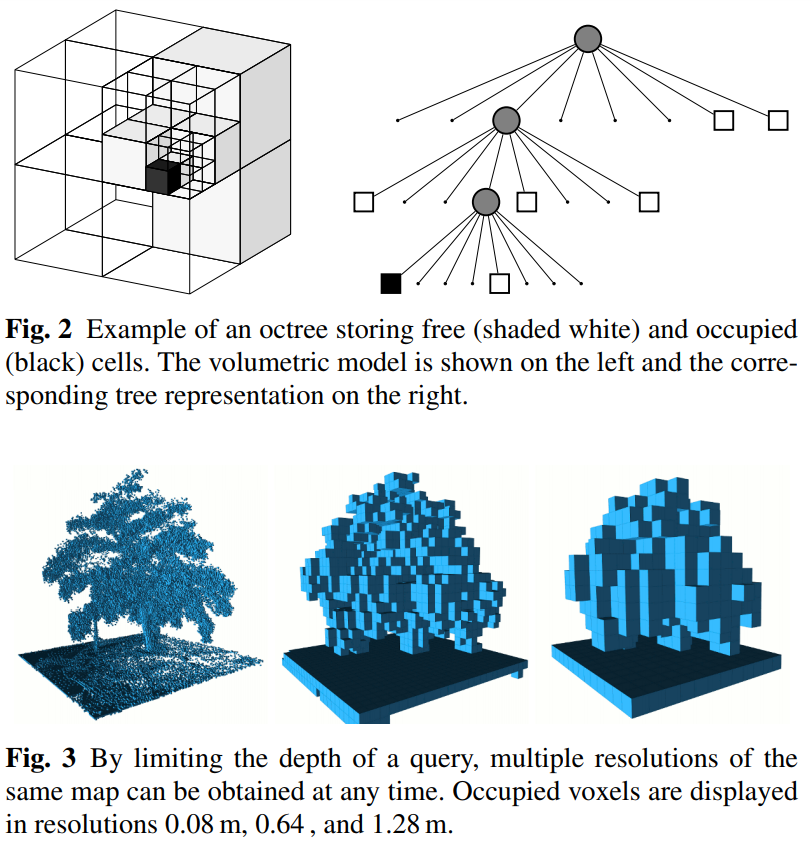

When it comes to representing a 3D environment for a computing task, one generally comes across the ''octomap'' framework. It is an efficient open source probabilistic 3D mapping framework (written in C++) based on octrees [https://octomap.github.io/ github].

An octree is a tree-like data structure, as the name implies. (It is assumed that the reader is familiar with a tree data structure. If this is not the case, an explanation can be found [https://xlinux.nist.gov/dads/HTML/tree.html here]). The octree data structure is structured according to the rule: "A node either has 8 children, or it has no children". These children are generally called voxels, and represent space contained in a cubic volume. This is easier to understand visually.

[[File:octomap_image.png|600px|center]]

By having 8 children, and using an octree for 3D spatial representation, we are effectively recursively partitioning space into octants. As it is used in a multitude of applications dealing with 3 dimensions (collision detection, view frustum culling, rendering, ...) it makes sense to use the octree.
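To make the "8 children or none" rule concrete, here is a toy octree in Python. It is only an illustration with our own class and method names; it stores a plain occupied flag per voxel and is not the probabilistic OctoMap implementation.

```python
class OctreeNode:
    """A cubic region of space that is either a leaf or has exactly 8 children."""

    def __init__(self, center, half_size):
        self.center = center        # (x, y, z) of the cube's center
        self.half_size = half_size  # half of the cube's edge length
        self.children = None        # None for a leaf, otherwise a list of 8 nodes
        self.occupied = False

    def is_leaf(self):
        return self.children is None

    def subdivide(self):
        """Split this cube into its 8 octants."""
        cx, cy, cz = self.center
        q = self.half_size / 2.0
        self.children = [
            OctreeNode((cx + dx * q, cy + dy * q, cz + dz * q), q)
            for dx in (-1, 1) for dy in (-1, 1) for dz in (-1, 1)
        ]

    def _child_for(self, point):
        """Pick the octant that contains the point (index = 4*bx + 2*by + bz)."""
        index = 0
        for axis in range(3):
            index = index * 2 + (1 if point[axis] >= self.center[axis] else 0)
        return self.children[index]

    def insert(self, point, max_depth):
        """Mark the voxel containing the point as occupied, max_depth levels down."""
        if max_depth == 0:
            self.occupied = True
            return
        if self.is_leaf():
            self.subdivide()
        self._child_for(point).insert(point, max_depth - 1)

    def is_occupied(self, point):
        """Descend to the leaf containing the point and report its flag."""
        if self.is_leaf():
            return self.occupied
        return self._child_for(point).is_occupied(point)


# A 16 m cube around the sensor, refined three levels deep (2 m voxels)
root = OctreeNode((0.0, 0.0, 0.0), 8.0)
root.insert((1.0, 2.0, 3.0), max_depth=3)
```

Only the branch that contains a measurement is refined, so empty space stays represented by a few large cubes.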

The OctoMap framework creates a full 3-dimensional model of the space around a point (e.g. a sensor) in an efficient fashion. For the inner workings of the framework, we refer you to [http://www2.informatik.uni-freiburg.de/~hornunga/pub/hornung13auro.pdf the original paper], from which we have also taken the figure above. It is important to note that one can set the depth and resolution of the framework (as visible in the same figure). As it uses a depth-first-search traversal of the nodes in an octree, setting the maximal depth to be traversed greatly influences the running time. Moreover, the resolution of the resulting map can be changed by means of a parameter.

With the information presented in said paper, it is feasible to convert any sort of sensory information (e.g. from a laser rangefinder or lidar) into an octree representation ready for use [https://github.com/OctoMap/octomap/wiki/Importing-Data-into-OctoMap], as long as it follows this scheme:

<pre>
NODE x y z roll pitch yaw
x y z
x y z
[...]
NODE x y z roll pitch yaw
x y z
[...]
</pre>

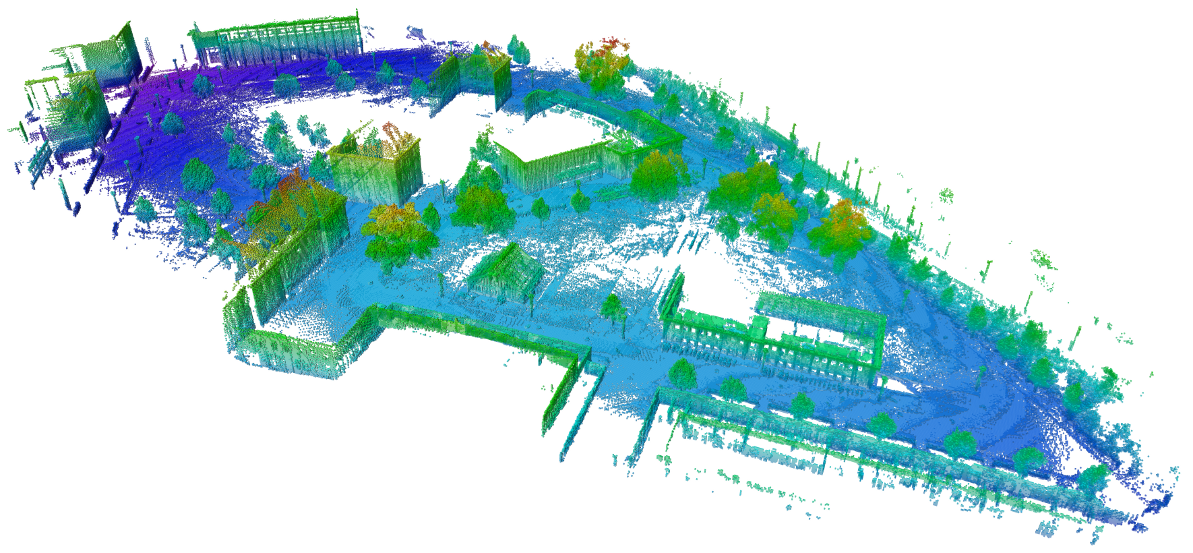

An example of such a (converted) representation can be seen in the image below.

[[File:octomap_example_map.png|600px|center]]
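Producing the plain-text scan scheme shown earlier takes only a few lines. The sketch below is our own helper, not part of OctoMap; it assumes each scan is given as a sensor pose ''(x, y, z, roll, pitch, yaw)'' together with the list of measured points.

```python
def format_scan_log(scans):
    """Serialise scans as 'NODE <pose>' lines, each followed by 'x y z' point lines.

    scans: iterable of (pose, points), where pose is (x, y, z, roll, pitch, yaw)
    and points is a list of (x, y, z) measurements taken from that pose.
    """
    lines = []
    for pose, points in scans:
        lines.append("NODE " + " ".join(f"{value:g}" for value in pose))
        for point in points:
            lines.append(" ".join(f"{value:g}" for value in point))
    return "\n".join(lines) + "\n"


# One hypothetical scan: sensor 1 m above the origin, two measured points
example_log = format_scan_log([
    ((0, 0, 1, 0, 0, 0), [(1.5, 0, 1), (2, 0.5, 1)]),
])
```

The resulting text can be written to a file and then converted with the tools described on the OctoMap wiki page linked above.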

Using an octree representation, the problem of flying a drone w.r.t. obstacles has been reduced to only obstacle avoidance, as detection is a simple check in the octree representation found by the octomap framework.


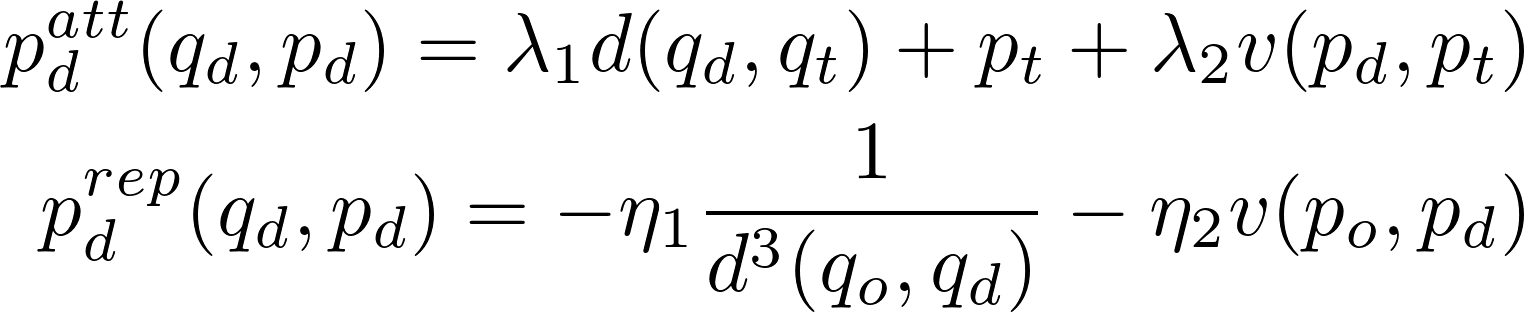

The potential field is constructed using attractive and repulsive potential equations, which pull the drone towards the target and push it away from obstacles. The attractive and repulsive potential fields can be summed together to produce a field that guides the drone past multiple obstacles and towards the target.

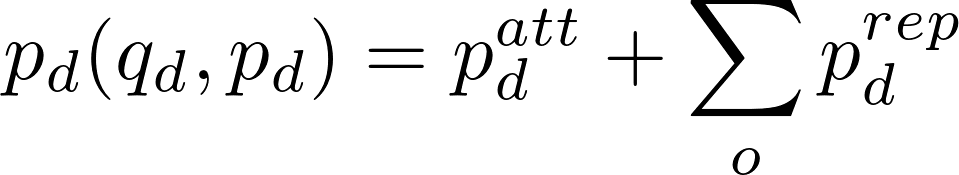

We consider potential forces to work in the ''x'', ''y'' and ''z'' dimensions. To determine the drone’s velocity based on the attractive and repulsive potentials, we use the following functions:

[[File:n_equation_1.png|600px|center]]

where ''λ<sub>1</sub>'', ''λ<sub>2</sub>'', ''η<sub>1</sub>'', ''η<sub>2</sub>'' are positive scale factors, ''d(q<sub>d</sub>, q<sub>t</sub>)'' is the distance between the drone and the target and ''v(p<sub>d</sub>, p<sub>t</sub>)'' is the relative velocity of the drone and the target. Similarly, distance and velocity of an obstacle ''o'' are used.

There may be multiple obstacles and each one has its own potential field. To determine the velocity of the drone, we sum all attractive and repulsive velocities together:

[[File:n_equation_2.png|600px|center]]
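To give an impression of how such a summation works, the sketch below implements one common textbook form of a potential field. The exact equations are those in the figures above and may differ: the functional forms, the influence radius, the default gains and all function names here are our own assumptions for illustration.

```python
import math


def attractive_velocity(drone_pos, target_pos, target_rel_vel, lam1=1.0, lam2=0.5):
    """Pull towards the target, proportional to displacement plus relative velocity."""
    return [lam1 * (t - d) + lam2 * v
            for d, t, v in zip(drone_pos, target_pos, target_rel_vel)]


def repulsive_velocity(drone_pos, obstacle_pos, obstacle_rel_vel,
                       eta1=1.0, eta2=0.5, influence_radius=3.0):
    """Push away from an obstacle; zero outside the (assumed) influence radius."""
    away = [d - o for d, o in zip(drone_pos, obstacle_pos)]
    dist = math.sqrt(sum(a * a for a in away))
    if dist == 0.0 or dist >= influence_radius:
        return [0.0, 0.0, 0.0]
    # Magnitude grows as the obstacle gets closer; direction is away / dist
    gain = eta1 * (1.0 / dist - 1.0 / influence_radius) / dist
    return [gain * a + eta2 * v for a, v in zip(away, obstacle_rel_vel)]


def total_velocity(drone_pos, target_pos, target_rel_vel, obstacles):
    """Sum the attractive velocity and the repulsive velocity of every obstacle.

    obstacles: list of (position, relative_velocity) pairs.
    """
    velocity = attractive_velocity(drone_pos, target_pos, target_rel_vel)
    for obstacle_pos, obstacle_rel_vel in obstacles:
        push = repulsive_velocity(drone_pos, obstacle_pos, obstacle_rel_vel)
        velocity = [v + p for v, p in zip(velocity, push)]
    return velocity


# Hypothetical scene: target 4 m ahead, one static obstacle 1 m ahead
zero = [0.0, 0.0, 0.0]
example_velocity = total_velocity(zero, [4.0, 0.0, 0.0], zero,
                                  [([1.0, 0.0, 0.0], zero)])
```

In this example the obstacle directly in the path reduces the forward velocity without changing its direction; an obstacle off to one side would additionally deflect the drone sideways.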

== User Tracking ==

It should be clear that tracking the user is a large part of the software for the Follow-Me drone. We present Python code, available at our [https://github.com/nimobeeren/0LAUK0 GitHub] repository, that can be used with a webcam as well as with normal video files, and that can use various tracking algorithms.

=== Tracker Comparisons ===

In order to decide which tracker to use, we have quite extensively compared all trackers implemented in OpenCV that work out of the box (version 3.4.4 has been used). Both videos from an online source [https://www.pyimagesearch.com/2018/07/30/opencv-object-tracking/] and our own footage ([https://drive.google.com/open?id=1Y2RFkfoI38LIsz4zbivxiqykBj6-3a7I long_video] and [https://drive.google.com/file/d/1kujiZjRVe4f8CnrzMyFRThsXbEhIXYcK/view?usp=sharing short_video]) have been used to compare the trackers.

We will first discuss all trackers with regard to the downloaded videos on a per-video basis, in the same order in which they were tested. The discussion covers two major points: how well a tracker deals with occlusion in the sense that it continues tracking the user, and how well it deals with occlusion in the sense that it reports that the user is occluded and resumes tracking afterwards.

* '''American Pharaoh''': This video is from a horse race. For each tracker we select the horse in first position at the start of the video as the object to be tracked. During the video, the horses take a turn, which changes the camera perspective. There are also small obstacles, namely white poles at the side of the racing track.
** '''BOOSTING tracker''': The tracker is fast enough, but fails to deal with occlusion. The moment there is a white pole in front of the object to be tracked, the BOOSTING tracker permanently loses track of its target. This tracker cannot be used to keep track of the target when there are obstacles. It can, however, be used in parallel with another tracker, sending a signal that the drone is no longer in line of sight with the user.
** '''MIL tracker''': The MIL tracker, standing for Multiple Instance Learning, uses a similar idea to the BOOSTING tracker, but implements it differently. Surprisingly, the MIL tracker manages to keep track of the target where BOOSTING fails. It is fairly accurate, but runs slowly at an average of only 5 FPS.
** '''KCF tracker''': The KCF tracker, standing for Kernelized Correlation Filters, builds on the idea behind BOOSTING and MIL. It reports tracking failure better than BOOSTING and MIL, but still cannot recover from full occlusion. As for speed, KCF runs fast enough at around 30 FPS.
** '''TLD tracker''': The TLD tracker, standing for tracking, learning and detection, uses a completely different approach from the previous three. Initial research suggested that it would perform well; however, the number of false positives is too high to use TLD in practice. In addition, TLD is the slowest of the trackers tested up to this point, at 2 to 4 FPS.
** '''MedianFlow tracker''': The MedianFlow tracker tracks the target in both forward and backward directions in time and measures the discrepancies between these two trajectories. Due to the way the tracker works internally, it cannot handle occlusion properly, which can be seen when using this tracker on this video. Similarly to the KCF tracker, the moment there is slight occlusion, it loses the target and does not recover. A positive point of the MedianFlow tracker compared with KCF is that its tracking failure reporting is better.
** '''MOSSE tracker''': The MOSSE tracker, standing for Minimum Output Sum of Squared Error, is a relatively new tracker. It is less complex than the previously discussed trackers and runs considerably faster than the others, at a minimum of 450 FPS. The MOSSE tracker can also easily handle occlusion, and can be paused and resumed without problems.
** '''CSRT tracker''': Finally, the CSRT tracker, standing for Discriminative Correlation Filter with Channel and Spatial Reliability (which thus is DCF-CSRT), is, like MOSSE, a relatively new tracking algorithm. It cannot handle occlusion and cannot recover. Furthermore, CSRT seems to give false positives after it has lost track of the target, as it tries to recover but fails to do so.

At this point we concluded that, for actual tracking purposes (i.e. how well the tracker deals with occlusion in the sense that it continues tracking the user), only the MIL, TLD and MOSSE trackers could be used. For error reporting (i.e. how well the tracker deals with occlusion in the sense that it reports that the user is occluded and resumes tracking afterwards), the KCF tracker is superior, which is also supported by online sources [https://www.pyimagesearch.com/2018/07/30/opencv-object-tracking/].

* '''Dashcam Boston''': This video is taken from a dashcam in a car, in snowy conditions. The car starts off behind a traffic light, then accelerates and takes a turn. The object to track has been selected to be the car in front. | |||

** '''MIL tracker''': The MIL tracker starts off tracking correctly, although it runs slowly (4-5 FPS). It is not affected by the snow (a visual impairment) or by the constantly changing camera angle in the turn, and it deals perfectly with the minimal obstacles occurring in the video.

** '''TLD tracker''': It runs slower than MIL and tracks worse. There are many false positives: even a few snowflakes were sometimes selected as the object to track, making TLD unusable as a tracker in our use case.

** '''MOSSE tracker''': Unsurprisingly, the MOSSE tracker runs at full speed and can track without problems. It does, however, make the bounding box slightly larger than initially indicated. | |||

When looking at only the downloaded videos (there were more than two), the conclusion remained the same: MOSSE is (in these cases) superior in terms of actual tracking, and KCF is (in these cases) superior in terms of error reporting. We assumed that our own footage would lead to the same conclusion.
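The FPS figures and failure reports above come from running each tracker over a video frame by frame. A minimal sketch of such a measurement loop is given below. It assumes a tracker object whose <code>update(frame)</code> method returns a success flag and a bounding box, which is the shape of the OpenCV tracker interface. The names <code>run_tracker</code> and <code>FakeTracker</code> are hypothetical and introduced only for illustration; the stand-in tracker replaces a real OpenCV tracker so that the sketch runs without a video file. It is an illustration, not necessarily the script used for the tests above.

```python
import time
from typing import Iterable, List, Tuple

BBox = Tuple[int, int, int, int]  # (x, y, width, height)


def run_tracker(tracker, frames: Iterable) -> Tuple[float, List[bool]]:
    """Feed every frame to the tracker.

    Returns the average frames per second and, per frame, whether the
    tracker reported success (True) or a tracking failure (False).
    """
    reported_ok: List[bool] = []
    start = time.perf_counter()
    for frame in frames:
        ok, _bbox = tracker.update(frame)  # same call shape as OpenCV trackers
        reported_ok.append(bool(ok))
    elapsed = time.perf_counter() - start
    fps = len(reported_ok) / elapsed if elapsed > 0 else float("inf")
    return fps, reported_ok


class FakeTracker:
    """Hypothetical stand-in: reports failure on frames labelled 'occluded'."""

    def update(self, frame) -> Tuple[bool, BBox]:
        if frame == "occluded":
            return False, (0, 0, 0, 0)
        return True, (10, 10, 50, 100)


# Frames are plain labels here; with OpenCV they would be images read from a video.
frames = ["visible", "visible", "occluded", "visible"]
fps, flags = run_tracker(FakeTracker(), frames)
print(flags)
```

The per-frame flags are what distinguishes a tracker that reports failures (such as KCF in our tests) from one that keeps reporting success regardless of what it is tracking.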

* '''Real Life Scenario 1''': The video, available [[File:long_video.mp4 here]], has been shot by [Name omitted]. The user to track is Pim. The filming location is outside, near MetaForum on the TU/e campus. In the video, [Name omitted] is walking backwards while holding a phone in the air to emulate drone footage. During the course of this video, Nimo and Daniel will be occasionally walking next to Pim, as well as in front of him. There are 7 points of interest defined in the video (footage was around 2 and a half minutes long), which are as follows: | |||

*# Daniel walks in front of Pim. | |||

*# Nimo walks in front of Pim. | |||

*# Everyone makes a normal-paced 180 degrees turn around a pole. | |||

*# Pim almost gets out of view in the turn. | |||

*# Nimo walks in front of Pim. | |||

*# Daniel walks in front of Pim. | |||

*# Pim walks behind a pole. | |||

** '''BOOSTING tracker''': Runs at a speed of around 20 - 25 FPS. (1) This is not seen as an obstruction. The BOOSTING tracker continues to track Pim. (2) The tracker follows Nimo until he walks out of the frame. Then, it stays at the place where Nimo would have been while the camera pans. (3) As mentioned, the bbox (the part that shows what the tracker is tracking) stays at the place where Nimo was. (4) At this point the tracker has finally started tracking Pim again, likely due to luck. (5) see 2. and notes. (6) see 2. and notes. (7) see 2. and notes. | |||

***''Notes: Does not handle shaking of the camera well. It is also bad at recognizing when the subject to track is out of view; the moment there is some obstruction (points 2, 5, 6, 7), it follows the obstruction and keeps trying to follow it.''

**'''MIL tracker''': Runs at a speed of around: 10 - 15 FPS. (1) The tracker follows Daniel for a second, then goes back to the side of Pim, where it was before. (2) The tracker follows Nimo until he walks out of frame. Then it follows Daniel, as he is closer to the bbox than Pim. (3) The tracker follows Daniel. (4) The tracker follows Daniel. (5) The tracker follows Nimo until he is out of view. (6) The tracker follows Daniel until he is out of view. (7) The tracker tracks the pole instead of Pim.

***''Notes: The bbox seems to be lagging behind in this video (it is too far off to the right). Similarly to BOOSTING, MIL cannot deal with obstruction and is bad at detecting tracking failure.''

**'''KCF tracker''': Runs at a speed of around: 10 - 15 FPS. (1) The tracker notices this for a split second and outputs tracking failure, but recovers easily afterwards. (2) The tracker reports a tracking failure and recovers. (3) no problem. (4) no problem. (5) The tracker does not report a tracking failure. This was not seen as an obstruction. (6) The tracker does not report a tracking failure. This was not seen as obstruction. (7) The tracker reports a tracking failure. Afterwards, it recovers. | |||

***''Notes: none.'' | |||

**'''TLD tracker''': Runs at a speed of around: 8 - 9 FPS. (1) TLD is not affected. (2) TLD started tracking Daniel before Nimo could come in front of Pim, then switched back to Pim after Nimo was gone. (3) TLD produces false positives on water on the ground, random bushes and just about anything except Pim. (4) see notes. (5) see notes. (6) see notes. (7) see notes.

***''Notes: The bbox immediately focuses only on Pim's face. Then it jumps to Nimo's pants. It switches between Nimo's hand, Pim's cardigan and Pim's face continuously, even before point 1. At points 4 through 7 the TLD tracker is all over the place, except on Pim.''

**'''Medianflow tracker''': Runs at a speed of around: 70 - 90 FPS. (1) No problem. (2) It tracks Nimo until he is out of view, then starts tracking Daniel as he is closer, similar to MIL. (3) It tracks Daniel. (4) It tracks Daniel. (5) It reports a tracking error, only to return to tracking Daniel. (6) It keeps tracking Daniel until he is out of view, then reports a tracking error for the rest of the video. (7) Tracking error.

***''Notes: The bbox becomes considerably larger when the perspective changes (e.g. Pim walks closer to the camera). Then, it becomes smaller again but stops focussing on Pim and reports tracking failure.'' | |||

**'''MOSSE tracker''': Runs at a speed of around: 80 - 100 FPS. (1) It is not affected. (2) It follows Nimo. (3) It stays on the side. (4) It is still on the side. (5) It follows Nimo. (6) It stayed on the side and tracked Daniel for a split second. (7) It tracks the pole.

***''Notes: Interestingly MOSSE doesn't perform well in a semi real-life scenario. It is bad at detecting when it is not actually tracking the subject to track.'' | |||

**'''CSRT tracker''': Runs at a speed of around: 5 - 7 FPS. (1) It is not affected. (2) It follows Nimo, then goes to the opposite side of the screen and tracks anything in the top left corner. (3) It tracks anything in the top left corner. (4) It tracks anything in the top left corner. (5) It tracks Nimo, then keeps tracking the top left corner. (6) It tracked a non-existent object at the top right. (7) It goes to the top left to track another non-existent object.

***''Notes: Deals badly with obstruction. Likes to track the top left and right corners of the screen.'' | |||

* '''Real Life Scenario 2''': The video, available [[File:short_video.mp4 here]], has been shot by [Name omitted]. The user to track is Pim. The filming location is outside, near MetaForum on the TU/e campus. In the short video, we tried to increase realism by having [Name omitted] stand on the stone benches walking backwards in a straight line. | |||

**''' BOOSTING tracker''': Runs at a speed of around: 25 - 30 FPS. Can surprisingly deal with having Pim's head cut off in the shot. Fails to follow when Nimo walks in front of Pim (i.e. it follows Nimo).

**''' MIL tracker''': Runs at a speed of around: 8 - 10 FPS. Can somewhat deal with having Pim's head cut off in the shot, but does not reset the bbox to keep tracking his head as well as his body. Fails to follow when Nimo walks in front of Pim (i.e. it follows Nimo).

**''' KCF tracker''': Runs at a speed of around: 12 - 13 FPS. Can deal with having Pim's head cut off in the shot and nicely resets the bbox to include the head afterwards. Can deal with Nimo walking in front and reports tracking failure.

**''' TLD tracker''': Runs at a speed of around: 8 - 10 FPS. Cannot deal with having Pim's head cut off in the shot (it tracks the stone behind him). Can deal with Nimo walking in front, but does not report tracking failure.

**''' Medianflow tracker''': Runs at a speed of around: 60 - 70 FPS. Can deal with having Pim's head cut off in the shot, but seems to be bad at tracking in general (again the bbox is off on the right side). Fails to follow when Nimo walks in front of Pim (i.e. it follows Nimo).

**''' MOSSE tracker''': Runs at a speed of around: 70 - 80 FPS. Can deal with having Pim's head cut off in the shot and nicely resets the bbox to include the head afterwards. Fails to follow when Nimo walks in front of Pim (i.e. it follows Nimo).

**''' CSRT tracker''': Runs at a speed of around: 4 - 6 FPS. Can deal with having Pim's head cut off in the shot and nicely resets the bbox to include the head afterwards. Briefly switches to Nimo when he walks in front of Pim, but then switches back.

=== Tracker Conclusions === | |||

During the testing phases, we came to mixed conclusions. On the one hand, for the downloaded video sources (not all testing is mentioned, so as not to clutter the wiki too much), the MOSSE tracker consistently outperforms the other trackers at tracking whichever subject we had selected. KCF consistently outperforms the others with respect to error reporting. On the other hand, for our own footage it turned out that MOSSE was outperformed by KCF on the tracking front as well.

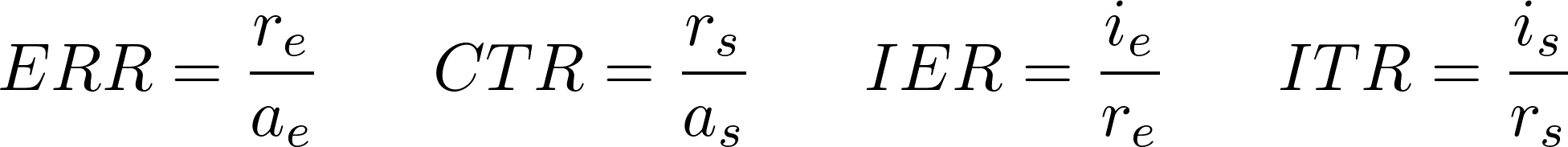

To further investigate which tracker is the better one, we have analysed our own footage. Using a screen recording of the tracking algorithms (KCF and MOSSE in parallel) at work, we have stepped through frame-by-frame to manually count the number of false positive frames, as well as the number of tracking failures and successes. The measures used to find out how good a tracker is are the ''Error Reporting Rate (ERR)'', ''Correct Tracking Rate (CTR)'', ''Incorrect Error Rate (IER)'' and ''Incorrect Tracking Rate (ITR)'' as shown here. | |||

[[File:d_equation_7.png|400px|center]] | |||

<!-- ERR = \frac {r_e} {a_e} ~~~~~ CTR = \frac {r_s} {a_s} ~~~~~ IER = \frac {i_e} {r_e} ~~~~~ ITR = \frac {i_s} {r_s} --> | |||

In the formulas, ''r<sub>e</sub>'' and ''a<sub>e</sub>'' stand for ''reported error'' (number of frames) and ''actual error'' (number of frames in which the subject was completely occluded) respectively. Similarly, ''r<sub>s</sub>'' and ''a<sub>s</sub>'' stand for ''reported success'' (number of frames) and ''actual success'' (number of frames in which the subject was '''not''' completely occluded). Finally, ''i<sub>e</sub>'' and ''i<sub>s</sub>'' stand for ''incorrect errors'' (number of frames) and ''incorrect success'' (number of frames) respectively.
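The frame counts behind these symbols can be tallied from per-frame annotations. The sketch below shows one way to do so. It rests on our reading of the definitions and is an assumption, not a record of how the manual count was organized: a reported error counts as incorrect when the subject was not completely occluded, and a reported success counts as incorrect when the subject was occluded or the bbox was not on the subject. <code>FrameLabel</code> and <code>count_frames</code> are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class FrameLabel:
    occluded: bool     # ground truth: subject completely occluded in this frame
    reported_ok: bool  # tracker reported success for this frame
    on_target: bool    # manual judgement: the bbox is on the subject


def count_frames(labels: Iterable[FrameLabel]) -> Dict[str, int]:
    """Tally a_s, a_e, r_s, r_e, i_s and i_e over the annotated frames."""
    counts = {"a_s": 0, "a_e": 0, "r_s": 0, "r_e": 0, "i_s": 0, "i_e": 0}
    for frame in labels:
        counts["a_e" if frame.occluded else "a_s"] += 1
        if frame.reported_ok:
            counts["r_s"] += 1
            # Assumed rule: success is incorrect if the subject is hidden
            # or the bbox sits on something else.
            if frame.occluded or not frame.on_target:
                counts["i_s"] += 1
        else:
            counts["r_e"] += 1
            # Assumed rule: an error is incorrect if the subject was visible.
            if not frame.occluded:
                counts["i_e"] += 1
    return counts


labels = [
    FrameLabel(occluded=False, reported_ok=True, on_target=True),    # correct success
    FrameLabel(occluded=False, reported_ok=True, on_target=False),   # tracking someone else
    FrameLabel(occluded=True, reported_ok=False, on_target=False),   # correct error
    FrameLabel(occluded=False, reported_ok=False, on_target=False),  # incorrect error
]
counts = count_frames(labels)
print(counts)
```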

We first present a table with absolute numbers, and then one with the measures defined above.

{| class="wikitable" | |||

|+ Tracker Usability - Absolute | |||

|- | |||

! | |||

! Type | |||

! amount of frames | |||

! a<sub>s</sub> (Actual) / r<sub>s</sub> (tracker)

! a<sub>e</sub> (Actual) / r<sub>e</sub> (tracker)

! i<sub>s</sub> | |||

! i<sub>e</sub> | |||

|- | |||

! Short Video | |||

| Actual || 649 || 623 || 26 | |||

|- | |||

! | |||

| KCF || 649 || 606 || 43 || 0 || 17 | |||

|- | |||

! | |||

| MOSSE || 649 || 649 || 0 || 26 || 0 | |||

|- | |||

! Long Video | |||

| Actual || 2273 || 2222 || 51 | |||

|- | |||

! | |||

| KCF || 2273 || 2016 || 257 || 0 || 206 | |||

|- | |||

! | |||

| MOSSE || 2273 || 2273 || 0 || 1321 || 0 | |||

|} | |||

From the absolute values we compute the table with the measures:

{| class="wikitable" | |||

|+ Tracker Usability - Measures | |||

|- | |||

! | |||

! Tracker | |||

! ERR | |||

! CTR | |||

! IER | |||

! ITR | |||

|- | |||

! Short Video | |||

| ''KCF'' || 1.65 || 0.97 || 0.40 || 0 | |||

|- | |||

! | |||

| ''MOSSE'' || 0 || 1.04 || + Inf || 0.04 | |||

|- | |||

! Long Video | |||

| ''KCF'' || 5.04 || 0.91 || 0.80 || 0 | |||

|- | |||

! | |||

| ''MOSSE'' || 0 || 1.02 || + Inf || 0.58

|} | |||

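It should be noted that we have written ''+ Inf'' in the table to mark a division by zero: MOSSE never reported an error, so ''r<sub>e</sub>'' = 0. Strictly speaking, since ''i<sub>e</sub>'' = 0 as well, the ratio is 0/0 and therefore undefined rather than infinite.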
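The measures can be recomputed from the absolute counts with a few lines of Python. The sketch below uses the short-video counts from the first table, reading the ''a<sub>s</sub>'' and ''a<sub>e</sub>'' columns of the tracker rows as ''r<sub>s</sub>'' and ''r<sub>e</sub>''. The helper names <code>ratio</code> and <code>measures</code> are hypothetical. For a zero denominator the sketch returns infinity when the numerator is positive and NaN for 0/0, which is the case the table marks as ''+ Inf''.

```python
import math
from typing import Dict


def ratio(numerator: int, denominator: int) -> float:
    """Divide, returning inf for x/0 with x > 0 and NaN for 0/0."""
    if denominator == 0:
        return math.inf if numerator > 0 else math.nan
    return numerator / denominator


def measures(a_s: int, a_e: int, r_s: int, r_e: int, i_s: int, i_e: int) -> Dict[str, float]:
    """Compute ERR, CTR, IER and ITR from the frame counts."""
    return {
        "ERR": ratio(r_e, a_e),  # reported errors per actual error frame
        "CTR": ratio(r_s, a_s),  # reported successes per actual success frame
        "IER": ratio(i_e, r_e),  # share of reported errors that were incorrect
        "ITR": ratio(i_s, r_s),  # share of reported successes that were incorrect
    }


# Short video: actual a_s = 623, a_e = 26 (taken from the absolute table).
kcf_short = measures(a_s=623, a_e=26, r_s=606, r_e=43, i_s=0, i_e=17)
mosse_short = measures(a_s=623, a_e=26, r_s=649, r_e=0, i_s=26, i_e=0)

for name, result in (("KCF", kcf_short), ("MOSSE", mosse_short)):
    print(name, {key: round(value, 2) for key, value in result.items()})
```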

As can be seen, KCF and MOSSE each perform extremely well in their respective categories. Since the ITR of the KCF tracker equals 0 in both computed cases, and 0 is the ideal value for this measure, we conclude that KCF is certainly good enough for the task. Similarly, MOSSE has a CTR close to 1, which is the ideal value for that measure, so it is good enough in that respect.

== User dependent positioning == | |||

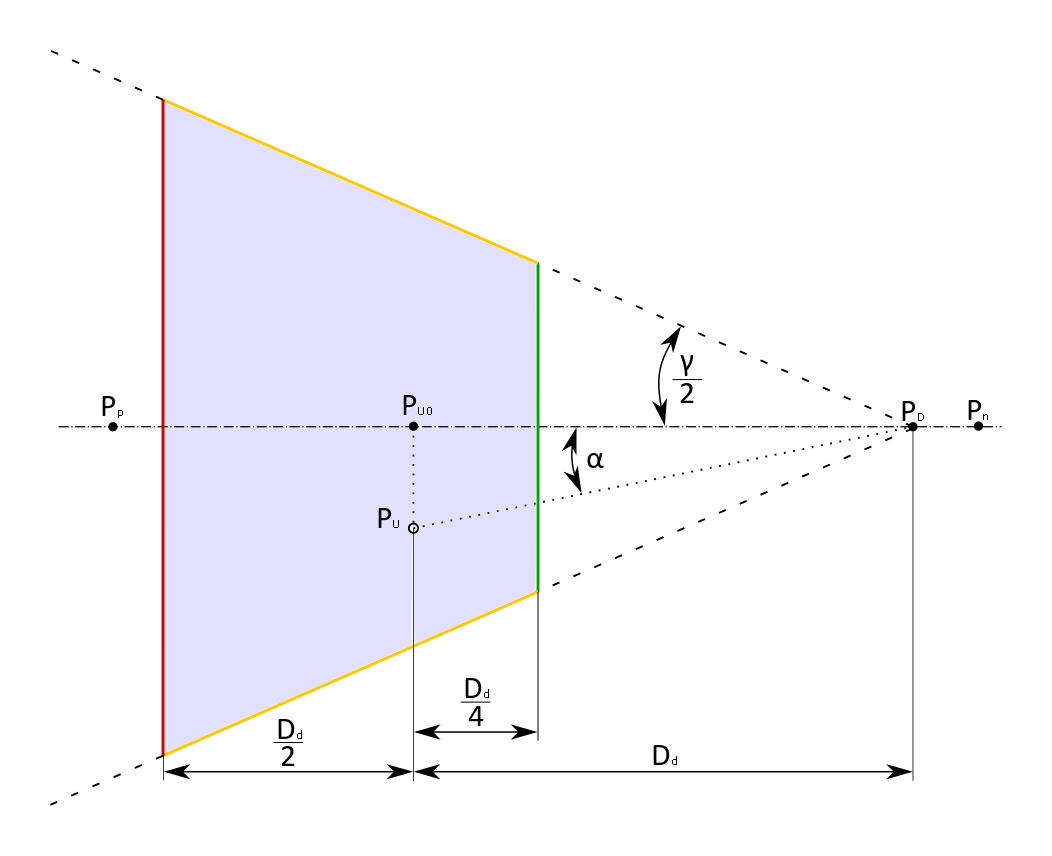

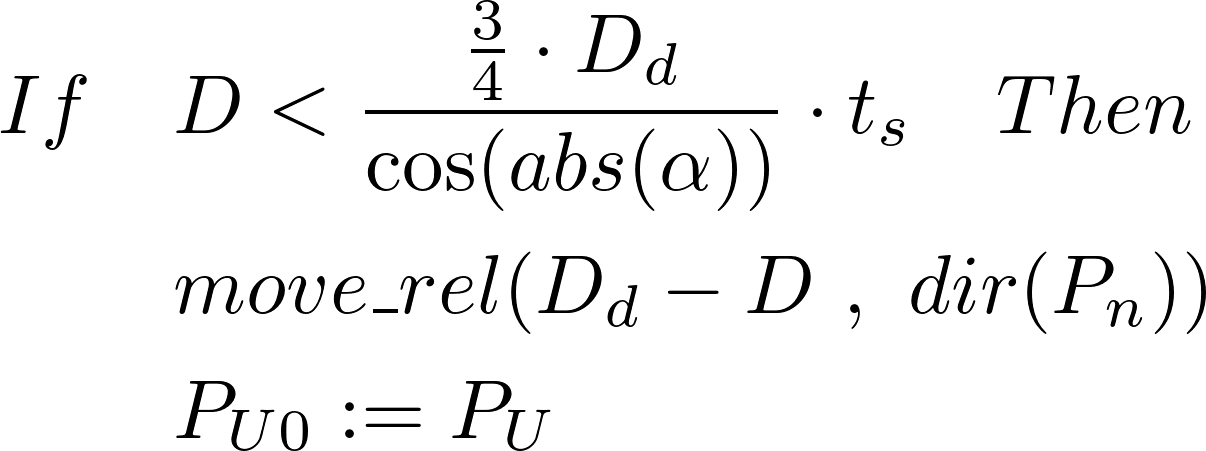

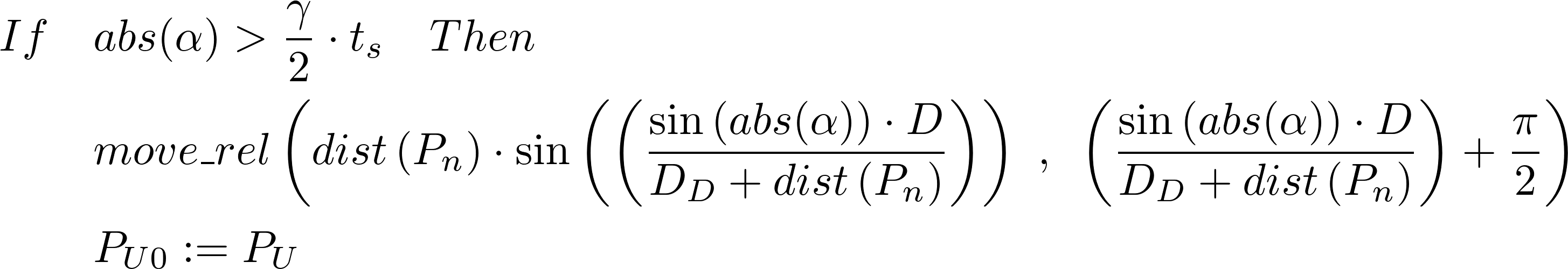

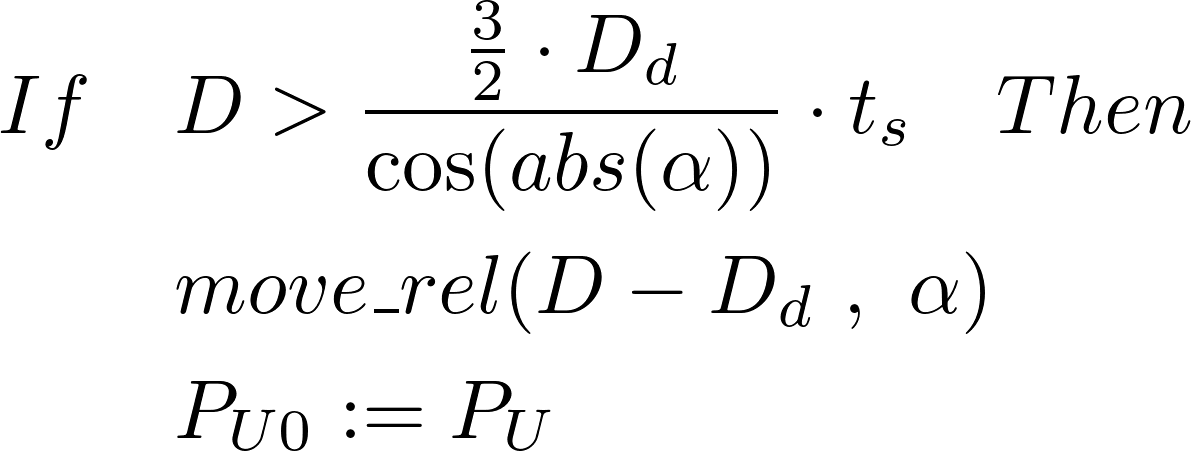

Since a user should still be able to move around as freely as possible while being guided, the drone needs to react appropriately to certain scenarios and movements of the user, while still pursuing the goal of guiding the user to her destination. In the following section, we introduce various variables, formulas and conditions that together allow for smooth movements of the drone while it reacts to these scenarios. The definitions all refer to, and at the same time act as a legend for, the following image.

[[File:ToleranceZone2.png|600px]] | |||

=== Variables & Parameters === | |||

'''D''' … Distance: this describes the continuously updated horizontal distance between the drone and the user. | |||

'''α''' … User angle: The angle between the vector '''''P<sub>D</sub>P<sub>U0</sub>''''' (the last significant position of the user) and the vector '''''P<sub>D</sub>P<sub>U</sub>''''' (the current position of the user). Calculated by '''dir(''P<sub>U</sub>'')'''.

'''D<sub>d</sub>''' … Default distance: a parameter that can be varied by the user, defining the desired default horizontal distance that the drone should keep from the user while guiding.