PRE2019 4 Group2: Difference between revisions


= DeepWeed, a weed/crop classification neural network =

Leighton van Gellecom, Hilde van Esch, Timon Heuwekemeijer, Karla Gloudemans, Tom van Leeuwen

----

'''User profile'''

Farmers who adopt sustainable farming methods differ significantly from conventional farmers in personal characteristics. Sustainable farmers tend to be younger and more highly educated, to have more off-farm income, and to adopt more new farming practices (Ekanem et al., 1999). Sustainable farming also has different goals from conventional farming: it focuses on aspects such as biodiversity and soil quality in addition to the usual high productivity and high profit.

Sustainable farming is a growing trend, supported by the EU, which has set goals for sustainable farming and promotes these guidelines (Ministerie van Landbouw, Natuur en Voedselkwaliteit, 2019). The trend shows in farms moving from conventional to sustainable methods and in new initiatives such as Herenboeren.

Agroforestry makes weed removal more difficult because crops are mixed. Weeding is physically heavy and unpleasant work. For these reasons, farmers who have made the transition to agroforestry have a growing need for weeding systems. This view is shared by Marius Monen, co-founder of CSSF and an initiator in the field of agroforestry.

Preventive spraying of pesticides reduces food quality and causes environmental pollution (Tang, Chen, Miao, & Wang, 2016). The users of weed detection software are therefore not only sustainable farmers but also, indirectly, the consumers of farm products, since it affects their food and environment.

Since the approach and views of sustainable farmers may differ, one requirement is that the system is flexible in what it regards as weeds and what as useful plants (Perrins, Williamson, Fitter, 1992). Furthermore, given the set-up of agroforestry, the system should be able to deal with different kinds of plants in a small area.

This research is carried out in cooperation with CSSF. In line with their advice, we focus on the type of agroforestry in which both crops and trees grow in strips on the land. To test the functionality of our design, we work together with farmer John Heesakkers, who recently shifted from livestock farming to this form of agroforestry. His farm will therefore serve as the model case for designing the system.

'''System requirements'''

Since the approach and views of sustainable farmers may differ, one requirement is that the system is flexible in what it regards as weeds and what as useful plants (Perrins, Williamson, Fitter, 1992). It should thus be able to distinguish multiple plant types instead of merely classifying weeds versus non-weeds. Based on user feedback, the following plant types should be recognised as weeds: [https://en.wikipedia.org/wiki/Atriplex Atriplex], [https://en.wikipedia.org/wiki/Capsella_bursa-pastoris Shepherd's purse], [https://en.wikipedia.org/wiki/Persicaria_maculosa Redshank], [https://en.wikipedia.org/wiki/Stellaria_media Chickweed], [https://en.wikipedia.org/wiki/Lamium_purpureum Red Dead-Nettle], [https://en.wikipedia.org/wiki/Chenopodium_album Goosefoot], [https://en.wikipedia.org/wiki/Cirsium_arvense Creeping Thistle] and [https://en.wikipedia.org/wiki/Rumex_obtusifolius Bitter Dock]. Furthermore, given the set-up of agroforestry, the system should be able to deal with different kinds of plants in a small area, so it should be able to recognise multiple plants in one image. It also means that the plant types include trees, which set the maximum height and breadth of the plants. Non-weeds are expected to be recognised when (nearly) fully grown, as young plants are very hard to distinguish. Weeds, however, should be removed as soon as possible and in every growth stage. Next, the accuracy of the system should be as close as possible to 100%; realistically, an accuracy of at least 95% should be achieved. The system should not classify a non-weed as a weed, because this would harm or destroy that plant. Lastly, given constraints on training, testing and possible deployment, the neural network should be as efficient and compact as possible, so that it can classify plant images in real time.
The following gives a rough upper bound for the processing time. Given a speed of 3.6 km/h, one processed image per meter, and at most two cameras used for detection, the upper bound on the processing time is 500 milliseconds per image. If the system classifies more quickly, then pictures could be taken more frequently, the movement speed could be increased, or both. Moreover, farming equipment is becoming increasingly expensive and is therefore prey to theft. The design should minimize the attractiveness of stealing the system. This yields the following concrete list of system requirements:

<ol>
<li> The system should be flexible in what it regards as weeds. </li>
<li> The system should be able to distinguish the following types of weeds: [https://en.wikipedia.org/wiki/Atriplex Atriplex], [https://en.wikipedia.org/wiki/Capsella_bursa-pastoris Shepherd's purse], [https://en.wikipedia.org/wiki/Persicaria_maculosa Redshank], [https://en.wikipedia.org/wiki/Stellaria_media Chickweed], [https://en.wikipedia.org/wiki/Lamium_purpureum Red Dead-Nettle], [https://en.wikipedia.org/wiki/Chenopodium_album Goosefoot], [https://en.wikipedia.org/wiki/Cirsium_arvense Creeping Thistle] and [https://en.wikipedia.org/wiki/Rumex_obtusifolius Bitter Dock]. </li>
<li> The system should be able to recognize multiple plants in one image.</li>
<li> Non-weeds should be recognized in a mature growth stage, whereas weeds should be recognized in all growth stages.</li>
<li> The classification accuracy of weeds versus non-weeds is preferably above 95%.</li>
<li> The system should ideally produce no false positive classifications. </li>
<li> The system should work under varying lighting conditions, restricted to daytime.</li>
<li> Preferably, the system should work well under varying weather conditions, such as heat and rain.</li>
<li> A single image should be processed in real time, that is, in under 500 milliseconds.</li>
<li> The position of the weed in the image should be known.</li>
<li> The robot should be as unattractive a target for theft as possible. </li>
<li> The farmer should not have to worry about the system: data acquisition and validation should take only a little of the farmer’s time.</li>
</ol>
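
The 500 millisecond bound in the requirements can be reproduced with a few lines of arithmetic. The sketch below is only an illustration: the function name is hypothetical, and it assumes that the images of all cameras are processed one after another on a single processor, which is how the bound in the text appears to be derived.

```python
def max_processing_time_ms(speed_kmh: float, images_per_meter: float, cameras: int) -> float:
    """Upper bound on the processing time per image, in milliseconds.

    Assumption (not stated explicitly in the text): all cameras share one
    processor, so their images are handled sequentially.
    """
    speed_m_per_s = speed_kmh * 1000 / 3600           # 3.6 km/h equals 1 m/s
    images_per_second = speed_m_per_s * images_per_meter * cameras
    return 1000 / images_per_second


# Values from the requirements: 3.6 km/h, one image per meter, two cameras.
print(max_processing_time_ms(3.6, 1.0, 2))  # 500.0
```

Doubling the driving speed or the number of images per meter halves the available time per image, which is the trade-off described in the paragraph above the list.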

'''Costs and benefits''' | |||

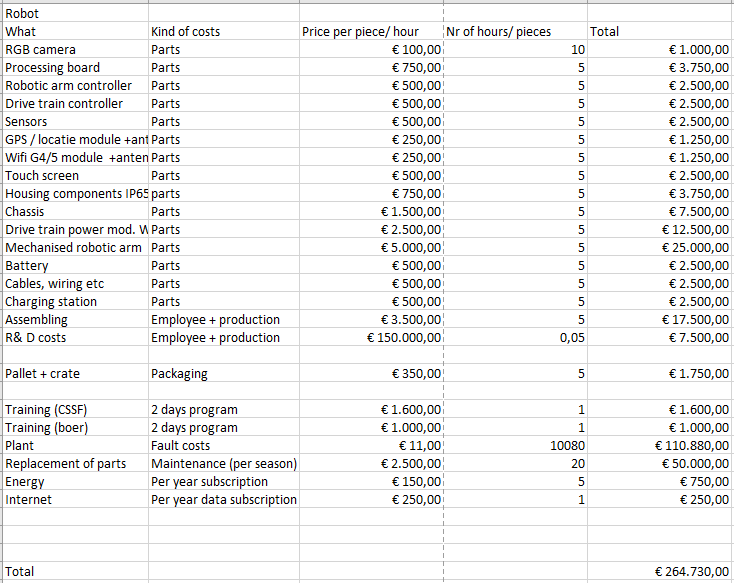

An important aspect regarding the user is what advantages the weeding robot will bring. The most obvious possible advantage is profit. Therefore, an estimation of the costs of the weeding robot per year was drawn up. In this estimation, the following aspects were considered: | |||

- Production costs | |||

- Research costs | |||

- Costs of the production materials | |||

- Packaging costs | |||

- Maintenance costs | |||

- The employee costs of the training required to work with the robot, where both the costs for the training givers (CSSF) and the receivers (the farmers) are considered | |||

- The costs of the training itself | |||

- Error costs of the machine: the costs made by the robot by accidentally destroying crops | |||

- Internet costs required for the robot to function | |||

- Energy costs of the robot | |||

The actual costs may be quite different from the costs that were calculated in this estimation, since the production of the robot has not started yet, the design is not finished, and the research is still quite in an earlier phase. However, the estimation can still prove useful to gain a general idea of the costs. Furthermore, it should be noted that several kinds of costs were not taken into account yet, such as employee cars, office rent costs, remainder employee costs, production space rent costs, and possibly other indirect costs. This is with a reason: the costs of the robot will probably lower after the first production phase, which will be in proportion to these additional costs. This consideration was consulted by CSSF, which have spent more attention on this already. In addition, adding these costs to the estimation would make the estimation a lot more inaccurate probably, since these costs are very hard to estimate in such an earlier stadium. | |||

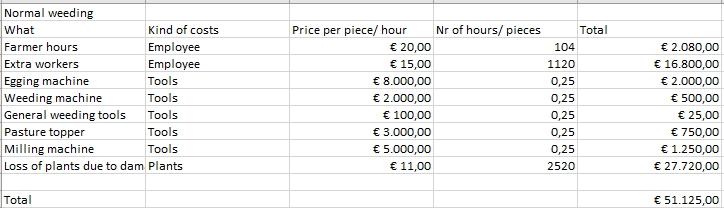

Of course, the costs of the robot mean little to the farmer without a comparison. Therefore, the yearly costs of traditional weeding were calculated as well. For this calculation, the following aspects were considered:

- Employee costs of the farmer to weed
- Employee costs of additional workers to weed
- Machines required for weeding
- Additional tools required for weeding
- Costs of mechanical damage to wanted plants

The comparison between the costs of the weeding robot and those of traditional weeding shows that the weeding robot will not be profitable for the farmer. This does not necessarily mean that it will not be beneficial to the farmer, though. The costs of the weeding robot were calculated to be around €250,000 per year, as opposed to €50,000 per year for traditional weeding. This calculation was based on the farm of John Heesakkers, with an estimated 5 robots required for a farm of that size. A more detailed calculation can be found in the [[#Appendix|appendix]]. This means a cost ratio of 1:5 for traditional weeding versus weeding with the robot. There are still opportunities for the future of the robot, though.

As CSSF also mentioned, the costs of the robot will probably decline after the first production phase. Production can be made more efficient and, as with most new technologies, costs decline after a while. Of course, this still means that the robot is very expensive in the initial phase. Subsidies could solve this. That is plausible, since the robot would support agroforestry, which is better for agriculture, as explained before. It is widely recognised that agriculture needs to change, given the high demand for food and the limited space, especially in the Netherlands. Agroforestry seems promising for these issues, so it is likely that subsidies could be obtained for a robot that enables farmers to convert to agroforestry.

In addition, a large part of the difference between the costs of the robot and those of traditional weeding is caused by the current error rate of the weeding robot. Based on the performance of the current system, the percentage of wanted plants damaged by the robot is estimated to be at least 2%. Since John Heesakkers indicated that mechanical damage from traditional weeding is very limited, the damage percentage of traditional weeding is estimated at 0.5%. This provides opportunities for cost reduction. If the weed recognition system were improved such that the costs of plant damage were equal to or even lower than those of traditional weeding, the weeding robot could become profitable. Ways to improve the system are elaborated below in the chapter “Further research and developments”.

From this, it can be concluded that while the robot may not be profitable for farmers at first compared to traditional weeding, there are still opportunities. In the first years, the difference between costs and profits can be bridged using subsidies. In that period, attention should be given to a business plan that reduces the costs of the robots through new developments in technology, lower material costs, and good marketing, so that R&D costs can be divided over a larger number of products.

Other motivations could also play a role for farmers in purchasing a weeding robot. While profit seems the most obvious, it is not necessarily the main motive. As CSSF explained, many farmers find it acceptable if the robot costs more than traditional weeding, if that means they can contribute to the development. Farmer John Heesakkers explained his situation: “Weeding by hand is not pleasurable work to do. Also, I have to search for workers in the months that the weeds grow really fast, because I cannot keep up with the work on my own anymore.”

Agroforestry is a relatively new form of farming. As explained earlier, it might prove very effective in different ways: it is better for the ground since soil depletion is reduced, the harvest is less vulnerable to pests and diseases, and it improves biodiversity. However, new developments are required to make this form of agriculture possible. Weeding becomes more difficult, since multiple kinds of crops grow close to each other. Where it used to be enough to distinguish the single crop on the field from weeds, weeding now takes more knowledge, since multiple kinds of plants need to be distinguished. In addition, it is more difficult to weed between the rows of planted crops, since the rows are not necessarily straight and sometimes even overlap. Farmer John explained: "In the months of spring, when the weeds grow really fast, it would take two people weeding full-time to keep up with the weeds". Weeding is heavy work, and in agroforestry it also requires considerable knowledge of plants. It is difficult to find people capable and willing to do this work.

The weeding robot can take tiresome work off the farmer's hands and free up time for other things. In addition, the robot provides stability: faster weed growth will no longer be a problem. The farmer does not need to hire extra employees, or instruct and supervise them.

'''Relating results to user needs'''

The end result of the project is a computer vision tool with a certain accuracy and confusion matrix. The confusion matrix yields the numbers of true positives, false positives, true negatives and false negatives. Whether these values are acceptable depends on the users' needs. The project focuses on two users, CSSF and John Heesakkers. Their needs overlap but are not the same, so they are discussed separately.

For John Heesakkers, running his farm efficiently is important. Ideally, the weeding robot removes all weeds and does not damage the crops, which means a network with an accuracy of 100% and zero false positives or false negatives. Since the model will in practice produce false positives and negatives, it is important to define bounds on these numbers. These bounds were indicated by Mr. Heesakkers. The robot has to remove 80% of all weeds in one try. He puts the maximum of damaged crops at 2-3% of all crops. Both percentages were determined intuitively. They indicate that not damaging crops has higher priority than removing weeds: following the user's preferences, it is more important to reduce the number of false positives than to increase the accuracy.

Training the model with two categories means creating a network that distinguishes between weeds and non-weeds. All the data of the crops is merged into one category, and the same goes for the data of the weeds. The model has to give a positive result when it detects a weed and a negative result when it detects a non-weed. The number of false positives gives an indication of the percentage of crops that will be damaged by the robot. The percentage of damaged crops has to be lower than 3% in total, so the percentage of false positives per pass has to be much lower than 3%, because the robot passes the crops multiple times while weeding. If the robot damaged 3% of the crops on each pass, the total percentage would be much higher. For example, damaging 0.5% of the crops on each pass means 3% will be damaged after six passes. Weeds can grow from February through October. Weeding every other week means the robot will pass the crops 18 times. To damage only 3% in total, at most 0.17% can be damaged on each pass. The percentage will be even lower if errors in other parts of the robot are taken into account. The accuracy gives an indication of the weeds that are not removed. The robot has to remove 80% of all weeds in one try. The accuracy has to be significantly higher than 80% because of errors in other parts of the robot. Besides detecting the weed, its position has to be determined and the weed has to be actually removed. Each step has a certain error, which can cause a weed not to be removed or a crop to be accidentally damaged. Since these steps have not been worked out yet, an assumption has to be made. The weeding robot of Raja et al. (2020) had a gap of 15% between software accuracy and actual accuracy. Using this as a guideline, the accuracy has to be at least 95%.
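
The per-pass damage budget and the accuracy target can be checked with a short calculation. This is a sketch with hypothetical function names. The text divides the total budget evenly over the passes; the compounding variant additionally assumes that damage events on different passes are independent, which is an assumption made here for illustration and not part of the original reasoning.

```python
def per_pass_budget_linear(total_budget: float, passes: int) -> float:
    """Even split of the total damage budget over all passes (as in the text)."""
    return total_budget / passes


def per_pass_budget_compound(total_budget: float, passes: int) -> float:
    """Per-pass damage rate p such that 1 - (1 - p) ** passes equals the budget.

    Assumes independent damage events per pass (illustrative assumption).
    """
    return 1 - (1 - total_budget) ** (1 / passes)


def cumulative_damage(per_pass: float, passes: int) -> float:
    """Fraction of crops damaged at least once after the given number of passes."""
    return 1 - (1 - per_pass) ** passes


passes = 18           # weeding every other week, February through October
total_budget = 0.03   # at most 3% of the crops damaged in total

print(f"linear split:    {per_pass_budget_linear(total_budget, passes):.4%}")
print(f"compound split:  {per_pass_budget_compound(total_budget, passes):.4%}")
print(f"0.5% x 6 passes: {cumulative_damage(0.005, 6):.4%}")

# Accuracy target: required removal rate plus the software-to-field gap
# reported for the robot of Raja et al. (2020).
required_removal = 0.80
field_gap = 0.15
print(f"software accuracy target: {required_removal + field_gap:.0%}")
```

Both ways of splitting the budget give roughly 0.17% per pass, so the simpler linear estimate used in the text is adequate at these small percentages.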

Training the model with eleven categories means creating a network that recognizes types of plants. It can recognize the different types of crops and weeds. Even though the farmer is only interested in the distinction between weeds and non-weeds, working with multiple categories is useful. It shows the capabilities of the network, and the software remains useful for a different farmer who wants to keep certain weeds. The ratio of false positives for non-weeds has to be extracted from the network; this ratio gives an indication of the percentage of damaged crops. The accuracy of this network can be determined in two different ways. The overall accuracy over all categories reflects the ability to recognize a plant species. The accuracy over only the weed species indicates the ability to recognize weeds. It is difficult to determine the exact accuracy of weed detection from the overall accuracy alone, because the degree of influence of the non-weeds is unknown. However, the overall accuracy is related to the accuracy of weed detection and therefore has to be above 95%.
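
How the false positive ratio for non-weeds can be extracted from a multi-class confusion matrix is sketched below. The function name, the three class labels and all counts are made up for illustration (the real network has eleven categories), and the matrix convention, rows for true classes and columns for predicted classes, is an assumption.

```python
def collapse_to_binary(confusion, labels, weed_labels):
    """Collapse a multi-class confusion matrix into weed/non-weed counts.

    confusion[i][j] is the number of images of true class labels[i]
    that were predicted as class labels[j]. "Positive" means weed.
    Returns (tp, fp, tn, fn).
    """
    tp = fp = tn = fn = 0
    for i, true_label in enumerate(labels):
        for j, predicted_label in enumerate(labels):
            count = confusion[i][j]
            true_is_weed = true_label in weed_labels
            predicted_is_weed = predicted_label in weed_labels
            if true_is_weed and predicted_is_weed:
                tp += count   # weed seen as (possibly another) weed
            elif not true_is_weed and predicted_is_weed:
                fp += count   # crop seen as weed: crop would be damaged
            elif true_is_weed and not predicted_is_weed:
                fn += count   # weed seen as crop: weed stays in the field
            else:
                tn += count
    return tp, fp, tn, fn


# Hypothetical example with one crop and two weed species.
labels = ["lettuce", "chickweed", "redshank"]
weed_labels = {"chickweed", "redshank"}
confusion = [
    [95, 3, 2],   # true lettuce
    [4, 90, 6],   # true chickweed
    [1, 9, 90],   # true redshank
]

tp, fp, tn, fn = collapse_to_binary(confusion, labels, weed_labels)
total = tp + fp + tn + fn
correct_multiclass = sum(confusion[i][i] for i in range(len(labels)))

print("false positive rate for crops:", fp / (fp + tn))
print("weed recall:", tp / (tp + fn))
print("overall multi-class accuracy:", correct_multiclass / total)
print("binary weed/non-weed accuracy:", (tp + tn) / total)
```

In this made-up example the binary accuracy is higher than the multi-class accuracy, because confusing one weed species with another does not matter for weeding. This illustrates why the overall accuracy alone does not determine the accuracy of weed detection.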

CSSF does not specify desired percentages as Mr. Heesakkers does. They are interested in a proof of concept. Rather than reaching a specific accuracy or percentage of false positives, gaining technical insights about the network is important to them. Their goal is to create an autonomous weeding and harvesting robot with the help of multiple projects of different scales. This robot should work with a network that has a high accuracy, produces close to no false positives and returns a negative result in case of doubt. This project is a step towards the goal of CSSF. If the results do not reach the goal set by Mr. Heesakkers, the project is still useful to CSSF: they will have gained insights into what to do and what not to do in following projects. The final report, including the proof of concept and the steps needed to create the final network, is the result that meets the needs of CSSF.

== Approach and Milestones ==

----

The main challenge is the ability to distinguish undesired plants (weeds) from desired plants (crops). Previous attempts (Su et al., 2020; Raja et al., 2020) have used chemicals to mark plants as a measurable classification method, and other attempts only try to distinguish a single type of crop. In sustainable farming based on biodiversity, a large variety of crops is grown at the same time, so it is extremely important for automatic weed detection software to be able to recognise many different crops as well. To achieve this, the first main objective is collecting data, which determines which plants can be recognised. The data should be colour images of many plant species, of the highest possible quality: high resolution, in focus and well lit. Species that do not have enough images will be removed. The second main objective is training and testing neural networks with varying architectures on the gathered data. The architectures can range from very simple networks with one hidden layer to pre-existing networks, such as ResNet (He et al., 2015), trained on datasets such as ImageNet (Russakovsky et al., 2015). Then, weeds will be defined as any plant species that is not desired, or not recognised. Based on this, the final objective is testing the best neural network(s) on new images from a farm, to measure their accuracy in a real environment.

To summarize: <br />

<ol>
<li>Images of plants will be collected for training.</li>
<li>Neural networks will be trained to recognise plants and weeds.</li>
<li>The best neural networks will be tested in real situations.</li>
</ol>
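
The definition of a weed used in the approach, a species that is either not desired or not recognised, can be written as a small decision rule on top of the network output. The sketch below is hypothetical: the function name, the probability dictionary and the confidence threshold of 0.8 are illustrative assumptions, not values from the project.

```python
def is_weed(probabilities: dict, desired: set, min_confidence: float = 0.8) -> bool:
    """Decide whether a plant counts as a weed.

    probabilities maps species name to the predicted probability.
    desired is the farmer's own set of species to keep, which keeps the
    system flexible about what counts as a weed.
    min_confidence is a hypothetical threshold below which the plant is
    treated as "not recognised".
    """
    species, confidence = max(probabilities.items(), key=lambda item: item[1])
    if confidence < min_confidence:
        return True                  # not recognised, so treated as a weed
    return species not in desired    # recognised: weed unless the farmer wants it


farmer_keeps = {"lettuce"}
print(is_weed({"lettuce": 0.93, "chickweed": 0.07}, farmer_keeps))  # False
print(is_weed({"lettuce": 0.08, "chickweed": 0.92}, farmer_keeps))  # True
print(is_weed({"lettuce": 0.55, "chickweed": 0.45}, farmer_keeps))  # True
```

Treating unrecognised plants as weeds favours weed removal over crop safety. A more cautious variant would return `False` below the threshold, which matches the preference of CSSF for a negative result in case of doubt.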

== Deliverables ==

----

The main deliverable will be a neural network trained to distinguish desired from undesired plants on a diverse farm, that is as accurate as possible and can recognise as many different species as possible. The performance of this neural network, as well as the explored architectures and encountered problems, will be described in this wiki, which is the second deliverable.

== Planning ==

----

End of week 1:

<table border="1px solid black">
<tr><th>Milestone</th><th>Responsible</th><th>Done</th></tr>
<tr><td>Form a group</td><td>Everyone</td><td>Yes</td></tr>
<tr><td>Choose a subject</td><td>Everyone</td><td>Yes</td></tr>
<tr><td>Make a plan</td><td>Everyone</td><td>Yes</td></tr>
</table>

End of week 2:

<table border="1px solid black">
<tr><th>Milestone</th><th>Responsible</th><th>Done</th></tr>
<tr><td>Improve user section</td><td>Hilde</td><td>Yes</td></tr>
<tr><td>Specify requirements</td><td>Tom</td><td>Yes</td></tr>
<tr><td>Make an informed choice for model</td><td>Leighton</td><td>Yes</td></tr>
<tr><td>Read up on (Python) neural networks</td><td>Everyone</td><td>Yes</td></tr>
</table>

End of week 3:

<table border="1px solid black">
<tr><th>Milestone</th><th>Responsible</th><th>Done</th></tr>
<tr><td>Set up a collaborative development environment</td><td>Timon</td><td>Yes</td></tr>
<tr><td>Have a training dataset</td><td>Karla</td><td>Yes</td></tr>
</table>

End of week 4:

<table border="1px solid black">
<tr><th>Milestone</th><th>Responsible</th><th>Done</th></tr>
<tr><td>Implement basic neural network structure</td><td>Timon, Leighton</td><td>Yes</td></tr>
<tr><td>Justify all design choices on the wiki</td><td>Tom</td><td>Yes</td></tr>
</table>

End of week 5:

<table border="1px solid black">
<tr><th>Milestone</th><th>Responsible</th><th>Done</th></tr>
<tr><td>Implement a working neural network</td><td>Timon, Leighton</td><td>Yes</td></tr>
</table>

End of week 6:

<table border="1px solid black">
<tr><th>Milestone</th><th>Responsible</th><th>Done</th></tr>
<tr><td>Explain our process of tweaking the hyperparameters</td><td>Timon</td><td>Yes</td></tr>
</table>

End of week 7:

<table border="1px solid black">
<tr><th>Milestone</th><th>Responsible</th><th>Done</th></tr>
<tr><td>Finish tweaking the hyperparameters and collect results</td><td>Timon, Leighton, Tom</td><td>Yes</td></tr>
<tr><td>Finding the costs and benefits of the weeding robot</td><td>Hilde</td><td>Yes</td></tr>
</table>

End of week 8:

<table border="1px solid black">
<tr><th>Milestone</th><th>Responsible</th><th>Done</th></tr>
<tr><td>Create the final presentation</td><td>Everyone</td><td>Yes</td></tr>
<tr><td>Hand in peer review</td><td>Everyone</td><td>Yes</td></tr>
<tr><td>Translating the results and costs to the user needs</td><td>Hilde and Karla</td><td>Yes</td></tr>
<tr><td>Determining future research</td><td>Hilde and Karla</td><td>Yes</td></tr>
<tr><td>Writing prototype section</td><td>Leighton, Timon, Tom</td><td>Yes</td></tr>
</table>

Week 9:

<table border="1px solid black">
<tr><th>Milestone</th><th>Responsible</th><th>Done</th></tr>
<tr><td>Do the final presentation</td><td>Everyone</td><td>Yes</td></tr>
</table>

== State of the art ==

----

This section summarizes the results of the literature research on the subject of the project. The main conclusions are as follows. In most existing systems, the camera observes the plants from above, which will be difficult when trees are also present; three-dimensional images could be a solution. Secondly, lighting has a large influence on the functioning of weed recognition software, which has to be taken into account in this project. A possible solution is converting the images into binary black-and-white pictures. Also, there are already many neural networks that can distinguish weeds from crops, and they are used in practice. However, all of these applications are in monoculture agriculture; the challenge of agroforestry is the combination of multiple crops. Another conclusion is that the camera resolution has to be high enough, since it has a large impact on the accuracy of the system. In most cases an RGB camera is used, since a hyperspectral camera is very expensive, and RGB images are sufficient to work with. Regarding datasets, most studies mention the problem of obtaining a sufficient dataset for training the neural network, which slows down the improvement of weed recognition software. Lastly, recognition can be based on colour, shape, texture, feature extraction or 3D images, so there are many options to choose from for this project.

A weed is a plant that is unwanted at the place where it grows. This is a rather broad definition, and therefore Perrins et al. (1992) investigated which plants are regarded as weeds by 56 scientists. They found that views differed greatly among the scientists. It is therefore not possible to clearly classify plants into weeds or non-weeds, since the classification depends on the views of a person and the context of the plant.

| Line 58: | Line 338: | ||

Most weed recognition and detection systems designed up to now are specifically designed for a sole purpose or context. Plants are generally considered weeds when they either compete with the crops or are harmful to livestock. Weeds are traditionally mostly battled using pesticides, but this diminishes the quality of the crops. The Broad-leaved dock weed plant is one of the most common grassland weeds, and Kounalakis et al. (2018) aim to create a general weed recognition system for this weed. The system designed relied on images and feature extraction, instead of the classical choice for neural networks. It had a 89% accuracy. | Most weed recognition and detection systems designed up to now are specifically designed for a sole purpose or context. Plants are generally considered weeds when they either compete with the crops or are harmful to livestock. Weeds are traditionally mostly battled using pesticides, but this diminishes the quality of the crops. The Broad-leaved dock weed plant is one of the most common grassland weeds, and Kounalakis et al. (2018) aim to create a general weed recognition system for this weed. The system designed relied on images and feature extraction, instead of the classical choice for neural networks. It had a 89% accuracy. | ||

Salman et al. (2017) researched a method to classify plants based on 15 features of their leaves. This yielded an 85% accuracy for the classification of 22 species with a training dataset of 660 images. The algorithm was based on feature extraction, with the help of the Canny edge detector and an SVM classifier.

Li et al. (2020) compared multiple convolutional neural networks for recognizing crop pests. The dataset consisted of 5629 manually collected images. They found that GoogLeNet outperformed VGG-16, VGG-19, ResNet50 and ResNet152 in terms of accuracy, robustness and model complexity. RGB images were used as input; infrared images are mentioned as an option for the future.


Riehle et al. (2020) present a novel algorithm for plant/background segmentation in RGB images, which is a key component in digital image analysis dealing with plants. The algorithm has been shown to work in spite of over- or underexposure of the camera, as well as with varying colours of the crops and background. The algorithm is index-based, and has been shown to be more accurate and robust than other index-based approaches. It reached an accuracy of 97.4% and was tested with 200 images.

Dos Santos Ferreira et al. (2017) created data by taking pictures with a drone at a height of 4 meters above ground level. The approach used convolutional neural networks and achieved high accuracy in discriminating different types of weeds. In comparison to traditional neural networks and support vector machines, deep learning has the key advantage that feature extraction is learned automatically from raw data, so it requires little manual effort. Convolutional neural networks have proven to be successful in image recognition. For image segmentation the simple linear iterative clustering algorithm (SLIC) was used, which is based on the k-means centroid-based clustering algorithm. The goal was to separate the image into segments that contain multiple leaves of soy or weeds. It is important that the pictures have a high resolution of 4000 by 3000 pixels. Segmentation was significantly influenced by lighting conditions. The convolutional neural network consists of 8 layers: 5 convolutional layers and 3 fully connected layers. The last layer uses SoftMax to produce the probability distribution. ReLU was used as the activation function of the convolutional layers and the fully connected layers. The classification of the segments was done with high robustness and had superior results to other approaches such as random forests and support vector machines. If a threshold of 0.98 is set, then 96.3% of the images are classified correctly and none receive an incorrect identification.

Yu et al. (2019) argued that deep convolutional neural networks (DCNNs) take much time to train (hours) and little time to classify (under a second). The researchers compared different existing DCNNs for weed detection in perennial ryegrass and for discrimination between different weeds. Due to the recency of the paper and the comparison across different approaches, it is a good estimate of the current state of the art. The best reported results exceed 0.98. The study concerns weed detection in perennial ryegrass, so the crops are not perfectly aligned. However, only the distinction between ryegrass and weeds is made. For robotics applications in agroforestry, different plants should be discriminated from different weeds.


Piron et al. (2011) suggest that there are two different types of problems. The first is characterized by detection of weeds between rows or, more generally, between structurally placed crops. The second is characterized by random positions. Computer vision has led to successful discrimination between weeds and rows of crops. Knowing where, and in which patterns, crops are expected to grow and assuming everything outside that region is a weed has proven to be successful. This study has shown that plant height is a discriminating factor between crop and weed at early growth stages, since the growth speed of these plants differs. An approach with three-dimensional images is used to exploit this. The classification is by far not robust enough, but the study shows that plant height is a key feature. The researchers also suggest that camera position and ground irregularities influence classification accuracy negatively.

Weeds have particular features, among them a fast growth rate, a greater growth increment and competition for resources such as water, fertilizer and space. These features are harmful for crop growth. Many line detection algorithms use Hough transformations and the perspective method. The robustness of Hough transformations is high. The problem with the perspective method is that it cannot accurately calculate the position of the lines for the crops on the sides of an image. Tang et al. (2016) propose to combine the vertical projection method and the linear scanning method to reduce the shortcomings of other approaches. The method is roughly based on transforming the pictures into binary black-and-white pictures to control for different illumination conditions, and then drawing a line between the bottom and top of the image such that the number of white pixels on it is maximized. In contrast to other methods, this method is real-time and its accuracy is relatively high.
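The line search described above can be illustrated with a minimal sketch. It assumes the picture has already been converted to a binary image (1 = white, 0 = black) and simply tries every straight line from a point on the top row to a point on the bottom row. The function names <code>line_score</code> and <code>best_line</code> are hypothetical, and this brute-force search is a simplification for illustration, not the implementation of Tang et al. (2016).

```python
def line_score(binary_image, x_top, x_bottom):
    """Count white pixels on the straight line from (x_top, first row) to (x_bottom, last row)."""
    height = len(binary_image)
    score = 0
    for y in range(height):
        # Fraction of the way from the top row to the bottom row.
        t = y / (height - 1) if height > 1 else 0.0
        x = int(x_top + t * (x_bottom - x_top) + 0.5)  # nearest column
        score += binary_image[y][x]
    return score


def best_line(binary_image):
    """Return (x_top, x_bottom, score) of the line covering the most white pixels."""
    width = len(binary_image[0])
    best = (-1, 0, 0)  # (score, x_top, x_bottom)
    for x_top in range(width):
        for x_bottom in range(width):
            score = line_score(binary_image, x_top, x_bottom)
            if score > best[0]:
                best = (score, x_top, x_bottom)
    return best[1], best[2], best[0]


# Toy 5x5 binary image with a vertical crop row in column 2.
image = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]
print(best_line(image))
```

The exhaustive search is quadratic in the image width; a real-time system would restrict the candidate lines, for example to a small window around the expected row positions.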


Herck et al. (2020) describe how they modified the farm environment and design to best suit a robot harvester. They took into account what kind of harvesting is possible for a robot and what is possible for different crops, and then tried to determine how the robot could best do its job.

== Design ==

----

This section elaborates on the design, which consists of multiple parts. The goal is to create detection software for a robot that is capable of weeding, so some more information on the robot is needed to determine the design. Moreover, this section elaborates on the type of solution that will be implemented in the software.

'''Robot'''

The software will depend on the robot design, therefore this section briefly elaborates on such a design. To keep costs as low as possible it is wise to create robots that can do multiple tasks, not only weeding. It is assumed that such a general-purpose robot possesses an RGB camera to take pictures and/or videos. It is also assumed that the robot will take the form of a land vehicle instead of an unmanned aerial vehicle (UAV), because a UAV would not be appropriate in this specific context. In a later stage of the farm, the trees would pose serious restrictions on the flying path: they form obstacles which the drone would have to avoid. Moreover, weeds and bushes grow on the ground, so a tree could block the line of sight to such weeds. The UAV might therefore have to adapt its flying height constantly, which would yield inconsistency in the gathered data and presumably affect the classification accuracy negatively. For these reasons it has been decided to focus on a land vehicle. This distinction is important as it influences the type of data that is gathered, and thus the type of data the software should be designed for. The gathered data therefore consists of pictures taken from the side, slightly above the ground.

Moreover, such a robot needs a certain speed to cover the farm by itself. Weeds grow, and within 2 or 3 days they are clearly visible and easily removable. The robot should therefore be able to cover the farm in under 2 days. Because weeding will be only one of the tasks of such a robot, it is important that the classification can be done quickly. Another important factor which influences the available time is lighting. For now it is assumed that the robot can only work in natural light, that is, daylight, the duration of which differs between parts of the world and with the time of year. All these factors combined argue for the need for quick identification.

To adhere to the requirement that the robot should be a target of theft as little as possible, it should be possible to store it away when it is not working. In addition, the value of the robot should be minimized wherever possible. The hardware necessary for image processing is rather expensive, therefore it is more convenient to process the images off the vehicle, for example by cloud computing. This would also minimize the maintenance and power needs of the robot. On the other hand, it does require a stable and fast Internet connection; with the arrival of 5G this should be possible.

'''Software'''

Multiple approaches exist for image classification. This section elaborates on the choice of the specific approach to be implemented. From the section above it is clear that the implementation should have relatively quick identification times, and for which type of data it should work. Moreover, as stated in the requirements section, the goal is to achieve a classification accuracy that is as high as possible.

There are different approaches to such a computer vision task. The approaches aimed particularly at plant or weed recognition include: support vector machines (SVM), random forests, AdaBoost, neural networks (NN), convolutional neural networks (CNN) and convolutional neural networks using transfer learning.

It is worth noting that not all of these classifiers were trained on the actual input image. Some researchers choose to first segment the image into different regions and feed those segments to the classifier. Dos Santos Ferreira et al. (2017) used SLIC for the segmentation of images, which is based on the k-means algorithm. More recently, Riehle et al. (2020) were able to distinguish plants from the background with 97.4% accuracy using segmentation. Segmentation is important because the position of the weed can be derived from it, which is of course crucial if the weed has to be removed.

Kounalakis et al. (2018) achieved 89% classification accuracy with the SVM approach to recognize weeds, and Salman et al. (2017) achieved 85% accuracy using the same approach for leaf classification and identification. Gašparović et al. (2020) achieved 89% accuracy recognizing weeds using the random forests approach. It is notable that the researchers implemented 4 different algorithms for the random forests approach and that this accuracy is that of the best implementation. Tang et al. (2016) found an accuracy of 89% for an ordinary neural network with the backpropagation algorithm. Li et al. (2020) achieved an accuracy of 98% recognizing crop pests using a CNN. Yu et al. (2019) found an accuracy larger than 98% recognizing weeds in perennial ryegrass using a CNN. Espejo-Garcia et al. (2020) used a CNN with transfer learning and evaluated different models. With the best model (with an SVM for transfer learning) they achieved 99% accuracy. Comparing these numbers, the CNN generally achieves the best result. However, these classifiers have all been trained on different datasets, so comparing these numbers cannot fully settle which approach is actually the best.

Dos Santos Ferreira et al. (2017) compared their CNN to an SVM, AdaBoost and a random forest. The CNN outperformed the other approaches in terms of classification accuracy. Since all approaches were tested on the same dataset, we can argue that CNNs seem most appropriate to achieve a high classification accuracy. In this particular context, false positives weigh more heavily than false negatives in weed identification, because false negatives can be resolved when the robot passes the same plant more than once. Removing a crop because it was falsely identified as a weed, however, could have larger negative effects if the robot passes the crops relatively frequently. Dos Santos Ferreira et al. (2017) also found an important property of their CNN: when setting a threshold in determining classification they were able to achieve a 96.3% accuracy with no false positives. The researchers also noted that using deep neural networks removes the tedious task of feature extraction, because the features are learned automatically from the raw data. This might improve the generalizability of the CNN.
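The thresholding idea can be sketched as follows: the network output is turned into probabilities with SoftMax, and a class is only assigned when the highest probability reaches the threshold; otherwise the plant is left undecided and not removed. This is a minimal illustration, not the code of Dos Santos Ferreira et al. (2017). The function names, the example scores and the label names are assumptions; only the threshold value of 0.98 comes from the text.

```python
import math


def softmax(scores):
    """Convert raw network outputs into a probability distribution."""
    highest = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_with_threshold(scores, labels, threshold=0.98):
    """Return the most likely label, or None when the network is not confident enough."""
    probabilities = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probabilities[i])
    if probabilities[best] >= threshold:
        return labels[best]
    return None  # undecided: the robot should leave the plant alone


labels = ["weed", "crop"]
print(classify_with_threshold([8.0, 0.0], labels))  # confident prediction
print(classify_with_threshold([1.0, 0.0], labels))  # below the threshold
```

A plant that stays undecided can be photographed again on a later pass, which fits the observation that false negatives are cheaper than false positives in this context.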

To further argue for the use of a convolutional neural network, two other factors should be evaluated, namely the time taken for classification and the ability to use this approach on land vehicles. Yu et al. (2019) state that deep convolutional neural networks (DCNN) take much time to train (hours), whereas classification takes little time (under a second). Booij et al. (2020) made a driving robot that identified plants with 96% accuracy and could drive at up to 4 km/h. It is notable that the researchers were able to use 5G and cloud computing, which might be crucial for real-time identification. Moreover, Raja et al. (2020) made a weeding robot with a crop detection accuracy of 97.8%. The land vehicle was able to move at up to 3.2 km/h. There is still quite a gap between the detection accuracy and the 83% of weeds removed in the controlled setting where it was tested, but these studies confirm the feasibility of a land vehicle. Lastly, the suitability of a CNN for agriculture is supported implicitly by the fact that the studies named above all focus on agriculture. It is also argued explicitly that CNNs have proven to deliver good results in precision agriculture for identifying plants (Espejo-Garcia et al., 2020).

== Creating the dataset ==

----

One of the main obstacles in creating a functioning network is the dataset used for training. The dataset has to consist of many pictures in order to reach a high accuracy, and it has to represent the environment in which the robot will operate. This means the plants in the pictures have to look the same as the plants on the farm. Obtaining such pictures of the specific plants on a farm online is not easy, since most datasets are owned by companies and not shared. The solution to this problem would be to obtain the pictures ourselves, in cooperation with the farmer.

The dataset used in this project has three downsides compared to a dataset created by taking the pictures ourselves. The first downside is the number of pictures, which is not as large as desired. Furthermore, the pictures in the current dataset, which were found online, do not represent the farm as well as pictures taken at the farm would. It is possible to create a working network without photos from the operating environment, but using pictures from the operating environment is preferred. The final downside is the distribution of the number of pictures per category: the current dataset contains significantly more pictures of weeds than of non-weeds. When creating the dataset, it is important to take this distribution into account.

Involving the farmer in the process of creating the dataset has multiple benefits, provided that clear instructions and explanations are given to the farmer. The farmer knows which plants grow on the farm and can tell which plants are unwanted weeds, so no external person is needed to identify the plants. There are other aspects in which the farmer can tailor the network to his or her wishes. The acceptable damage to the crops can be determined: a tradeoff has to be made between not damaging the crops and weeding all the undesired plants, and each farmer can decide to what extent one is more important than the other. Another benefit is that the farmer is introduced to the workings of the robot without the robot weeding plants. The robot will probably be new to the farmer, who might worry whether the robot will indeed only weed the unwanted plants. By starting with data collection, trust in the robot’s performance can be built.

To create the dataset, the robot has to be able to ride around the crops and take pictures. In the beginning the robot will not be able to recognize weeds or crops; it will simply take pictures of the plants. A benefit of using pictures taken by the robot is that the angle at which the photos are taken will be the same during training and operation. If possible, the photos can be uploaded to cloud storage immediately, which would make it possible to process the photos while the robot is still taking them. The robot will probably have a wireless connection, but it is not certain whether this connection is strong enough to upload many pictures. If uploading directly is not possible, the robot would have to store the pictures, which can then be uploaded and processed after the robot has finished. This upload would have to be done by the farmer or automatically; the latter is preferred. Uploading the pictures to cloud storage has the benefit that the pictures can be processed anywhere: employees whose task it is to process the pictures can work from home. A downside is that working with cloud storage calls for extra data security.

Which weed sizes are important to record in the dataset depends on how often the robot will be used by the farmer. If the robot were used every day, larger weeds would not be important since the weeds would not have the time to grow that large. The accuracy of the network is also of influence: with a lower accuracy, the chance of not removing all the weeds in one go is larger, and weeds that are not removed have more time to grow. An assumption therefore has to be made about the maximum time a weed has to grow. It is assumed that the robot will weed the plants every week and that a weed will be removed in a maximum of three attempts. This means that the sizes weeds can reach during three weeks are important to include in the dataset, so creating the dataset will take roughly three weeks. These weeks should fall in a period in which the weeds grow well; the weather will play a part in this. Some more days should be added to these weeks to include larger sizes in the dataset, to account for weeks in which the weeds grow faster than normal. This means the farmer will have to let the weeds grow for roughly three weeks plus these extra days in order to create the dataset. It is not necessary to take pictures each day during this period.

The total number of pictures that will be taken depends on the network, the number of plant species and the time needed to process a picture. If only the network were of influence, as many pictures as possible would be taken, since for deep learning more data is generally better. However, processing the pictures will be done manually, so the number of pictures has to be limited. The minimum number of pictures the network needs depends on the desired accuracy of the network: a high accuracy calls for a lot of data. The number of plant species is also of influence: more species means more pictures. The literature study showed that thousands of pictures are necessary. A precise number is difficult to give for now. If a thousand pictures were taken each day for five days, this would give five thousand pictures. With a helpful interface to work with, the average time spent on processing one picture is assumed to be 15 seconds. This means processing five thousand pictures takes about 21 hours. Assuming an hourly wage of 12 euros, processing the data will cost 252 euros. This amount will increase when the number of pictures increases, and decrease when the processing interface is made easier and quicker. A tool to enlarge the dataset after processing is image augmentation, which is relatively fast and cheap.
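The cost estimate above can be reproduced with a few lines of Python, which also makes it easy to see how the cost changes with the number of pictures or the processing time. The function name <code>labelling_cost</code> is hypothetical; the default values (15 seconds per picture, 12 euros per hour) are the assumptions stated in the text, and the hours are rounded to whole hours as in the text.

```python
def labelling_cost(n_pictures, seconds_per_picture=15, hourly_wage_eur=12):
    """Return (hours, cost in euros) for manually processing n_pictures."""
    hours = round(n_pictures * seconds_per_picture / 3600)  # whole hours
    return hours, hours * hourly_wage_eur


hours, cost = labelling_cost(5000)
print(f"{hours} hours, {cost} euros")
```

Halving the processing time per picture, for example through a quicker interface, roughly halves the cost for the same number of pictures.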

In order to process the pictures efficiently, a clear plan of approach has to be made. This plan will make it possible for multiple employees of many levels to process data without having to know the specifics of the farm. It will also ensure that the data is processed correctly and can be used to train the network without further adaptations. Since the robot will be used by multiple farms with different plant species, the process of creating a dataset will have to take place multiple times. With a clear plan of approach, it will be easier to have new employees work on data processing for a farm. The plan has to contain descriptions of the wanted and unwanted plants. These will be specific to each farm, and the farmer can help with these descriptions. The plan also has to indicate how to make the data ready for training. This means deleting pictures with no plants at all. Pictures showing one plant species have to be sorted. Pictures showing multiple plant species have to be divided in some way; whether this will be done by splitting them into multiple pictures or by marking divisions within one picture is yet to be decided. The divided parts then have to be sorted. As mentioned before, a helpful interface to process the pictures will speed up the work. The plan of approach should go hand in hand with the processing software.

After the data has been processed on the cloud storage, the dataset is finished and accessible to the employees who will train the network. After the first execution of this process of creating a dataset, the results might be insufficient. In that case, the process has to be altered. This has to be taken into account when starting with this process. After the start-up phase, the process will be fine-tuned and can be performed as desired at the following farms. The datasets and trained networks that are made can be reused if the plant species are the same. If another farm has more or different plant species, more data has to be collected. Creating a dataset at multiple farms will create a large dataset of many plant species. This could lead to a phase in which creating a dataset is no longer necessary.

== Prototype ==

----

Some parts of the design were chosen based on early results of the experiments done to make this software. This section explains how these choices were made.

The architecture of a model is very dependent on the task, and each variation has its own benefits and downsides. One major choice was between the use of transfer learning and a model made from scratch. Transfer learning models can converge more quickly and with less data, and have a higher performance if used for the right task, but tend to be larger and more complicated. Here a “right task” is characterized by high similarity between the data the network is pre-trained on and the target data (the data you would like to predict). Simple models can take more time and data to converge, but are smaller and more flexible. In some early performance experiments, a simple model performed similarly to, if not better than, the transfer learning models.

Deep learning requires a lot of data to train a model correctly (the ImageNet database consists of over one million pictures). The amount of data sufficient for training depends on the type of data and model, but generally at least a thousand images are required for computer vision tasks. Quality images are often hard to find or owned by private companies, which limits the available data significantly. Because images of weeds and other plants were limited in this manner, data augmentation was applied to prevent overfitting of the neural network by increasing the number of different images.

==== Data ====

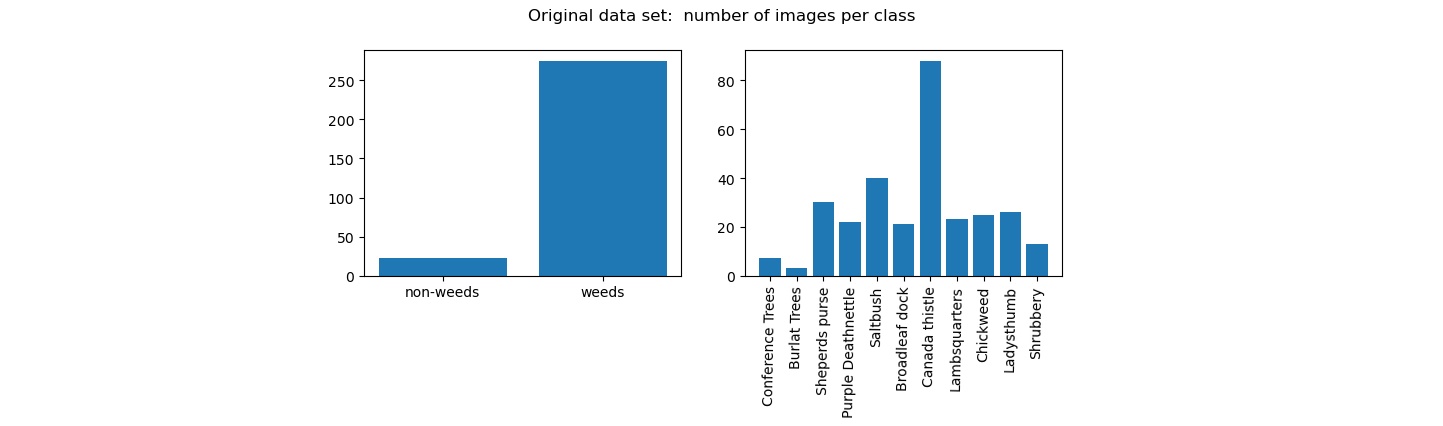

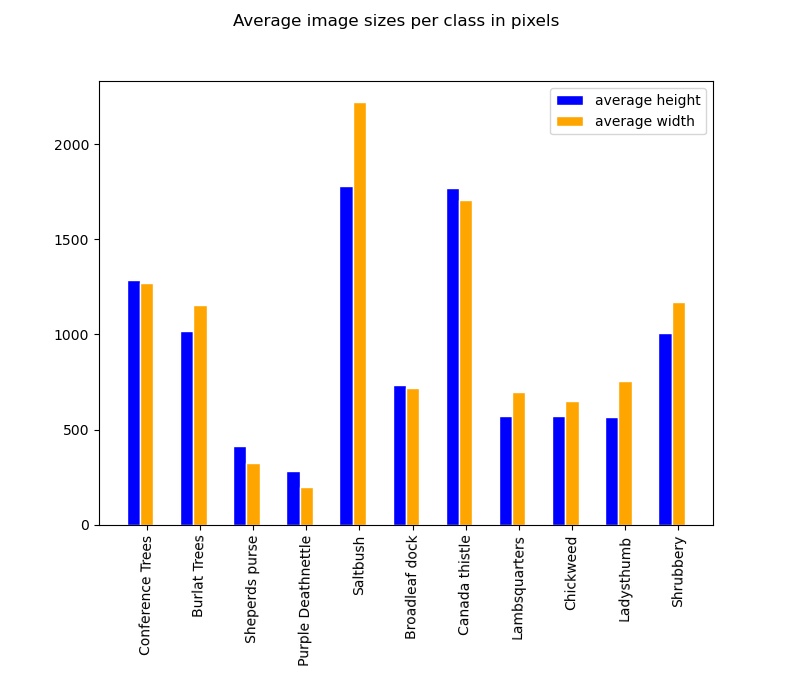

As mentioned previously, the dataset is not balanced, as can be seen in Figure 2. The two bar plots show, first of all, a huge gap between the amount of data for weeds and non-weeds: of the 298 images in total, approximately 92% are of weeds. Moreover, the bar plot displaying the distribution of the images over classes shows a disproportionate number of Canada thistle images (approximately 29%), whereas there is a clear lack of trees and shrubbery. The amounts of data for all other classes seem relatively equal. The variability in size is also rather extensive: the maximal height is 4128 pixels, the maximal width is 4272 pixels, the minimal height is 84 pixels and the minimal width is 29 pixels. Figure 3 depicts the average height and width per image class. Because of this great variability in sizes, all the data needs to be resized to one size, regardless of the implementation. Note that some image classes generally have a larger width than height, so when this data is resized to the state-of-the-art standard (a square) these images will turn out a bit squeezed horizontally.

[[File:DataClassesDistribution2.jpg|1000px|thumb|center|'''Figure 2''': image count per class before data augmentation. ]]

[[File:ImageSizesPerClass.jpg|600px|thumb|center|'''Figure 3''': average image height and width per class. ]]
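One common way to compensate for such an imbalance during training is to weight each class inversely to its frequency. The sketch below is only an illustration of that idea and not what was done in this project, where the class weights were left at 1. The counts of 274 weed and 24 non-weed images are an approximation derived from the 298 images and the roughly 92% share of weeds mentioned above, and the function name <code>balanced_class_weights</code> is hypothetical.

```python
def balanced_class_weights(counts):
    """Weight each class by n_samples / (n_classes * class_count)."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * count) for label, count in counts.items()}


# Approximate counts derived from the text (298 images, about 92% weeds).
counts = {"weed": 274, "non-weed": 24}
weights = balanced_class_weights(counts)
for label, weight in weights.items():
    print(f"{label}: {weight:.2f}")
```

With these weights, a misclassified non-weed image contributes roughly eleven times as much to the loss as a misclassified weed image, which counteracts the tendency of the network to always predict the majority class.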

===== Data pre-processing =====

First of all, due to the lack of data, augmentation techniques were applied. The data augmentations were selected in such a way that the end result is realistic: for example, left-right flipping was applied because plants are roughly symmetric about the vertical axis, but up-down flipping was not applied because the vertical orientation of a plant is fixed by gravity. The augmentations are: left-right flipping, increased saturation, increased brightness, decreased brightness, blurring (to simulate out-of-focus plants) and center-cropping. This yields 6 augmentations in total, and thus the dataset size is increased by a factor of 7. Apart from data augmentation, another pre-processing technique was applied, namely resizing. The images were resized so that they became square, which is easier for the models to handle. Transfer learning networks actually require this type of input, with an upper bound on the size of 224 by 224 pixels. Since most of the data was larger than this size, the images were effectively downsized. Generally, larger images take longer to process and need better hardware, but can provide better results.

[[File:7014 allTransformsInOneWithNumbers.jpg|1200px|thumb|center|'''Figure 1''': All transformations, from left to right: (1) original, (2) increased brightness, (3) center-cropping, (4) decreased brightness, (5) left-right flipping, (6) blurring, (7) increased saturation (numbers are only added for reference, in the dataset the pictures do '''not''' contain numbers).]]
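The six augmentations can be sketched with NumPy as shown below. This is an illustration under stated assumptions rather than the code used for the project: the function name <code>augment</code>, the brightness factors (1.3 and 0.7), the saturation factor (1.5), the 3x3 box blur and the 80% center crop are all assumed values, since the text does not specify them.

```python
import numpy as np


def augment(image):
    """Return six augmented variants of an H x W x 3 uint8 RGB image."""
    img = image.astype(np.float32)
    height, width = img.shape[:2]

    def to_uint8(array):
        return np.clip(np.rint(array), 0, 255).astype(np.uint8)

    # Saturation: move every pixel away from its grey value.
    grey = img.mean(axis=2, keepdims=True)

    # Blur: average each pixel with its 8 neighbours (3x3 box filter).
    padded = np.pad(img, ((1, 1), (1, 1), (0, 0)), mode="edge")
    blurred = sum(
        padded[dy:dy + height, dx:dx + width] for dy in range(3) for dx in range(3)
    ) / 9.0

    # Center crop: keep the middle 80% in both directions.
    crop_h, crop_w = int(height * 0.8), int(width * 0.8)
    top, left = (height - crop_h) // 2, (width - crop_w) // 2

    return {
        "flip_left_right": image[:, ::-1],
        "more_saturation": to_uint8(grey + 1.5 * (img - grey)),
        "brighter": to_uint8(img * 1.3),
        "darker": to_uint8(img * 0.7),
        "blurred": to_uint8(blurred),
        "center_crop": image[top:top + crop_h, left:left + crop_w],
    }


# Toy example: a uniform grey 10 x 12 image.
example = np.full((10, 12, 3), 100, dtype=np.uint8)
variants = augment(example)
print(len(variants) + 1, "images per original")
```

The cropped variant is smaller than the original, so it would still have to go through the same resizing step as all other images before training.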

==== Transfer Learning Models ====

Python with TensorFlow (including Keras) 2.0 or higher is used to create the models. The defaults for these models are chosen according to current state-of-the-art standards. From there on different models were created, including one network from scratch (ScratchNet) and three pre-trained models: MobileNetV2, DenseNet201 and InceptionResNetV2. These pre-trained models have been trained on the ImageNet database. The characteristics of these models are shown in Table 1. As can be seen in the table, the networks vary in complexity. For the ImageNet data, it seems that the more complex the network is, the better it performs.

'''Table 1:''' Characteristics of pre-trained models on the ImageNet validation dataset.

<table border="1px solid black">
<tr>
<th> Model </th>
<th> Top-5 accuracy </th>
<th> Number of parameters </th>
<th> Topological depth </th>
</tr>
<tr>
<td> MobileNetV2 </td>
<td> 0.901 </td>
<td> 3,538,984 </td>
<td> 88 </td>
</tr>
<tr>
<td> DenseNet201 </td>
<td> 0.936 </td>
<td> 20,242,984 </td>
<td> 201 </td>
</tr>
<tr>
<td> InceptionResNetV2 </td>
<td> 0.953 </td>
<td> 55,873,736 </td>
<td> 572 </td>
</tr>
</table>

The defaults for the transfer learning networks are as follows. Optimizer: Adam; number of hidden layers: 1; pooling method: global average 2D pooling; loss function: categorical cross-entropy; data: augmented data; image size: 224 by 224 pixels; initialization weights: ImageNet; class weights: 1; batch size: 8 images; maximum number of epochs: 10; hidden layer’s activation function: rectified linear unit (ReLU); output layer’s activation function: softmax (which turns the outputs into a probability distribution over the classes). In addition, some hyperparameters were optimized with the Keras Hyperband tuner. This tuner evaluates possible combinations and eventually selects the most promising combination of hyperparameters. For the transfer learning networks the following hyperparameters were tuned: the number of neurons in the hidden layer (between 32 and 512 in steps of 32) and the learning rate (0.001, 0.0001, 0.00001 or 0.000001). The pre-trained networks were adopted with their top (the classifier) replaced, and training of the base (the convolutional layers) was disabled. The output layer has one node per class (either 11 or 2).
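A setup along these lines could look roughly as follows in Keras. This is a minimal sketch under assumptions, not the project's actual code: the function name <code>build_transfer_model</code> is hypothetical, and the values for <code>hidden_units</code> and <code>learning_rate</code> are arbitrary picks from the tuned ranges rather than the values the tuner selected.

```python
import tensorflow as tf


def build_transfer_model(num_classes=11, hidden_units=128,
                         learning_rate=1e-4, weights="imagenet"):
    """Frozen MobileNetV2 base with a small trainable classifier on top.

    hidden_units and learning_rate are illustrative values only.
    """
    # Pre-trained convolutional base without its original classifier;
    # pooling="avg" applies global average pooling to the last feature map.
    base = tf.keras.applications.MobileNetV2(
        input_shape=(224, 224, 3),
        include_top=False,
        weights=weights,
        pooling="avg",
    )
    base.trainable = False  # training of the base is disabled

    inputs = tf.keras.Input(shape=(224, 224, 3))
    features = base(inputs, training=False)
    hidden = tf.keras.layers.Dense(hidden_units, activation="relu")(features)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(hidden)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Only the two dense layers are trained in this sketch. Passing <code>weights=None</code> gives the randomly initialized variant instead of the ImageNet weights. Input scaling (for example with <code>tf.keras.applications.mobilenet_v2.preprocess_input</code>) is left out here and would have to be added before training.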

==== ScratchNet ====

ScratchNet is a relatively simple convolutional neural network. The optimizer and pooling were handled the same as with the transfer learning models: Adam and global average 2D pooling respectively. This model used a smaller input image resolution of 165x165 pixels and a batch size of 32. The resolution was lowered to prevent the machine that trained the network from running out of memory while tuning the hyperparameters, but in hindsight a higher resolution with a smaller batch size would probably have given better results and would also have resolved the memory issue.

The network is structured as follows. An input layer of 165x165x3 feeds into a convolutional layer with ReLU as activation function, tuned as follows: the number of filters between 8 and 32 in steps of 4 (in this case 28) and the kernel size between 2 and 6 in steps of 1 (in this case 5). Next comes a pooling layer with a pooling window of X*X, where X is tuned between 1 and 8 in steps of 1 (in this case 7). This is followed by a flattening layer, a dense layer with between 32 and 512 nodes in steps of 32 (in this case 224), and finally the output layer, which contains either 11 nodes (one for each class in our dataset) or 2 nodes (weeds and non-weeds).
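To get a feeling for the size of this network, the layer shapes and parameter counts can be worked out by hand. The sketch below does this under assumptions that the text does not state: a convolution without padding and with stride 1, and a pooling layer whose stride equals its window size. The function name <code>scratchnet_shapes</code> is only illustrative.

```python
def scratchnet_shapes(input_size=165, channels=3, filters=28, kernel=5,
                      pool=7, dense_units=224, num_classes=11):
    """Layer output shapes and parameter counts for the tuned ScratchNet.

    Assumes no padding and stride 1 in the convolution, and
    non-overlapping pooling windows (assumptions, see lead-in).
    """
    conv_size = input_size - kernel + 1   # 165 - 5 + 1
    pool_size = conv_size // pool         # non-overlapping windows
    flat = pool_size * pool_size * filters

    params = {
        # one kernel per filter plus one bias per filter
        "conv": kernel * kernel * channels * filters + filters,
        # fully connected: weights plus biases
        "dense": flat * dense_units + dense_units,
        "output": dense_units * num_classes + num_classes,
    }
    return {
        "conv_out": (conv_size, conv_size, filters),
        "pool_out": (pool_size, pool_size, filters),
        "flat": flat,
        "params": params,
        "total_params": sum(params.values()),
    }


if __name__ == "__main__":
    for key, value in scratchnet_shapes().items():
        print(key, value)
```

Under these assumptions the convolution produces a 161x161x28 volume, the pooling layer reduces it to 23x23x28, and almost all of the roughly 3.3 million parameters sit in the dense layer that follows the flattening step.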

==== Models With 11 Prediction Classes ====

In the 11 class case the classes are: lady's thumb, shrubbery, conference trees, burlat trees, purple deadnettle, shepherd's purse, saltbush, broad leaf dock, Canada thistle, lambsquarters and chickweed. The different architectures have been applied to this problem with varying characteristics to investigate the following questions:

<ol>
<li> Which network with 11 prediction classes performs the best? </li>
<li> Does a model with an extra hidden layer perform better? </li>
<li> Is knowledge “transferred” with transfer learning? </li>
<li> How does assigning class weights impact performance? </li>
<li> What is the effect of augmenting data? </li>
</ol>

The results of training different networks for the 11 class case can be found in Table 2. To answer the questions properly, some terms need to be explained. The false positive ratio is the fraction of non-weeds that are classified as weeds (thus between 0 and 1; lower is better). The best performing network is the one with the lowest false positive ratio among those that reach an accuracy of 80% or higher with a classification time within 500 milliseconds. Class weights are used to weight the loss function; loosely speaking, they define the importance of each class during training. Proportional class weights compensate for how much data is available for each class: the weight of a class is computed by dividing the total number of images by the product of the number of classes and the number of images in that class, so classes with fewer images receive a larger weight.
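Both definitions are easy to express in code. The following sketch uses only the Python standard library; the function names and the small label lists in the usage example are made up for illustration and are not taken from the project's dataset.

```python
from collections import Counter


def proportional_class_weights(labels):
    """Weight per class: total / (number of classes * images in class)."""
    counts = Counter(labels)
    total = len(labels)
    num_classes = len(counts)
    return {cls: total / (num_classes * n) for cls, n in counts.items()}


def false_positive_ratio(true_is_weed, predicted_is_weed):
    """Fraction of non-weed samples that were predicted to be weeds."""
    predictions_for_non_weeds = [
        predicted
        for actual, predicted in zip(true_is_weed, predicted_is_weed)
        if not actual
    ]
    if not predictions_for_non_weeds:
        raise ValueError("at least one non-weed sample is required")
    return sum(predictions_for_non_weeds) / len(predictions_for_non_weeds)


if __name__ == "__main__":
    # Hypothetical, imbalanced toy dataset of 10 labels.
    labels = ["thistle"] * 6 + ["dock"] * 3 + ["pear tree"]
    print(proportional_class_weights(labels))

    # Four non-weeds (False), one of which is wrongly flagged as a weed.
    actual = [False, False, False, False, True, True]
    predicted = [True, False, False, False, True, False]
    print(false_positive_ratio(actual, predicted))
```

In the toy example the rarest class receives the largest weight, and one of the four non-weeds is flagged as a weed, giving a false positive ratio of 0.25. In Keras such a weight dictionary (keyed by class index) can be passed to <code>Model.fit</code> through its <code>class_weight</code> argument.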

'''Table 2:''' Accuracy, false positive ratio and classification time for different models predicting 11 classes.

<table border="1px solid black">
<tr>
<th> Row/Model number </th>
<th> Model </th>
<th> Accuracy </th>
<th> False Positive Ratio </th>
<th> Classification time (ms) </th>
<th> Comment </th>
</tr>
<tr>
<th> 1 </th>
<td> MobileNetV2, defaults </td>
<td> 0.5208 </td>
<td> 1.0 </td>
<td> 12 </td>
<td> </td>
</tr>
<tr>
<th> 2 </th>
<td> MobileNetV2, with 2 hidden layers </td>
<td> 0.4499 </td>
<td> 1.0 </td>
<td> 12 </td>
<td> </td>
</tr>
<tr>
<th> 3 </th>
<td> MobileNetV2, random weights initialization </td>
<td> 0.2917 </td>
<td> 1.0 </td>
<td> 12 </td>
<td> All predictions are Canada thistle, ~29% of total data </td>
</tr>
<tr>
<th> 4 </th>
<td> MobileNetV2, with proportional class weights </td>
<td> 0.5333 </td>
<td> 1.0 </td>
<td> 12 </td>
<td> </td>
</tr>
<tr>
<th> 5 </th>
<td> MobileNetV2, on raw data </td>
<td> 0.3392 </td>
<td> 1.0 </td>
<td> 11 </td>
<td> </td>
</tr>
<tr>
<th> 6 </th>
<td> MobileNetV2, with global max 2D pooling </td>
<td> 0.4368 </td>
<td> 0.9188 </td>
<td> 12 </td>
<td> </td>
</tr>
<tr>
<th> 7 </th>
<td> MobileNetV2, on raw data with proportional class weights and global max 2D pooling </td>
<td> 0.2857 </td>
<td> 1.0 </td>
<td> 11 </td>
<td> </td>
</tr>
<tr>
<th> 8 </th>
<td> MobileNetV2, with proportional class weights and global max 2D pooling </td>
<td> 0.2745 </td>
<td> 1.0 </td>
<td> 11 </td>
<td> </td>
</tr>
<tr>
<th> 9 </th>
<td> DenseNet201, defaults </td>
<td> 0.5613 </td>
<td> 1.0 </td>
<td> 30 </td>
<td> </td>
</tr>
<tr>
<th> 10 </th>
<td> DenseNet201, with AdaDelta </td>
<td> 0.5348 </td>
<td> 1.0 </td>
<td> 29 </td>
<td> </td>
</tr>
<tr>
<th> 11 </th>
<td> InceptionResNetV2, defaults </td>
<td> 0.3559 </td>
<td> 1.0 </td>
<td> 35 </td>
<td> Predictions mostly on 2 classes </td>
</tr>
<tr>
<th> 12 </th>
<td> InceptionResNetV2, with AdaDelta </td>
<td> 0.2966 </td>
<td> 1.0 </td>
<td> 35 </td>
<td> Predictions mostly on Canada thistle, ~29% of total data </td>
</tr>
<tr>
<th> 13 </th>
<td> ScratchNet, default </td>
<td> 0.9651 </td>
<td> 0.0631 </td>
<td> 7 </td>
<td> Visible bias towards Canada thistle, ~29% of total data </td>
</tr>
<tr>
<th> 14 </th>
<td> ScratchNet, with proportional class weights </td>
<td> 0.9676 </td>
<td> 0.0325 </td>
<td> 7 </td>
<td> </td>
</tr>
<tr>
<th> 15 </th>
<td> ScratchNet, on raw data </td>
<td> 0.6962 </td>
<td> 0.3836 </td>
<td> 7 </td>
<td> Big bias towards Canada thistle, ~29% of total data </td>
</tr>
<tr>
<th> 16 </th>
<td> ScratchNet, on raw data with proportional class weights </td>
<td> 0.5139 </td>
<td> 0.7153 </td>
<td> 7 </td>
<td> </td>
</tr>
</table>

Question 1: ScratchNet with proportional class weights (row 14) has the lowest false positive ratio, the highest accuracy and, together with the other ScratchNet variants, the lowest classification time. It is therefore chosen as the best model with 11 prediction classes. The visible bias towards one class suggests that performance might increase further with a more balanced dataset. Note that all transfer learning networks have a false positive ratio so high that they would harm crops more than they would remove weeds, which makes these networks useless given this data and training method.
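The selection rule (lowest false positive ratio among the models with at least 80% accuracy and at most 500 ms classification time) can be applied mechanically to Table 2. The sketch below copies the numbers from the table; the names <code>Result</code>, <code>TABLE_2</code> and <code>select_best</code> are only illustrative.

```python
from typing import NamedTuple, Optional


class Result(NamedTuple):
    row: int
    model: str
    accuracy: float
    false_positive_ratio: float
    time_ms: int


# Values copied from Table 2.
TABLE_2 = [
    Result(1, "MobileNetV2, defaults", 0.5208, 1.0, 12),
    Result(2, "MobileNetV2, 2 hidden layers", 0.4499, 1.0, 12),
    Result(3, "MobileNetV2, random weights", 0.2917, 1.0, 12),
    Result(4, "MobileNetV2, class weights", 0.5333, 1.0, 12),
    Result(5, "MobileNetV2, raw data", 0.3392, 1.0, 11),
    Result(6, "MobileNetV2, max pooling", 0.4368, 0.9188, 12),
    Result(7, "MobileNetV2, raw, weights, max pooling", 0.2857, 1.0, 11),
    Result(8, "MobileNetV2, weights, max pooling", 0.2745, 1.0, 11),
    Result(9, "DenseNet201, defaults", 0.5613, 1.0, 30),
    Result(10, "DenseNet201, AdaDelta", 0.5348, 1.0, 29),
    Result(11, "InceptionResNetV2, defaults", 0.3559, 1.0, 35),
    Result(12, "InceptionResNetV2, AdaDelta", 0.2966, 1.0, 35),
    Result(13, "ScratchNet, default", 0.9651, 0.0631, 7),
    Result(14, "ScratchNet, class weights", 0.9676, 0.0325, 7),
    Result(15, "ScratchNet, raw data", 0.6962, 0.3836, 7),
    Result(16, "ScratchNet, raw data, class weights", 0.5139, 0.7153, 7),
]


def select_best(results, min_accuracy=0.80,
                max_time_ms=500) -> Optional[Result]:
    """Lowest false positive ratio among models that meet both limits."""
    eligible = [
        r for r in results
        if r.accuracy >= min_accuracy and r.time_ms <= max_time_ms
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda r: r.false_positive_ratio)


if __name__ == "__main__":
    print(select_best(TABLE_2))
```

Only rows 13 and 14 pass the accuracy threshold, and row 14 has the lower false positive ratio of the two, which matches the choice made above.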

Question 2: compare the first and the second row of Table 2. The network with an additional hidden layer (2 in total) does not perform better; it even has a lower accuracy. So no, more hidden layers do not necessarily imply better performance.

Question 3: compare the first and third row of Table 2. The model with random weights, which is thus not pre-trained, has the same false positive ratio but a far lower accuracy, since it always predicts the same class. So yes, some knowledge appears to be transferred: the pre-trained weights provide a better starting point than random weights.

Question 4: compare the following pairs of rows: (1) row 1 and row 4; (2) row 6 and row 8; (3) row 13 and row 14; (4) row 15 and row 16. At first sight, the models with class weights outperform the models without them in only 2 out of the 4 pairs. However, the models with no changes from the defaults other than the class weights (pairs 1 and 3) consistently show better results. In pair 3 (rows 13 and 14), adding the class weights almost halves the false positive ratio while maintaining the accuracy. To conclude, class weights can improve performance significantly.

Question 5: compare the following pairs of rows: (1) row 1 and row 5; (2) row 7 and row 8; (3) row 13 and row 15; (4) row 14 and row 16. Apart from pair 2, performance is far better with the augmented data than with the raw data. In pair 2 there is no significant difference. To conclude, augmenting the data seems to help improve performance (given this dataset).

Apart from these questions, some general remarks can be made about the results. Essentially 4 different architectures have been compared: MobileNetV2, DenseNet201, InceptionResNetV2 and ScratchNet. All models perform their classification task relatively quickly. Comparing Table 1 and Table 2, there seems to be a positive correlation between network complexity (number of parameters and topological depth) and classification time; in other words, more complex networks take longer. This holds not only for classification times, but also for training times. MobileNetV2 is almost three times as fast as the other transfer learning networks, while the larger networks offer at most a small improvement in accuracy over it (0.5613 for DenseNet201 versus 0.5208 for MobileNetV2 with defaults; InceptionResNetV2 scores lower at 0.3559). Because of these differences in computation time, most of the questions have been tested with the MobileNetV2 model. Moreover, the computation times depend mainly on whether the GPU or the CPU is used (in this case the GPU) and on the specific hardware (Intel i7-7700HQ, NVIDIA Quadro M1200). Lastly, most comments relate to the imbalanced dataset, suggesting that a more balanced dataset could yield better results.

==== Models With 2 Prediction Classes ====

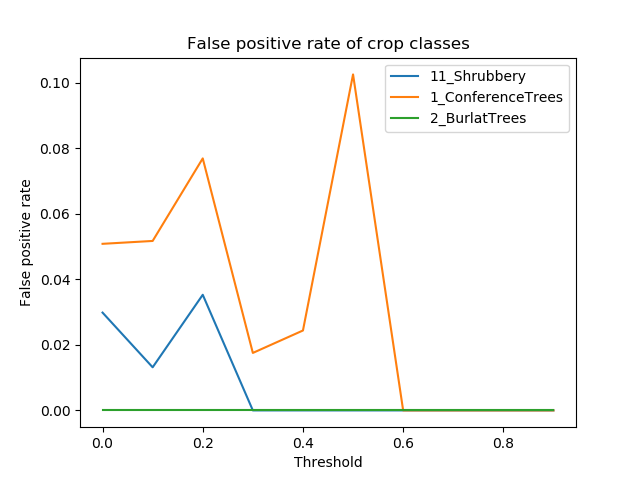

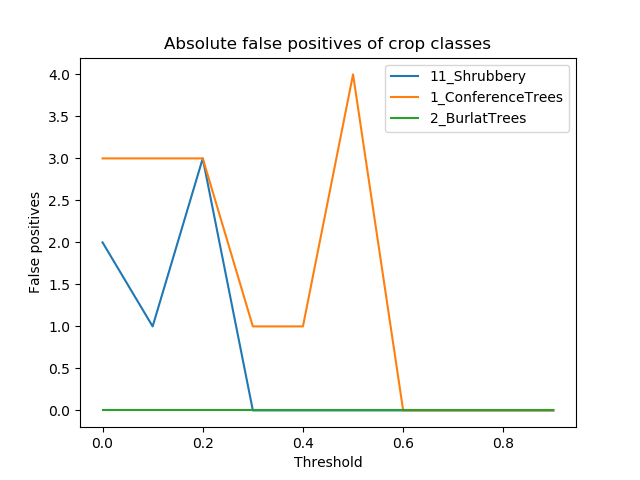

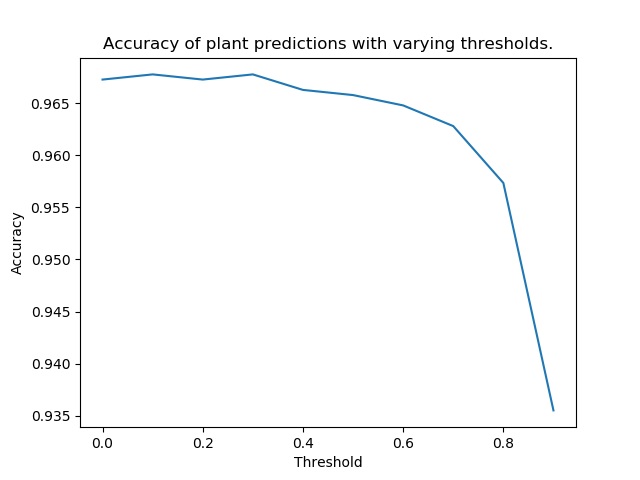

Here all data is divided into only two classes: weeds and non-weeds. We wanted to see whether classifying into only two classes gives lower false positive ratios while keeping the accuracy as high as possible. A similar approach is used as above.