Embedded Motion Control 2014 Group 2: Difference between revisions

Revision as of 16:09, 11 June 2014

Members of Group 2:

Michiel Francke

Sebastiaan van den Eijnden

Rick Graafmans

Bas van Aert

Lars Huijben

Planning:

Week1

- Lecture

Week2

- Understanding C++ and Ros

- Practicing with pico and the simulator

- Lecture

Week3

- Start with the corridor competition

- Start thinking about the hierarchy of the system (nodes, inputs/outputs etc.)

- Dividing the work

- Driving straight through a corridor

- Making a turn through a predefined exit

- Making a turn through a random exit (geometry unknown)

- Meeting with the tutor (Friday 9 May 10:45 am)

- Lecture

Week4

- Finishing corridor competition

- Start working on the maze challenge

- Think about hierarchy of the system

- Make a flowchart

- Divide work

- Corridor challenge

Week5

- Continue working on the maze challenge

Week6

Week7

Week8

Week9

- Final competition

Corridor Challenge

Ros-nodes and function structure

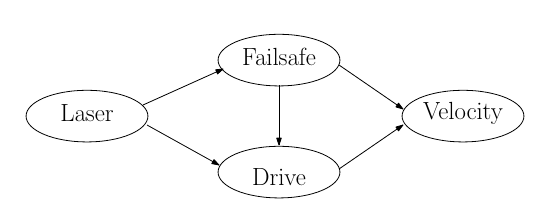

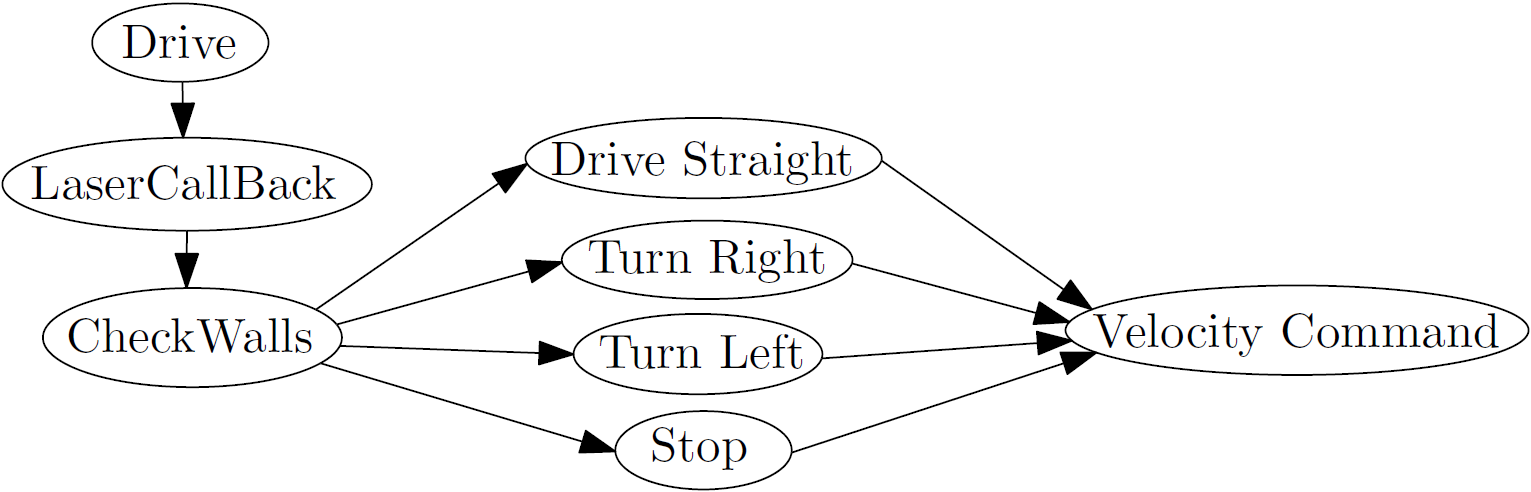

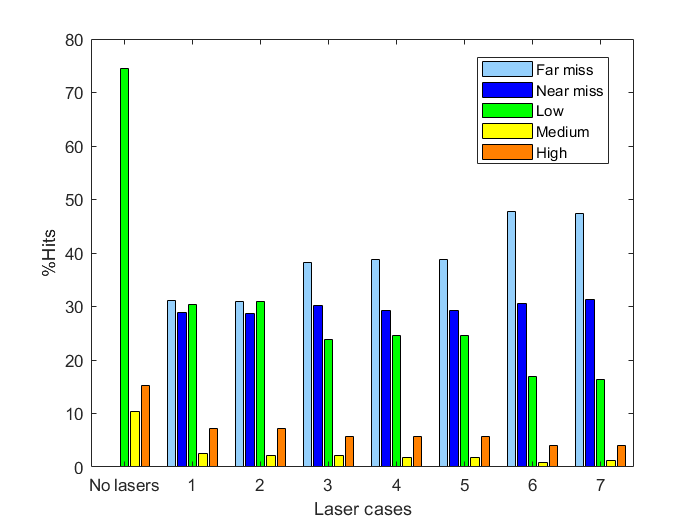

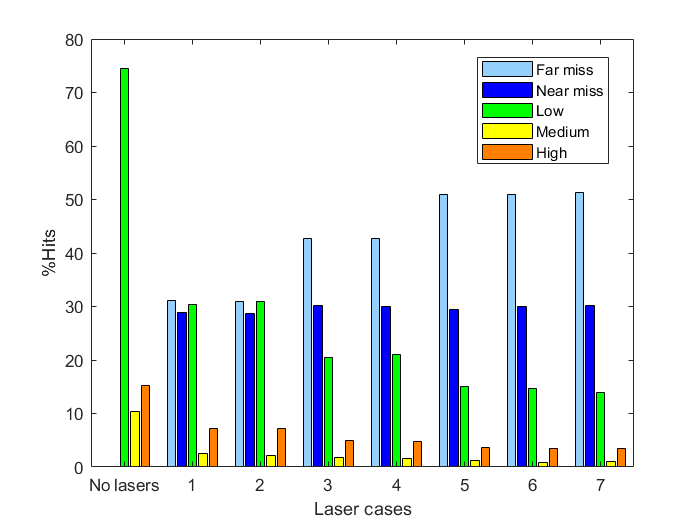

The system for the corridor challenge consists of two nodes. The first is a large node that does everything needed for the challenge: driving straight, looking for corners, turning, and so on. The second is a fail-safe node that checks for (near) collisions with walls and, if one is detected, overrides the first node to prevent the collision. The figure next to the ROS-node overview displays the internal functions of the drive node.

Each time the LaserCallBack function is called, it calls the CheckWalls function. This function looks at the laser ranges at -pi/2 and pi/2. If both ranges are within a preset threshold, it sets the switch that follows the CheckWalls function so that the Drive_Straight function is executed. If either the left or the right range exceeds the threshold, the switch executes the Turn_Left or Turn_Right function respectively. If both ranges exceed the threshold, pico must be at the exit of the corridor and the stop function is called. Each of the functions in the switch sets the x, y and z velocities to appropriate levels in a velocity command, which is then transmitted to the velocity ROS node.
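The selection logic of CheckWalls can be sketched in a few lines of C++. This is a minimal sketch, not the code that ran on pico: the `Mode` enum, the function signature and the choice that +pi/2 is the left side (the usual ROS convention) are assumptions made for illustration.

```cpp
#include <cassert>

// Driving modes selected by the wall check (names are illustrative).
enum class Mode { DriveStraight, TurnLeft, TurnRight, Stop };

// left_range / right_range: laser ranges at +pi/2 and -pi/2 [m] (assumed sides).
// threshold: range above which a side is considered open [m].
Mode checkWalls(double left_range, double right_range, double threshold) {
    assert(threshold > 0.0);
    const bool left_open = left_range > threshold;
    const bool right_open = right_range > threshold;
    if (left_open && right_open) return Mode::Stop;  // both sides open: corridor exit
    if (left_open) return Mode::TurnLeft;            // exit on the left
    if (right_open) return Mode::TurnRight;          // exit on the right
    return Mode::DriveStraight;                      // walls on both sides
}
```

The returned mode would then drive the switch that calls Drive_Straight, Turn_Left, Turn_Right or the stop function.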

Corridor challenge procedure

The figures below show how the different functions in the switch operate, as well as the general procedure that pico follows when it solves the corridor competition.

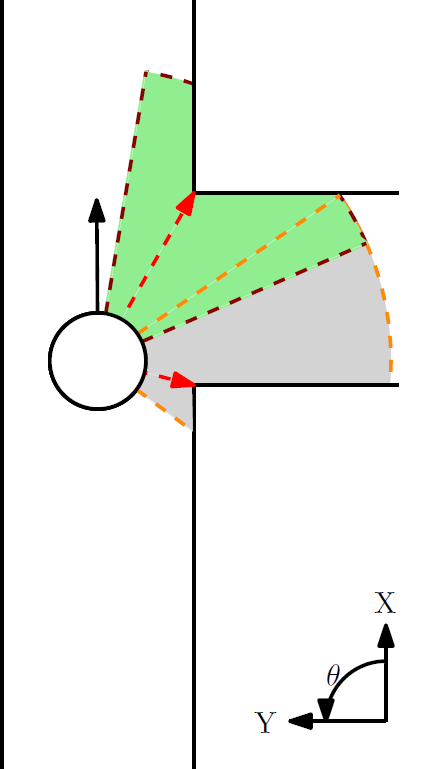

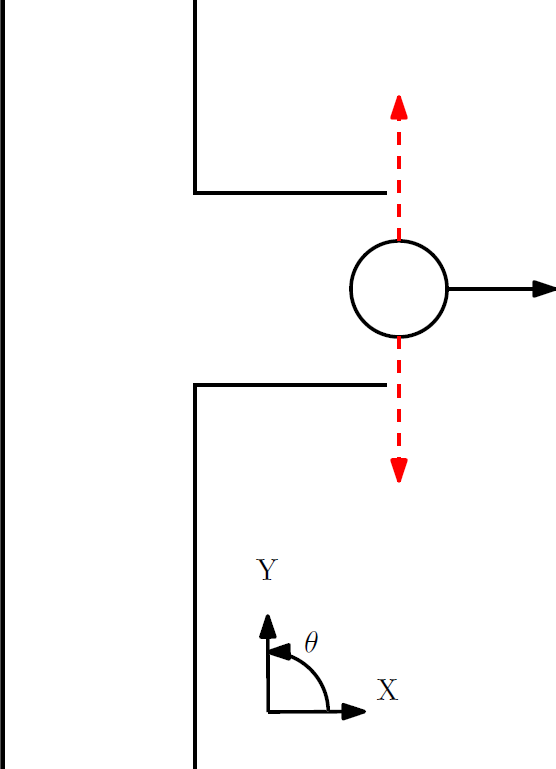

Action 1: In the first picture we see pico in the drive straight mode. The lasers at -pi/2 and pi/2 are monitored (indicated in black) and compared with the laser points that are closest to pico on the left and right side (indicated with red arrows). The y-error is the difference in length between the two red arrows divided by 2. The rotation error is the angle between the black and red arrows. To stay on track, pico multiplies the y and theta errors by a negative gain and sends the resulting values as the y and theta velocity commands respectively. This way pico stays centered and aligned with the walls. The x-velocity is a fixed value.
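The proportional correction of Action 1 can be written out as follows. This is a sketch under stated assumptions: the `Velocity` struct, the function name, the gain parameters and the sign conventions (y positive to the left, counterclockwise rotation positive, as is usual in ROS) are not taken from the original code.

```cpp
#include <cassert>
#include <cmath>

struct Velocity { double x, y, theta; };

// d_left, d_right: distances of the closest laser points on each side [m].
// angle_left: beam angle of the closest left point [rad]; +pi/2 when aligned.
// k_y, k_theta: positive gains, applied with a minus sign (the "negative gain").
Velocity driveStraight(double d_left, double d_right, double angle_left,
                       double k_y, double k_theta, double v_x) {
    assert(k_y > 0.0 && k_theta > 0.0);
    const double half_pi = std::acos(0.0);
    const double e_y = (d_right - d_left) / 2.0;  // > 0 when pico is too far left
    const double e_theta = half_pi - angle_left;  // > 0 when pico is rotated counterclockwise
    return Velocity{v_x, -k_y * e_y, -k_theta * e_theta};
}
```

With pico centered and aligned both errors vanish and only the fixed x-velocity remains.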

Action 2: As soon as pico encounters an exit, one of its lasers located on the sides will exceed the threshold causing the switch to go to one of the turn cases.

Action 3: Once in the turn case, pico must first determine the distance to the first corner. This is done by looking at all the points in a set field of view (the grey field) and determining which laser point is closest to pico. The corresponding distance is stored as the turning radius, and the integer index of that laser point is stored as well. For the second corner we do something similar but look in a different area of the laser scan (the green field); again the distance and the index of the closest laser point are stored.

After this initial iteration, each following iteration will relocate the two corner points by looking in a field around the two stored laser points that used to be the closest to the two corners. The integers of the laser points that are now closest to pico and thus indicate the corners, are stored again such that the corner points can be determined again in the following iteration.
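The corner search and the subsequent tracking can be sketched as a minimum search over a window of laser indices. The function names and the `half_window` parameter are hypothetical, and the sketch assumes every range in the scan is a valid reading; a real scan may need invalid or very short readings filtered out first.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Index of the smallest range in ranges[first..last] (inclusive, last is clamped).
std::size_t closestIndex(const std::vector<double>& ranges,
                         std::size_t first, std::size_t last) {
    assert(!ranges.empty() && first <= last && first < ranges.size());
    if (last >= ranges.size()) last = ranges.size() - 1;
    std::size_t best = first;
    for (std::size_t i = first + 1; i <= last; ++i)
        if (ranges[i] < ranges[best]) best = i;
    return best;
}

// Re-locate a corner by searching only +/- half_window beams around the
// index that was closest in the previous iteration.
std::size_t trackCorner(const std::vector<double>& ranges,
                        std::size_t previous, std::size_t half_window) {
    assert(previous < ranges.size());
    const std::size_t first = previous > half_window ? previous - half_window : 0;
    return closestIndex(ranges, first, previous + half_window);
}
```

The first iteration would call `closestIndex` on the grey or green field; later iterations would call `trackCorner` with the stored index and store the result again.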

The distance to the closest point is now compared with the initially stored turning radius. The difference is the y-error, which is again multiplied by a negative gain to form the y-speed command. The z-error is determined from the angle that the closest laser point has with respect to pico; this angle should be perpendicular to the x-axis of pico. Again the error is multiplied by a negative gain and returned as the rotational velocity command. This way the x-axis of pico is always perpendicular to the turning radius, and the y correction ensures that the radius stays the same (unless the opposing wall gets too close). The speed in x-direction is again set to a fixed value, but pico only moves in x-direction if the y and z errors are within certain bounds. Furthermore, the y-speed is not applied if the z error is too large. In this order, we first make sure that the angle of pico is correct, then we compensate for any radius deviations, and finally pico is allowed to move forward along the turning radius.
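The ordering described above (angle first, then radius, then forward motion) amounts to gating the velocity components on the error bounds. The following is a sketch only: the `TurnParams` struct, its field names and the use of a single angle bound for both gates are assumptions, and the sign of each error is left to the caller.

```cpp
#include <cassert>
#include <cmath>

struct Velocity { double x, y, theta; };

struct TurnParams {
    double k_y, k_theta;          // positive gains, applied with a minus sign
    double v_x;                   // fixed forward speed [m/s]
    double y_bound, theta_bound;  // error bounds used for gating
};

// e_y: radius error [m]; e_theta: angle error of the closest corner point [rad].
Velocity turnCommand(double e_y, double e_theta, const TurnParams& p) {
    assert(p.y_bound > 0.0 && p.theta_bound > 0.0);
    Velocity cmd{0.0, 0.0, -p.k_theta * e_theta};  // rotation is always corrected
    if (std::fabs(e_theta) <= p.theta_bound) {
        cmd.y = -p.k_y * e_y;                      // radius correction once the angle is fine
        if (std::fabs(e_y) <= p.y_bound) cmd.x = p.v_x;  // forward only when both errors are small
    }
    return cmd;
}
```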

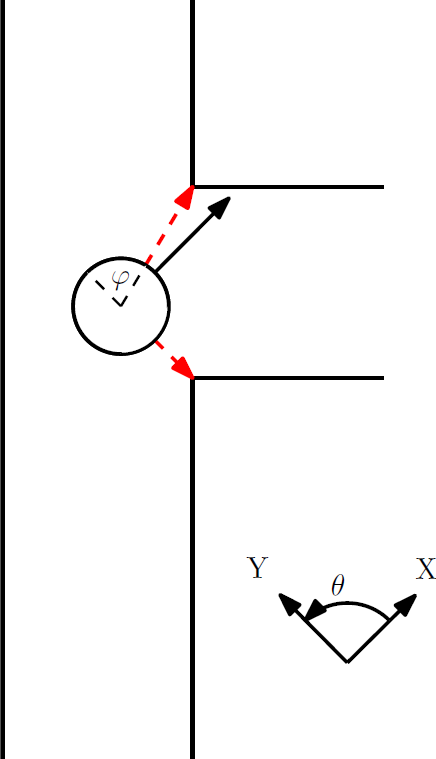

Action 4: While pico is in the turning case, the CheckWalls function is disabled and the routine always skips to the turn command, so an exit condition within the turning function is required. This is where the tracking of the second corner comes in. The angle [math]\displaystyle{ \varphi }[/math] between the second corner and one of the sides of pico is constantly updated. If the angle becomes zero, pico is considered to be done turning and should be located in the exit lane. The turning case is then aborted and pico should automatically go back into the drive straight function because the CheckWalls function is reactivated.
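The latching behaviour of Action 4 can be illustrated with a small state holder. The `TurnLatch` type is hypothetical, and the sketch assumes that the angle is signed, starts positive and decreases during the turn, so the exit test is `phi <= 0` rather than an exact comparison with zero (a sampled angle will rarely be exactly zero).

```cpp
#include <cassert>
#include <cmath>

enum class Mode { DriveStraight, Turning };

struct TurnLatch {
    Mode mode = Mode::DriveStraight;

    // side_open: the wall check detected an exit on one side.
    // phi: signed angle between the second corner and the side of pico [rad].
    Mode update(bool side_open, double phi) {
        assert(std::isfinite(phi));
        if (mode == Mode::Turning) {
            // The wall check is ignored while turning; only phi can end the turn.
            if (phi <= 0.0) mode = Mode::DriveStraight;
        } else if (side_open) {
            mode = Mode::Turning;
        }
        return mode;
    }
};
```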

Action 5: Both the left and right laser exceed the threshold and thus pico must be outside of the corridor's exit. The checkwalls function will call the stop command and the corridor routine is finished.

Note: If the opposing wall would be closed (an L-shaped corner instead of the depicted T-shape) the turning algorithm will still work correctly.

Corridor competition evaluation

We achieved the second fastest time of 18.8 seconds in the corridor competition. Our scripts functioned exactly the way they should have. However, our entire group was unaware of the speed limit of 0.2 m/s (and yes, we all attended the lectures). Therefore, we unintentionally went faster than allowed (0.3 m/s). Nevertheless, we are very proud of our result, and hopefully the instructions for the maze competition will be clearer and more complete.

Maze Competition

Flowchart

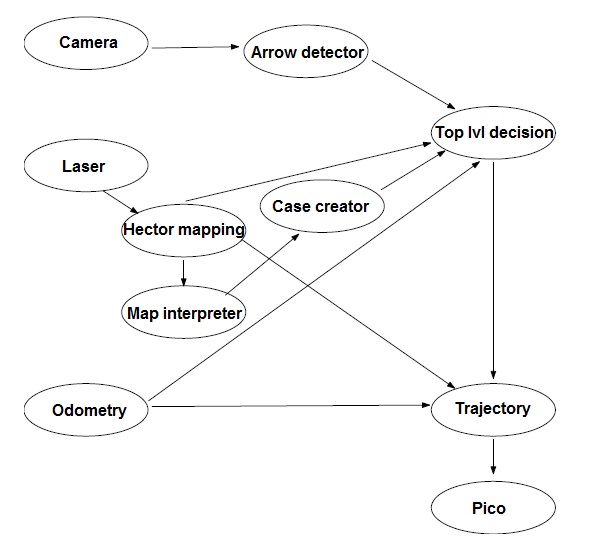

Our strategy for solving the maze is shown schematically in the flowchart figure.

Six nodes (arrow detector, Hector mapping, map interpreter, case creator, top level decision and trajectory) have been developed and communicate with each other through several topics. The nodes are subscribed to topics to receive sensory data coming from the laser, camera and odometry modules. All nodes are launched from one launch file. The function of the individual nodes is discussed below.

Mapping

Determine cases

Decision

The top level decision node decides in which direction a trajectory should be planned. It finds a new set point, which becomes the target point for the trajectory node. The top level decision node is subscribed to three topics. It is connected to the odometry module and to the Hector mapping node to receive the information needed to determine the current position of pico. Furthermore, it is subscribed to a topic that is connected to the arrow detector node. The arrow detector node, as described previously, sends an integer message, 1 for a left arrow and 2 for a right arrow, to help pico find the correct direction. Two major situations can be distinguished in this node and are depicted below:

1. A turn can be initiated before the crossing [type 1 or type 2]

From the case creator node follows that a simple corner (of type 1 or 2) is nearby. A starting point

2. Go to crossing for overview of situation [type 3, type 4, type 5 or type 6]

Trajectory

Arrow detection

Although the arrow detection is not crucial for finding the exit of the maze, it can potentially save a lot of time by avoiding dead ends. That is why we considered the arrow detection an important part of the problem, instead of just something extra. The cpp file provided in the demo_opencv package is used as a basis to tackle the problem. The idea is to receive ROS RGB images, convert them to OpenCV images (cv::Mat), convert them to HSV images, find 'red' pixels using predefined thresholds, apply some filters and detect the arrow. The conversions are applied using the file provided in demo_opencv, but finding 'red' pixels is done differently.

Thresholded image

OpenCV has a function called inRange that finds pixels within specified thresholds for h, s and v. To determine the thresholds, one should know something about HSV images. HSV stands for hue, saturation and value. In OpenCV the hue of an 8-bit image ranges from 0 to 179. However, the color red wraps around these values, see figure a.1. In other words, the color red can be defined as hue 0 to 10 and 169 to 179. A single call to inRange cannot deal with this wrapping property and is therefore not suitable for detecting 'red' pixels. To tackle this problem, we made our own inRange function, with the appropriate name 'inRange2'. This function accepts negative minimum thresholds for hue. For example:

    min_vals=(-10, 200, 200);
    max_vals=(10, 255, 255);
    inRange2(img_hsv, min_vals, max_vals, img_binary);

This call makes a pixel in img_binary white if the corresponding pixel in img_hsv lies within the range [H(169:179 and 0:10) S(200:255) V(200:255)], and black otherwise. The result is a binary image (thresholded image), see figure a.3.
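A wrapping threshold of this kind can be sketched without any OpenCV dependency. The code below is not the group's inRange2: the `Hsv` struct and the function names are hypothetical, and the offset of 179 for negative minima is chosen only so that a minimum of -10 reproduces the 169:179 band quoted above (a strictly modular wrap over 180 hue values would use 180 instead).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Hsv { int h, s, v; };  // h in [0, 179], s and v in [0, 255]

// True if h lies in [h_min, h_max]; a negative h_min wraps around hue 0.
bool hueInRange(int h, int h_min, int h_max) {
    assert(h >= 0 && h < 180 && h_min <= h_max && h_min > -180 && h_max < 180);
    if (h_min >= 0) return h >= h_min && h <= h_max;
    return h <= h_max || h >= h_min + 179;  // assumed offset, see the text above
}

// Returns a binary mask: 255 where the pixel is inside [lo, hi], else 0.
std::vector<std::uint8_t> inRangeWrapped(const std::vector<Hsv>& img, Hsv lo, Hsv hi) {
    std::vector<std::uint8_t> binary(img.size(), 0);
    for (std::size_t i = 0; i < img.size(); ++i) {
        const Hsv& px = img[i];
        const bool hit = hueInRange(px.h, lo.h, hi.h) &&
                         px.s >= lo.s && px.s <= hi.s &&
                         px.v >= lo.v && px.v <= hi.v;
        binary[i] = hit ? 255 : 0;
    }
    return binary;
}
```

When working directly on cv::Mat images, a common alternative is to call cv::inRange once per hue band and combine the two masks with cv::bitwise_or.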

Filter and detection

The thresholded image likely contains other 'red' clusters besides the arrow. A very basic filter is applied to remove this noise. The filter computes the average x and y of all the white pixels in the thresholded image. Subsequently, it deletes all the pixels outside a rectangle centered on this average. The width and height of this rectangle have a constant ratio (the x/y ratio of the arrow, approximately 2.1), and its size is a function of the number of white pixels in the thresholded image. This way the rectangle scales with the apparent size of the arrow, and therefore with its distance. This approach is very sensitive to noise, so an additional safety check is applied: the detection only starts if the number of pixels in the filtered image lies between 2000 and 5000. These numbers are tuned for an arrow approximately 0.3 to 1.0 meter away from pico.
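The centroid filter and the pixel-count check can be sketched on a plain row-major binary image. The text does not state how the rectangle size depends on the pixel count, so this sketch assumes the rectangle area is `area_factor` times the number of white pixels; `area_factor`, the `Binary` struct and the function names are all hypothetical, and the 2000 to 5000 limits are treated as inclusive.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Binary {
    int width, height;
    std::vector<std::uint8_t> data;  // row-major, 0 (black) or 255 (white)
};

// Blanks every white pixel outside a rectangle centred on the centroid of all
// white pixels. Rectangle: width / height = aspect, area = area_factor * count.
// Returns the number of white pixels that remain.
int cropAroundCentroid(Binary& img, double aspect, double area_factor) {
    assert(img.data.size() == static_cast<std::size_t>(img.width) * img.height);
    assert(aspect > 0.0 && area_factor > 0.0);
    long sum_x = 0, sum_y = 0;
    int count = 0;
    for (int y = 0; y < img.height; ++y)
        for (int x = 0; x < img.width; ++x)
            if (img.data[y * img.width + x]) { sum_x += x; sum_y += y; ++count; }
    if (count == 0) return 0;
    const double cx = static_cast<double>(sum_x) / count;
    const double cy = static_cast<double>(sum_y) / count;
    const double half_h = 0.5 * std::sqrt(area_factor * count / aspect);
    const double half_w = aspect * half_h;
    int kept = 0;
    for (int y = 0; y < img.height; ++y)
        for (int x = 0; x < img.width; ++x) {
            std::uint8_t& px = img.data[y * img.width + x];
            if (!px) continue;
            if (std::fabs(x - cx) > half_w || std::fabs(y - cy) > half_h) px = 0;
            else ++kept;
        }
    return kept;
}

// Safety check from the text: only run the detection for a plausible pixel count.
bool shouldDetect(int white_pixels) {
    return white_pixels >= 2000 && white_pixels <= 5000;
}
```

A caller would pass 2.1 as `aspect` and feed the returned count into `shouldDetect` before attempting to detect the arrow.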

Time Survey Table 4K450

| Members | Lectures [hr] | Group meetings [hr] | Mastering ROS and C++ [hr] | Preparing midterm assignment [hr] | Preparing final assignment [hr] | Wiki progress report [hr] | Other activities [hr] |
|---|---|---|---|---|---|---|---|
| Week 1 | | | | | | | |
| Lars | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| Sebastiaan | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| Michiel | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| Bas | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| Rick | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| Week 2 | | | | | | | |
| Lars | 2 | 0 | 16 | 0 | 0 | 0 | 0 |
| Sebastiaan | 2 | 0 | 10 | 0 | 0 | 0 | 0 |
| Michiel | 2 | 0 | 8 | 0 | 0 | 0 | 0 |
| Bas | 2 | 0 | 12 | 0 | 0 | 0.5 | 0 |
| Rick | | | | | | | |
| Week 3 | | | | | | | |
| Lars | 2 | 0.25 | 4 | 13 | 0 | 0 | 0 |
| Sebastiaan | 2 | 0.25 | 6 | 10 | 0 | 0 | 0 |
| Michiel | 2 | 0.25 | 6 | 2 | 0 | 0 | 0 |
| Bas | 2 | 0.25 | 4 | 10 | 0 | 0 | 0 |
| Rick | | | | | | | |
| Week 4 | | | | | | | |
| Lars | 0 | 0 | 0 | 12 | 0 | 4 | 0 |
| Sebastiaan | 0 | 0 | 1 | 10 | 0 | 0 | 2 |
| Michiel | 0 | 0 | 0 | 2 | 10 | 2 | 0 |
| Bas | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| Rick | | | | | | | |
| Week 5 | | | | | | | |
| Lars | 2 | 0 | 0 | 0 | 22 | 0 | 0 |
| Sebastiaan | | | | | | | |
| Michiel | 0 | 0 | 0 | 0 | 16 | 0 | 0 |
| Bas | 2 | 0 | 0 | 0 | 14 | 0 | 0 |
| Rick | | | | | | | |
| Week 6 | | | | | | | |
| Lars | 0 | 0 | 0 | 0 | 20 | 0.25 | 0 |
| Sebastiaan | | | | | | | |
| Michiel | 0 | 0 | 0 | 0 | 20 | 0 | 0 |
| Bas | | | | | | | |
| Rick | | | | | | | |
| Week 7 | | | | | | | |
| Lars | | | | | | | |
| Sebastiaan | | | | | | | |
| Michiel | | | | | | | |
| Bas | | | | | | | |
| Rick | | | | | | | |
| Week 8 | | | | | | | |
| Lars | | | | | | | |
| Sebastiaan | | | | | | | |
| Michiel | | | | | | | |
| Bas | | | | | | | |
| Rick | | | | | | | |