Mobile Robot Control 2024 Wall-E: Difference between revisions

(No difference)

| |

Latest revision as of 20:01, 28 June 2024

Group members:

| Name | student ID |

|---|---|

| Coen Smeets | 1615947 |

| Sven Balvers | 1889044 |

| Joris Janissen | 1588087 |

| Rens Leijtens | 1859382 |

| Tim de Wild | 1606565 |

| Niels Berkers | 1552929 |

Exercise 1

Instructions

- Create a folder called

exercise1in themrcfolder. Inside theexercise1folder, create ansrcfolder. Within thesrcfolder, create a new file calleddont_crash.cpp. - Add a

CMakeLists.txtfile to theexercise1folder and set it up to compile the executabledont_crash. - Q1: Think of a method to make the robot drive forward but stop before it hits something. Document your designed method on the wiki.

- Write code to implement your designed method. Test your code in the simulator. Once you are happy with the result, make sure to keep these files as we will need them later.

- Make a screen capture of your experiment in simulation and upload it to your wiki page.

Implementations:

The videos for the don't crash simulations have been made with the use of the Teleop command. The inputs differ per video, however, the same logic for where to go has been used. Firstly, the drive forward and do not hit a will is tested. Then a corner is tested while driving closely next to a wall. As the teleop overwrites the 0 velocity input of the don't crash, it may be difficult to see that the robot is stopping. Take into account that if the robot is jolty, it is being stopped by the don't crash code.

Coen Smeets:

The provided C++ code is designed to prevent a robot from colliding with obstacles by using laser scan data and odometry data. Here's a step-by-step explanation of how it works:

- Initialization: The code starts by initializing the emc::IO object for input/output operations and the emc::Rate object to control the loop rate. It also initializes some variables for later use.

- Main Loop: The main part of the code is a loop that runs as long as the io.ok() function returns true. This function typically checks if the program should continue running, for example, if the user has requested to stop the program.

- Reading Data: In each iteration of the loop, the code reads new laser scan data and odometry data. If there's new data available, it processes this data.

- Processing Odometry Data: If there's new odometry data, the code calculates the linear velocity vx of the robot by dividing the change in position by the change in time. It also updates the timestamp of the last odometry data.

- Collision Detection: If there's new laser scan data, the code calls the collision_detection function, which checks if a collision is likely based on the laser scan data. If a collision is likely and the robot is moving forward (vx > 0.001), the code sends a command to the robot to stop moving. It also prints a message saying "Possible collision avoided!".

- Sleep: At the end of each iteration, the code sleeps for the remaining time to maintain the desired loop rate.

This code ensures that the robot stops moving when a collision is likely, thus preventing crashes.

Collision detection function

The collision_detection function is a crucial part of the collision avoidance code. It uses laser scan data to determine if a collision is likely. Here's a step-by-step explanation of how it works:

The collision_detection function is a crucial part of the collision avoidance code. It uses laser scan data to determine if a collision is likely. Here's a step-by-step explanation of how it works:

- Function Parameters: The function takes four parameters:

scan,angle_scan,distance_threshold, andverbose.scanis the laser scan data,angle_scanis the range of angles to consider for the collision detection (default is 1.7),distance_thresholdis the distance threshold for the collision detection (default is 0.18), andverboseis a boolean that controls whether the minimum value found in the scan data is printed (default is false). - Zero Index Calculation: The function starts by calculating the index of the laser scan that corresponds to an angle of 0 degrees. This is done by subtracting the minimum angle from 0 and dividing by the angle increment.

- Minimum Distance Calculation: The function then initializes a variable

min_valueto a large number. It loops over the laser scan data in the range of angles specified byangle_scan, and updatesmin_valueto the minimum distance it finds. - Verbose Output: If the

verboseparameter is true, the function prints the minimum value found in the scan data. - Collision Detection: Finally, the function checks if

min_valueis less thandistance_thresholdand within the valid range of distances (scan.range_mintoscan.range_max). If it is, the function returnstrue, indicating that a collision is likely. Otherwise, it returnsfalse.

This function is used in the main loop of the code to check if a collision is likely based on the current laser scan data. If a collision is likely, the code sends a command to the robot to stop moving, thus preventing a crash.

Potential improvements:

While the current collision detection system is effective, it is sensitive to outliers in the sensor data. This sensitivity can be problematic in real-world scenarios where sensor noise and random large outliers are common. Safety is about preparing for the worst-case scenario, and dealing with unreliable sensor data is a part of that. Here are a couple of potential improvements that could be made to the system:

- Rolling Average: One way to mitigate the impact of outliers is to use a rolling average of the sensor data instead of the raw data. This would smooth out the data and reduce the impact of any single outlier. However, this approach would also introduce a delay in the system's response to changes in the sensor data, which could be problematic in fast-moving scenarios.

- Simplifying Walls to Linear Lines: Given that the assignment specifies straight walls, another approach could be to simplify the walls to linear lines in the data processing. This would make the collision detection less sensitive to outliers in the sensor data. However, this approach assumes that the walls are perfectly straight and does not account for any irregularities or obstacles along the walls.

This process involves several steps that may not be immediately intuitive. Here's a breakdown:"- All walls in the restaurant will be approximately straight. No weird curving walls." [1](https://cstwiki.wtb.tue.nl/wiki/Mobile_Robot_Control_2024)

- Data Cleaning: Remove outliers from the data. These are individual data points where the sensor readings are clearly incorrect for a given time instance.

- Data Segmentation: Divide the data into groups based on the angle of the sensor readings. A group split should occur where there is a significant jump in the distance reading. This helps differentiate between unconnected walls.

- Corner Detection: Apply the Split-and-Merge algorithm to each wall. This helps identify corners within walls, which may not be detected by simply looking for large jumps in distance.

Keep in mind that the complexity of the scene can significantly impact the computation time of this algorithm. However, given the scenes shown on the wiki, the additional computation time required to generate clean lines should be minimal. This is because the number of walls is relatively small, and the algorithm can be optimized in several ways. For instance, instead of processing each data point, you could select a subset of evenly spaced points. On average, these selected points should accurately represent the average location of the wall.

Based on my experience, prioritizing safety enhancements in the system is crucial. Establishing a strong safety foundation from the outset is key. By implementing robust safety measures early in the development process, we can effectively mitigate potential risks and issues down the line.

Rens Leijtens

General structure

The code follows the general structure as outlined below:

- including libraries

- main starts

- initialization of objects and variables

- while loop that is active while io.ok() is true

- the laser and odometry data is checked and processed

- the movement is enabled or disabled if necessary

The following libraries are included in the code:☃☃

- iostream for print to terminal

- emc/rate for fixed loop time

- emc/io for connecting with the simulated robot

Movement

The movement is controlled using a simple boolean called "move" which will move the robot if true and stop the robot if false. The movement in this example is only forward by using the sendBaseReference command.

Sensor data processing

Firstly it is checked whether new data is received from the laser or odometry data. Thereafter for every point the laserdata is checked. if the laserdata is gives a distance which is closer then 20 [cm] the robot will make the boolean "move" false and the robot will thus stop moving.

Improvements

The current implementation considers measurements from all directions, which might not be optimal for scenarios where the robot needs to navigate through narrow hallways or close to walls.

Niels Berkers:

The code uses the standard library header <iostream>, which is used when printing outputs to the terminal. Furthermore, the <emc/io.h> package provides the structures and functions to read data from the LiDar sensor and other hardware information needed for robot movement. Finally the package <emc/rate.h> is used to set the appropriate frequency for a while loop.

Implementation:

After the packages are included the main function is called, which starts by creating an IO object of the class IO and setting the frequency to 10 Hz. Afterwards, a while loop starts running that only breaks if the IO tools are not ok. Inside the while loop the reference velocity is set so that the robot drives forward with a velocity of 0.1. Afterwards, if new laser data is obtained, it loops over all indexes of the scan data and checks if the a range is within 0.3 meters. If that is the case, the reference velocity is set to 0 for all directions and thus the robot stops driving.

Improvements:

The code can be improved by only looking at a window that is in front of the robot. When this would be implemented, it would also be possible to drive parallel and close to a wall. This would also decrease the amount of indexes that need to be checked. The code can also be improved by sending out a signal when the robot is too close to a wall, which could be handy in a future and possibly bigger algorithm.

Joris Janissen:

Packages Used

emc/io.h: This package provides functionalities for interfacing with hardware, including reading laser and odometry data, and sending commands to control the robot's movement.emc/rate.h: This package enables control over the loop frequency, ensuring the code executes at a fixed rate.iostream: This package facilitates input/output operations, allowing for the printing of messages to the console.cmath: This package provides mathematical functions used in the implementation.

Implementation

- Initialization: The

main()function initializes the IO object, which initializes the input/output layer to interface with hardware, and creates a Rate object to control the loop frequency. - Data Processing: Within the main loop, the code reads new laser and odometry data from the robot. It then calculates the range of laser scan indices corresponding to a specified angle in front of the robot.

- Obstacle Detection: The code iterates over the laser scan data within the specified range. For each laser reading, it checks if the range value falls within the valid range and if it detects an obstacle within a certain stop distance threshold.

- Action: If an obstacle is detected within the stop distance threshold, the code sends a stop command to the robot and prints a message indicating the detected obstacle and its distance. Otherwise, if no obstacle is detected, the code sends a command to move the robot forward.

- Loop Control: The loop sleeps for a specified duration to maintain the desired loop frequency using the

r.sleep()function.

Improvements

- Data segmentation: The data points can be grouped to reduce computation time.

- Collision message: The collision message now spams the terminal, it can be improved to plot only once.

- Rolling average: The rolling average can be taken from the data points to filter out outliers or noise.

Conclusion

This documentation provides insights into the implementation of obstacle avoidance using laser scans in a robotic system. By leveraging the EMC library and appropriate data processing techniques, the robot can effectively detect obstacles in its path and navigate safely to avoid collisions, there are however some improvements.

Sven Balvers:

implementation:

Header Files: The code includes some header files (emc/io.h and emc/rate.h) which contain declarations for classes and functions used in the program, as well as the standard input-output stream header (iostream).

Main Function: The main() function serves as the entry point of the program.

Object Initialization: Inside main(), several objects of classes emc::IO, emc::LaserData, emc::OdometryData, and emc::Rate are declared. These objects are part of a framework for controlling a robot.

Loop: The program enters a while loop that runs as long as io.ok() returns true. This indicates that the I/O operations are functioning correctly, and thus is connected.

Control Commands: Within the loop, it seems to send a reference command to the robot's base at a fixed rate of 5 Hz using io.sendBaseReference(0.1, 0, 0). This command tells the robot to move forward at a certain velocity.

Data Reading: It reads laser data and odometry data from sensors attached to the robot using io.readLaserData(scan) and io.readOdometryData(odom).

Obstacle Detection: If new laser data or odometry data is available, it checks the laser scan data for any obstacles within a certain range (less than 0.3 units). If an obstacle is detected, it sends a stop command to the robot's base using io.sendBaseReference(0, 0, 0), setting the value of the speed to 0.

Rate Control: The loop sleeps for a fixed duration (r.sleep()) to maintain the specified loop rate.

Improvements:

To prevent the noise of the measurement data there are multiple approaches, we can take a moving average of the samples this will cause a delay of the data. We can also take point near one another to merge as one point and taking the average. Than there isn't a delay present.

Another improvement that can be made is to limit the vision of the robot to a certain angle and not the full range from -pi to +pi. This will ensure the robot is able to pass through a narrow hall, which is important for the final code.

Tim de Wild

Implementation:

1. Initialization

- An `emc::IO` object is created to handle the robot's I/O operations.

- An `emc::Rate` object is set to maintain a loop rate of 10 Hz.

2. Main Loop

- The loop runs as long as the connection is good (`io.ok()`).

- Laser and odometry data are read into `scan` and `odom`.

- A command is sent to move the robot forward at a speed of 0.1 units.

- If new laser or odometry data is available, it checks the laser scan ranges.

- If any distance in the laser data is less than 0.25 units (indicating an obstacle), the robot stops.

- The loop sleeps to maintain the 10 Hz rate.

3. Exit

- The loop exits if the connection is lost, and the program ends.

Improvements:

The Don't Crash code is as simple as can be, due to this the full range of the lidar data that is used to determine if the robot is too close to a wall is used. This prevents the robot from being able to drive close to a wall. A simple improvement could be to only use the laserpoints in front of the robot.

Another improvement would be to rotate the robot away from a wall when it gets too close.

Exercise 1 (practical)

You now know how to work with the robot. For your first session, we suggest you start by experimenting with the robot. Document the answers to these questions on your wiki.

Go to your folder on the robot and pull your software.

On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? How do your own legs look like to the robot.

The noise of the signal from the robot's Lidar is difficult to quantify, as noticed during testing of "dont_crash" file. When the robot drives straight towards an object, it stops abruptly, but when it approaches a wall at a sharp angle, it stutters slightly. This happens because only a limited number of data points are obtained in such cases, making the noise on the sensor apparent. The robot can only see object at the hight of the Lidar. The Lidar has a distribution of measuring points spreading a certain angle. Due to this, the Lidar can have problems detecting thin objects such as chair legs. Objects it can observe are flat surfaces. The robot's scanning capability doesn't cover a full 360 degrees around itself but instead sees a 180-degree angle centered at the front.

Take your example of don't crash and test it on the robot. Does it work like in simulation? Why? Why not? (choose the most promising version of don't crash that you have.)

The Don't crash code runs almost the same as the simulations. One important remark can be made about not running into people. The Lidar sees the legs of a person. However, feet extend drastically further out than the legs. Thus, the robot runs over feet as it sees the legs and think that it still has space left.

The most promising version of the code is Coen's version. This is due to the fact that it does not look at the full range but only the relevant rays in front of the robot. It can thus, in comparison to other implementations, drive close to walls that are to the side of it. This this especially relevant in narrow hallways.

If necessary make changes to your code and test again. What changes did you have to make? Why? Was this something you could have found in simulation?

We modified the code so that the robot doesn't stop when it reaches a wall but instead turns left until it has enough space to resume driving. What's noticeable is that the robot starts and stops repeatedly because it can drive a short distance and then stops again. To prevent this, the robot should turn slightly longer to maintain continuous movement. We could see this in the simulation however we only created this code to try when we were there.

From the real life test, it become apparant that some of the data might need to receive some smoothing. During turning some random measurements appear in front of the robot (To the side but just within the range that Coen's version tests). It thus become Jolty in turning. It can be solved in multiple ways. Firstly, don't crash can only prevent lateral velocity and not rotational. This will fix the issue that the robot rotates and still stops. The data can also be cleaned first with the use of a rolling average, however, this is not wanted at high velocities and low Lidar frequencies as measurements can differ more.

Artificial Potential Field

Background

Artificial Potential Field is to treat the robot's configuration as a point in a potential field that combines attraction to the goal, and repulsion from obstacles. The resulting trajectory is output as the path. This approach has advantages in that the trajectory is produced with little computation. However, they can become trapped in local minima of the potential field and fail to find a path, or can find a non-optimal path. The artificial potential fields can be treated as continuum equations similar to electrostatic potential fields (treating the robot like a point charge), or motion through the field can be discretized using a set of linguistic rules.[1] A navigation function[2] or a probabilistic navigation function[3] are sorts of artificial potential functions which have the quality of not having minimum points except the target point.

The Algorithm

The APF algoritm makes use of the position of the robot [math]\displaystyle{ q }[/math] and the position of the goal point [math]\displaystyle{ q_g }[/math] to compute the error between the two as [math]\displaystyle{ e(q) = q_g - q }[/math]. This error is used to compute the attractive force as seen below, where [math]\displaystyle{ k_a\gt 0 }[/math].[4] The magnitude of the attractive force is normalised and multiplied with a factor [math]\displaystyle{ k_a }[/math].

[math]\displaystyle{ f_a(q) = -\nabla U_a(q) = k_a \frac{e(q)}{\left\Vert e(q) \right\Vert} }[/math]

The error [math]\displaystyle{ e(q) }[/math] is also used to compute the repulsive force field, the formula can be seen below where [math]\displaystyle{ k_r\gt 0 }[/math], [math]\displaystyle{ \eta_i(q) }[/math] is the range value of a single LiDAR data point, and [math]\displaystyle{ \eta_0 }[/math] is the threshold for a scanned obstacle where the repulsive force field kicks in. The repulsive force is the force pushing the robot away from nearby objects scanned by the LiDAR data to prevent collisions.

[math]\displaystyle{ f_{r,i}(q) = -\nabla U_r(q) = \begin{cases} \frac{k_r}{\eta_i^2(q)}(\frac{q}{\eta_0} - \frac{1}{\eta_i(q)})\nabla\eta_i(q) & \text{if $\eta_i(q) \leq \eta_0$} \\ 0 & \text{if $\eta_i(q) \gt \eta_0$} \end{cases} }[/math]

The total Artificial Potential Field is computed as [math]\displaystyle{ f(q) = f_a(q) + \sum_{i=1}^p f_{r,i}(q) }[/math], where [math]\displaystyle{ p }[/math] is the total amount of LiDAR data points used. Right now they are not all used, since 1000 is unnecessary much. Each 10th LiDAR data point is used, resulting in 100 range values. The amount of range values can be changed and [math]\displaystyle{ k_r }[/math] will scale accordingly.

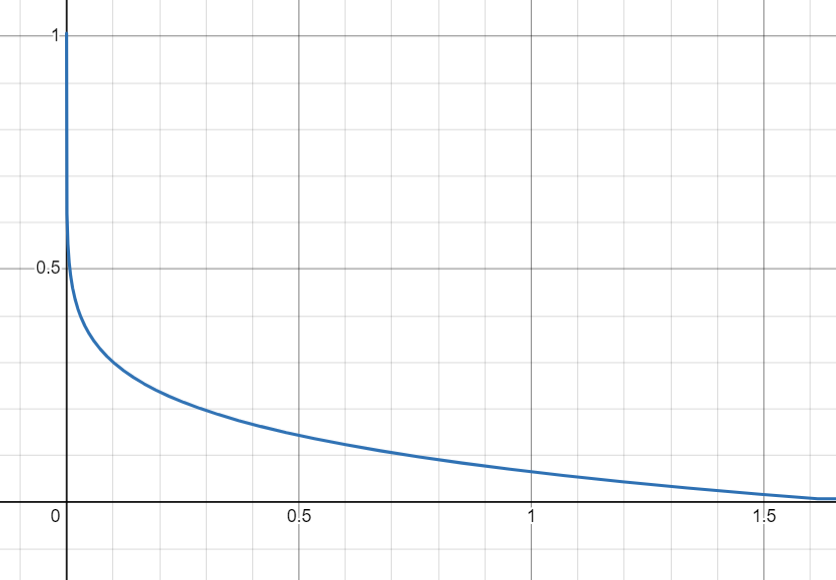

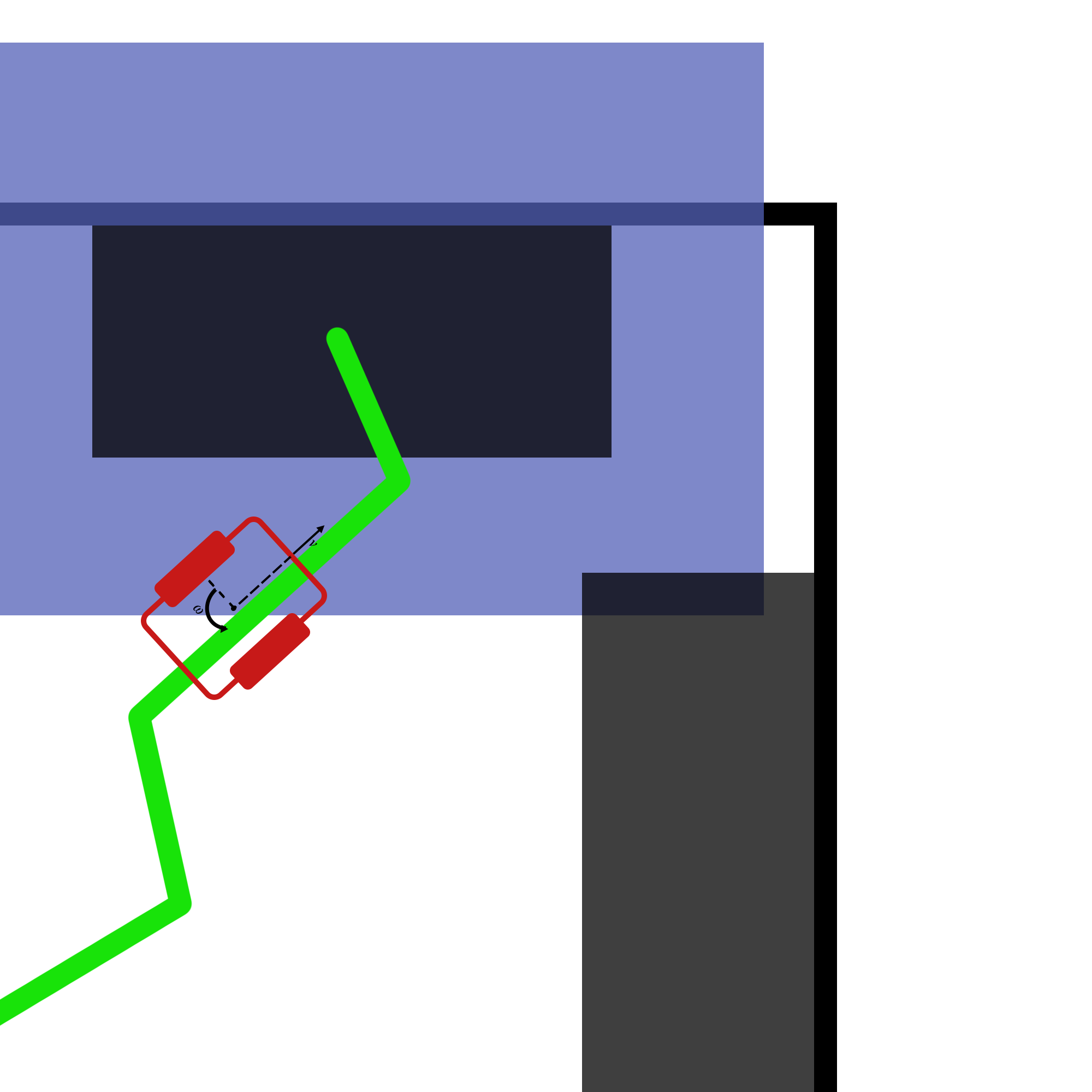

After the gradient field [math]\displaystyle{ f(q) }[/math] is calculated, it has to be translated to velocity inputs for the mobile robot. These velocity inputs come down to linear velocity and angular velocity: [math]\displaystyle{ \dot{q}=\begin{bmatrix} v\\ \theta \end{bmatrix} }[/math]. The calculation for the velocity is given below, where [math]\displaystyle{ \theta_f }[/math] is the angle the force field directs the robot to move in relative to the robot itself. The angular velocity input is determined by [math]\displaystyle{ \theta_f }[/math] and the forward velocity is determined by the magnitude of the force field on the location of the robot times a formula dependent on [math]\displaystyle{ \theta_f }[/math]. The shape of this formula can be seen in the figure on the right. When the force field pushes the robot straight ahead, the full magnitude of the force is utilized. However, when the force field directs the robot to turn, the forward velocity input is minimal, with a minimum of 0.08 m/s.

[math]\displaystyle{ v = \begin{bmatrix} \text{max}\left(1-\left(\frac{|\theta_f|}{\pi}\right)^{(\frac{1}{8})},0.08\right) \frac{1}{0.92} - 0.08\\ \theta_f \end{bmatrix} }[/math]

Simulation and testing videos

The robot in the simulation video to the right follows 3 goal points, these are not placed optimally as a robust global path planner should do. This is done on purpose to make sure the robot has to maneuver around obstacles to follow the path. It can be seen that the robot follows the path quite smoothly. If the goal points are positioned extremely bad, for example behind a wall, the algorithm will come in a local minimum and after some time ask for a new goal point that is reachable.

Advantages

One of the main advantages is the simplicity of the algorithm. It can be implemented with straightforward mathematical functions. Another advantage is the robot will always avoid obstacles and drive in a smooth path (if it doesn't encounter a local minimum.

Disadvantages

The main disadvantage is that the robot can become stuck in a local minimum or in al local minimum loop and will not reach its end goal. The effectiveness of the method heavily depends on the tuning of parameters like the strength of the attractive and repulsive potentials. Improper tuning can lead to poor performance. The method relies on local information and does not inherently consider the global environment. This can result in sub optimal paths or failure to find a path in complex environments. But this is where the global path finding comes in to action.

Solutions

First, the robot should never get stuck in a local minimum if the global path finding is sufficiently robust. However, if this does occur, two solutions have been implemented. Firstly, if the robot remains in a local minimum for 2.5 seconds and its position does not change significantly, the driving force will gradually be increased to help the robot escape. The second solution implemented is the detection of a situation where the robot remains in a local minimum for 30 seconds. The robot will then indicate that it wants an alternative route to reach its destination. This is done because the environment is dynamic and cannot be fully predicted.

To implement the integration of local planning with global planning, the global planner provides a list of way points for the local planner to follow. As the robot approaches a way point on the list, it switches to the next point on the list until the robot reaches its final destination. A real life example of how the global and local path planner work together can be seen in the video on the right. The problem here is that the dimensions of the "hero" robot are used and not the dimensions of the "bobo" robot. This results in the robot having some difficulties driving trough the straight hallway at the end. Also can't the robot localize itself, this is now done based on the starting position and the odometry data.

Dynamic Window Approach

Background

The Dynamic Window Approach (DWA)[5] serves as a local navigation algorithm tailored for mobile robots. It functions by analyzing both the present condition of the robot and the array of conceivable future states within a dynamically adaptable "window" of acceptable velocities and angular velocities. Each potential future state undergoes evaluation based on factors like its proximity to the goal, its clearance from obstacles, and its feasibility within the dynamic constraints of the robot.

The Algorithm

The computeDynamicWindow function calculates the permissible range of linear and angular velocities for the robot based on its current state and dynamic constraints. It determines the minimum and maximum linear velocities by considering the robot's current velocity, the maximum acceleration, and the time step:

[math]\displaystyle{ v_{min} = max(v_{min}, v - a_{max} \triangle t) }[/math]

[math]\displaystyle{ v_{max} = min(v_{max}, v + a_{max} \triangle t) }[/math]

Similarly, it computes the minimum and maximum angular velocities using the current rotational velocity, the maximum allowable yaw rate, and the time step:

[math]\displaystyle{ \omega_{min} = max(-\omega_{max}, \omega - \alpha_{max} \triangle t) }[/math]

[math]\displaystyle{ \omega_{max} = min(-\omega_{max}, \omega + \alpha_{max} \triangle t) }[/math]

The number of possible linear and angular velocities is then determined based on the specified resolution:

[math]\displaystyle{ n_{velocities} = \frac{v_{max} - v_{min}}{\small{\text{Velocity resolution}}} }[/math]

[math]\displaystyle{ n_{yawrates} = \frac{\omega_{max} - \omega_{min}}{\small{\text{Yawrate resolution}}} }[/math]

Memory is allocated for arrays to store these possible velocities, and each velocity value is assigned iteratively within the specified range. Finally, the function returns the dynamic window containing the calculated permissible velocities.

The calculateVelocityCost function determines the cost associated with the current linear velocity of the robot. It calculates the following cost by subtracting the current forward velocity from the maximum permissible velocity specified in the parameters:

[math]\displaystyle{ \small{\text{Velocity Cost}} = v_{max} - v }[/math]

This operation provides insight into how far the robot's velocity deviates from the maximum allowable value.

The calculateHeadingCost function evaluates the robot's orientation towards a goal, determining the cost of its current heading. This cost measures how well the robot is aligned with the goal. The function calculates the angle between the robot's current position and the goal point, then compares this angle to the robot's current heading. The absolute value of this difference is taken as the heading cost, which reflects how directly the robot is oriented toward its target.

[math]\displaystyle{ \small{\text{Heading cost}} = \left| \arctan \left( \frac{\sin(\text{angleError} - \omega)}{\cos(\text{angleError} - \omega)} \right) \right| }[/math], where [math]\displaystyle{ \text{angleError} = \arctan \left( \frac{y_{goal} - y_{pose}}{x_{goal} - x_{pose}} \right) }[/math]

The calculateClearanceCost function calculates the cost associated with the clearance of the robot from obstacles in its environment. It iterates over a simulated time horizon, updating the robot's pose based on its velocity. Within each time step, it iterates over the laser scan data transformed into a point cloud. For each point in the point cloud, it computes the distance between the robot's pose and the obstacle point in the world frame. The function updates the minimum clearance distance if a closer obstacle is detected. After iterating over all laser scan points within the time horizon, it returns the reciprocal of the minimum clearance distance as the clearance cost. If the robot is within a minimum threshold distance from an obstacle, it returns the maximum possible clearance cost.

Function calculateClearanceCost(pose, velocity, scan):

iPose ← pose

laserWorldData ← transformScantoPointCloud(scan,pose)

time ← 0.0

minr ← maxDistance

While time < maxTime:

iPose ← calculateNewpose(iPose, forwardVelocity, rotationVelocity, dt)

For i ← to size of laserWorldData - 1 with steps of 3

r ← distanceToObject

If r < minr:

minr = r

Increment time by dt

Return 1.0 / minr

The optimalInputVelocity function determines the optimal velocity input for the robot based on the given laser scan data, odometry data, current pose, current velocity, and goal position. It first transforms the laser scan data into a point cloud in the world frame. Then, it computes the dynamic window based on the transformed point cloud, current pose, and velocity. Within nested loops iterating over possible velocities and yaw rates within the dynamic window, it computes the cost associated with each velocity and yaw rate combination. The cost is calculated as a weighted sum of the forward velocity cost, the heading cost towards the goal, and the obstacle clearance cost, each multiplied by their respective parameters:

[math]\displaystyle{ G(v, \omega) = k_h \cdot h(v, \omega) + k_d \cdot d(v, \omega) + k_s \cdot s(v, \omega) }[/math]

Where:

- [math]\displaystyle{ h(v, \omega) }[/math]represents the target heading cost towards the goal.

- [math]\displaystyle{ h_k }[/math] represents the weight of the heading cost.

- [math]\displaystyle{ d(v, \omega) }[/math] represents the distance to the closest obstacle on the trajectory.

- [math]\displaystyle{ h_d }[/math] represents the weight of the clearance cost.

- [math]\displaystyle{ s(v, \omega) }[/math] represents the forward velocity.

- [math]\displaystyle{ h_s }[/math] represents the weight of the velocity cost.

The function then selects the velocity input with the minimum total cost. Additionally, it checks if the goal has been reached within a threshold distance and returns a tuple containing the optimal velocity, a boolean indicating whether the goal has been reached, and a boolean indicating if a new global path needs to be planned. Simplified pseudo code for calculating the optimal input velocity using DWA would look like:

Function optimalInputVelocity(scanData, odometryData, pose, vel, goal):

dw ← computeDynamicWindow(pose, vel)

total_cost ← ∞

For i from 0 to dw.nPossibleVelocities - 1 Do:

For j from 0 to dw.nPossibleYawrates - 1 Do:

forwardVelocity ← dw.possibleVelocities[i]

rotationalVelocity ← dw.possibleYawrates[j]

iPose ← calculateNewPose(pose, forwardVelocity, rotationalVelocity, dt)

cost ← costForwardVelocity * calculateVelocityCost(iVelocity) +

costTargetHeading * calculateHeadingCost(iPose, goalPoint) +

costObstacleClearance * calculateClearanceCost(iPose, iVelocity, scanData)

If cost < total_cost Then:

total_cost ← cost

optimalVelocity ← iVelocity

goalReached ← distanceToGoal < goalDistanceMargin

Return (optimalVelocity, goalReached, false)

Simulation and testing videos

In order to compare both approaches of the local navigation tests have been performed in both simulation as well as with the physical robot. In order to test the DWA algorithm the proper parameters needed to be tuned, which resulted in the following values:

| Parameter | Value | Unit |

|---|---|---|

| minVelocity | 0.0 | m/s |

| maxVelocity | 0.25 | m/s |

| maxAcceleration | 0.2 | m/s^2 |

| maxYawrate | 0.4 | rad/s |

| maxdYawrate | 0.4 | rad/s |

| velocityResolution | 0.005 | - |

| yawrateResolution | 0.005 | - |

| dt | 0.2 | s |

| maxTime | 3.0 | s |

| costWeightHeading | 0.05 | - |

| costWeightClearance | 2.0 | - |

| costWeightVelocity | 0.3 | - |

Advantages

- Real-time adaptation: DWA continuously adjusts robot velocity based on current conditions, enabling real-time response to changing environments.

- Dynamic constraints: By considering dynamic constraints such as maximum acceleration and yaw rate, DWA ensures that generated velocities are feasible and safe for the robot.

- Customizable parameters: DWA allows for fine-tuning through parameters like velocity and yaw rate resolution, as well as cost weights for different objectives, making it adaptable to various robot platforms and environments. (This could also be a disadvantage)

- Clearance-based planning: By incorporating obstacle clearance cost, DWA prioritizes paths with sufficient clearance, minimizing collision risks.

Disadvantages

- Sensitive to inaccurate sensor data: DWA heavily relies on accurate sensor data, and inaccuracies or limitations in perception may lead to sub-optimal or unsafe trajectories.

- Local Minima: In cluttered environments, DWA may get stuck in local minima, unable to find feasible trajectories due to limited exploration of velocity and yaw rate space.

- Customizable parameters: Although these adjustable parameters can guarantee adaptability, it also creates challenges when tuning since testing can be empirical.

Possible failures and solutions

Possible scenario: During simulation, it was observed that the robot faces unwanted movement when it cannot directly reach its goal due to curvature and velocities limitations from the dynamic window. As a result, the robot overshoots the target and continues rotating until it precisely aligns with the goal position. This behavior leads to inefficient movement and non-optimal paths, potentially increasing the likelihood of encountering local minima.

Solution:

To address this issue, tuning the parameters to prioritize the heading cost can help prevent the robot from overshooting the target. However, after implementing this adjustment, it was noted that the robot no longer moves smoothly along curved paths but instead frequently stops and rotates towards the goal. This abrupt and jerky movement not only lacks elegance but also raises the risk of getting trapped in local minima.

To enhance navigation efficiency and avoid circling around the target, integrating a 'goalReached' function within the local navigation algorithm proves effective. By incorporating a distance margin, such as 20 cm, the robot can detect its proximity to the next goal point and request a new target from the global planner. This proactive approach ensures that the robot continuously updates its trajectory towards the next waypoint, minimizing the risk of getting stuck in a radial loop. Since DWA performs well with a small number of waypoints, as demonstrated in simulations, this method optimizes navigation by seamlessly transitioning between successive goals, resulting in smoother and more efficient paths.

To seamlessly integrate local planning with global planning, the global planner provides a sequence of waypoints representing key locations for the robot to visit. The local planner, in this case the DWA, dynamically adjusts the robot's trajectory to navigate towards each waypoint while avoiding obstacles and adhering to dynamic constraints. Continuous coordination between the global and local planners ensures smooth navigation towards the final destination, with the local planner updating its trajectory as the robot approaches each waypoint. A real life example of how the global and local path planner work together can be seen in the video on the left. In this video it can be seen that the robot moves reasonably well around the map, without the implementation of localization. Note that the map compared to simulation is not exactly the same, since at for example the second to last corner/turn the wall should be extended a bit more. For this reason the robot now seems to move around the corner with a very large distance. This would be resolved when implementing localization.

In the video on the right it can be seen how DWA approach an unexpected object. Although the object is positioned on its path, the robot is able to smoothly adapt and move around the object. It can however be seen that the toes of Rens are not detected by the LiDar, therefore we continue looking into parameter tuning and safety margins.

Probabilistic Road Map

It must be noted that this section has been heavily influenced by Coen Smeets's BEP report. Ideas and abstracts have been taken from this report as it was written by a groupmember.[6]

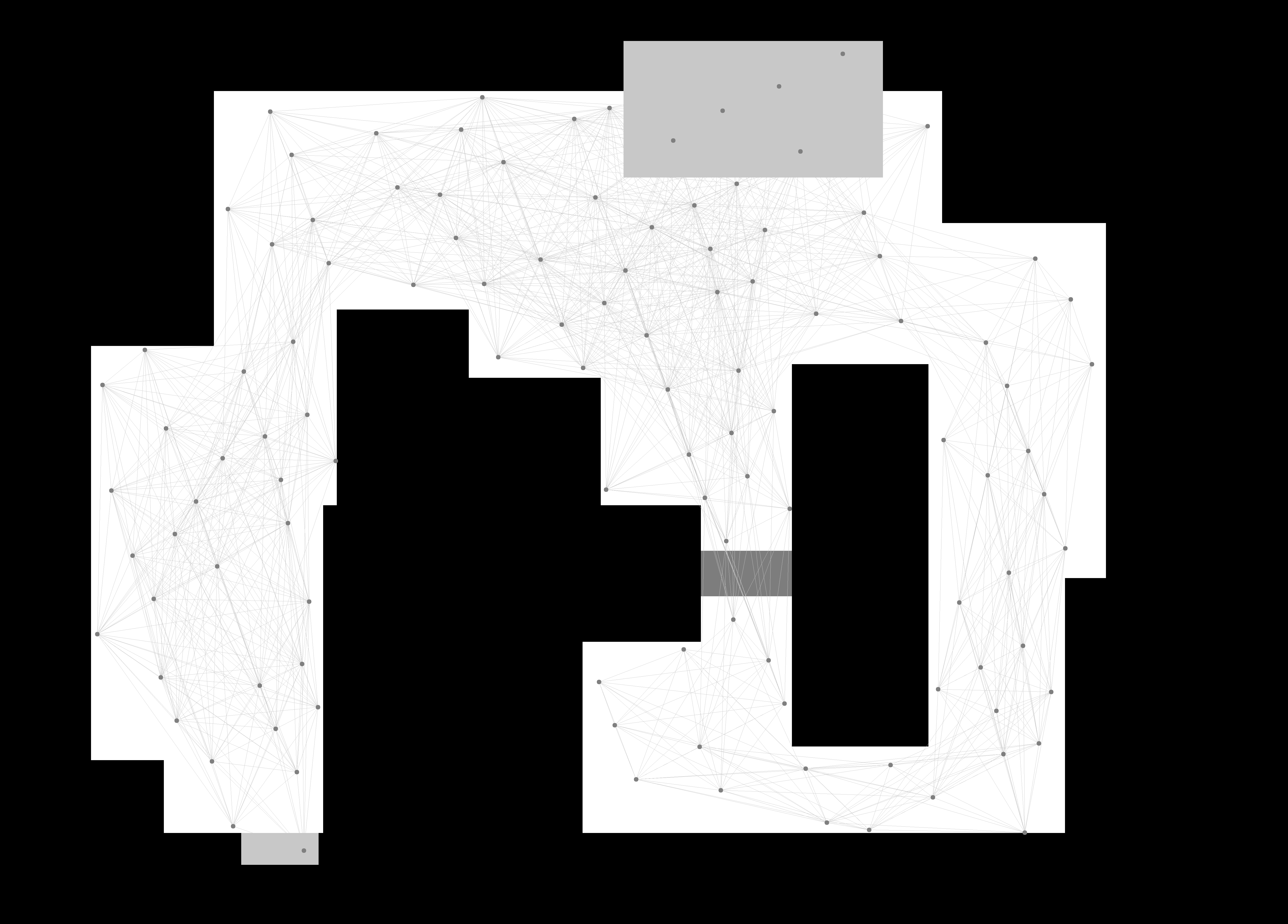

Probabilistic Road Maps, henceforth noted as RPM, are a method of creating a network in which a dedicated algorithm can find the shortest route. In order to be a good network for the purpose of navigating a mobile robot, the network has to be a correct representation of the enviroment where safety is of importance; Edges between nodes are safe. There are several methods to create a network, however, Tsardoulias et al. (2016) compared several techniques to optimise path planning and concluded that space sampling methods are most effective in two-dimensional Occupancy grid map, OGM.[7] The data for the environment has been pre-parsed to an occupancy grid map by the course. Space sampling shows great success rate, small execution times and short paths without excessive turns.

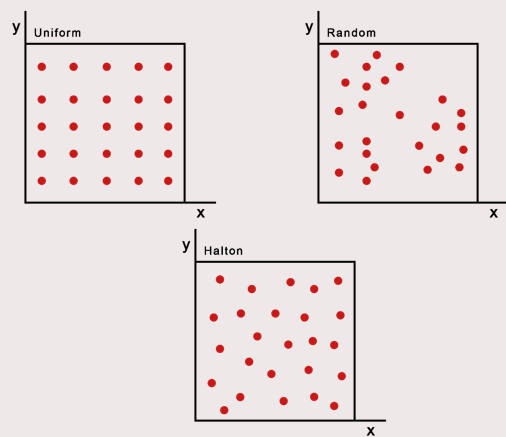

For space sampling, the Halton sequence technique presented in Tsardoulias et al. (2016) has been adapted to create a network, G. The network is a simplified representation of the movable space in the OGM. The Halton sequence combines the advantages of random sampling and uniform sampling. In random sampling, every cell can be sampled independently of its location, ensuring an unbiased representation. On the other hand, uniform sampling ensures that the sampled points are evenly distributed. Therefore, the Halton sequence offers the benefits of both approaches simultaneously. The network will consist of nodes, the sampled points in the OGM, and links between nodes, possible paths. The Halton sequence has low discrepancy and thus appears random while being deterministic.[8] It samples the space quasi-randomly but with an even distribution. The space is sampled in 2D by using a sequence of bases 2 and 3. The higher the base the more computationally demanding the calculations are, thus, base three is chosen in the evaluation between evenly spreading the nodes and computational power.[8] The sequences are subsequently scaled to the space the OGM takes up by using the number of rows and columns for the x and y coordinates, respectively and with the use of the resolution of the map.

The created two-dimensional Halton sequence contains the possible locations of nodes within the OGM. However, each node is additionally checked to determine whether it is located within a cell that is considered free. This check is necessary as the area spanned by all free cells can still contain occupied cells. Nodes should also not be placed in free cells close to occupied cells where collisions between the robot and the obstruction still occur. Free cells close to occupied cells are found by 'inflating' the occupied cells. This process operates by iterating over each pixel in the map, and if the pixel is identified as part of an obstacle, it sets the surrounding pixels within a specified radius to also be part of the obstacle.

When all nodes are placed, edges are created between each node with specific rules to ensure safety and an efficient network. Firstly, each edge should not surpass a distance threshold. This is mostly to optimise the second rule's calculations where it states that each edge should not pass through an occupied cell. This safety rule is checked with the use of Bresenham's line algorithm. Bresenham's line algorithm is an efficient method used in computer graphics for drawing straight lines on a grid by minimizing the use of floating-point arithmetic and rounding errors.[9] Edges are only placed when they are safe and not unnecessarily long. An additional minimum threshold for the distance can be added but by the use of the Halton sequence, as explained above, a certain uniformness in distribution of the quasi-random points can be assumed.

Combining these principles of placing nodes and edges, create a coherent network in an environment expressed in an occupancy grid map. This network is subsequently used in the A* path planning algorithm.

Psuedo Code

Function HaltonSequence(size, base):

sequence ← []

For i ← 1 to size Do

f ← 1

r ← 0

while i > 0 Do

f ← f / base

r ← r + f * (i mod base)

i ← floor(i / base)

sequence.append(r)

Return sequence

Function BresenhamLine(x0, y0, x1, y1):

points ← []

dx ← abs(x1 - x0)

dy ← abs(y1 - y0)

sx ← if x0 < x1 then 1 else -1

sy ← if y0 < y1 then 1 else -1

err ← dx - dy

while True Do

points.append((x0, y0))

If x0 == x1 and y0 == y1 Then

Break

e2 ← 2 * err

If e2 > -dy Then

err ← err - dy

x0 ← x0 + sx

If e2 < dx Then

err ← err + dx

y0 ← y0 + sy

Return points

A* path planning

Background

The A* (A-star) algorithm is a popular and widely used path finding and graph traversal algorithm. It is especially well-known for its use in applications such as AI in games, robotics, and GPS navigation systems. The A* algorithm combines the strengths of Dijkstra's algorithm [10] and Greedy Best-First-Search to efficiently find the shortest path between nodes in a graph. Staring form a specific node, it aims to find a path to the given goal node having the smallest cost. It does this by maintaining a tree of paths originating at the start node and extending those paths one edge at a time until the goal node is reached. At each iteration the algorithm needs to determine which part to extend. It does this by taking in to account the cost to come and the heuristic cost to go to the end node. The heuristic cost is can be different for different implementations of the algorithm, here however it is the absolute distance between the node that is being checked and the final node. It is important that the heuristic cost is never over estimated. The algorithm described so far gives us only the length of the shortest path. To find the actual sequence of steps, the algorithm can be easily revised so that each node on the path keeps track of its predecessor. After this algorithm is run, the ending node will point to its predecessor, and so on, until some node's predecessor is the start node.

Questions & Answers

More efficient note placement

A more efficient way of placing the nodes is to place nodes only in the corners where the robot would, or can change direction, this makes the graph easier to navigate due to the fewer points present. See figure below.

https://dsdwiki.wtb.tue.nl/images/Beter_node_placement.png

Halton node placement already improves, as previously noted, both the randomness and the uniformess over a grid or a random approach. Thus, there is already a more efficient note placement in place.

Is RPM for a maze efficient

It is not a good idea to use RPM for a maze since RPM is not well suited for narrow hallways. Points have to be randomly placed and will not be placed in the middle of the hallway. Thus, limiting the capacility. However, a grid approach has a similar problem where the grid should line up with the narrow hallway, otherwise the entire hallway will not be seen.

What would happen if the map updates?

If the map is updated, the robot will first attempt to follow the pre-determined path with the local planner. If it encounters a local minimum and cannot proceed, the robot will request a new global route and continue from its current position. This can be due to a limitation of the local navigation or because the path is not possible anymore due to the dynamic environment. If the second reason is the case, the map will be updated and a new path will be planned.

Combining PRM with A*

Test the combination of your local and global planner for a longer period of time on the real robot. What do you see that happens in terms of the calculated position of the robot? What is a way to solve this?

Due to the pose data being solely based on odometry data, the ground-truth will deviate from the calculated pose. Thus, the real robot is not following the correct path in the correct place anymore. This is solved by the use of active localisation. More can be read on this later.

Run the A* algorithm using the gridmap (with the provided nodelist) and using the PRM. What do you observe? Comment on the advantage of using PRM in an open space.

When using the gridmap, the robot travels through the middle of the corridors because the grid points are all located in the center of the corridors. However, when the robot uses PRM, it can cut corners slightly (if there are enough points). It is essential, though, that enough points are placed on the map to ensure that the route can be calculated.

The advantage of PRM is that in open spaces, it determines the most direct route and does not have to travel via a series of nodes through the space as it does with a grid map. This can be seen in the videos above, where the robot moves diagonally through a relatively large space.

Localization

Localization process:

To ensure the robot is aware of its current pose, a localization algorithm is implemented. This algorithm works as follows:

1. Initialization:

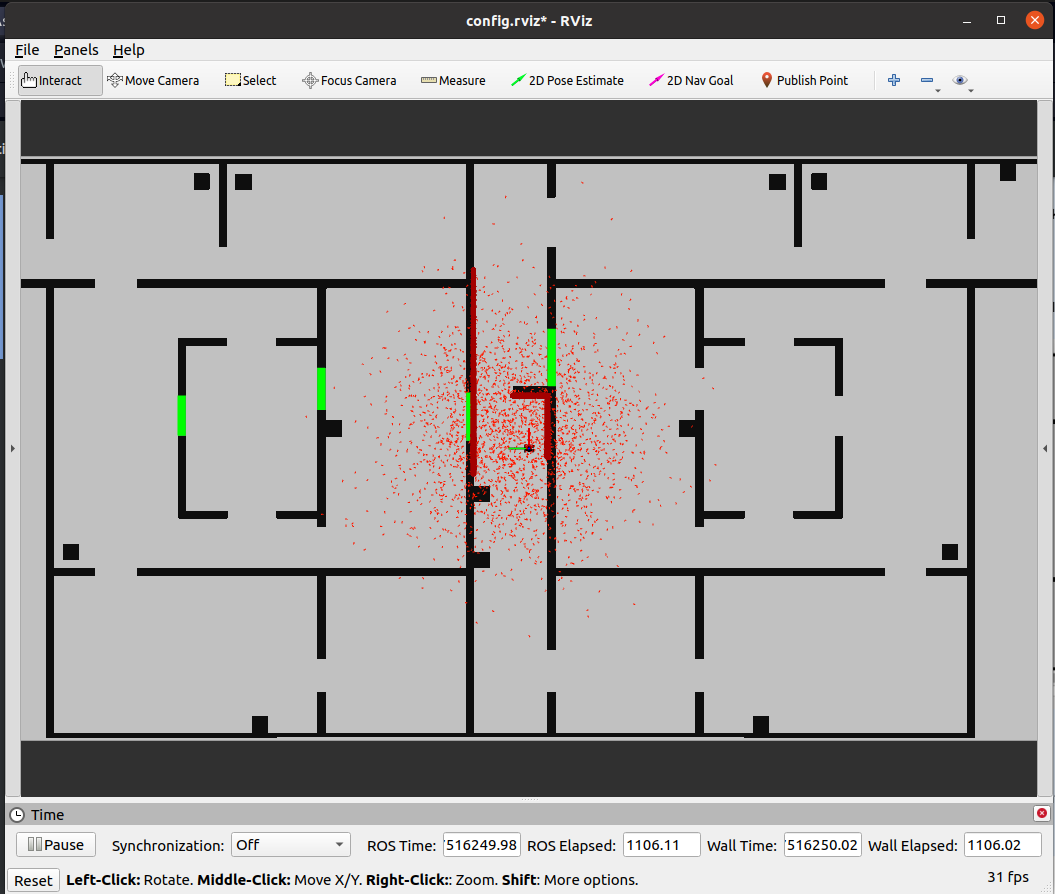

- The robot initializes a set of particles, each representing a possible pose (position and orientation) of the robot. These particles are distributed according to a prior distribution. This distribution may be uniform if there is no prior knowledge of the robot's location or more concentrated, following a Gaussian distribution, if there is some initial information available. In the case of the final assignment an initial guess is available and the Gaussian distribution is thus used for initialization.

The initialization is visualized in the figure on the right.

2. Pose Estimation:

From these particles an average pose is computed using the weighted average of all particles. In situations where particles form just one cluster this performs well, when multiple clusters of particles form the average pose will be a bad estimate.

Initialization including the estimated pose is visualized in the second figure on the right. Here a green arrow represents the final estimated pose, which is the weighted average of all particles. The particles are placed around the initial guess, since this initial guess is incorrect the estimate pose is also incorrect.

3. Motion Update:

As the robot moves, it uses odometry data to update the pose of each particle. The new pose of each particle is predicted based on the robot's motion model, which accounts for the control inputs (such as speed and steering angle) and incorporates some noise to reflect the uncertainty in the movement. This addition of noise is essential to account for drift. Without noise in the correction step the predicted pose would slowly divergence from the position of the robot.

The video on the right shows that the estimated position follows the robots movements, indicating the propagation is correctly implemented. Due to the fact that the localization is using no data of the measurements the particles slowly diverge, caused by the random noise that is implemented.

4. Measurement Update:

The robot then uses sensor data (such as laser range finders or cameras) to update the weights of each particle. This step involves comparing the expected measurements from each particle's pose to the actual sensor measurements. The weight of each particle is determined based on how well the predicted sensor readings from the particle's pose match the actual sensor data. Particles with poses that result in better matches receive higher weights.

The measurement model consist of four different components to calculate the probability of a predicted measurement being correct.

- p_hit

This first component calculates a probability of a measurement prediction corresponding to the actual sensor measurement.

- p_short

This represent the probability of the measurement being shorter than the prediction, for example due to an unexpected obstacle being present.. This is necessary in dynamic environments such as the one used for the final assignment.

- p_max

The ' p_max' component incorporates the probability for when no object is detected, which could also happen on non-reflective surfaces.

- p_rand

This last component is uniform over all ranges and accounts for random measurements due to for example noise or errors.

Combining these four forms the final likelihood of a measurement given the expected pose of the robot for a specific ray. For the full range of rays all probabilities are multiplied with one another. For a high number of rays the product will then become incredibly small (since the probability is between 0 and 1). This can cause numerical underflow, where the numbers are too small to accurately represent.

A solution would be to use the log-likelihood of the probability, which is often used in cases like this. Since the number of rays for the final use-case will not be extreme this does not have to be implemented here.

5. Resampling: To address the problem of particle depletion, where particles with very low weights contribute little to the estimate and effectively get lost, a resampling step is performed. This step involves selecting a new set of particles from the current set, with replacement, where the probability of selecting a particle is proportional to its weight. All these weights are normalized after resampling to ensure they sum up to 1.

The resampling methods that are applied are multinomial resampling and stratified resampling.

- The multinomial method samples according to the weight of the particles, the weight represents the probability that a particle is sampled. This straightforward method In specific situations it might be the case that only higher weight particles are resampled leading to a loss of variance if the same particles keep getting selected. In a situation where these particles are accurately representing the pose of the robot this method is preferred.

- The stratified method subdivides the particles into different groups, in each group particles are resampled. For this method the diversity of the particles is thus guaranteed, also particles with a lower weight are resampled. In situations where the estimated pose is incorrect this method would be preferred.

6. Iteration:

The prediction, correction, normalization, and resampling steps are repeated continuously as the robot moves and receives new sensor data. Over time, the set of particles converges to represent the most likely pose of the robot.

Code structure:

The code is structured into several components, each handling different aspects of the Particle Filter algorithm. Here's an overview:

1. ParticleFilter Class:

- Manages the overall Particle Filter algorithm, including updating and resampling particles.

- Implements methods like update, conventionalUpdate, configureResampler, and needsResampling.

2. ParticleFilterBase Class:

- Provides foundational functionalities for particle filters, such as initializing particles, propagating samples, computing likelihoods, and normalizing weights.

- Implements methods like propagateSamples, computeLikelihoods, set_weights, normaliseWeights, and utility methods like get_average_state.

3. Particle Class:

- Represents individual particles in the Particle Filter.

- Each particle has a weight and pose, and can compute its likelihood based on measurements and propagate its state based on odometry data.

- Implements methods like initialise, propagateSample, computeLikelihood, and utility methods like getWeight, setWeight, getPosition.

4. Resampler Class:

- Handles resampling of particles based on their weights using different algorithms like Multinomial and Stratified resampling.

- Implements methods like resample, _multinomial, _stratified, and utility methods for cumulative sum calculations.

5. Other Supporting Files:

- Files like tools.cpp, Robot.cpp, and World.cpp likely provide additional functionalities and data structures needed for the Particle Filter algorithm, such as random number generation, handling robot-specific data, and managing the world/environment.

Differences and Relationships Between Classes

ParticleFilter:

- This class extends the functionalities of ParticleFilterBase by providing more specific implementations related to particle filter updates and resampling.

- Contains specific methods for updating particles based on sensor measurements and odometry data.

- Uses the base class methods for propagating samples, computing likelihoods, and normalizing weights, but adds its own logic for when and how to resample particles.

ParticleFilterBase:

- Provides core functionalities and common methods needed by any particle filter.

- Implements methods for initializing particles, propagating them, computing their likelihoods, setting and normalizing their weights, and getting the average state.

- Acts as a foundation upon which more specialized particle filters (like ParticleFilter) can be built.

ParticleFilter:

- Manages a collection of Particle objects and handles the overall Particle Filter algorithm.

- Uses Particle objects to represent the state estimates and their associated weights.

- Calls methods on Particle objects to propagate their states and compute their likelihoods based on sensor data.

Particle:

- Represents an individual particle with a specific state (pose) and weight.

- Can propagate its state based on odometry data and compute its likelihood based on sensor measurements.

- Provides methods to initialize, propagate, and compute the likelihood of a particle, which are used by the ParticleFilter.

Physical Testing:

Physical testing is yet to be executed. It will be tested how the localization responds to unmodeled objects and e.g. how parameters must be tuned to work sufficiently for the final challenge.

Updates to previous sections

As some things were not yet described in the sections above these are now given here.

Localization - Physical Testing

The physical testing of the localization was done by rebuilding one of the maps on the test field. By putting the robot on the initial guess pose the algorithm could be tested, visualization in Rviz was done using io->sendPoseEstimate which shows where the robot thinks it is relative to the map.

On the right a video of the localization can be seen, the laser-data as seen by the robot is visualized in white. It can be seen that this data does indeed match with the map during the entire route. The localization algorithm, in this case, thus works correctly. In the middle of the map a box is added to see if the localization is robust enough to still function properly, indeed it is. It is noticeable that the average and thus estimated pose of the robot jumps around quite often. This turns out to not be a problem due to it only being small jumps every time.

Another video, with the physical test and RVIZ visualization, shows the localization doing its job. The spread of the particles is limited, but as long as the algorithm does not lose the correct position this is not a problem.

During testing it was noticed that the algorithm is quite robust, no parameters had to be tuned. Only when the robot moves through an open door, of which the localization algorithm thinks to be a closed wall does the estimated pose lose the correct pose. Recovering from this faulty estimated pose does usually not happen. To fix this the occupancy map used by the localization must be updated when a door is opened, the same happens for global path planning.

Diagrams

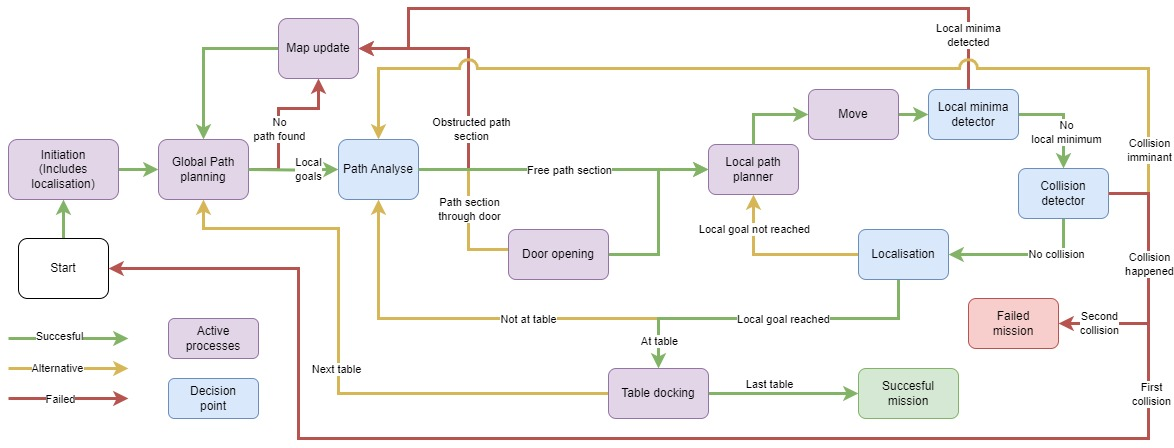

State diagram

Note: An important remark must be made about the state diagram. This is not a State machine of the robot. These are the processes of the code. How the single threaded code runs and in which algorithm/task the robot is in and to what algorithm it could progress to based upon decision points. A certain level of abstraction has been taken to create a clear overview. It was decided to create an overview of the code structure over the states of the robot as the phase of the project demanded a clear overview before each individual algorithm would be combined.

The flow between processes is indicated with arrow, these arrows split at some points, the condition for which arrow on the path is taken is indicated on the arrow itself.

A green arrow means a process was successful, e.g. a global path is planned. A red arrow shows that a processed failed, like there not being an available path to plan. The yellow arrow represents alternatives, e.g. the planning to a next table instead of finishing the mission.

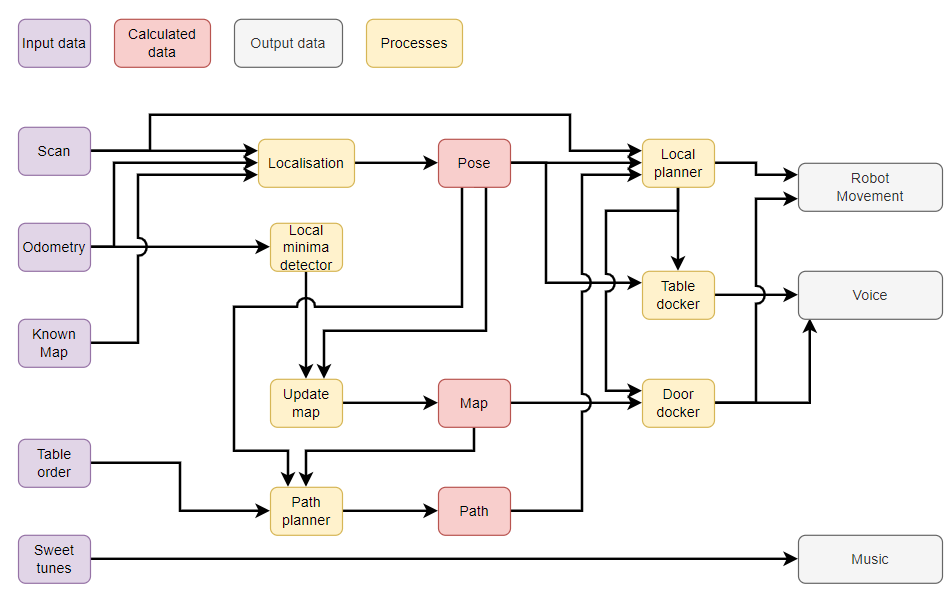

Data flow

This second diagram illustrates the data flow and process architecture of the system. Starting with the input data it is visualized in what processes this input data is used, what data is calculated based on this and finally what the output data is that results from all processes.

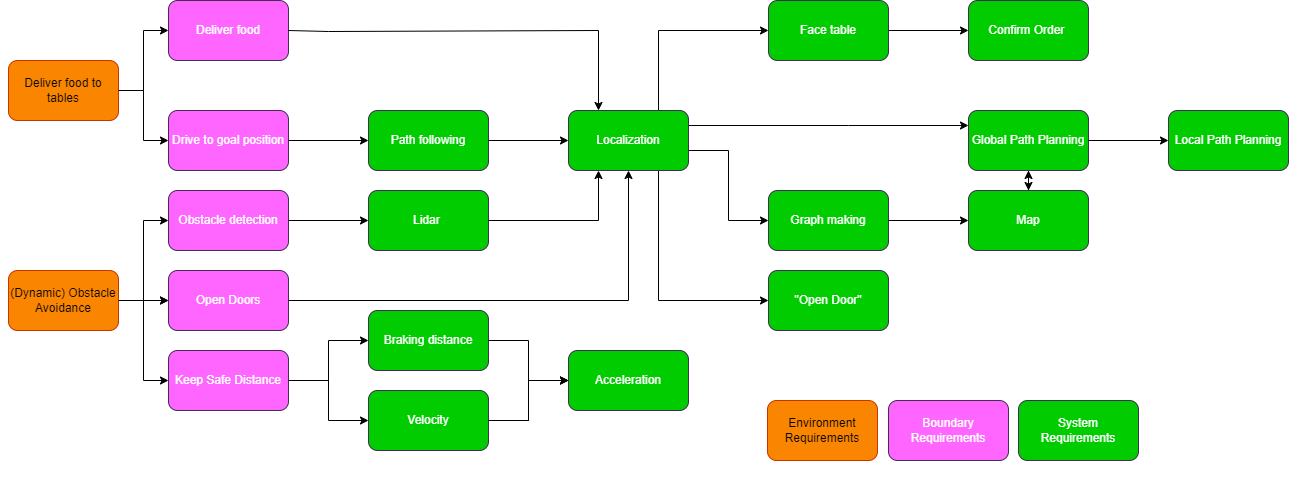

Requirements

Lastly, a small overview of the requirements is given. The requirements are split in three categories. The environment-, boundary- and system requirements. Again the diagram shows a flow of these requirements, where the boundary and system requirements are needed to meet the environment requirements.

Final Challenge

Additional logic

In order to obtain the goal of navigating the restaurant and deliver orders, several algorithms and logic pieces have to be added outside of the previously stated exercises. These algorithms will be presented in this section. They pertain initiation of localisation, table docking, door opening and a general overview of the system.

The parameters for the Artificial Potential Field APF

- k_a = 2: Gain for the attractive field (> 0)

- k_r= 0.00001: Gain for the repulsive field (> 0)

- eta_0 = 0.8: Threshold for the repulsive field in meters

- gamma = 2: A positive integer (> 0)

- k_lin = 1: Gain for the linear velocity

- k_ang = 1: Gain for the angular velocity

- eta_e = 0.2: Threshold for the local minimum in meters

- eta_t = 2.5: Threshold for the local minimum in seconds

- k_e = 10: Gain for increasing repulsive field in local minimum

- min_range_dec = 0.15: Minimum value for decreasing range (front robot)

- max_range_dec = 0.25: Maximum value for decreasing range (side robot)

- eta_odometry = {0.2, 0.2}: Threshold for the local minimum in meters (vector of doubles)

- range_step = 1: Range step size for LiDAR data used in APF

- eta_t_path = 20.0: Local minimum threshold for new path in seconds

- eta_e_path = 0.3: Local minimum threshold radius for new path in meters

Local minima and replanning

The downside of using the Artificial Potential Field algorithm is that it is sensitive of coming stuck in local minima while following the global path. There are two solutions programmed to prevent this. The first solution is gradualy increasing the repulsive field when the robot is stuck in a small local minimum to push the robot out of it. A small local minimum is considered when the robot is stuck in a circle with radius of 0.2 (eta_odometry[0]) meter, centered at the location where the robot moved 0.2 meter from the last local minimum, and stuck in an angle of 0.4 rad (eta_odometry[1]) for at least 2.5 seconds (eta_t). When it moves out of these thresholds the repulsive field is reset.

A large local minimum is considered when the robot is stuck in a circle with radius of 0.3 meter (eta_e_path), centered at the location where the robot moved 0.3 meter from its last updated local minimum location, for more than 20 seconds (eta_t_path). The robot recognizes that it is in a local minimum and it requests a new path, since its current path is blocked, when this happens. The map is now updated and at every range value within 0.5 meter of the robot a pixel is placed in the map. The 0.5 meter is chosen because objects further awaw than 0.5 meter are considered not to be hindering the robot. These pixels represent the detected walls and obstacles, also the ones that are not on the original map. Now a new PRM will be created with a map with inflates walls, same as before, and all pixels will inflate to be the actual objects. The PRM and A-star now recognize these objects and plan a new path around the blocked path to reach its final destination. An example of how this policy works can be seen in the video on the right, where obstacles are added which are not in the predescribed map.

Initiation of localisation

While the initiation of localisation was already created some additional logic was added so it worked better for the final challenge. For the final challenge the start pose of the robot is slightly known; It starts within a starting location. So firstly, it was added that during the start of the challenge when the pose is localised, it can only set a pose in the boundary of the starting area. When it localises outside of this region, it will start over again with localisation. To ensure that the localisation is different, the robot is made to rotate in its place during the localisation. This allows for different start positions for the localisation that might be better suited than the orientation the robot is started in.

The pose is considered localised when two consequetive localisation results return the same pose with an accuracy of one decimal point and the pose is within the starting position. This level of accuracy ensures a certain level of confidence while not being subject to sensor noise. This level was set with the use of simulations and then confirmed on the real-life robot.

Table docking

As is defined in the final challenge description, the robot has to deliver food at a table and then continue to the next table:

Position near the table, facing towards the table. The robot should be close enough for a customer to comfortably take their order from the tray. The exact part of the table that the robot stands next to does not matter.

With the initiation of the map tables are created and saved with several parameters: Corner points, centre point, and a boundary box. The corner points are for the drawing of the png that will be used for both the global navigation and the localisation. The centre point is the point where global navigation will navigate to. The boundary box is when the robot enters this box, it will start with docking at a table. Docking contains rotating to the table's centre, driving forward until it is close to the table, annouching its arrival and then rotating back and driving outside of the boundary box. This is a quick overview of the table docking algorithm where the next several subsections will explain each part more in depth:

Table initiation

As was mentioned before for each table, as defined in the provided json file, the data is extracted and 4 parameters are calculated and saved. (In addition to the three previously mentioned the table number is also saved for obvious reasons). The boundary box of the table is defined with the coordinate of the bottom left and of the top right point. These are calculated by going through each of the corners and extracting the minimum and maximum values of botht he x and y coordinates:

for (const auto& corner: corners) {

minX = std::min(minX, static_cast<float>(corner.x));

maxX = std::max(maxX, static_cast<float>(corner.x));

minY = std::min(minY, static_cast<float>(corner.y));

maxY = std::max(maxY, static_cast<float>(corner.y));

}

// Create top-left and bottom-right points for the bounding box

double offset = 0.4;

Point bottomLeft(minX-offset, minY-offset);

Point topRight(maxX+offset, maxY+offset);

boundingBox.push_back(bottomLeft);

boundingBox.push_back(topRight);

There is one particullary 'magic' number that deserves some attention: offset. This is how much the boundary box should extend in each direction in metres. The current value is chosen based upon maximising the boundary box while not allowing one particular drawback of the current implementation to occur. The boundary box should be big enough so the robot has place to dock from a safe position along the path and undock back to this safe position. When the boundary box is too small, the local planner will not come inside of the boundary box due to its repulsive nature near walls. However, making the value too big allows boundary boxes to extend past walls (as it is currently implemented). As can be seen from the figure the blue box extends past a wall, whenever the robot is on that side of the wall it wil occur that it will doc ans say it has reached the table on the wrong side of the wall. Thus, the value of 0.4 metres is chosen as it allows the robot to dock from a safe position that the local planner can achieve while also not allowing the robot to dock from the wrong side of the wall.

The boundary box could be made bigger when the boundary box calculation takes walls into account. They could also be added in the JSON file by hand. However, the current implementation appeared to suffice.

Global path planning

Several additions where made to the global path planner, see Global path planning[2], to make the nagivation to a table possible. It was decided that the goal for the path planner would be the centre of the table. This allows the robot to dock at any side of the table and shorten the path. Compared to pre-defining points near a table to dock at, this system of navigating to the centre and then docking is more flexible. When an unknown obstacle would be near a table the robot will just navigate around it and still dock as there is no predefined point. One of the additions that was made is the use of a flexible map for the PRM system. As the centre of the table is per definition not safe, it could not be added to the table previously. Currently, when a new table is selected to navigate to a new map is created where the goal table has no collision. This allows the PRM to create a network in this table as well. However, it can still be considered safe as when the robot gets near to this table the local planner kicks in and the boundary box is entered thus docking occurs. The path is thus unsafe but the unsafe portion can never occur with the robot.

Additionally, an addition was made to the starting point of the path. The pose of the robot is used as the first point within the path. We can assume that the current position of the robot is a safe position as otherwise the mission would have been stopped already. Thus, the assumption can also be made that the first point is safe within the path. Previously when the walls are blown up, it could occur that after docking at a table the robot's position was in the blown up table and thus 'unsafe' for the path planner. Thus, it was added that the first point is always safe as the robot is there currently. This grey box where the blown-up walls are made safe in the current location of the robot can also been seen in the image on the right in the lower left corner. This method works only if the localisation is accurate enough. When localisation fails and the pose of the robot is inside of a wall/table it will still try to create a path. However, this is an issue with localisation if it occurs and not with this implementation. During the extensive testing this has never occured but it must be mentioned.

Docking

The docking algorithm is started whenever the pose of the robot is within the boundary box of the table. This is coherent to 'the robot is close enough to the table that an extensive local planner is not needed anymore to reach the table as it can drive straight'.

Firstly, the robot will orientate itself towards the centre of the table. It will then try to drive straight until the robot is 0.2 metres close to an object seen from the LiDaR system. The 20 centimetres is takes as the value as it allows the robot to be safe while also being close enough to the table for people to comfortable grab stuff from it. When it discovers something in its way, it will annouche its arrival: 'I have docked at table _table number_' and wait five seconds. (A test time that simulates people grabbing their food). Afterwards, it will rotate 180 degrees and drive back until it is outside of the boundary box again. 180 degrees makes sure the robots leaves the same direction as it entered which is 'safe'. Whenever something walks infront of the robot while undocking, it will stop and wait until it has moved. This is of course not ideal as it might have another way to get out of the boundary box and to the next table. However, due to an unforeseen bug in the local planner, which kicks in when it is uncomfortable close to a table (when it has docked) it could not be used. Thus, a future point is to make sure the local planner works in all safe configurations. Then it could be used to undock at the table as well. After undocking has happened, the next table is selected and again the global navigation will try to plan a path and the cycle continues.

Door opening

The opening was an essential element for the final challenge as for a certain table, the robot could only reach it with opening the door. In principle, a lot of similarities happen with opening the door and docking at a table. A bounding box is also created for the door, only in the width of the door the bounding box is extended. Whenever the robot comes within this bounding box, it will rotate towards the door, perpendicular to the length of the door. This is done so the door is visible in the LiDaR. The door is asked to open and then the robot will check if the door has actually opened. It will wait five seconds and then ask to open the door again. If after five seconds the door has not opened, it will plan a new route with the door being blocked in the global path planner. If the door is opened, the map that is used for localisation is also updated so it knows the door is open and it can localise accordingly.

The assumption is made that all doors are closed at the start. Whenever, this assumption is not valid. The check if a door is open could be performed each time the pose is updated and update the map when a door is open/closed.

Check if a door has been opened

To check if the door is opened, the centre of the door is taken as representative of the door. The assumption is taken that if the centre is clear, the door has been opened. When the door gets stuck past the centre of the door but not open completely, the local planner will make sure it will go through the part that is open or reroute if the door is not open wide enough.

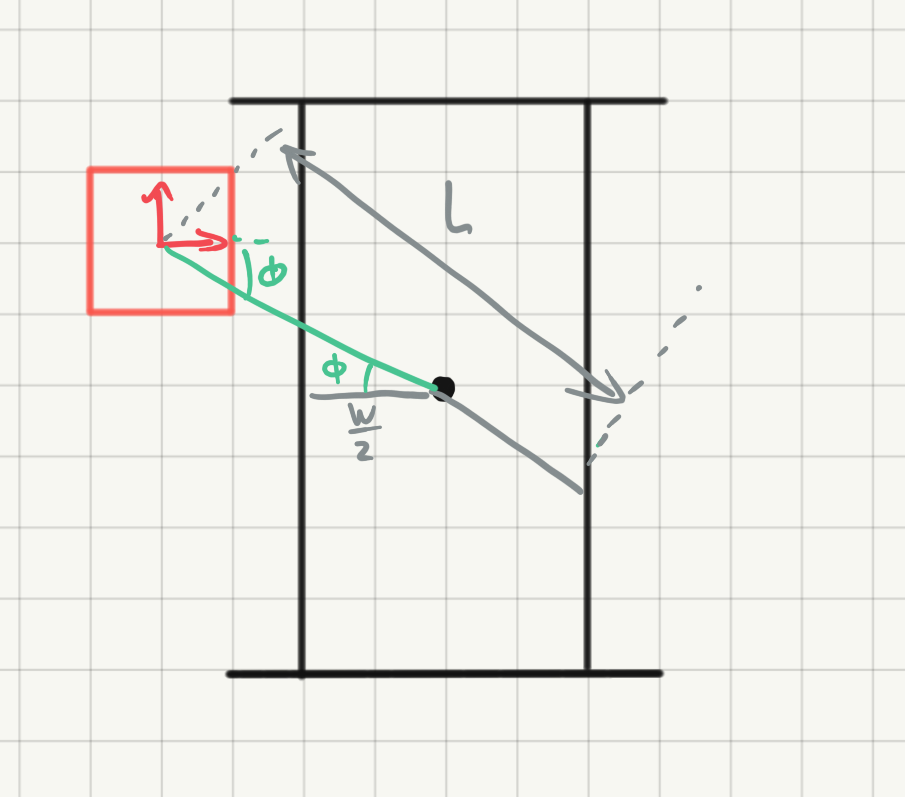

Firstly, the angle of the centre of the door is found in relation to the robot's pose. As the robot first rotates towards the door, the angle will fall within the LiDaR's range.

[math]\displaystyle{ \phi = \theta_{robot}-atan2\left(y_{door}-y_{robot}, \; x_{door}-x_{robot}\right) }[/math]

Secondly, the door width is calculated using the corners of the door that are saved. For this the minimum distance between the length in the x and y direction are taken to make sure the width and not length are taken. This only allows for doors that are horizontal or vertical compared to the global frame. Whenever this is not the case, the width of the door calculation must be updated.

Subsequently the distance to the centre of the door, [math]\displaystyle{ d_{centre} }[/math], from the position of the robot is calculated using pythagoras's theorem. Then using the width of the door, it is calculated what the minimum LiDaR distance should be if the door is open:

[math]\displaystyle{ d_{min,\;open}= d_{centre}-abs\left(\frac{width}{2\cos(\phi)}\right) }[/math]

Lastly, the LiDaR result in the direction of [math]\displaystyle{ \phi }[/math] is compared to the minimum distance if the door is open. Whenever the LiDaR exceeds this value, the door must be open.

Final challenge session

Updates between final test and final challenge

During the final test there was noticed that the robot was able to maneuver around all obstacles which are directly on his path except for objects like chairs. These are objects of which only small surfaces can be scanned, for a chair these are the 4 legs. There was no time to make changes and test again in real life, so a simulation map was made with a chair included (which was not in de map used to make the global path). The step size of the range data used in the APF (range_step) had a value of 10, which meant it only used 100 range values instead of 1000. This was done to make the APF run faster, but this was not necessary since the algorithm was fast enough. It was possible that the APF skipped the range values that detected the legs of the chair because of this step size, so the new value of range_step was set to 1 and all range values are used for the APF. This was tested in simulation and it did seem to work, the robot drove around the chair.

Final challenge

(The video of the final challenge is uploaded here Challenge 1 - DSCF9783, Challenge 2 - DSCF9785)

With all additions to the code the robot was ready for the final challenge in the full restaurant environment. The video shows the initialization of the localization, after which the robot estimates it position correctly and starts to move towards the first table. Here it goes through the docking and undocking procedure and the path to the second table is planned. The robots biggest enemy blocks part of the global path, meaning the local path must find a way around this chair. The chair is however difficult due to it only consisting of four thin legs. Unfortunately the robot manages to see the chair, but still drives straight into it. The first challenge hereby ended. In the video it can be seen that indeed the chair is seen by the robot, this was also visualized on the screen. The local path planning should use this information and drive around the chair. It does however not do this, and accelerates into the chair. It is not known why this happens, but a solution is given in the Recommendations section.

During a second challenge we decided to change the order of the tables (now 0 3 4) to allow showcasing of our door opening procedure. In addition, a box was placed under the chair the robot crashed into during the first challenge to improve the visibility. The initial part of the challenge is the same. The robot again sees the chair (or rather the box), but this time it moves around it towards the door. The APF seems to function fine here. At the door it asks for it to be opened and when it does the robot moves to the table and starts docking.

The undocking procedure is where it went wrong for this challenge. In undocking the robot rotates 180 degrees from the table and then drives forward until it is out of the boundary box of the table. This boundary box is represented by a certain range around the table. Due to the range only being set to 0.4, instead of the simulated 0.7. Due to this the robot moves away from the table, but only by a little bit. When it started moving the same error with APF and the chair occurs and the robot crashes into the chair. Having a bigger undocking range would have given the robot more range between itself and the chair. This would likely not give any problems with the APF.

Differences Simulation vs Real challenge

The situation of the final challenge was recreated in simulation. In this simulation the exact code was used that was used during the challenge. the problem encountered during the challenge was that the chair could not be detected. In the GIF next to this text it can be seen that the in the simulation the robot completes the challenge. The only difference that is seen during the real challenge and simulation, also in other tests beside the final challenge, is the avoidance of chairs as an obstacle. It seems like the small legs make the local path planner not work properly and follow a path in between the legs through the chair.